Bruno Adriano

OpenEarthMap: A Benchmark Dataset for Global High-Resolution Land Cover Mapping

Oct 19, 2022

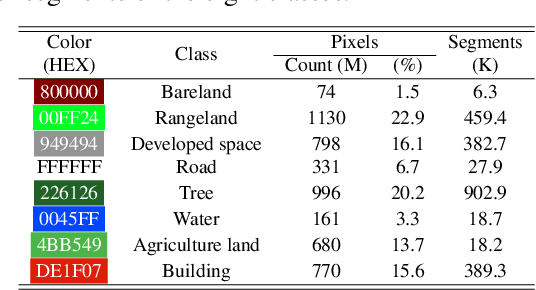

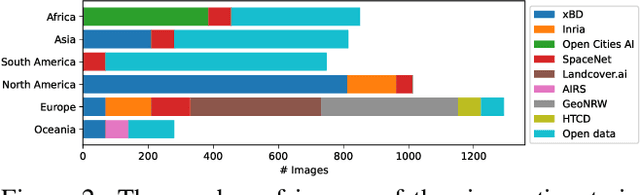

Abstract: We introduce OpenEarthMap, a benchmark dataset for global high-resolution land cover mapping. OpenEarthMap consists of 2.2 million segments of 5000 aerial and satellite images covering 97 regions from 44 countries across 6 continents, with manually annotated 8-class land cover labels at a 0.25-0.5 m ground sampling distance. Semantic segmentation models trained on OpenEarthMap generalize worldwide and can be used as off-the-shelf models in a variety of applications. We evaluate the performance of state-of-the-art methods for unsupervised domain adaptation and present challenging problem settings suitable for further technical development. We also investigate lightweight models using automated neural architecture search for limited computational resources and fast mapping. The dataset is available at https://open-earth-map.org.

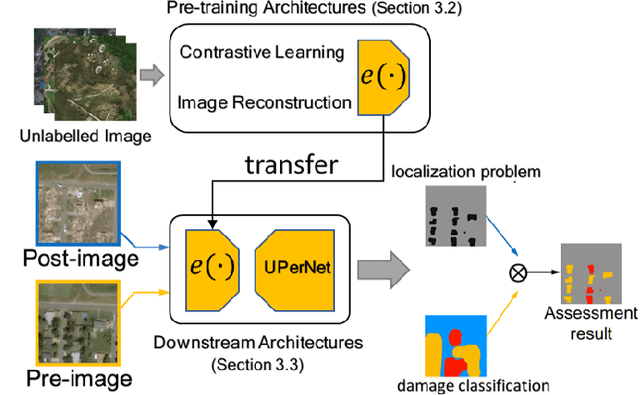

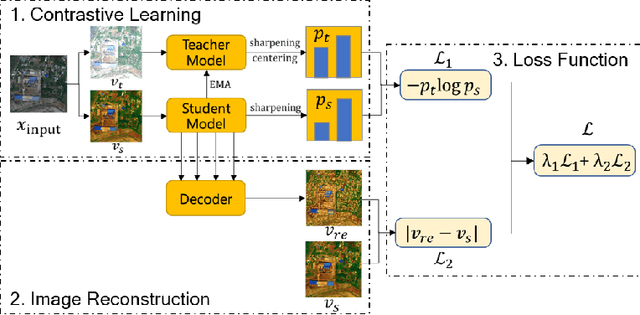

Self-Supervised Learning for Building Damage Assessment from Large-scale xBD Satellite Imagery Benchmark Datasets

Jun 01, 2022

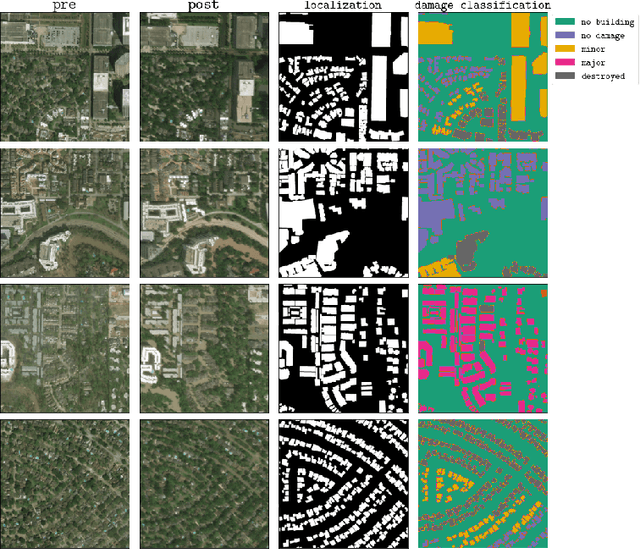

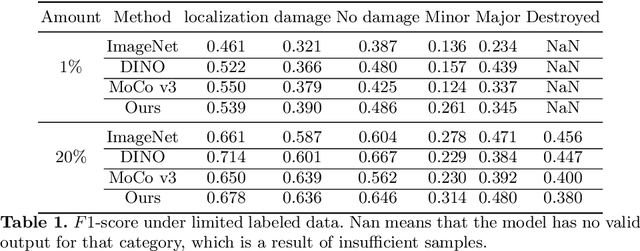

Abstract: In post-disaster assessment, timely and accurate rescue and localization depend on knowing the location of damaged buildings. In deep learning, some scholars have proposed methods for automatic and highly accurate building damage assessment from remote sensing images, which have proved to be more efficient than assessment by domain experts. However, because large amounts of labeled data are lacking, these methods can struggle to produce accurate assessments, as the effectiveness of deep learning models relies heavily on labeled data. Although existing semi-supervised and unsupervised studies have made breakthroughs in this area, none of them has completely solved this problem. Therefore, we propose adopting a self-supervised comparative learning approach to address the task without requiring labeled data. We constructed a novel asymmetric twin network architecture and tested its performance on the xBD dataset. Experimental results show that our model improves on the baseline and commonly used methods. We also demonstrate the potential of self-supervised methods for building damage recognition.

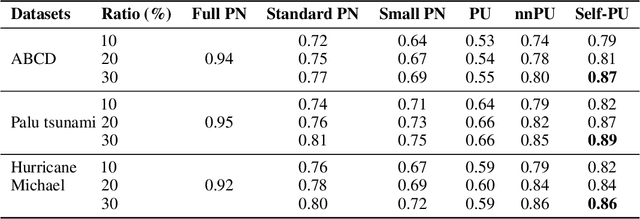

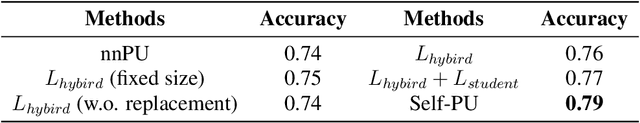

Building Damage Mapping with Self-PositiveUnlabeled Learning

Nov 04, 2021

Abstract: Humanitarian organizations must have fast and reliable data to respond to disasters. Deep learning approaches are difficult to implement in real-world disasters because it can be challenging to collect ground truth data of the damage situation (training data) soon after the event. This work demonstrates recent self-paced positive-unlabeled (PU) learning by successfully applying it to building damage assessment with very limited labeled data and a large amount of unlabeled data. Self-PU learning is compared with supervised baselines and traditional PU learning using different datasets collected from the 2011 Tohoku earthquake, the 2018 Palu tsunami, and the 2018 Hurricane Michael. By utilizing only a portion of the labeled damaged samples, we show how models trained with self-PU techniques may achieve performance comparable to supervised learning.
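As a rough illustration of what learning from positive and unlabeled samples involves, the sketch below computes the widely used non-negative PU risk estimator with a sigmoid surrogate loss. It is not the paper's implementation: the abstract does not state which loss or estimator is used, the function names `sigmoid_loss` and `nn_pu_risk` are hypothetical, and the class prior `prior` (the assumed fraction of damaged buildings among unlabeled samples) is taken as known.

```python
import math


def sigmoid_loss(score, label):
    """Sigmoid surrogate loss; small when sign(score) agrees with label (+1 or -1)."""
    return 1.0 / (1.0 + math.exp(label * score))


def mean(values):
    return sum(values) / len(values)


def nn_pu_risk(pos_scores, unl_scores, prior):
    """Non-negative PU risk from classifier scores.

    pos_scores: scores for labeled positive (damaged) samples.
    unl_scores: scores for unlabeled samples (damaged and undamaged mixed).
    prior:      assumed fraction of positives in the unlabeled set.
    """
    # Risk of positives being classified as positive.
    pos_as_pos = mean([sigmoid_loss(s, +1) for s in pos_scores])
    # Risk of positives being classified as negative (used as a correction term).
    pos_as_neg = mean([sigmoid_loss(s, -1) for s in pos_scores])
    # Risk of unlabeled samples being treated as negative.
    unl_as_neg = mean([sigmoid_loss(s, -1) for s in unl_scores])
    # Estimated negative-class risk; clamped at zero so it cannot go negative.
    neg_risk = unl_as_neg - prior * pos_as_neg
    return prior * pos_as_pos + max(0.0, neg_risk)
```

Calling `nn_pu_risk([2.1, 0.7], [-1.3, 0.2, -0.5], prior=0.3)` returns a single scalar that a training loop would minimize; the clamp keeps the estimate from rewarding a model that overfits the few labeled positives.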

Learning from Multimodal and Multitemporal Earth Observation Data for Building Damage Mapping

Sep 14, 2020

Abstract: Earth observation technologies, such as optical imaging and synthetic aperture radar (SAR), provide excellent means to monitor ever-growing urban environments continuously. Notably, in the case of large-scale disasters (e.g., tsunamis and earthquakes), in which a response is highly time-critical, images from both data modalities can complement each other to accurately convey the full damage condition in the disaster's aftermath. However, due to several factors, such as weather and satellite coverage, it is often uncertain which data modality will be the first available for rapid disaster response efforts. Hence, novel methodologies that can utilize all accessible EO datasets are essential for disaster management. In this study, we have developed a global multisensor and multitemporal dataset for building damage mapping. We included building damage characteristics from three disaster types, namely, earthquakes, tsunamis, and typhoons, and considered three building damage categories. The global dataset contains high-resolution optical imagery and high-to-moderate-resolution multiband SAR data acquired before and after each disaster. Using this comprehensive dataset, we analyzed five data modality scenarios for damage mapping: single-mode (optical and SAR datasets), cross-modal (pre-disaster optical and post-disaster SAR datasets), and mode fusion scenarios. We defined a damage mapping framework for the semantic segmentation of damaged buildings based on a deep convolutional neural network algorithm. We compare our approach to another state-of-the-art baseline model for damage mapping. The results indicated that our dataset, together with a deep learning network, enabled acceptable predictions for all the data modality scenarios.

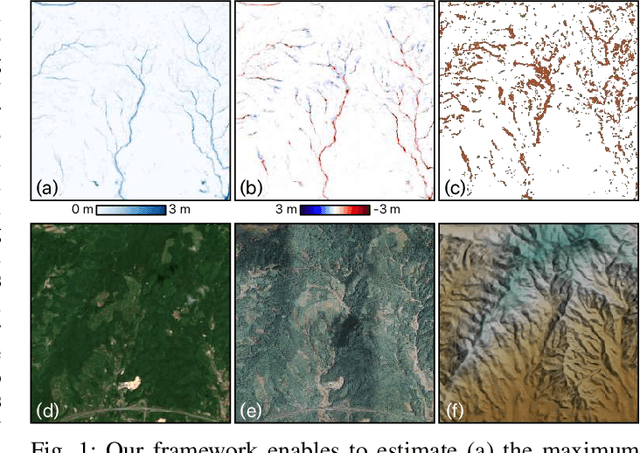

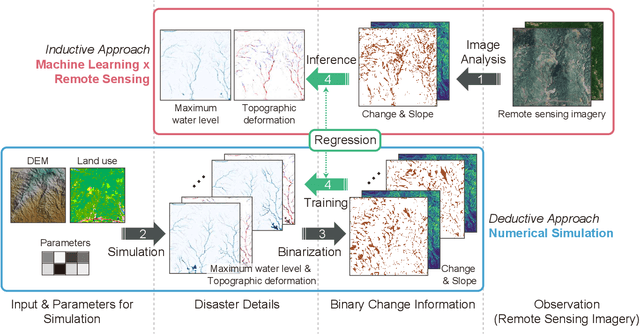

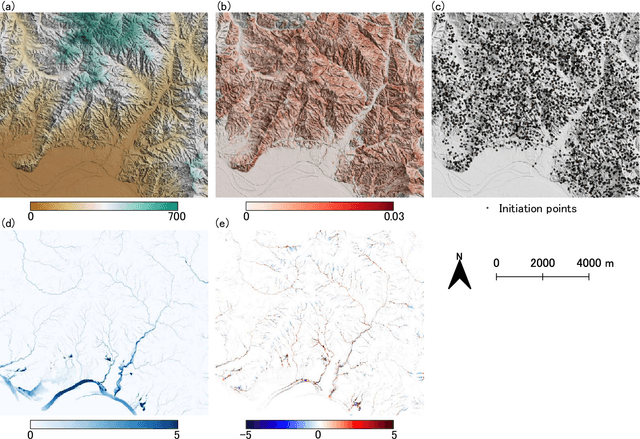

Breaking the Limits of Remote Sensing by Simulation and Deep Learning for Flood and Debris Flow Mapping

Jun 09, 2020

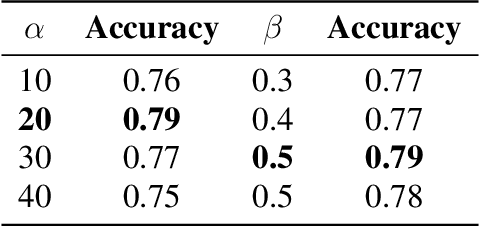

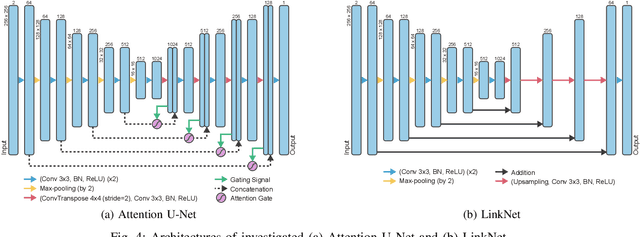

Abstract: We propose a framework that estimates inundation depth (maximum water level) and debris-flow-induced topographic deformation from remote sensing imagery by integrating deep learning and numerical simulation. A water and debris flow simulator generates training data for various artificial disaster scenarios. We show that regression models based on Attention U-Net and LinkNet architectures trained on such synthetic data can predict the maximum water level and topographic deformation from a remote sensing-derived change detection map and a digital elevation model. The proposed framework has an inpainting capability, thus mitigating the false negatives that are inevitable in remote sensing image analysis. Our framework breaks the limits of remote sensing and enables rapid estimation of inundation depth and topographic deformation, essential information for emergency response, including rescue and relief activities. We conduct experiments with both synthetic and real data for two disaster events that caused simultaneous flooding and debris flows and demonstrate the effectiveness of our approach quantitatively and qualitatively.
