Gerald Baier

Building a Parallel Universe: Image Synthesis from Land Cover Maps and Auxiliary Raster Data

Nov 23, 2020

Abstract: We synthesize both optical RGB and SAR remote sensing images from land cover maps and auxiliary raster data using GANs. In remote sensing, many types of data, such as digital elevation models or precipitation maps, are often not reflected in land cover maps but still influence image content or structure. Including such data in the synthesis process increases the quality of the generated images and provides more control over their characteristics. Our method fuses both inputs by spatially adaptive normalization layers, previously published as SPADE for semantic image synthesis. In contrast to SPADE, these normalization layers are applied to a full-blown generator architecture consisting of encoder and decoder, to take full advantage of the information content in the auxiliary raster data. Our method successfully synthesizes medium (10 m) and high (1 m) resolution images when trained with the corresponding dataset. We show the advantage of fusing land cover maps and auxiliary information in terms of mean intersection over union, pixel accuracy, and FID, computed with pre-trained U-Net segmentation models. Handpicked images exemplify how fusing information avoids ambiguities in the synthesized images. By slightly editing the input, our method can be used to synthesize realistic changes, e.g., raising the water levels. The source code is available at https://github.com/gbaier/rs_img_synth and we published the newly created high-resolution dataset at https://ieee-dataport.org/open-access/geonrw.
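To make the two segmentation metrics named in the abstract concrete, here is a minimal sketch of pixel accuracy and mean intersection over union for a pair of label maps. It is not taken from the linked repository; the function name `segmentation_scores` and the toy 2x2 maps are illustrative assumptions, and the FID metric is not covered.

```python
from collections import Counter


def segmentation_scores(pred, target, num_classes):
    """Return (pixel_accuracy, mean_iou) for two equally sized 2-D label maps.

    Hypothetical helper for illustration; classes absent from both maps
    are skipped when averaging the IoU.
    """
    flat_pred = [p for row in pred for p in row]
    flat_true = [t for row in target for t in row]
    if not flat_true or len(flat_pred) != len(flat_true):
        raise ValueError("label maps must be non-empty and of equal size")

    # Confusion counts keyed by (true class, predicted class).
    confusion = Counter(zip(flat_true, flat_pred))

    correct = sum(confusion[(c, c)] for c in range(num_classes))
    pixel_accuracy = correct / len(flat_true)

    ious = []
    for c in range(num_classes):
        tp = confusion[(c, c)]
        fp = sum(confusion[(t, c)] for t in range(num_classes) if t != c)
        fn = sum(confusion[(c, p)] for p in range(num_classes) if p != c)
        union = tp + fp + fn
        if union > 0:
            ious.append(tp / union)
    mean_iou = sum(ious) / len(ious) if ious else 0.0
    return pixel_accuracy, mean_iou


# Toy example: one pixel of class 0 is mislabeled as class 1.
target = [[0, 0], [1, 1]]
pred = [[0, 1], [1, 1]]
accuracy, miou = segmentation_scores(pred, target, num_classes=2)
```

In this toy case three of four pixels match, and the per-class IoUs are 1/2 and 2/3, so the mean IoU is their average.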

Breaking the Limits of Remote Sensing by Simulation and Deep Learning for Flood and Debris Flow Mapping

Jun 09, 2020

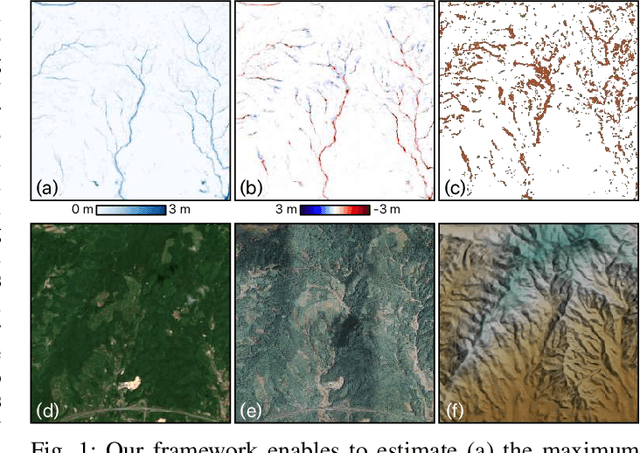

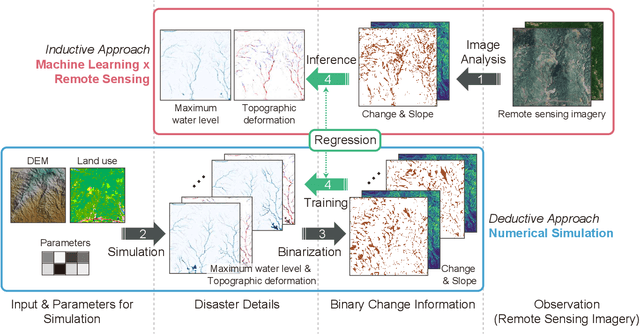

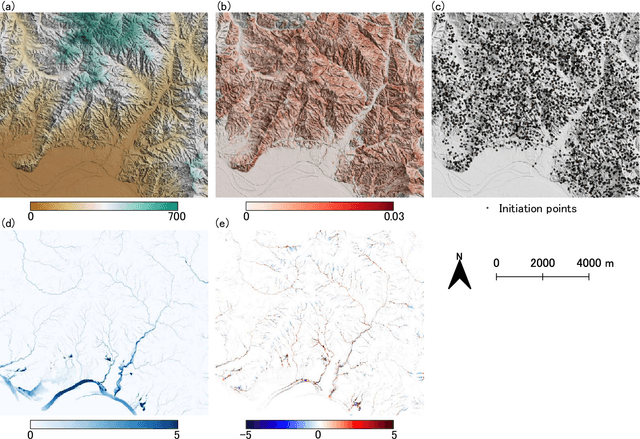

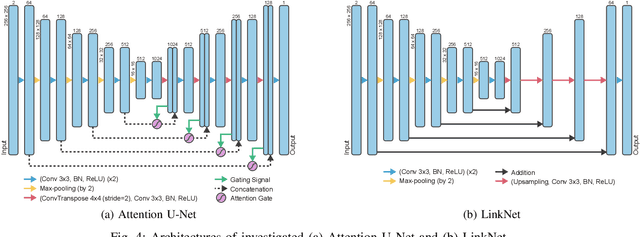

Abstract: We propose a framework that estimates inundation depth (maximum water level) and debris-flow-induced topographic deformation from remote sensing imagery by integrating deep learning and numerical simulation. A water and debris flow simulator generates training data for various artificial disaster scenarios. We show that regression models based on Attention U-Net and LinkNet architectures trained on such synthetic data can predict the maximum water level and topographic deformation from a remote sensing-derived change detection map and a digital elevation model. The proposed framework has an inpainting capability, thus mitigating the false negatives that are inevitable in remote sensing image analysis. Our framework breaks the limits of remote sensing and enables rapid estimation of inundation depth and topographic deformation, essential information for emergency response, including rescue and relief activities. We conduct experiments with both synthetic and real data for two disaster events that caused simultaneous flooding and debris flows and demonstrate the effectiveness of our approach quantitatively and qualitatively.

Learning Convolutional Sparse Coding on Complex Domain for Interferometric Phase Restoration

Mar 06, 2020

Abstract: Interferometric phase restoration has been investigated for decades, and most state-of-the-art methods have achieved promising performance for InSAR phase restoration. These methods generally follow the nonlocal filtering processing chain, aiming at circumventing the staircase effect and preserving the details of phase variations. In this paper, we propose an alternative approach for InSAR phase restoration, i.e., Complex Convolutional Sparse Coding (ComCSC) and its gradient regularized version. To the best of our knowledge, this is the first time that the InSAR phase restoration problem has been solved in a deconvolutional fashion. The proposed methods can not only suppress interferometric phase noise, but also avoid the staircase effect and preserve the details. Furthermore, they provide insight into the elementary phase components of the interferometric phases. The experimental results on synthetic and realistic high- and medium-resolution datasets from TerraSAR-X StripMap and Sentinel-1 interferometric wide swath mode, respectively, show that our method outperforms previous state-of-the-art methods based on nonlocal InSAR filters, particularly InSAR-BM3D. The source code of this paper will be made publicly available for reproducible research inside the community.
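As background on why InSAR phase restoration is usually carried out in the complex domain, the following sketch contrasts averaging wrapped phase values directly with averaging their unit phasors. It uses a plain boxcar average, which is not ComCSC or any method from the paper; the function name `boxcar_phase` and the sample values are illustrative assumptions.

```python
import cmath
import math


def boxcar_phase(phases):
    """Estimate a phase by averaging unit phasors exp(j*phi) and taking the angle.

    Hypothetical helper: a plain boxcar average, used only to illustrate
    complex-domain processing of wrapped phase values.
    """
    mean_phasor = sum(cmath.exp(1j * p) for p in phases) / len(phases)
    return cmath.phase(mean_phasor)


# Two noisy samples of a true phase near the wrap-around point at +/- pi.
noisy = [math.pi - 0.1, -math.pi + 0.1]

# Averaging the wrapped values directly lands near 0, far from the truth.
naive_estimate = sum(noisy) / len(noisy)

# Averaging in the complex domain stays at the wrap-around point.
complex_estimate = boxcar_phase(noisy)
```

The sign of `complex_estimate` may come out as either +pi or -pi, since both denote the same wrapped phase.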
