Brian Y. Cho

Modeling Kinematic Uncertainty of Tendon-Driven Continuum Robots via Mixture Density Networks

Apr 05, 2024

Abstract:Tendon-driven continuum robot kinematic models are frequently computationally expensive, inaccurate due to unmodeled effects, or both. In particular, unmodeled effects produce uncertainties that arise during the robot's operation that lead to variability in the resulting geometry. We propose a novel solution to these issues through the development of a Gaussian mixture kinematic model. We train a mixture density network to output a Gaussian mixture model representation of the robot geometry given the current tendon displacements. This model computes a probability distribution that is more representative of the true distribution of geometries at a given configuration than a model that outputs a single geometry, while also reducing the computation time. We demonstrate one use of this model through a trajectory optimization method that explicitly reasons about the workspace uncertainty to minimize the probability of collision.
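As a rough illustration of what a Gaussian mixture output represents, the sketch below evaluates the log-density of one observed point under a mixture whose parameters a mixture density network might emit. The function name, the diagonal covariance, and the parameter shapes are assumptions made for this example; the abstract does not specify the paper's parameterization.

```python
import numpy as np

def gmm_log_likelihood(logits, means, log_sigmas, x):
    """Log-density of x under a diagonal-covariance Gaussian mixture.

    Hypothetical parameterization (not taken from the paper):
    logits:     (K,)   unnormalized mixture weights
    means:      (K, D) component means
    log_sigmas: (K, D) log standard deviations per dimension
    x:          (D,)   observed point
    """
    # Log-softmax turns the raw logits into log mixture weights.
    log_weights = logits - np.logaddexp.reduce(logits)
    # Standardized residual of x under each component.
    z = (x - means) / np.exp(log_sigmas)
    # Per-component Gaussian log-density, summed over dimensions.
    log_comp = -0.5 * np.sum(z**2 + 2.0 * log_sigmas + np.log(2.0 * np.pi), axis=1)
    # Log-sum-exp over components gives the mixture log-density.
    return np.logaddexp.reduce(log_weights + log_comp)
```

The negative of this quantity, averaged over training samples, is the usual training loss for a mixture density network; whether the paper uses exactly this loss is not stated in the abstract.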

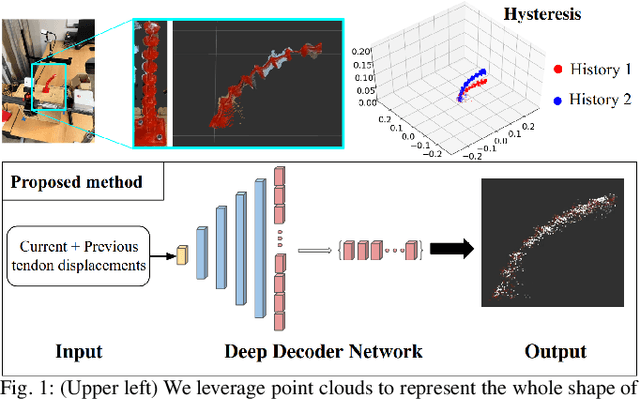

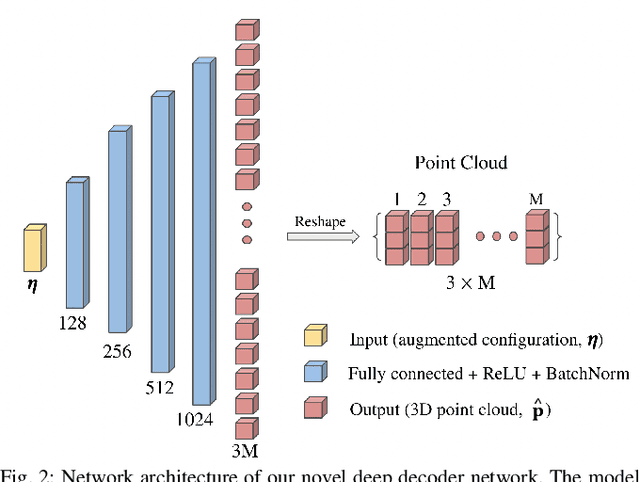

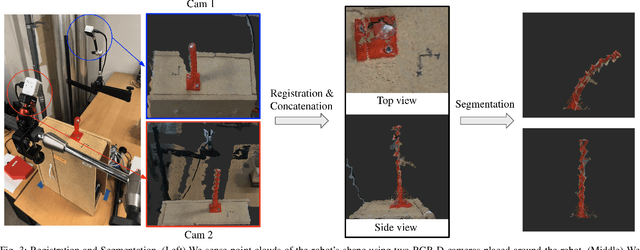

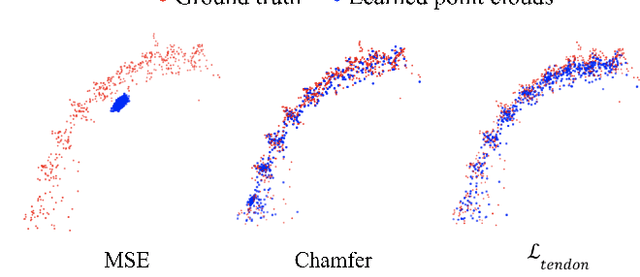

Accounting for Hysteresis in the Forward Kinematics of Nonlinearly-Routed Tendon-Driven Continuum Robots via a Learned Deep Decoder Network

Apr 04, 2024

Abstract:Tendon-driven continuum robots have been gaining popularity in medical applications due to their ability to curve around complex anatomical structures, potentially reducing the invasiveness of surgery. However, accurate modeling is required to plan and control the movements of these flexible robots. Physics-based models have limitations due to unmodeled effects, leading to mismatches between model prediction and actual robot shape. Recently proposed learning-based methods have been shown to overcome some of these limitations but do not account for hysteresis, a significant source of error for these robots. To overcome these challenges, we propose a novel deep decoder neural network that predicts the complete shape of tendon-driven robots using point clouds as the shape representation, conditioned on prior configurations to account for hysteresis. We evaluate our method on a physical tendon-driven robot and show that our network model accurately predicts the robot's shape, significantly outperforming a state-of-the-art physics-based model and a learning-based model that does not account for hysteresis.

Efficient and Accurate Mapping of Subsurface Anatomy via Online Trajectory Optimization for Robot Assisted Surgery

Sep 18, 2023

Abstract:Robotic surgical subtask automation has the potential to reduce the per-patient workload of human surgeons. There are a variety of surgical subtasks that require geometric information of subsurface anatomy, such as the location of tumors, which necessitates accurate and efficient surgical sensing. In this work, we propose an automated sensing method that maps 3D subsurface anatomy to provide such geometric knowledge. We model the anatomy via a Bayesian Hilbert map-based probabilistic 3D occupancy map. Using the 3D occupancy map, we plan sensing paths on the surface of the anatomy via a graph search algorithm, $A^*$ search, with a cost function that enables the trajectories generated to balance between exploration of unsensed regions and refining the existing probabilistic understanding. We demonstrate the performance of our proposed method by comparing it against 3 different methods in several anatomical environments including a real-life CT scan dataset. The experimental results show that our method efficiently detects relevant subsurface anatomy with shorter trajectories than the comparison methods, and the resulting occupancy map achieves high accuracy.
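To make the idea of an uncertainty-aware $A^*$ cost concrete, here is a minimal sketch on a 2D grid of occupancy probabilities. The cost function, the `weight` parameter, the grid representation, and all function names are assumptions for illustration only; the abstract does not give the paper's actual cost function, and the paper plans on the anatomy surface rather than on a grid.

```python
import heapq
import math

def binary_entropy(p):
    """Entropy (bits) of an occupancy probability; 0 when certain, 1 at p = 0.5."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def a_star(occupancy, start, goal, weight=1.0):
    """A* over a 4-connected grid of occupancy probabilities.

    Assumed step cost: 1 + weight * (1 - entropy of the entered cell), so
    uncertain cells are cheaper to visit. Every step costs at least 1, which
    keeps the Manhattan-distance heuristic admissible.
    """
    rows, cols = len(occupancy), len(occupancy[0])
    heuristic = lambda c: abs(c[0] - goal[0]) + abs(c[1] - goal[1])
    frontier = [(heuristic(start), 0.0, start)]
    best_cost = {start: 0.0}
    parent = {start: None}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            # Walk parent links back to the start to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1], cost
        if cost > best_cost[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            step = 1.0 + weight * (1.0 - binary_entropy(occupancy[nxt[0]][nxt[1]]))
            new_cost = cost + step
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None, math.inf
```

With a larger `weight`, the planner accepts longer detours through poorly sensed cells; with `weight=0` it reduces to a shortest-path search.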

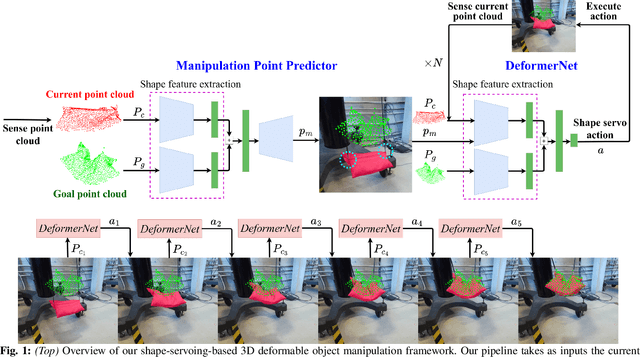

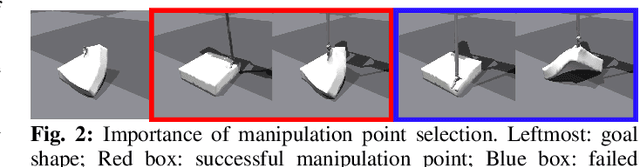

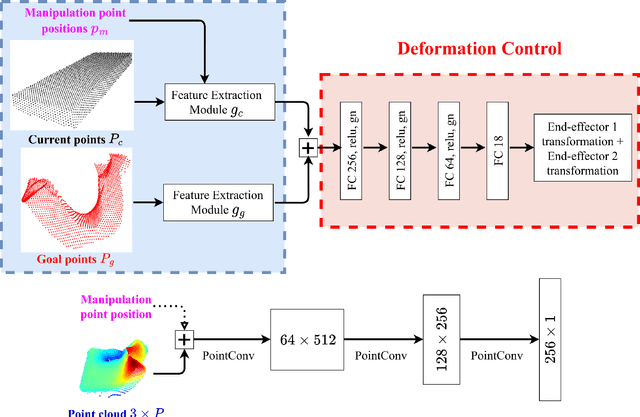

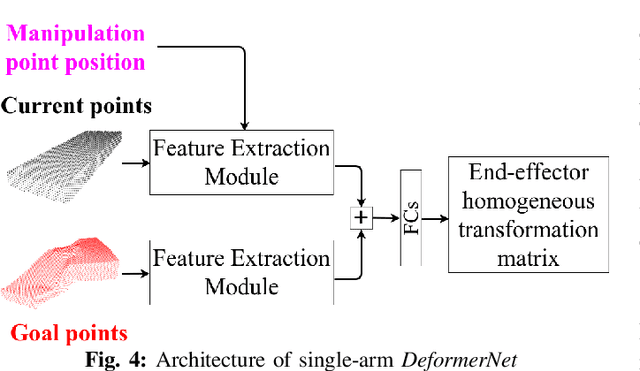

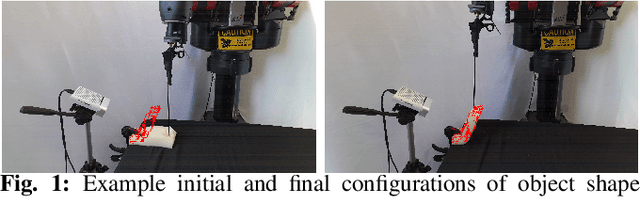

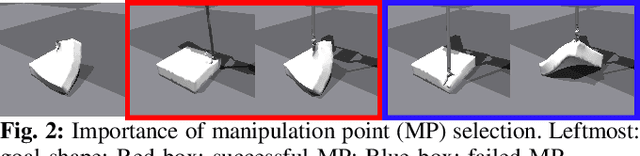

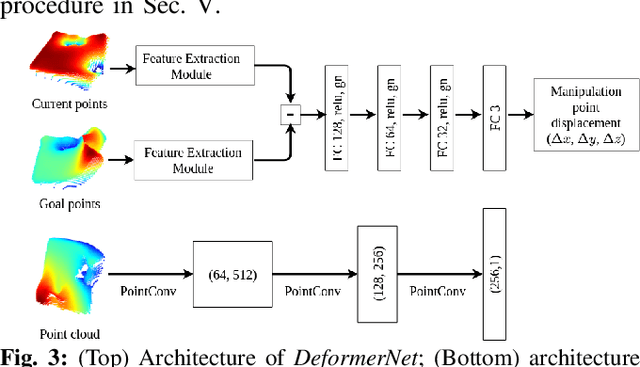

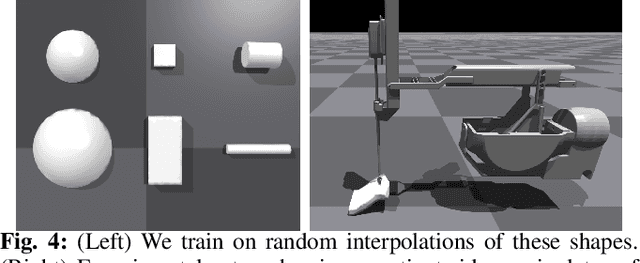

DeformerNet: Learning Bimanual Manipulation of 3D Deformable Objects

May 08, 2023

Abstract:Applications in fields ranging from home care to warehouse fulfillment to surgical assistance require robots to reliably manipulate the shape of 3D deformable objects. Analytic models of elastic, 3D deformable objects require numerous parameters to describe the potentially infinite degrees of freedom present in determining the object's shape. Previous attempts at performing 3D shape control rely on hand-crafted features to represent the object shape and require training of object-specific control models. We overcome these issues through the use of our novel DeformerNet neural network architecture, which operates on a partial-view point cloud of the manipulated object and a point cloud of the goal shape to learn a low-dimensional representation of the object shape. This shape embedding enables the robot to learn a visual servo controller that computes the desired robot end-effector action to iteratively deform the object toward the target shape. We demonstrate both in simulation and on a physical robot that DeformerNet reliably generalizes to object shapes and material stiffness not seen during training. Crucially, using DeformerNet, the robot successfully accomplishes three surgical sub-tasks: retraction (moving tissue aside to access a site underneath it), tissue wrapping (a sub-task in procedures like aortic stent placements), and connecting two tubular pieces of tissue (a sub-task in anastomosis).

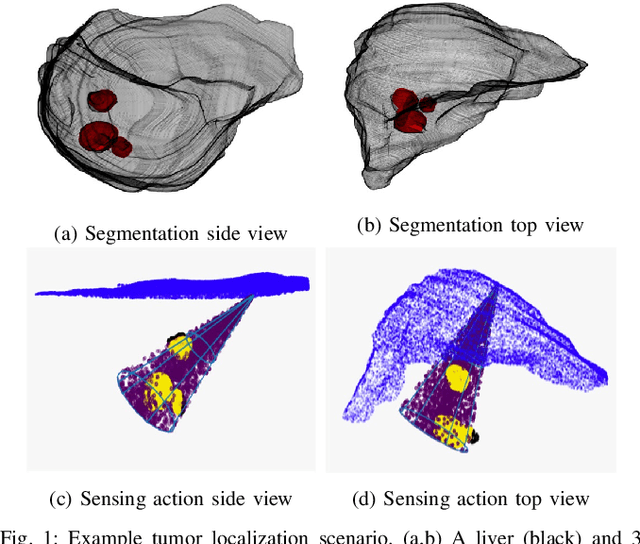

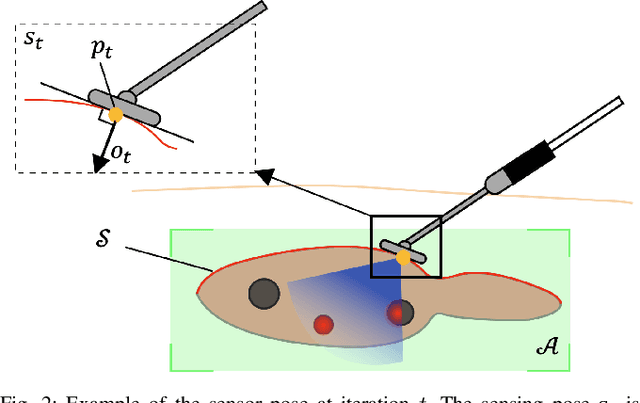

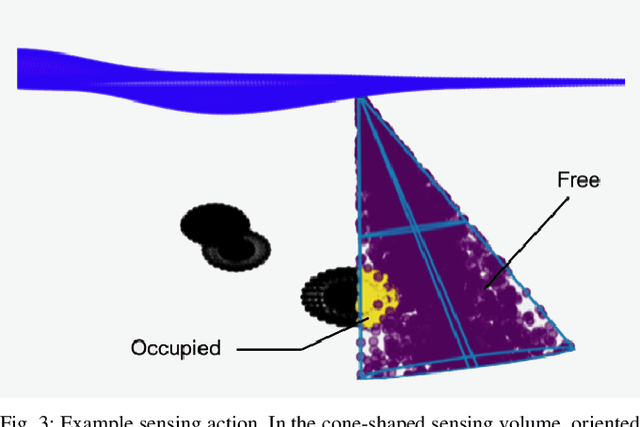

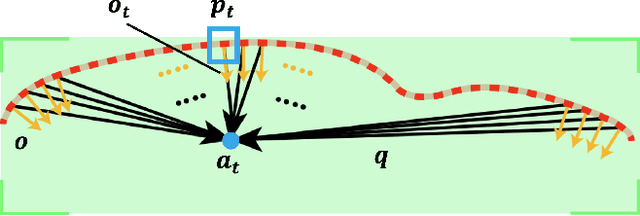

Planning Sensing Sequences for Subsurface 3D Tumor Mapping

Oct 12, 2021

Abstract:Surgical automation has the potential to enable increased precision and reduce the per-patient workload of overburdened human surgeons. An effective automation system must be able to sense and map subsurface anatomy, such as tumors, efficiently and accurately. In this work, we present a method that plans a sequence of sensing actions to map the 3D geometry of subsurface tumors. We leverage a sequential Bayesian Hilbert map to create a 3D probabilistic occupancy model that represents the likelihood that any given point in the anatomy is occupied by a tumor, conditioned on sensor readings. We iteratively update the map, utilizing Bayesian optimization to determine sensing poses that explore unsensed regions of anatomy and exploit the knowledge gained by previous sensing actions. We demonstrate our method's efficiency and accuracy in three anatomical scenarios including a liver tumor scenario generated from a real patient's CT scan. The results show that our proposed method significantly outperforms comparison methods in terms of efficiency while detecting subsurface tumors with high accuracy.

Learning Visual Shape Control of Novel 3D Deformable Objects from Partial-View Point Clouds

Oct 10, 2021

Abstract:If robots could reliably manipulate the shape of 3D deformable objects, they could find applications in fields ranging from home care to warehouse fulfillment to surgical assistance. Analytic models of elastic, 3D deformable objects require numerous parameters to describe the potentially infinite degrees of freedom present in determining the object's shape. Previous attempts at performing 3D shape control rely on hand-crafted features to represent the object shape and require training of object-specific control models. We overcome these issues through the use of our novel DeformerNet neural network architecture, which operates on a partial-view point cloud of the object being manipulated and a point cloud of the goal shape to learn a low-dimensional representation of the object shape. This shape embedding enables the robot to learn to define a visual servo controller that provides Cartesian pose changes to the robot end-effector causing the object to deform towards its target shape. Crucially, we demonstrate both in simulation and on a physical robot that DeformerNet reliably generalizes to object shapes and material stiffness not seen during training and outperforms comparison methods for both the generic shape control and the surgical task of retraction.

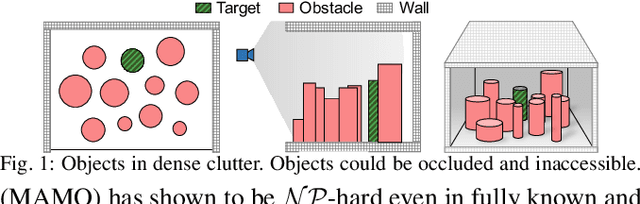

Where to relocate?: Object rearrangement inside cluttered and confined environments for robotic manipulation

Mar 24, 2020

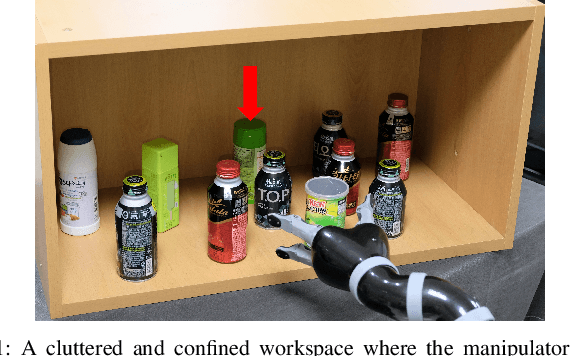

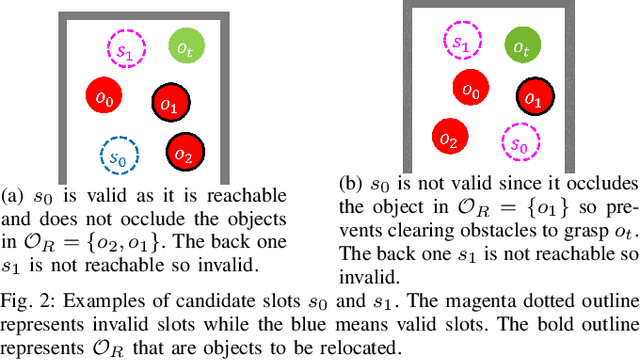

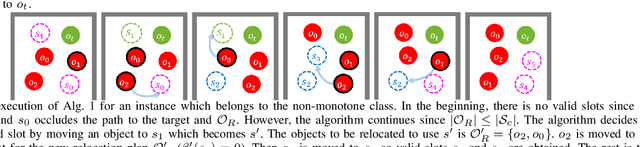

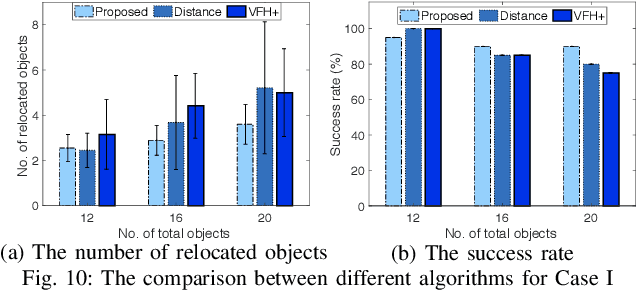

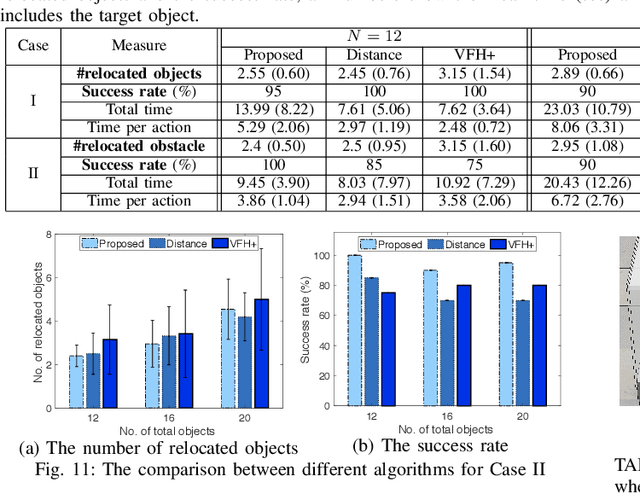

Abstract:We present an algorithm that determines where to relocate objects inside a cluttered and confined space while rearranging objects to retrieve a target object. Although methods that decide what to remove have been proposed, planning for the placement of removed objects inside a workspace has not received much attention. Rather, removed objects are often placed outside the workspace, which incurs additional laborious work (e.g., motion planning and execution of the manipulator and the mobile base, perception of other areas). Some other methods manipulate objects only inside the workspace but without a guiding principle, so the rearrangement becomes inefficient. In this work, we consider both monotone (each object is moved only once) and non-monotone arrangement problems, which have been shown to be NP-hard. Once the sequence of objects to be relocated is given by any existing algorithm, our method aims to minimize the number of pick-and-place actions needed to place the objects until the target becomes accessible. From extensive experiments, we show that our method reduces the number of pick-and-place actions and the total execution time (by up to 23.1% and 28.1%, respectively) compared to baseline methods while achieving higher success rates.

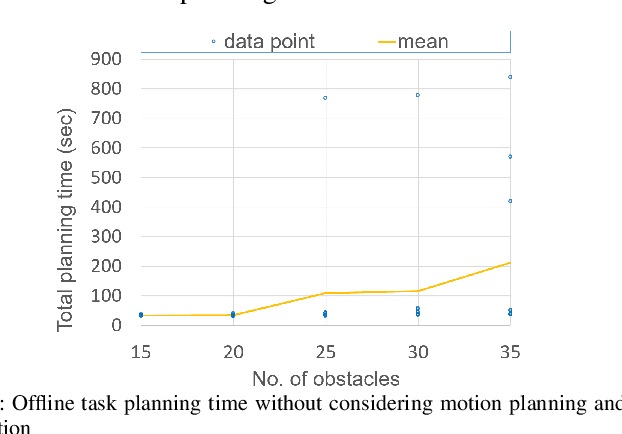

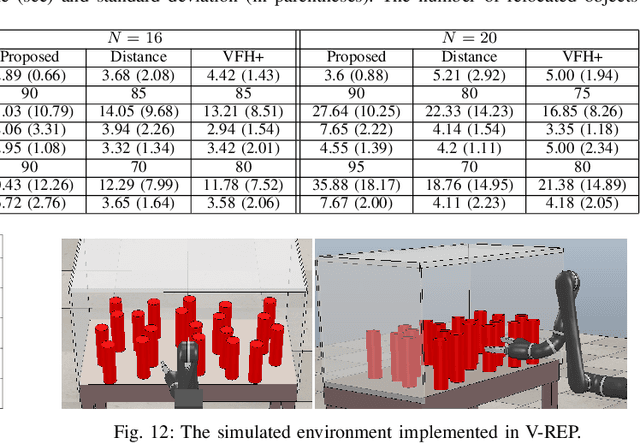

Fast and resilient manipulation planning for target retrieval in clutter

Mar 24, 2020

Abstract:This paper presents a task and motion planning (TAMP) framework for a robotic manipulator to retrieve a target object from clutter. We consider a configuration of objects in a confined space at a density high enough that no collision-free path to the target exists. The robot must relocate some objects to retrieve the target without collisions. For fast completion of object rearrangement, the robot aims to minimize the number of pick-and-place actions, which often determines the efficiency of a TAMP framework. We propose a task planner incorporating motion planning to generate executable plans that aim to minimize the number of pick-and-place actions. In addition to fully known and static environments, our method can deal with uncertain and dynamic situations incurred by occluded views. Our method is shown to reduce the number of pick-and-place actions compared to baseline methods (e.g., a reduction of at least 28.0% in a known static environment with 20 objects).
