Boris Bellalta

Evaluating Wi-Fi Performance for VR Streaming: A Study on Realistic HEVC Video Traffic

Jan 23, 2026

Abstract:Cloud-based Virtual Reality (VR) streaming presents significant challenges for 802.11 networks due to its high throughput and low latency requirements. When multiple VR users share a Wi-Fi network, the resulting uplink and downlink traffic can quickly saturate the channel. This paper investigates the capacity of 802.11 networks for supporting realistic VR streaming workloads across varying frame rates, bitrates, codec settings, and numbers of users. We develop an emulation framework that reproduces Air Light VR (ALVR) operation, where real HEVC video traffic is fed into an 802.11 simulation model. Our findings explore Wi-Fi's performance anomaly and demonstrate that Intra-refresh (IR) coding effectively reduces latency variability and improves QoS, supporting up to 4 concurrent VR users with Constant Bitrate (CBR) 100 Mbps before the channel is saturated.

Performance Analysis of IEEE 802.11bn Non-Primary Channel Access

Apr 22, 2025

Abstract:This paper presents a performance analysis of the Non-Primary Channel Access (NPCA) mechanism, a new feature introduced in IEEE 802.11bn to enhance spectrum utilization in Wi-Fi networks. NPCA enables devices to contend for and transmit on the secondary channel when the primary channel is occupied by transmissions from an Overlapping Basic Service Set (OBSS). We develop a Continuous-Time Markov Chain (CTMC) model that captures the interactions among OBSSs in dense WLAN environments when NPCA is enabled, incorporating new NPCA-specific states and transitions. In addition to the analytical insights offered by the model, we conduct numerical evaluations and simulations to quantify NPCA's impact on throughput and channel access delay across various scenarios. Our results show that NPCA can significantly improve throughput and reduce access delays in favorable conditions for BSSs that support the mechanism. Moreover, NPCA helps mitigate the OBSS performance anomaly, where low-rate OBSS transmissions degrade network performance for all nearby devices. However, we also observe trade-offs: NPCA may increase contention on secondary channels, potentially reducing transmission opportunities for BSSs operating there.

Coordinated Multi-Armed Bandits for Improved Spatial Reuse in Wi-Fi

Dec 04, 2024

Abstract:Multi-Access Point Coordination (MAPC) and Artificial Intelligence and Machine Learning (AI/ML) are expected to be key features in future Wi-Fi, such as the forthcoming IEEE 802.11bn (Wi-Fi 8) and beyond. In this paper, we explore a coordinated solution based on online learning to drive the optimization of Spatial Reuse (SR), a method that allows multiple devices to perform simultaneous transmissions by controlling interference through Packet Detect (PD) adjustment and transmit power control. In particular, we focus on a Multi-Agent Multi-Armed Bandit (MA-MAB) setting, where multiple decision-making agents concurrently configure SR parameters from coexisting networks by leveraging the MAPC framework, and study various algorithms and reward-sharing mechanisms. We evaluate different MA-MAB implementations using Komondor, a well-adopted Wi-Fi simulator, and demonstrate that AI-native SR enabled by coordinated MABs can improve the network performance over current Wi-Fi operation: mean throughput increases by 15%, fairness is improved by increasing the minimum throughput across the network by 210%, while the maximum access delay is kept below 3 ms.

Machine Learning & Wi-Fi: Unveiling the Path Towards AI/ML-Native IEEE 802.11 Networks

May 19, 2024

Abstract:Artificial intelligence (AI) and machine learning (ML) are nowadays mature technologies considered essential for driving the evolution of future communications systems. Simultaneously, Wi-Fi technology has constantly evolved over the past three decades and incorporated new features generation after generation, thus gaining in complexity. As such, researchers have observed that AI/ML functionalities may be required to address the upcoming Wi-Fi challenges that will be otherwise difficult to solve with traditional approaches. This paper discusses the role of AI/ML in current and future Wi-Fi networks and depicts the ways forward. A roadmap towards AI/ML-native Wi-Fi, key challenges, standardization efforts, and major enablers are also discussed. An exemplary use case is provided to showcase the potential of AI/ML in Wi-Fi at different adoption stages.

Throughput Analysis of IEEE 802.11bn Coordinated Spatial Reuse

Sep 17, 2023

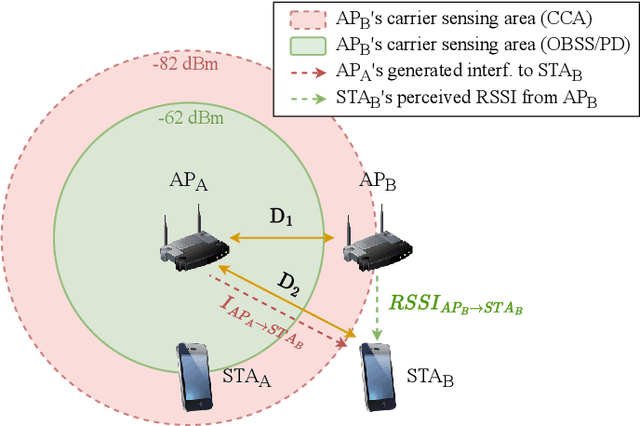

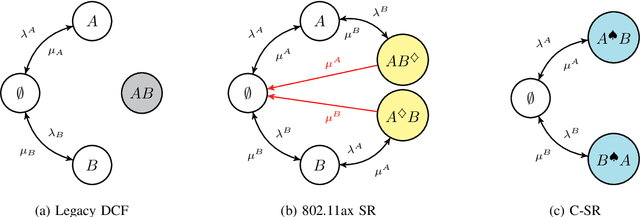

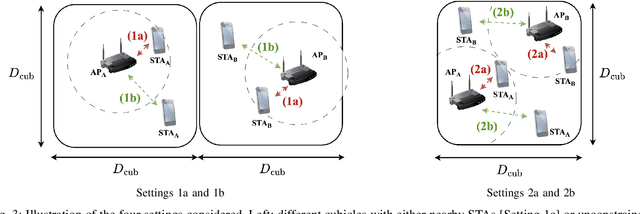

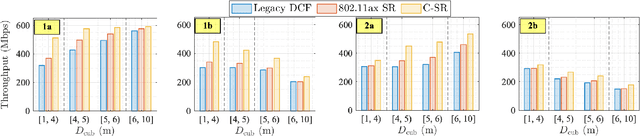

Abstract:Multi-Access Point Coordination (MAPC) is becoming the cornerstone of the IEEE 802.11bn amendment, alias Wi-Fi 8. Among the MAPC features, Coordinated Spatial Reuse (C-SR) stands as one of the most appealing due to its capability to orchestrate simultaneous access point transmissions at a low implementation complexity. In this paper, we contribute to the understanding of C-SR by introducing an analytical model based on Continuous Time Markov Chains (CTMCs) to characterize its throughput and spatial efficiency. Applying the proposed model to several network topologies, we show that C-SR opportunistically enables parallel high-quality transmissions and yields an average throughput gain of up to 59% in comparison to the legacy 802.11 Distributed Coordination Function (DCF) and up to 42% when compared to the 802.11ax Overlapping Basic Service Set Packet Detect (OBSS/PD) mechanism.

A Federated Reinforcement Learning Framework for Link Activation in Multi-link Wi-Fi Networks

Apr 28, 2023

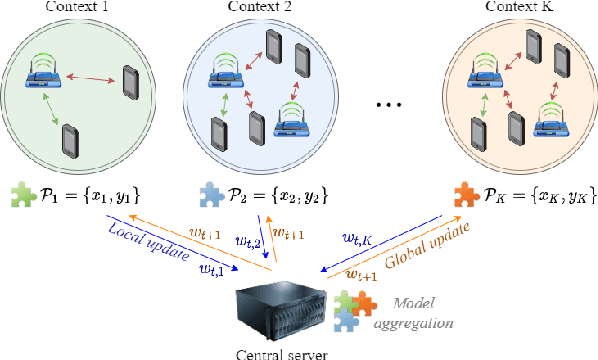

Abstract:Next-generation Wi-Fi networks are looking forward to introducing new features like multi-link operation (MLO) to achieve both higher throughput and lower latency. However, given the limited number of available channels, the use of multiple links by a group of contending Basic Service Sets (BSSs) can result in higher interference and channel contention, thus potentially leading to lower performance and reliability. In such a situation, it could be better for all contending BSSs to use fewer links if that helps reduce channel access contention. Recently, reinforcement learning (RL) has proven its potential for optimizing resource allocation in wireless networks. However, the independent operation of each wireless network makes it difficult -- if not almost impossible -- for each individual network to learn a good configuration. To solve this issue, in this paper, we propose the use of a Federated Reinforcement Learning (FRL) framework, i.e., a collaborative machine learning approach to train models across multiple distributed agents without exchanging data, so that a group of neighboring BSSs can collaboratively learn the best MLO-Link Allocation (LA) strategy. The simulation results show that the FRL-based decentralized MLO-LA strategy achieves better throughput fairness, and thus higher reliability -- because it allows the different BSSs to find a link allocation strategy which maximizes the minimum achieved data rate -- compared to fixed, random and RL-based MLO-LA schemes.

What Will Wi-Fi 8 Be? A Primer on IEEE 802.11bn Ultra High Reliability

Mar 18, 2023

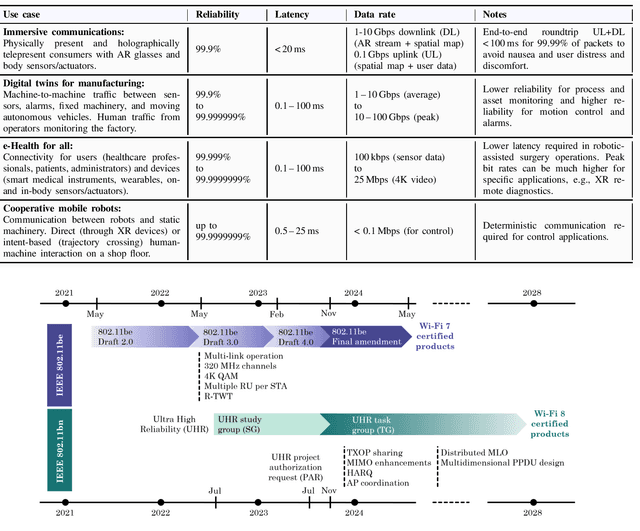

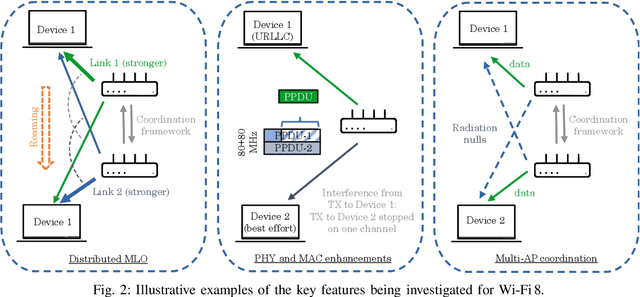

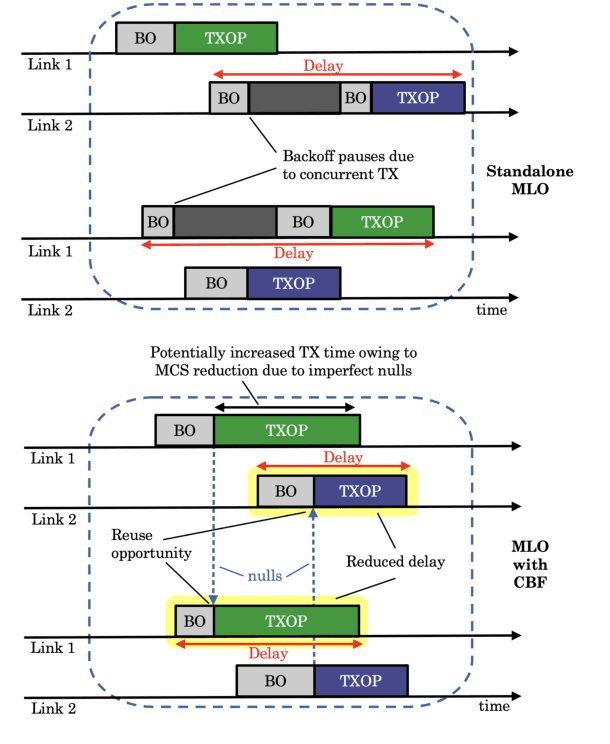

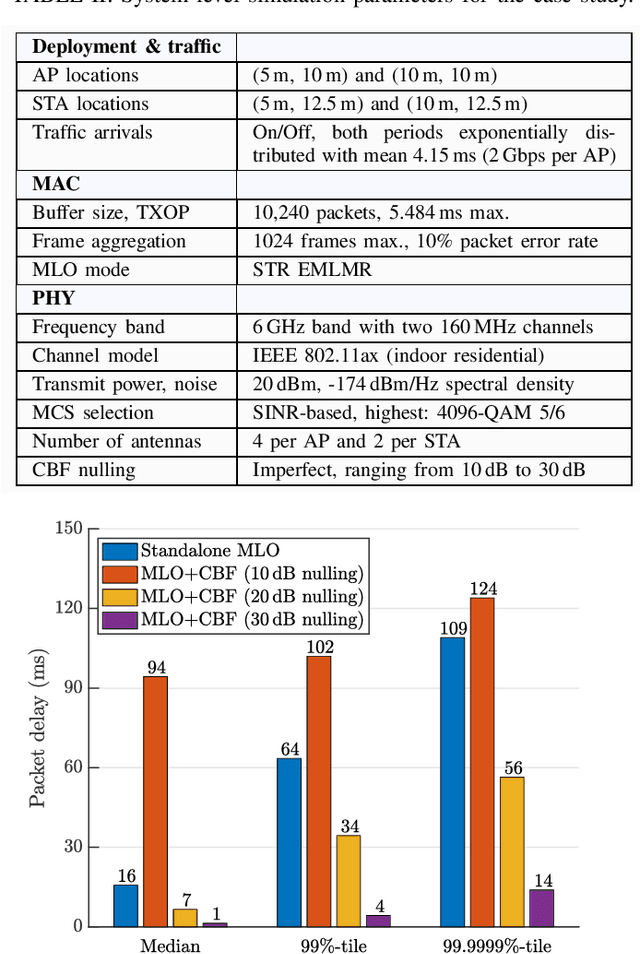

Abstract:What will Wi-Fi 8 be? Driven by the strict requirements of emerging applications, next-generation Wi-Fi is set to prioritize Ultra High Reliability (UHR) above all. In this paper, we explore the journey towards IEEE 802.11bn UHR, the amendment that will form the basis of Wi-Fi 8. After providing an overview of the nearly completed Wi-Fi 7 standard, we present new use cases calling for further Wi-Fi evolution. We also outline current standardization, certification, and spectrum allocation activities, sharing updates from the newly formed UHR Study Group. We then introduce the disruptive new features envisioned for Wi-Fi 8 and discuss the associated research challenges. Among those, we focus on access point coordination and demonstrate that it could build upon 802.11be multi-link operation to make Ultra High Reliability a reality in Wi-Fi 8.

Federated Spatial Reuse Optimization in Next-Generation Decentralized IEEE 802.11 WLANs

Mar 20, 2022

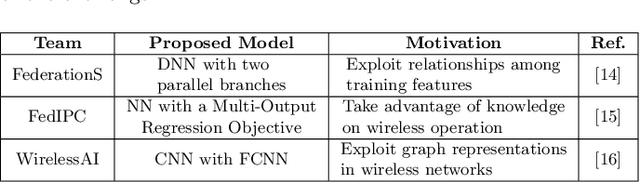

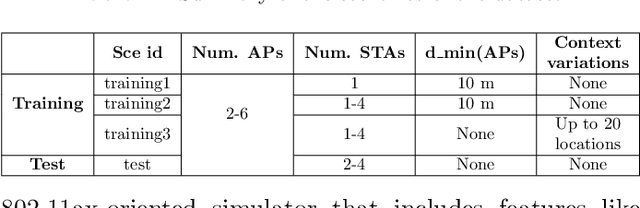

Abstract:As wireless standards evolve, more complex functionalities are introduced to address the increasing requirements in terms of throughput, latency, security, and efficiency. To unleash the potential of such new features, artificial intelligence (AI) and machine learning (ML) are currently being exploited for deriving models and protocols from data, rather than by hand-programming. In this paper, we explore the feasibility of applying ML in next-generation wireless local area networks (WLANs). More specifically, we focus on the IEEE 802.11ax spatial reuse (SR) problem and predict its performance through federated learning (FL) models. The set of FL solutions overviewed in this work is part of the 2021 International Telecommunication Union (ITU) AI for 5G Challenge.

Intelligent Reflecting Surface-Aided Wideband THz Communications: Modeling and Analysis

Oct 29, 2021

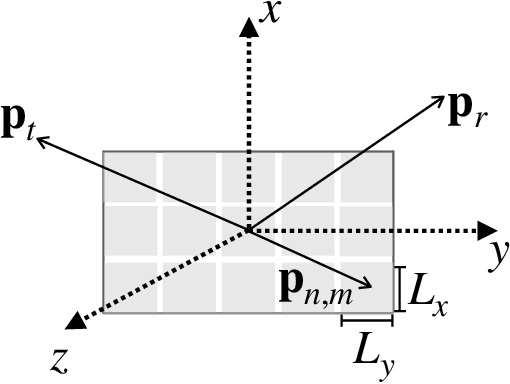

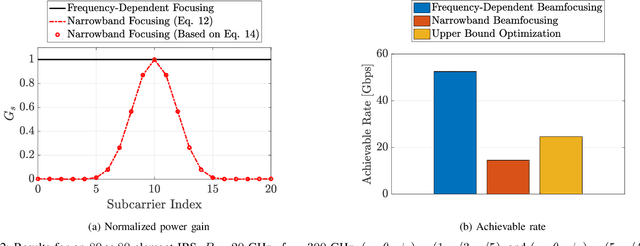

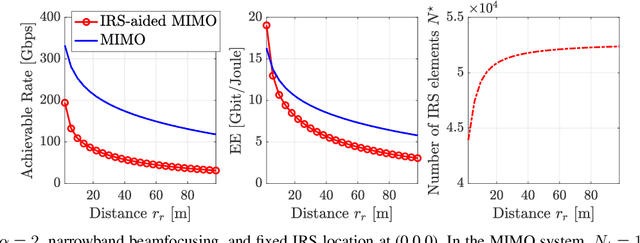

Abstract:In this paper, we study the performance of wideband terahertz (THz) communications assisted by an intelligent reflecting surface (IRS). Specifically, we first introduce a generalized channel model that is suitable for electrically large THz IRSs operating in the near-field. Unlike prior works, our channel model takes into account the spherical wavefront of the emitted electromagnetic waves and the spatial-wideband effect. We next show that conventional frequency-flat beamfocusing significantly reduces the power gain due to beam squint, and hence is highly suboptimal. More importantly, we analytically characterize this reduction when the spacing between adjacent reflecting elements is negligible, i.e., holographic reflecting surfaces. Numerical results corroborate our analysis and provide important insights into the design of future IRS-aided THz systems.

Electromagnetic Modeling of Holographic Intelligent Reflecting Surfaces at Terahertz Bands

Aug 18, 2021

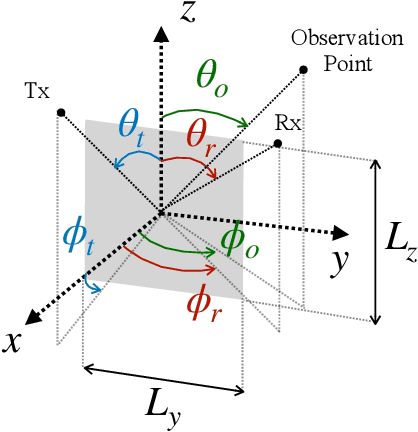

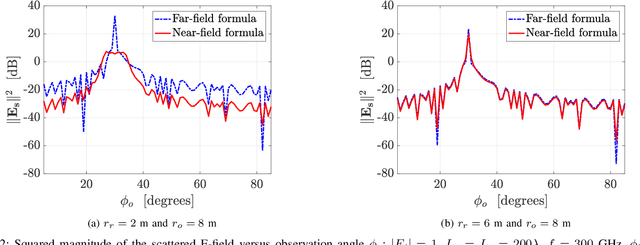

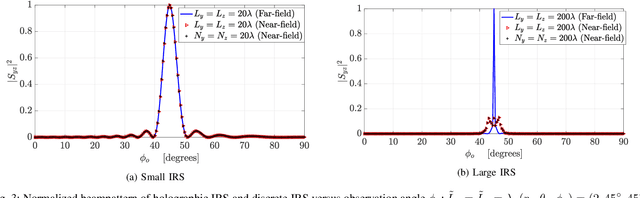

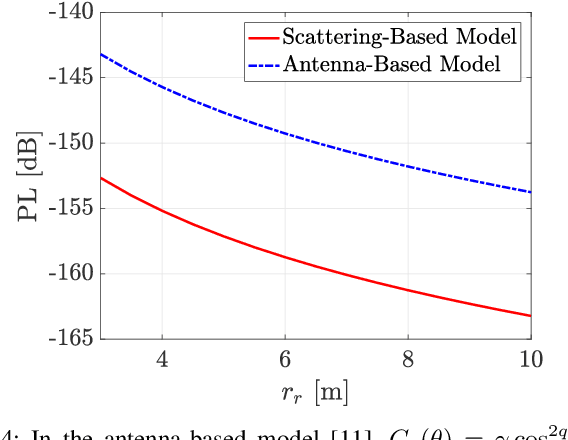

Abstract:Intelligent reflecting surface (IRS)-assisted wireless communication is widely deemed a key technology for 6G systems. The main challenge in deploying an IRS-aided terahertz (THz) link, though, is the severe propagation losses at high frequency bands. Hence, a THz IRS is expected to consist of a massive number of reflecting elements to compensate for those losses. However, as the IRS size grows, the conventional far-field assumption starts becoming invalid and the spherical wavefront of the radiated waves must be taken into account. In this work, we focus on the near-field and analytically determine the IRS response in the Fresnel zone by leveraging electromagnetic theory. Specifically, we derive a novel expression for the path loss and beampattern of a holographic IRS, which is then used to model its discrete counterpart. Our analysis sheds light on the modeling aspects and beamfocusing capabilities of THz IRSs.
