Bojun Xiong

TexGaussian: Generating High-quality PBR Material via Octree-based 3D Gaussian Splatting

Nov 29, 2024

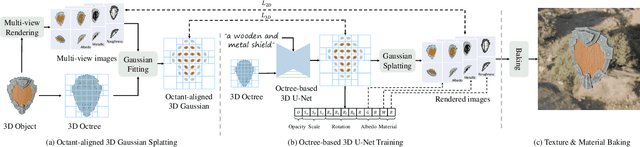

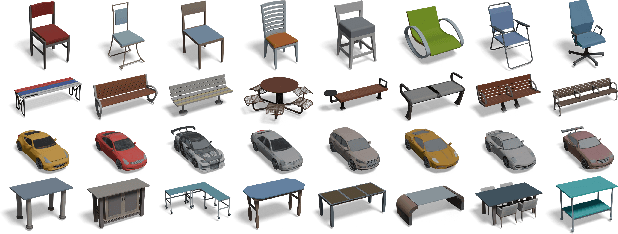

Abstract:Physically Based Rendering (PBR) materials play a crucial role in modern graphics, enabling photorealistic rendering across diverse environment maps. Developing an effective and efficient algorithm that is capable of automatically generating high-quality PBR materials rather than RGB texture for 3D meshes can significantly streamline the 3D content creation. Most existing methods leverage pre-trained 2D diffusion models for multi-view image synthesis, which often leads to severe inconsistency between the generated textures and input 3D meshes. This paper presents TexGaussian, a novel method that uses octant-aligned 3D Gaussian Splatting for rapid PBR material generation. Specifically, we place each 3D Gaussian on the finest leaf node of the octree built from the input 3D mesh to render the multiview images not only for the albedo map but also for roughness and metallic. Moreover, our model is trained in a regression manner instead of diffusion denoising, capable of generating the PBR material for a 3D mesh in a single feed-forward process. Extensive experiments on publicly available benchmarks demonstrate that our method synthesizes more visually pleasing PBR materials and runs faster than previous methods in both unconditional and text-conditional scenarios, which exhibit better consistency with the given geometry. Our code and trained models are available at https://3d-aigc.github.io/TexGaussian.

OctFusion: Octree-based Diffusion Models for 3D Shape Generation

Aug 27, 2024

Abstract:Diffusion models have emerged as a popular method for 3D generation. However, it is still challenging for diffusion models to efficiently generate diverse and high-quality 3D shapes. In this paper, we introduce OctFusion, which can generate 3D shapes with arbitrary resolutions in 2.5 seconds on a single Nvidia 4090 GPU, and the extracted meshes are guaranteed to be continuous and manifold. The key components of OctFusion are the octree-based latent representation and the accompanying diffusion models. The representation combines the benefits of both implicit neural representations and explicit spatial octrees and is learned with an octree-based variational autoencoder. The proposed diffusion model is a unified multi-scale U-Net that enables weights and computation sharing across different octree levels and avoids the complexity of widely used cascaded diffusion schemes. We verify the effectiveness of OctFusion on the ShapeNet and Objaverse datasets and achieve state-of-the-art performances on shape generation tasks. We demonstrate that OctFusion is extendable and flexible by generating high-quality color fields for textured mesh generation and high-quality 3D shapes conditioned on text prompts, sketches, or category labels. Our code and pre-trained models are available at \url{https://github.com/octree-nn/octfusion}.

VecFontSDF: Learning to Reconstruct and Synthesize High-quality Vector Fonts via Signed Distance Functions

Mar 22, 2023Abstract:Font design is of vital importance in the digital content design and modern printing industry. Developing algorithms capable of automatically synthesizing vector fonts can significantly facilitate the font design process. However, existing methods mainly concentrate on raster image generation, and only a few approaches can directly synthesize vector fonts. This paper proposes an end-to-end trainable method, VecFontSDF, to reconstruct and synthesize high-quality vector fonts using signed distance functions (SDFs). Specifically, based on the proposed SDF-based implicit shape representation, VecFontSDF learns to model each glyph as shape primitives enclosed by several parabolic curves, which can be precisely converted to quadratic B\'ezier curves that are widely used in vector font products. In this manner, most image generation methods can be easily extended to synthesize vector fonts. Qualitative and quantitative experiments conducted on a publicly-available dataset demonstrate that our method obtains high-quality results on several tasks, including vector font reconstruction, interpolation, and few-shot vector font synthesis, markedly outperforming the state of the art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge