Bjarne Grimstad

Solution Seeker AS, Department of Engineering Cybernetics, Norwegian University of Science and Technology

A deep latent variable model for semi-supervised multi-unit soft sensing in industrial processes

Jul 18, 2024

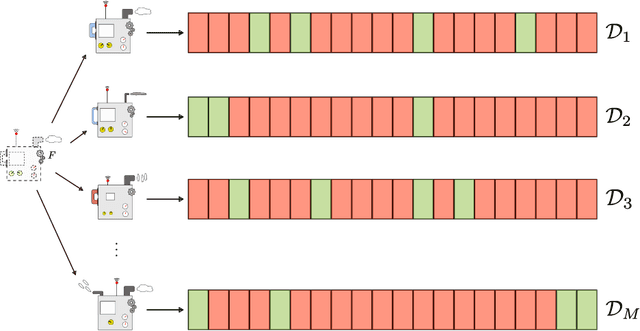

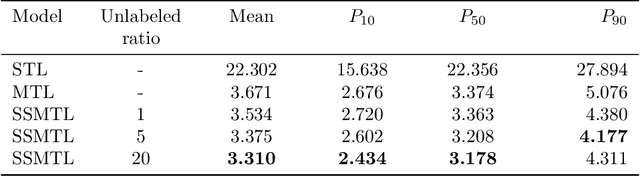

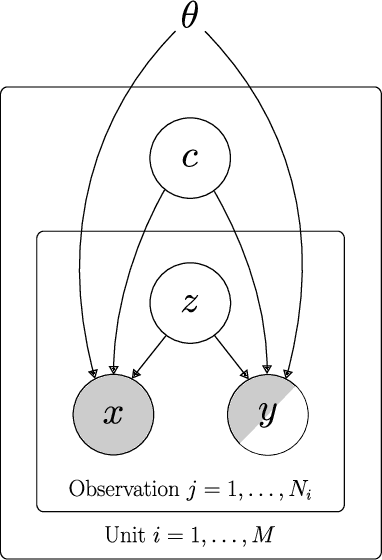

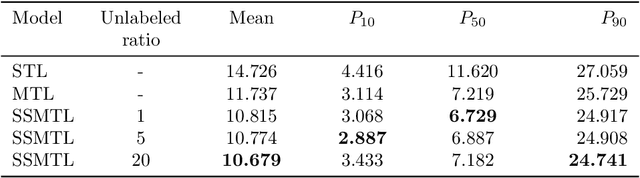

Abstract: In many industrial processes, an apparent lack of data limits the development of data-driven soft sensors. There are, however, often opportunities to learn stronger models by being more data-efficient. To achieve this, one can leverage knowledge about the data from which the soft sensor is learned. Taking advantage of properties frequently possessed by industrial data, we introduce a deep latent variable model for semi-supervised multi-unit soft sensing. This hierarchical, generative model can jointly model different units and learn from both labeled and unlabeled data. An empirical study of multi-unit soft sensing is conducted using two datasets: a synthetic dataset of single-phase fluid flow, and a large, real dataset of multi-phase flow in oil and gas wells. We show that by combining semi-supervised and multi-task learning, the proposed model achieves superior results, outperforming current leading methods for this soft sensing problem. We also show that when a model has been trained on a multi-unit dataset, it may be finetuned to previously unseen units using only a handful of data points. In this finetuning procedure, unlabeled data improve soft sensor performance; remarkably, this is true even when no labeled data are available.

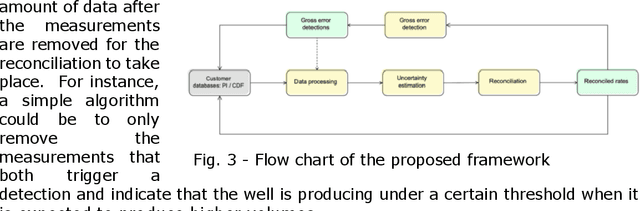

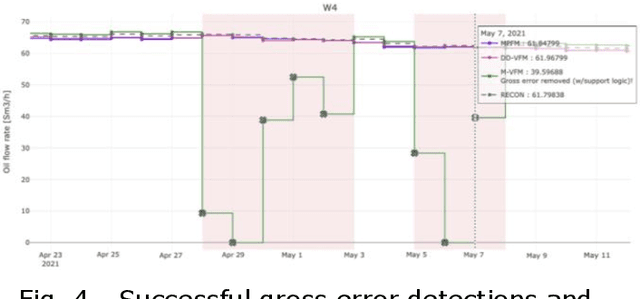

Flow Fusion, Exploiting Measurement Redundancy for Smarter Allocation

Apr 09, 2024

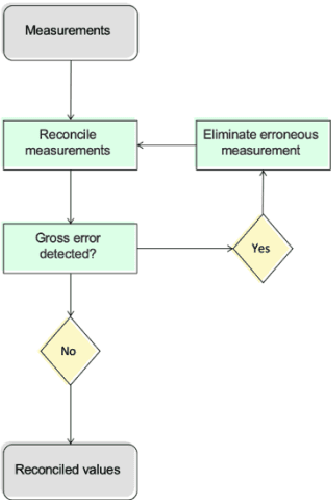

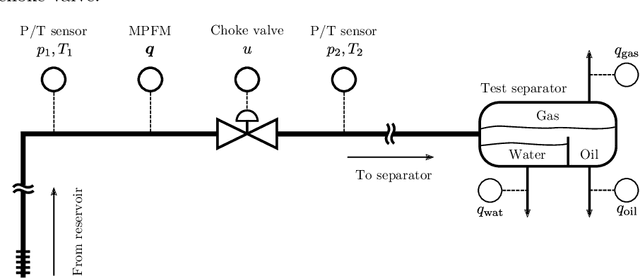

Abstract: In petroleum production systems, continuous multiphase flow rates are essential for efficient operation. They provide situational awareness, enable production optimization, improve reservoir management and planning, and form the basis for allocation. Furthermore, they can be crucial to ensure a fair revenue split between stakeholders for complex production systems where operators share the facilities. Yet, due to complex multiphase flow dynamics and uncertain subsurface fluid properties, the flow rates are challenging to obtain with high accuracy. Consequently, flow rate measurement and estimation solutions, such as multiphase flow meters and virtual flow meters, have different degrees of accuracy and suitability, and impact production decisions and production allocation accordingly. We propose a field-proven, data-driven framework for reconciliation and allocation. With data validation and reconciliation as the theoretical backbone, the solution exploits measurement redundancy to fuse relevant flow rate information and infer the most likely flow rates in the production system based on quantifiable uncertainties. The framework consists of four modules: data processing, uncertainty estimation, reconciliation, and gross error detection. The latter, being the focus of this paper, is a means to identify and mitigate the effect of measurements subject to systematic error, which can invalidate the reconciliation. In this paper, we highlight that a combination of statistical tests and supporting logic for gross error detection and elimination can be beneficial in obtaining a more justifiable production allocation. When the maximum power measurement test is used, the module can be limited in its ability to pinpoint the erroneous measurement. Yet, it is demonstrated that the detections can be convenient indications of gross errors and where these might reside in the production system.
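As an illustration of the reconciliation idea, the following is a minimal sketch of textbook weighted least-squares data reconciliation for a single linear mass balance with independent Gaussian measurement errors. It is not the framework from the paper: the function name `reconcile`, the three-measurement example (two well rates and one total), and all numbers are hypothetical. The normalized balance residual it returns is a simple global indicator of a possible gross error, not the maximum power measurement test mentioned in the abstract.

```python
def reconcile(measured, variances, coeffs):
    """Adjust measurements so that sum(c_i * x_i) = 0 holds exactly.

    Assumes independent Gaussian errors with the given variances and a
    single linear balance constraint (an illustrative simplification).
    """
    # Balance residual of the raw measurements and its variance.
    residual = sum(c * y for c, y in zip(coeffs, measured))
    residual_var = sum(c * c * v for c, v in zip(coeffs, variances))
    # Closed-form weighted least-squares adjustment: uncertain
    # measurements (large variance) absorb more of the residual.
    adjusted = [y - v * c * residual / residual_var
                for y, v, c in zip(measured, variances, coeffs)]
    # Normalized residual: approximately standard normal when no gross
    # error is present, so a large |z| hints at a systematic error.
    z_score = residual / residual_var ** 0.5
    return adjusted, z_score


# Hypothetical example: two well rates should sum to the measured total.
measured = [100.0, 150.0, 260.0]   # well 1, well 2, total
variances = [25.0, 25.0, 4.0]      # the total meter is assumed most accurate
coeffs = [1.0, 1.0, -1.0]          # well 1 + well 2 - total = 0
reconciled, z_score = reconcile(measured, variances, coeffs)
```

In this example the two well rates are adjusted more than the total, because they are assumed to be less certain, and the reconciled values satisfy the balance exactly.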

Multi-unit soft sensing permits few-shot learning

Sep 27, 2023

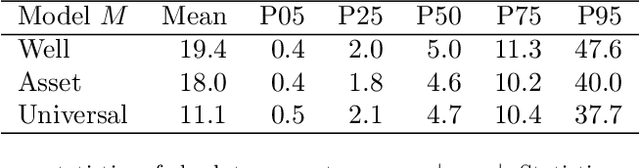

Abstract: Recent literature has explored various ways to improve soft sensors using learning algorithms with transferability. Broadly put, the performance of a soft sensor may be strengthened when it is learned by solving multiple tasks. The usefulness of transferability depends on how strongly related the devised learning tasks are. A particularly relevant case for transferability is when a soft sensor is to be developed for a process of which there are many realizations, e.g., a system or device with many implementations from which data is available. Then, each realization presents a soft sensor learning task, and it is reasonable to expect that the different tasks are strongly related. Applying transferability in this setting leads to what we call multi-unit soft sensing, where a soft sensor models a process by learning from data from all of its realizations. This paper explores the learning abilities of a multi-unit soft sensor, which is formulated as a hierarchical model and implemented using a deep neural network. In particular, we investigate how well the soft sensor generalizes as the number of units increases. Using a large industrial dataset, we demonstrate that, when the soft sensor is learned from a sufficient number of tasks, it permits few-shot learning on data from new units. Surprisingly, given the difficulty of the task, few-shot learning on 1-3 data points often leads to high performance on new units.

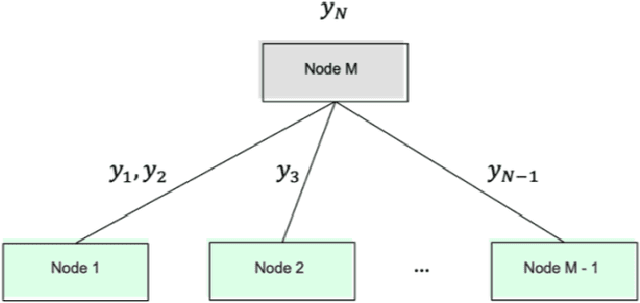

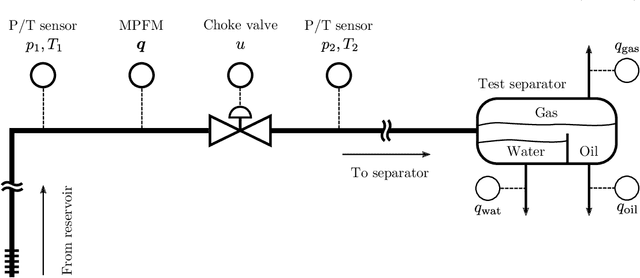

Sequential Monte Carlo applied to virtual flow meter calibration

Apr 13, 2023

Abstract: Soft-sensors are gaining popularity due to their ability to provide estimates of key process variables with little intervention required on the asset and at a low cost. In oil and gas production, virtual flow metering (VFM) is a popular soft-sensor that attempts to estimate multiphase flow rates in real time. VFMs are based on models, and these models require calibration. The calibration is highly dependent on the application, due to the great diversity both of the models and of the available measurements. The most accurate calibration is achieved by careful tuning of the VFM parameters to well tests, but this can be work intensive, and not all wells have frequent well test data available. This paper presents a calibration method based on the measurement provided by the production separator, and the assumption that the observed flow should be equal to the sum of flow rates from each individual well. This allows us to jointly calibrate the VFMs continuously. The method applies Sequential Monte Carlo (SMC) to infer a tuning factor and the flow composition for each well. The method is tested on a case with ten wells, using both synthetic and real data. The results are promising and the method is able to provide reasonable estimates of the parameters without relying on well tests. However, some challenges are identified and discussed, particularly related to the process noise and how to manage varying data quality.
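The joint calibration idea can be sketched with a basic bootstrap particle filter. This is a simplified illustration under stated assumptions, not the method from the paper: it infers only one multiplicative tuning factor per well (flow composition is left out), uses a random-walk process model and a Gaussian likelihood for the separator total, and the function name `smc_calibrate`, the two-well synthetic data, and all parameter values are hypothetical.

```python
import math
import random


def smc_calibrate(raw_rates, separator_totals, n_particles=2000,
                  noise_std=5.0, jitter_std=0.01, seed=0):
    """Bootstrap particle filter for per-well tuning factors (sketch).

    raw_rates[t][i] is the uncalibrated VFM rate of well i at step t;
    separator_totals[t] is the measured total flow at step t.
    """
    rng = random.Random(seed)
    n_wells = len(raw_rates[0])
    # Prior assumption: tuning factors scattered around 1.0.
    particles = [[rng.gauss(1.0, 0.15) for _ in range(n_wells)]
                 for _ in range(n_particles)]
    estimate = [1.0] * n_wells
    for rates, total in zip(raw_rates, separator_totals):
        # Propagate: random-walk process noise on each tuning factor.
        particles = [[f + rng.gauss(0.0, jitter_std) for f in p]
                     for p in particles]
        # Weight: Gaussian likelihood of the separator measurement given
        # that the total equals the sum of the tuned well rates.
        weights = []
        for p in particles:
            predicted = sum(f * q for f, q in zip(p, rates))
            weights.append(math.exp(-0.5 * ((total - predicted) / noise_std) ** 2))
        norm = sum(weights)
        weights = [w / norm for w in weights]
        # Posterior mean of the tuning factors at this step.
        estimate = [sum(w * p[i] for w, p in zip(weights, particles))
                    for i in range(n_wells)]
        # Resample to counteract weight degeneracy.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimate


# Hypothetical synthetic case: two wells whose raw VFM rates vary over time.
raw_rates = [[100.0 + 5 * t, 200.0 - 3 * t] for t in range(10)]
true_factors = [1.2, 1.1]
separator_totals = [sum(f * q for f, q in zip(true_factors, rates))
                    for rates in raw_rates]
estimate = smc_calibrate(raw_rates, separator_totals)
```

Because only the total is observed, individual factors are identifiable only to the extent that the well rates vary relative to each other over time; the sum of the tuned rates is constrained much more tightly than any single factor.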

Multi-task neural networks by learned contextual inputs

Mar 01, 2023

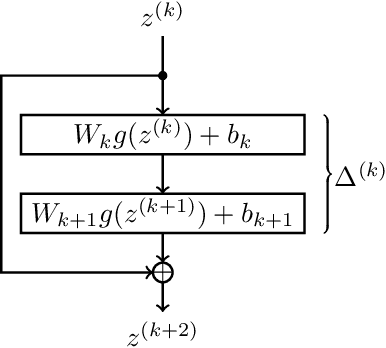

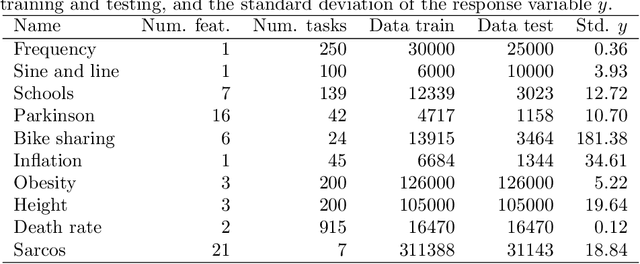

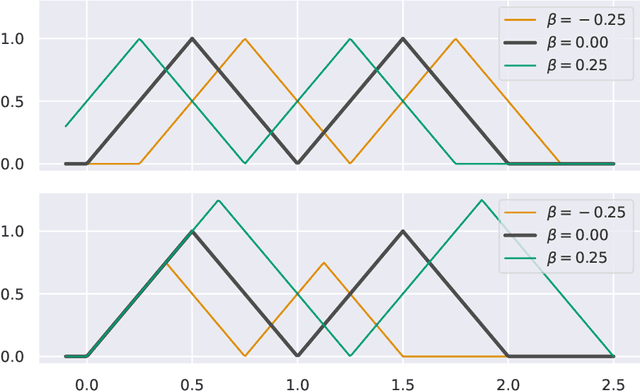

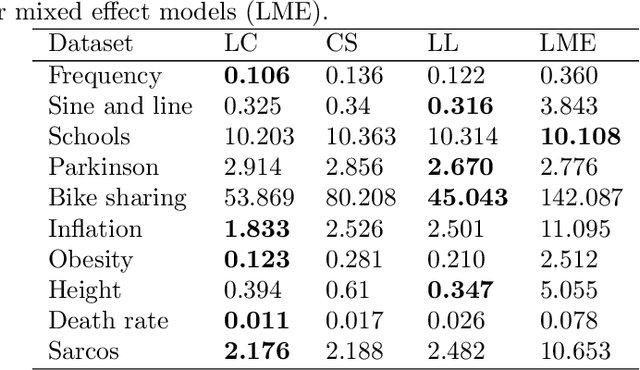

Abstract: This paper explores learned-context neural networks, a multi-task learning architecture based on a fully shared neural network and an augmented input vector containing trainable task parameters. The architecture is interesting due to its powerful task adaptation mechanism, which facilitates a low-dimensional task parameter space. Theoretically, we show that a scalar task parameter is sufficient for universal approximation of all tasks, which is not necessarily the case for more common architectures. Evidence towards the practicality of such a small task parameter space is given empirically. The task parameter space is found to be well-behaved, and simplifies workflows related to updating models as new data arrives, and training new tasks when the shared parameters are frozen. Additionally, the architecture displays robustness towards cases with few data points. The architecture's performance is compared to similar neural network architectures on ten datasets.
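The core mechanism, a fully shared network whose input is augmented with a per-task parameter, can be sketched as a forward pass. This is only an illustration: the network size, the weight values, the task names `well_a` and `well_b`, and the helper functions are hypothetical, and no training is shown. In practice the task parameters would be learned jointly with the shared weights.

```python
import math


def shared_network(inputs, weights_hidden, bias_hidden, weights_out, bias_out):
    """One-hidden-layer tanh network; all weights are shared across tasks."""
    hidden = [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
              for row, b in zip(weights_hidden, bias_hidden)]
    return sum(w * h for w, h in zip(weights_out, hidden)) + bias_out


def predict(x, task_id, task_params, shared):
    """Append the task's trainable context to the input, then apply the shared net."""
    augmented = list(x) + list(task_params[task_id])
    return shared_network(augmented, *shared)


# Hypothetical shared weights: 2 features + 1 scalar task parameter -> 2 hidden units.
shared = (
    [[0.5, -0.3, 1.0], [0.2, 0.8, -0.5]],  # hidden-layer weights
    [0.0, 0.1],                            # hidden-layer biases
    [1.0, -1.0],                           # output weights
    0.0,                                   # output bias
)
# One scalar context per task (illustrative values, normally trained).
task_params = {"well_a": [0.0], "well_b": [1.0]}

x = [1.0, 2.0]
y_a = predict(x, "well_a", task_params, shared)
y_b = predict(x, "well_b", task_params, shared)
```

The same input `x` yields different predictions for the two tasks solely through the context value, which is also why adapting to a new task with the shared weights frozen only requires fitting that small parameter.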

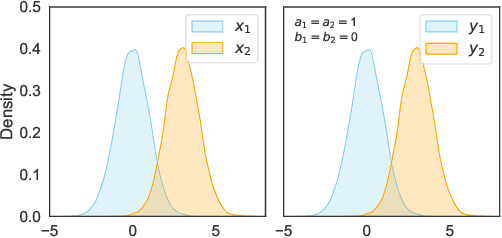

Adjustment formulas for learning causal steady-state models from closed-loop operational data

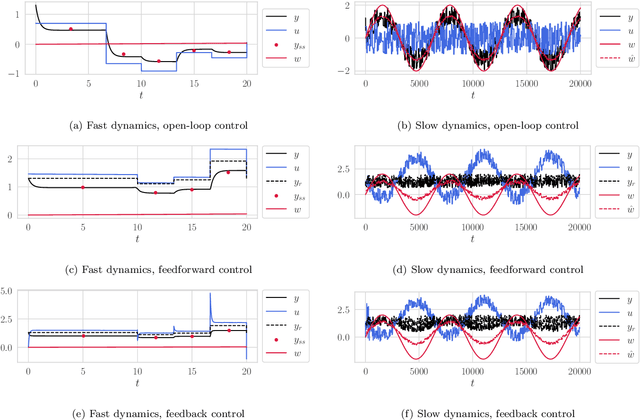

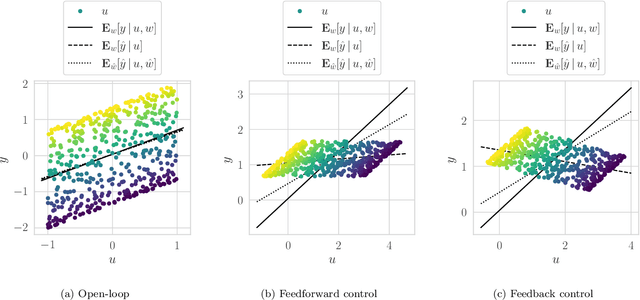

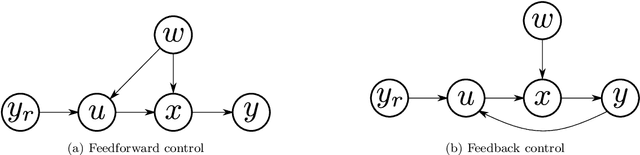

Nov 10, 2022

Abstract: Steady-state models which have been learned from historical operational data may be unfit for model-based optimization unless correlations in the training data which are introduced by control are accounted for. Using recent results from work on structural dynamical causal models, we derive a formula for adjusting for this control confounding, enabling the estimation of a causal steady-state model from closed-loop steady-state data. The formula assumes that the available data have been gathered under some fixed control law. It works by estimating and taking into account the disturbance which the controller is trying to counteract, and enables learning from data gathered under both feedforward and feedback control.

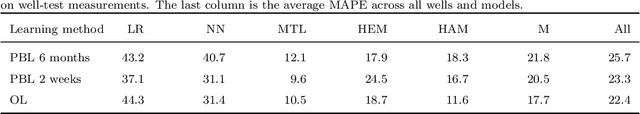

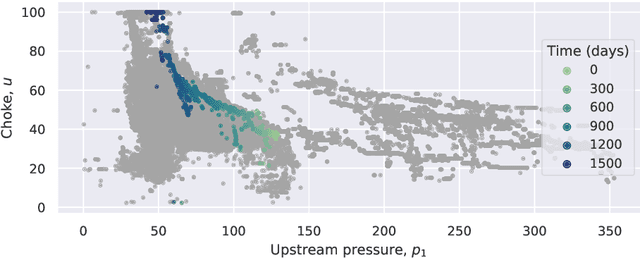

Passive learning to address nonstationarity in virtual flow metering applications

Feb 07, 2022

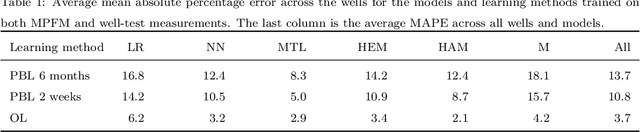

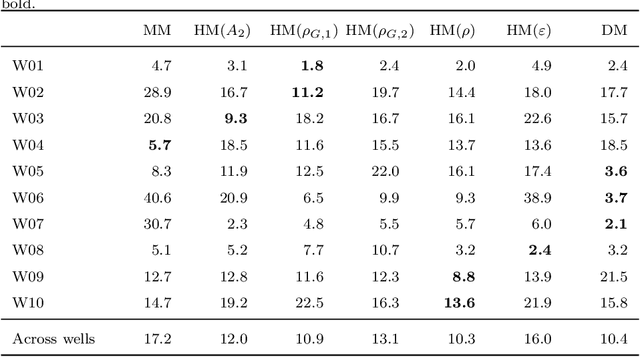

Abstract: Steady-state process models are common in virtual flow meter applications due to low computational complexity, and low model development and maintenance cost. Nevertheless, the prediction performance of steady-state models typically degrades with time due to the inherent nonstationarity of the underlying process being modeled. Few studies have investigated how learning methods can be applied to sustain the prediction accuracy of steady-state virtual flow meters. This paper explores passive learning, where the model is frequently calibrated to new data, as a way to address nonstationarity and improve long-term performance. An advantage of passive learning is that it is compatible with models used in the industry. Two passive learning methods, periodic batch learning and online learning, are applied with varying calibration frequency to train virtual flow meters. Six different model types, ranging from data-driven to first-principles, are trained on historical production data from 10 petroleum wells. The results are two-fold: first, in the presence of frequently arriving measurements, frequent model updating sustains an excellent prediction performance over time; second, in the presence of intermittent and infrequently arriving measurements, frequent updating in addition to the utilization of expert knowledge is essential to increase the prediction accuracy. The investigation may be of interest to experts developing soft-sensors for nonstationary processes, such as virtual flow meters.
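The effect of online updating under nonstationarity can be illustrated with a toy example. This sketch is not one of the six model types or the exact learning methods from the paper: it assumes a hypothetical one-parameter steady-state model `y = gain * x` whose true gain drifts slowly, and compares a model calibrated once with one that takes a gradient step on each newly arrived measurement. All names and numbers are illustrative.

```python
def run_comparison(n_steps=200, learning_rate=0.1):
    """Compare a static model with an online-updated one on drifting data."""
    static_gain = 2.0   # calibrated once, never updated
    online_gain = 2.0   # updated after every new measurement
    static_abs_err = 0.0
    online_abs_err = 0.0
    for t in range(n_steps):
        # Hypothetical nonstationary process: the true gain drifts upward.
        true_gain = 2.0 + 0.01 * t
        x = 1.0 + 0.5 * (t % 5)
        y = true_gain * x
        # Predict before the new measurement is used for learning.
        static_abs_err += abs(y - static_gain * x)
        online_error = y - online_gain * x
        online_abs_err += abs(online_error)
        # Online learning: one gradient step on the squared error.
        online_gain += learning_rate * online_error * x
    return static_abs_err / n_steps, online_abs_err / n_steps


static_mae, online_mae = run_comparison()
```

The static model's error grows with the drift, while the online model tracks the drifting gain with a small lag. Periodic batch learning would sit between the two, refitting on a window of recent data at a chosen calibration frequency.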

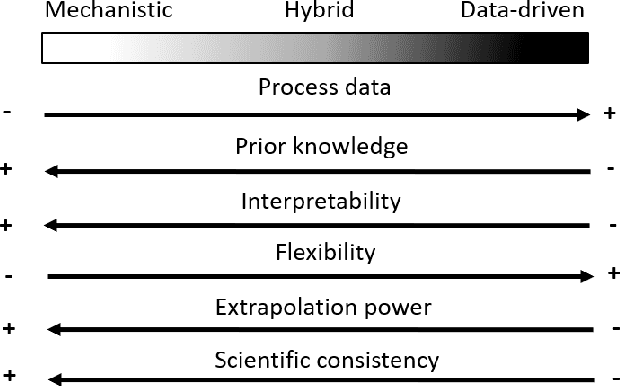

On gray-box modeling for virtual flow metering

Mar 23, 2021

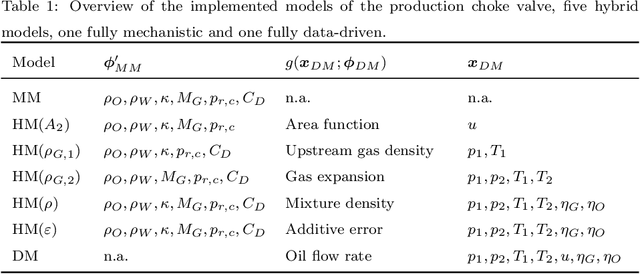

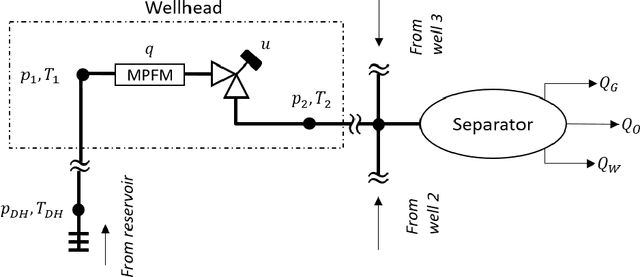

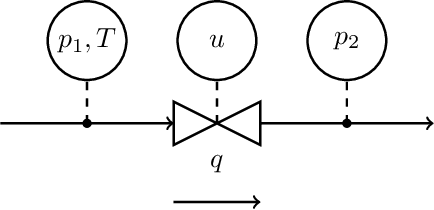

Abstract: A virtual flow meter (VFM) enables continuous prediction of flow rates in petroleum production systems. The predicted flow rates may aid the daily control and optimization of a petroleum asset. Gray-box modeling is an approach that combines mechanistic and data-driven modeling. The objective is to create a VFM with higher accuracy than a mechanistic VFM, and with a higher scientific consistency than a data-driven VFM. This article investigates five different gray-box model types in an industrial case study on 10 petroleum wells. The study casts light upon the nontrivial task of balancing learning from physics and data. The results indicate that the inclusion of data-driven elements in a mechanistic model improves the predictive performance of the model while insignificantly influencing the scientific consistency. However, the results are influenced by the available data. The findings encourage future research into online learning and the utilization of methods that incorporate data from several wells.

Multi-task learning for virtual flow metering

Mar 15, 2021

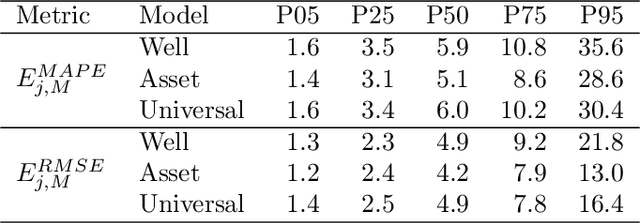

Abstract: Virtual flow metering (VFM) is a cost-effective and non-intrusive technology for inferring multi-phase flow rates in petroleum assets. Inferences about flow rates are fundamental to decision support systems which operators extensively rely on. Data-driven VFM, where mechanistic models are replaced with machine learning models, has recently gained attention due to its promise of lower maintenance costs. While excellent performance in small sample studies has been reported in the literature, there is still considerable doubt about the robustness of data-driven VFM. In this paper we propose a new multi-task learning (MTL) architecture for data-driven VFM. Our method differs from previous methods in that it enables learning across oil and gas wells. We study the method by modeling 55 wells from four petroleum assets. Our findings show that MTL improves robustness over single task methods, without sacrificing performance. MTL yields a 25-50% error reduction on average for the assets where single task architectures are struggling.

Bayesian Neural Networks for Virtual Flow Metering: An Empirical Study

Feb 02, 2021

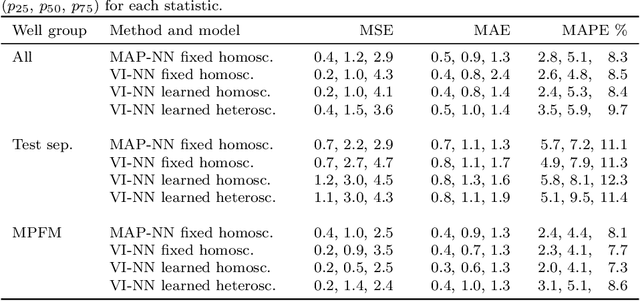

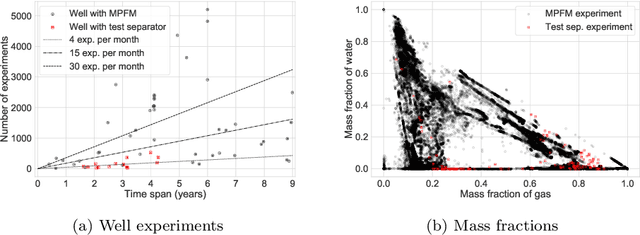

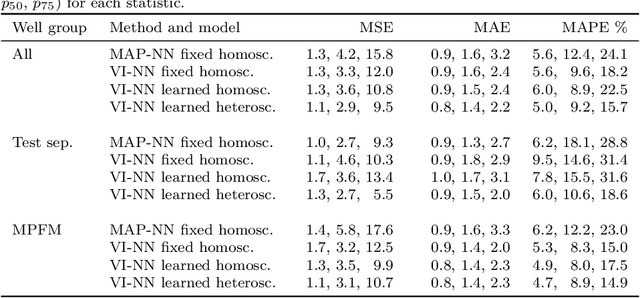

Abstract: Recent works have presented promising results from the application of machine learning (ML) to the modeling of flow rates in oil and gas wells. The encouraging results combined with advantageous properties of ML models, such as computationally cheap evaluation and ease of calibration to new data, have sparked optimism for the development of data-driven virtual flow meters (VFMs). We contribute to this development by presenting a probabilistic VFM based on a Bayesian neural network. We consider homoscedastic and heteroscedastic measurement noise, and show how to train the models using maximum a posteriori estimation and variational inference. We study the methods by modeling on a large and heterogeneous dataset, consisting of 60 wells across five different oil and gas assets. The predictive performance is analyzed on historical and future test data, where we achieve an average error of 5-6% and 9-13% for the 50% best performing models, respectively. Variational inference appears to provide more robust predictions than the reference approach on future data. The difference in prediction performance and uncertainty on historical and future data is explored in detail, and the findings motivate the development of alternative strategies for data-driven VFM.
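The distinction between homoscedastic and heteroscedastic measurement noise can be made concrete through the Gaussian negative log-likelihood that such models typically minimize (with a prior term added for maximum a posteriori estimation). The sketch below is generic and not taken from the paper; the function name `gaussian_nll` and the example values are hypothetical, and in a real model the means and noise levels would be network outputs or trainable parameters.

```python
import math


def gaussian_nll(targets, means, stds):
    """Sum of Gaussian negative log-likelihoods over all data points."""
    return sum(0.5 * math.log(2.0 * math.pi * s * s)
               + (y - m) ** 2 / (2.0 * s * s)
               for y, m, s in zip(targets, means, stds))


targets = [1.0, 2.0]
means = [1.0, 2.0]            # hypothetical model predictions

# Homoscedastic: one noise level shared by every data point.
homoscedastic = gaussian_nll(targets, means, [1.0, 1.0])

# Heteroscedastic: the noise level varies per data point, for example
# as a second output of the network (illustrative values).
heteroscedastic = gaussian_nll(targets, means, [0.5, 2.0])
```

With heteroscedastic noise, the squared error of each point is scaled by its own variance, so points the model considers noisy contribute less to the fit, at the cost of the log-variance penalty.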
