Beat Peter Müller-Stich

Semantic segmentation of surgical hyperspectral images under geometric domain shifts

Mar 20, 2023

Abstract: Robust semantic segmentation of intraoperative image data could pave the way for automatic surgical scene understanding and autonomous robotic surgery. Geometric domain shifts, however, although common in real-world open surgeries due to variations in surgical procedures or situs occlusions, remain a topic largely unaddressed in the field. To address this gap in the literature, we (1) present the first analysis of state-of-the-art (SOA) semantic segmentation networks in the presence of geometric out-of-distribution (OOD) data, and (2) address generalizability with a dedicated augmentation technique termed "Organ Transplantation" that we adapted from the general computer vision community. According to a comprehensive validation on six different OOD data sets comprising 600 RGB and hyperspectral imaging (HSI) cubes from 33 pigs semantically annotated with 19 classes, we demonstrate a large performance drop of SOA organ segmentation networks applied to geometric OOD data. Surprisingly, this holds true not only for conventional RGB data (drop of Dice similarity coefficient (DSC) by 46 %) but also for HSI data (drop by 45 %), despite the latter's rich information content per pixel. Our augmentation scheme improves on the SOA DSC by up to 67 % (RGB) and 90 % (HSI) and brings performance on real OOD test data on par with in-distribution performance. The simplicity and effectiveness of our augmentation scheme make it a valuable network-independent tool for addressing geometric domain shifts in semantic scene segmentation of intraoperative data. Our code and pre-trained models will be made publicly available.
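The abstract does not spell out how "Organ Transplantation" works. As a rough illustration only, the sketch below assumes a copy-paste style augmentation in which all pixels of one annotated class are copied from a donor image into a recipient image, together with their labels. The function name `transplant_organ` and its parameters are hypothetical and are not taken from the authors' code.

```python
import numpy as np


def transplant_organ(donor_img, donor_lbl, recipient_img, recipient_lbl, class_id):
    """Copy every pixel labelled `class_id` from the donor into the recipient.

    Assumed shapes: images are (H, W, C) arrays (C = 3 for RGB, more for
    HSI cubes), label maps are (H, W) integer arrays of the same H and W.
    This is an illustrative guess at a copy-paste augmentation, not the
    published implementation.
    """
    mask = donor_lbl == class_id          # (H, W) boolean mask of the organ
    out_img = recipient_img.copy()        # leave the inputs untouched
    out_lbl = recipient_lbl.copy()
    out_img[mask] = donor_img[mask]       # paste all channels at masked pixels
    out_lbl[mask] = class_id              # keep annotation consistent with image
    return out_img, out_lbl
```

Because the mask indexes only the two spatial axes, the same function applies unchanged to RGB images and to HSI cubes with many spectral channels.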

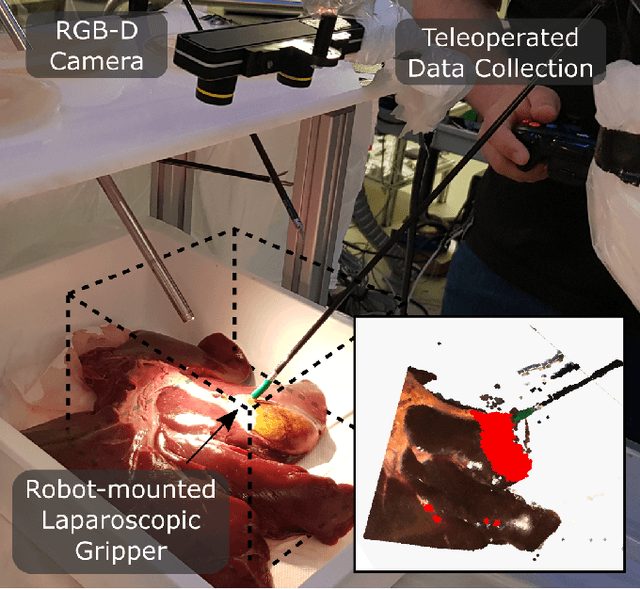

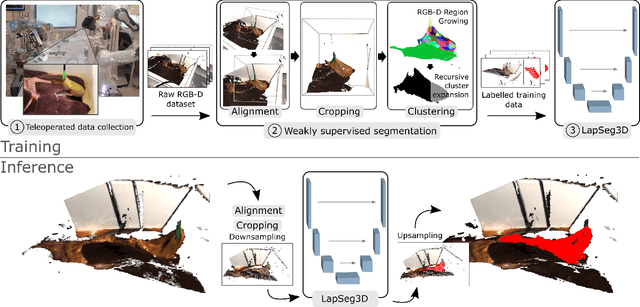

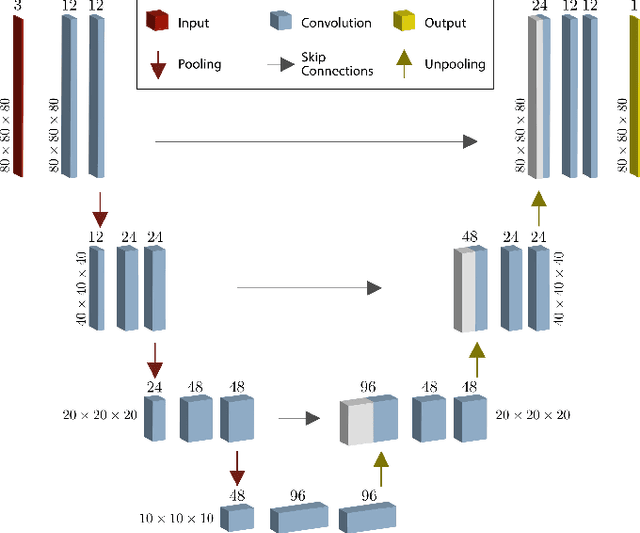

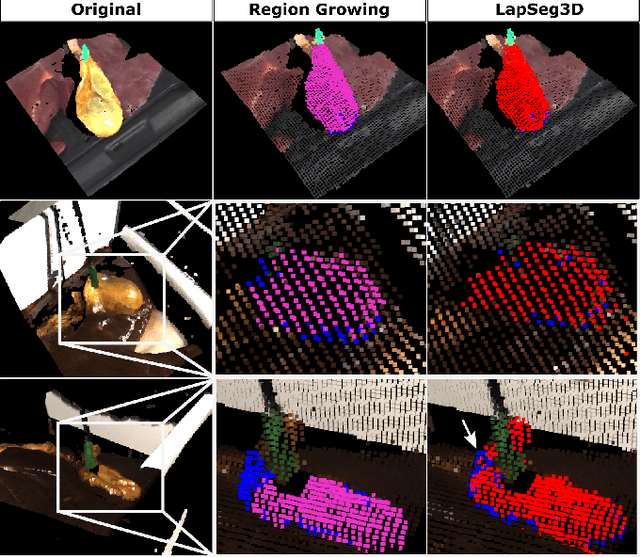

LapSeg3D: Weakly Supervised Semantic Segmentation of Point Clouds Representing Laparoscopic Scenes

Jul 15, 2022

Abstract: The semantic segmentation of surgical scenes is a prerequisite for task automation in robot-assisted interventions. We propose LapSeg3D, a novel DNN-based approach for the voxel-wise annotation of point clouds representing surgical scenes. As the manual annotation of training data is highly time-consuming, we introduce a semi-autonomous clustering-based pipeline for the annotation of the gallbladder, which is used to generate segmented labels for the DNN. When evaluated against manually annotated data, LapSeg3D achieves an F1 score of 0.94 for gallbladder segmentation on various datasets of ex-vivo porcine livers. We show that LapSeg3D generalizes accurately across different gallbladders and datasets recorded with different RGB-D camera systems.

Towards Augmented Reality-based Suturing in Monocular Laparoscopic Training

Jan 19, 2020

Abstract: Minimally Invasive Surgery (MIS) techniques have gained rapid popularity among surgeons since they offer significant clinical benefits including reduced recovery time and diminished post-operative adverse effects. However, conventional endoscopic systems output monocular video, which compromises depth perception, spatial orientation and field of view. Suturing is one of the most complex tasks performed under these circumstances. A key component of this task is the interplay between the needle holder and the surgical needle. Reliable 3D localization of needle and instruments in real time could be used to augment the scene with additional parameters that describe their quantitative geometric relation, e.g. the relation between the estimated needle plane and its rotation center and the instrument. This could contribute towards standardization and training of basic skills and operative techniques, enhance overall surgical performance, and reduce the risk of complications. The paper proposes an Augmented Reality environment with quantitative and qualitative visual representations to enhance the outcomes of laparoscopic training performed on a silicone pad. This is enabled by a multi-task supervised deep neural network which performs multi-class segmentation and depth map prediction. The scarcity of labels was overcome by creating a virtual environment which resembles the surgical training scenario to generate dense depth maps and segmentation maps. The proposed convolutional neural network was tested on real surgical training scenarios and was shown to be robust to occlusion of the needle. The network achieves a Dice score of 0.67 for surgical needle segmentation, 0.81 for needle holder instrument segmentation and a mean absolute error of 6.5 mm for depth estimation.
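For readers unfamiliar with the two metrics reported here, the following is a minimal sketch of how a Dice score and a mean absolute error are commonly computed with NumPy. The function names and the convention for two empty masks are assumptions made for illustration; the abstract does not describe the authors' evaluation code.

```python
import numpy as np


def dice_score(pred_mask, true_mask):
    """Dice score 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    denom = pred.sum() + true.sum()
    if denom == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return float(2.0 * np.logical_and(pred, true).sum() / denom)


def mean_absolute_error(pred_depth, true_depth):
    """Average absolute per-pixel difference, in the units of the inputs."""
    pred = np.asarray(pred_depth, dtype=float)
    true = np.asarray(true_depth, dtype=float)
    return float(np.mean(np.abs(pred - true)))
```

For a single binary mask, the Dice score is numerically identical to the F1 score, so segmentation results reported under either name can be read on the same 0 to 1 scale.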
