Bart Bakker

Anomaly Detection with Deep Perceptual Autoencoders

Jun 23, 2020

Abstract: Anomaly detection is the problem of recognizing abnormal inputs based on the seen examples of normal data. Despite recent advances in deep learning for recognizing image anomalies, these methods still prove incapable of handling complex medical images, such as barely visible abnormalities in chest X-rays and metastases in lymph nodes. To address this problem, we introduce a powerful new method for image anomaly detection. It relies on the classical autoencoder approach with a re-designed training pipeline to handle high-resolution, complex images and a robust way of computing an image abnormality score. We revisit the very problem statement of fully unsupervised anomaly detection, where no abnormal examples at all are provided during the model setup. We propose to relax this unrealistic assumption by using a very small number of anomalies of confined variability merely to initiate the search for the hyperparameters of the model. We evaluate our solution on natural image datasets with a known benchmark, as well as on two medical datasets containing radiology and digital pathology images. The proposed approach suggests a new strong baseline for image anomaly detection and outperforms state-of-the-art approaches in complex medical image analysis tasks.
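The scoring idea in this abstract can be illustrated with a minimal sketch. It assumes a reconstruction-based score: an image is passed through an autoencoder, and its abnormality is the distance between features of the input and features of its reconstruction. The `toy_reconstruct` and `toy_features` functions are hypothetical stand-ins, not the paper's networks, and ROC AUC is assumed here as the criterion that a handful of known anomalies would provide for comparing hyperparameter settings.

```python
from statistics import mean

def anomaly_score(image, reconstruct, features):
    """Mean absolute difference between features of an image and of its reconstruction."""
    f_in = features(image)
    f_out = features(reconstruct(image))
    return mean(abs(a - b) for a, b in zip(f_in, f_out))

def roc_auc(normal_scores, anomaly_scores):
    """Chance that a random anomaly scores above a random normal sample (ties count 0.5)."""
    wins = 0.0
    for a in anomaly_scores:
        for n in normal_scores:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(normal_scores) * len(anomaly_scores))

# Toy stand-ins (assumptions): "normal" pixels lie in [0, 1], and the toy
# autoencoder can only reproduce that range, so other values reconstruct badly.
def toy_reconstruct(image):
    return [min(max(p, 0.0), 1.0) for p in image]

def toy_features(image):
    # Differences between neighbouring pixels, a crude stand-in for learned features.
    return [b - a for a, b in zip(image, image[1:])]

normal_images = [[0.1, 0.5, 0.9], [0.2, 0.2, 0.7]]
few_anomalies = [[0.1, 3.0, 0.2], [0.5, 0.5, -2.0]]  # the "very small number" of known anomalies

normal_scores = [anomaly_score(x, toy_reconstruct, toy_features) for x in normal_images]
anomaly_scores = [anomaly_score(x, toy_reconstruct, toy_features) for x in few_anomalies]

# A hyperparameter setting that separates the two groups better yields a higher AUC.
auc = roc_auc(normal_scores, anomaly_scores)
```

In this toy setup the normal images reconstruct perfectly and the anomalies do not, so the two score groups separate cleanly. With real models, the same AUC would be computed once per candidate hyperparameter setting.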

Deep learning assessment of breast terminal duct lobular unit involution: towards automated prediction of breast cancer risk

Oct 31, 2019

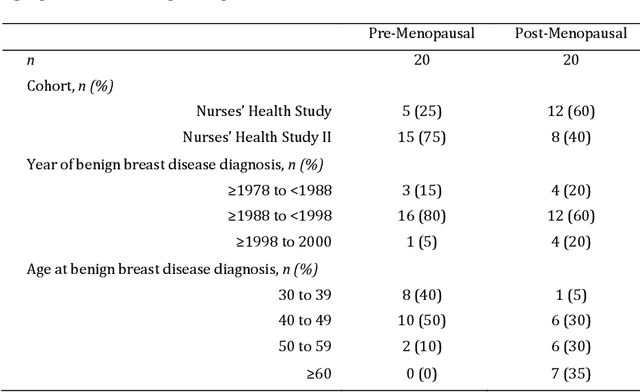

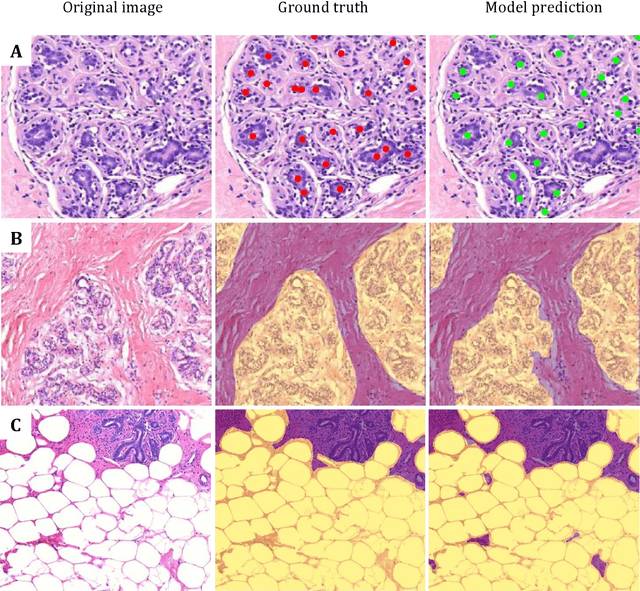

Abstract: Terminal ductal lobular unit (TDLU) involution is the regression of milk-producing structures in the breast. Women with less TDLU involution are more likely to develop breast cancer. A major bottleneck in studying TDLU involution in large cohort studies is the need for labor-intensive manual assessment of TDLUs. We developed a computational pathology solution to automatically capture TDLU involution measures. Whole slide images (WSIs) of benign breast biopsies were obtained from the Nurses' Health Study (NHS). A first set of 92 WSIs was annotated for TDLUs, acini and adipose tissue to train deep convolutional neural network (CNN) models for detection of acini, and segmentation of TDLUs and adipose tissue. These networks were integrated into a single computational method to capture TDLU involution measures including number of TDLUs per tissue area, median TDLU span and median number of acini per TDLU. We validated our method on 40 additional WSIs by comparing with manually acquired measures. Our CNN models detected acini with an F1 score of 0.73$\pm$0.09, and segmented TDLUs and adipose tissue with Dice scores of 0.86$\pm$0.11 and 0.86$\pm$0.04, respectively. The inter-observer ICC scores for manual assessments on 40 WSIs of number of TDLUs per tissue area, median TDLU span, and median acini count per TDLU were 0.71 (95% CI [0.51, 0.83]), 0.81 (95% CI [0.67, 0.90]), and 0.73 (95% CI [0.54, 0.85]), respectively. Intra-observer reliability was evaluated on 10 of the 40 WSIs, with ICC scores of >0.8. Inter-observer ICC scores between automated results and the mean of the two observers were: 0.80 (95% CI [0.63, 0.90]) for number of TDLUs per tissue area, 0.57 (95% CI [0.19, 0.77]) for median TDLU span, and 0.80 (95% CI [0.62, 0.89]) for median acini count per TDLU. TDLU involution measures evaluated by manual and automated assessment were inversely associated with age and menopausal status.
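For readers unfamiliar with the metrics quoted above, the following sketch shows the standard definitions of the Dice and F1 scores, plus one plausible way to aggregate the three involution measures. The function names, the flat-list mask representation, and the units (micrometres, square millimetres) are assumptions made for illustration; the abstract does not specify them.

```python
from statistics import median

def dice_score(pred_mask, true_mask):
    """Dice = 2*|A and B| / (|A| + |B|) for binary masks given as flat lists of 0/1."""
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total

def f1_score(true_positives, false_positives, false_negatives):
    """F1 = 2*TP / (2*TP + FP + FN), e.g. for detected versus annotated acini."""
    denom = 2 * true_positives + false_positives + false_negatives
    return 0.0 if denom == 0 else 2.0 * true_positives / denom

def involution_measures(tdlu_spans_um, acini_per_tdlu, tissue_area_mm2):
    """Aggregate per-TDLU values into the three slide-level measures (units assumed)."""
    return {
        "tdlus_per_mm2": len(tdlu_spans_um) / tissue_area_mm2,
        "median_span_um": median(tdlu_spans_um),
        "median_acini_per_tdlu": median(acini_per_tdlu),
    }

# Hypothetical slide with three detected TDLUs on 2 mm^2 of tissue.
measures = involution_measures([100.0, 300.0, 200.0], [10, 30, 20], tissue_area_mm2=2.0)
```

Dice and F1 are the same formula applied to different units: pixels for segmentation masks, and matched objects for detections.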

i-RIM applied to the fastMRI challenge

Oct 20, 2019

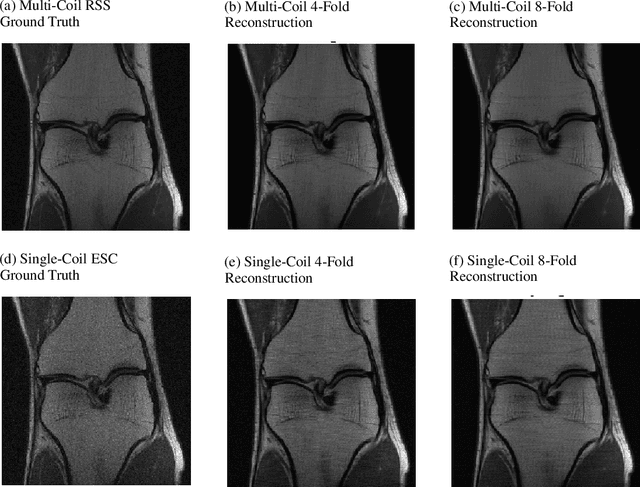

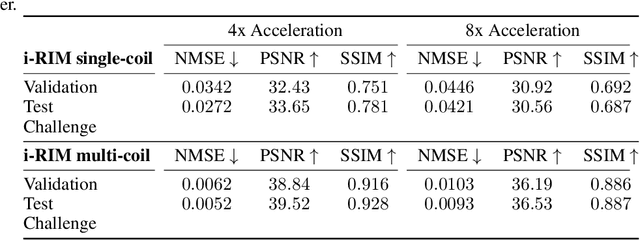

Abstract: We, team AImsterdam, summarize our submission to the fastMRI challenge (Zbontar et al., 2018). Our approach builds on recent advances in invertible learning to infer models as presented in Putzky and Welling (2019). Both our single-coil and our multi-coil models share the same basic architecture.

Perceptual Image Anomaly Detection

Sep 12, 2019

Abstract: We present a novel method for image anomaly detection, where algorithms that use samples drawn from some distribution of "normal" data aim to detect out-of-distribution (abnormal) samples. Our approach includes a combination of encoder and generator for mapping an image distribution to a predefined latent distribution and vice versa. It leverages Generative Adversarial Networks to learn these data distributions and uses perceptual loss for the detection of image abnormality. To accomplish this goal, we first introduce a new similarity metric, which expresses the perceived similarity between images and is robust to changes in image contrast. Second, we introduce a novel approach for the selection of weights of a multi-objective loss function (image reconstruction and distribution mapping) in the absence of a validation dataset for hyperparameter tuning. After training, our model measures the abnormality of the input image as the perceptual dissimilarity between it and the closest generated image of the modeled data distribution. The proposed approach is extensively evaluated on several publicly available image benchmarks and achieves state-of-the-art performance.
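The two ideas in this abstract, a contrast-robust dissimilarity and scoring against the closest generated image, can be sketched under strong simplifying assumptions. Below, contrast robustness is obtained by standardizing each feature vector to zero mean and unit variance before comparison, and the "closest generated image" is found by a plain minimum over a small candidate list. Both choices are illustrative substitutes, not the metric or the encoder/generator search described in the paper, and all names are hypothetical.

```python
from statistics import mean, pstdev

def standardize(values, eps=1e-8):
    """Shift to zero mean and scale to unit variance (eps avoids division by zero)."""
    m = mean(values)
    s = pstdev(values)
    return [(v - m) / (s + eps) for v in values]

def contrast_robust_distance(a, b):
    """Mean absolute difference after standardization.

    Nearly unchanged when an input is replaced by gain * input + offset
    with gain > 0, which is a simple model of a contrast change.
    """
    return mean(abs(x - y) for x, y in zip(standardize(a), standardize(b)))

def anomaly_score(image, generated_images):
    """Dissimilarity between the image and the closest candidate generated image."""
    return min(contrast_robust_distance(image, g) for g in generated_images)

# Toy feature vectors (assumptions standing in for perceptual features).
reference = [0.0, 1.0, 2.0, 3.0]
recontrasted = [2.0 * v + 5.0 for v in reference]  # same content, different contrast
different = [3.0, 0.0, 2.0, 1.0]                   # different content

score_same = anomaly_score(recontrasted, [reference, different])
score_other = contrast_robust_distance(reference, different)
```

The recontrasted vector scores close to zero against the reference, while the vector with different content does not. That is the behaviour a contrast-robust abnormality score is meant to have.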
