Arihant Jain

Knowledge Models for Cancer Clinical Practice Guidelines: Construction, Management and Usage in Question Answering

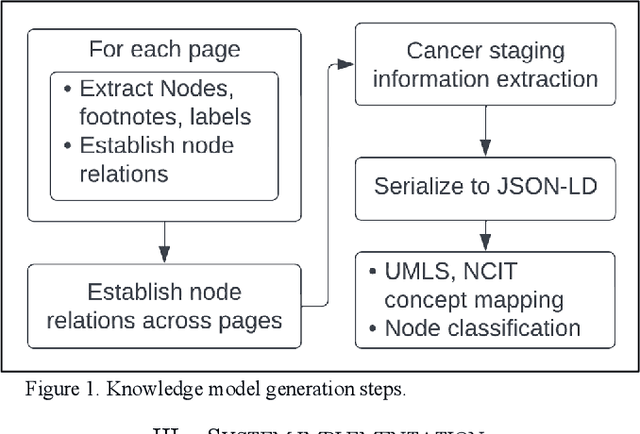

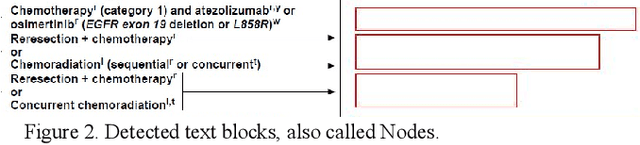

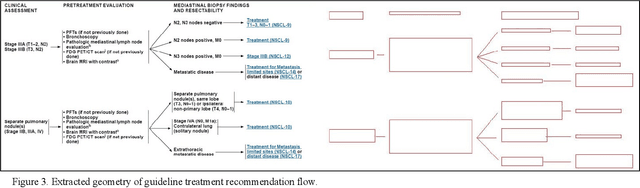

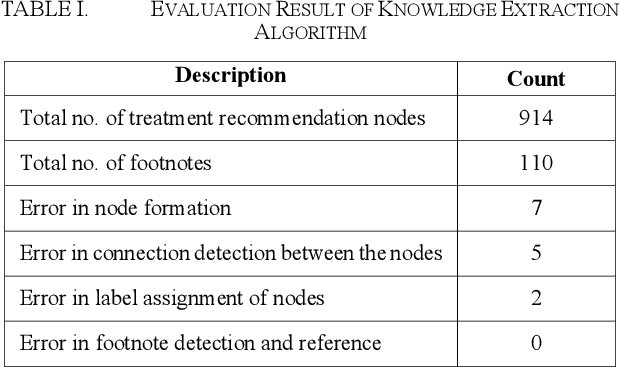

Jul 23, 2024

Abstract:An automated knowledge modeling algorithm for Cancer Clinical Practice Guidelines (CPGs) extracts the knowledge contained in the CPG documents and transforms it into a programmatically interactable, easy-to-update structured model with minimal human intervention. The existing automated algorithms have minimal scope and cannot handle the varying complexity of the knowledge content in the CPGs for different cancer types. This work proposes an improved automated knowledge modeling algorithm to create knowledge models from the National Comprehensive Cancer Network (NCCN) CPGs in Oncology for different cancer types. The proposed algorithm has been evaluated with NCCN CPGs for four different cancer types. We also proposed an algorithm to compare the knowledge models for different versions of a guideline to discover the specific changes introduced in the treatment protocol of a new version. We created a question-answering (Q&A) framework with the guideline knowledge models as the augmented knowledge base to study our ability to query the knowledge models. We compiled a set of 32 question-answer pairs derived from two reliable data sources for the treatment of Non-Small Cell Lung Cancer (NSCLC) to evaluate the Q&A framework. The framework was evaluated against the question-answer pairs from one data source, and it can generate the answers with 54.5% accuracy from the treatment algorithm and 81.8% accuracy from the discussion part of the NCCN NSCLC guideline knowledge model.

Automated Knowledge Modeling for Cancer Clinical Practice Guidelines

Jul 15, 2023

Abstract:Clinical Practice Guidelines (CPGs) for cancer diseases evolve rapidly due to new evidence generated by active research. Currently, CPGs are primarily published in a document format that is ill-suited for managing this developing knowledge. A knowledge model of the guidelines document suitable for programmatic interaction is required. This work proposes an automated method for extraction of knowledge from National Comprehensive Cancer Network (NCCN) CPGs in Oncology and generating a structured model containing the retrieved knowledge. The proposed method was tested using two versions of NCCN Non-Small Cell Lung Cancer (NSCLC) CPG to demonstrate the effectiveness in faithful extraction and modeling of knowledge. Three enrichment strategies using Cancer staging information, Unified Medical Language System (UMLS) Metathesaurus & National Cancer Institute thesaurus (NCIt) concepts, and Node classification are also presented to enhance the model towards enabling programmatic traversal and querying of cancer care guidelines. The Node classification was performed using a Support Vector Machine (SVM) model, achieving a classification accuracy of 0.81 with 10-fold cross-validation.

TAME: Task Agnostic Continual Learning using Multiple Experts

Oct 08, 2022

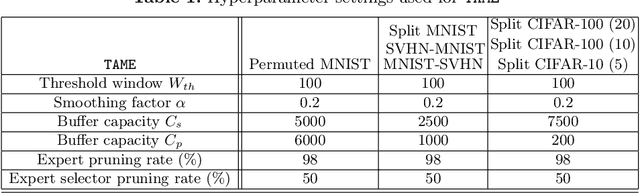

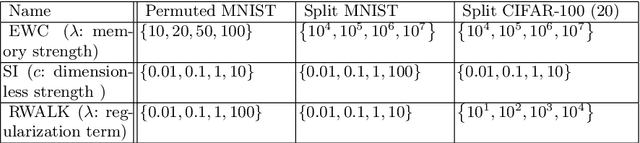

Abstract:The goal of lifelong learning is to continuously learn from non-stationary distributions, where the non-stationarity is typically imposed by a sequence of distinct tasks. Prior works have mostly considered idealistic settings, where the identity of tasks is known at least at training. In this paper, we focus on a fundamentally harder, so-called task-agnostic setting where the task identities are not known and the learning machine needs to infer them from the observations. Our algorithm, which we call TAME (Task-Agnostic continual learning using Multiple Experts), automatically detects the shift in data distributions and switches between task expert networks in an online manner. At training, the strategy for switching between tasks hinges on a simple observation: the onset of each new task is marked by a statistically significant deviation in the value of the loss function. At inference, the switching between experts is governed by the selector network, which forwards the test sample to its relevant expert network. The selector network is trained on a small subset of data drawn uniformly at random. We control the growth of the task expert networks as well as the selector network by employing online pruning. Our experimental results show the efficacy of our approach on benchmark continual learning data sets, outperforming the previous task-agnostic methods and even the techniques that admit task identities at both training and testing, while at the same time using a comparable model size.
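
The loss-based switching idea in the abstract above can be illustrated with a minimal sketch. It assumes a simple rule that the abstract does not specify: a loss value is flagged when it exceeds the mean of a recent window by more than a fixed number of standard deviations. The function name `detect_task_shifts` and the parameters `window` and `threshold` are hypothetical and are not taken from the TAME paper, whose actual statistical test may differ.

```python
from statistics import mean, stdev


def detect_task_shifts(losses, window=20, threshold=3.0):
    """Return the steps at which the loss jumps well above its recent level.

    Illustrative assumption: a jump is "significant" when the loss exceeds
    the mean of the last `window` values by more than `threshold` standard
    deviations. This is a stand-in rule, not the test used by TAME.
    """
    shifts = []
    history = []
    for step, loss in enumerate(losses):
        if len(history) >= window:
            mu = mean(history)
            sigma = max(stdev(history), 1e-8)  # guard against zero spread
            if loss > mu + threshold * sigma:
                shifts.append(step)
                history = []  # restart the statistics for the new task
        history.append(loss)
        history = history[-window:]  # keep only the most recent values
    return shifts


# Synthetic loss curve: a low plateau followed by a sudden jump at step 50.
losses = [1.0 + 0.01 * (i % 3) for i in range(50)]
losses += [5.0 + 0.01 * (i % 3) for i in range(50)]
print(detect_task_shifts(losses))
```

In a multi-expert setup, each flagged step would be the point at which training switches to a different expert network; resetting the window after a detection keeps the statistics of the previous task from masking the next shift.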

A Closer Look at Smoothness in Domain Adversarial Training

Jun 16, 2022

Abstract:Domain adversarial training has been ubiquitous for achieving invariant representations and is used widely for various domain adaptation tasks. In recent times, methods converging to smooth optima have shown improved generalization for supervised learning tasks like classification. In this work, we analyze the effect of smoothness-enhancing formulations on domain adversarial training, the objective of which is a combination of task loss (e.g., classification, regression) and adversarial terms. We find that converging to a smooth minimum with respect to (w.r.t.) task loss stabilizes the adversarial training, leading to better performance on the target domain. In contrast to task loss, our analysis shows that converging to a smooth minimum w.r.t. adversarial loss leads to sub-optimal generalization on the target domain. Based on the analysis, we introduce the Smooth Domain Adversarial Training (SDAT) procedure, which effectively enhances the performance of existing domain adversarial methods for both classification and object detection tasks. Our analysis also provides insight into the extensive usage of SGD over Adam in the community for domain adversarial training.

S$^3$VAADA: Submodular Subset Selection for Virtual Adversarial Active Domain Adaptation

Sep 18, 2021

Abstract:Unsupervised domain adaptation (DA) methods have focused on achieving maximal performance through aligning features from source and target domains without using labeled data in the target domain. In real-world scenarios, however, it might be feasible to get labels for a small proportion of target data. In these scenarios, it is important to select maximally informative samples to label and find an effective way to combine them with the existing knowledge from source data. Towards achieving this, we propose S$^3$VAADA, which i) introduces a novel submodular criterion to select a maximally informative subset to label and ii) enhances a cluster-based DA procedure through novel improvements to effectively utilize all the available data for improving generalization on the target domain. Our approach consistently outperforms the competing state-of-the-art approaches on datasets with varying degrees of domain shifts.

A Novel Hybrid CNN-AIS Visual Pattern Recognition Engine

Mar 11, 2015

Abstract:Machine learning methods are used today for most recognition problems. Convolutional Neural Networks (CNNs) have time and again proved successful for many image processing tasks, primarily because of their architecture. In this paper, we propose to apply CNNs to small data sets, such as personal albums or similar settings where the size of the training dataset is a limitation, within the framework of a proposed hybrid CNN-AIS model. We use Artificial Immune System (AIS) principles to enhance the small training data set. A layer of Clonal Selection is added to the local filtering and max pooling of the CNN architecture. The proposed architecture is evaluated using the standard MNIST dataset by limiting the data size and also with a small personal data sample belonging to two different classes. Experimental results show that the proposed hybrid CNN-AIS based recognition engine works well when the training data is limited in size.
