Arie E. Kaufman

Memento: Towards Proactive Visualization of Everyday Memories with Personal Wearable AR Assistant

Jan 27, 2026

Abstract: We introduce Memento, a conversational AR assistant that permanently captures and memorizes users' verbal queries alongside their spatiotemporal and activity contexts. By storing these "memories," Memento discovers connections between users' recurring interests and the contexts that trigger them. Upon detecting similar or identical spatiotemporal activity, Memento proactively recalls user interests and delivers up-to-date responses through AR, seamlessly integrating the AR experience into users' daily routines. Unlike prior work, each interaction in Memento is not a transient event but part of a connected series of interactions with a coherent long-term perspective, tailored to the user's broader multimodal (visual, spatial, temporal, and embodied) context. We conduct a preliminary evaluation based on feedback from participants with diverse expertise in immersive apps, and explore the value of a proactive, context-aware AR assistant in everyday settings. We share our findings and challenges in designing a proactive, context-aware AR system.

Explainable XR: Understanding User Behaviors of XR Environments using LLM-assisted Analytics Framework

Jan 23, 2025

Abstract: We present Explainable XR, an end-to-end framework for analyzing user behavior in diverse eXtended Reality (XR) environments by leveraging Large Language Models (LLMs) for data interpretation assistance. Existing XR user analytics frameworks face challenges in handling cross-virtuality (AR, VR, MR) transitions, multi-user collaborative application scenarios, and the complexity of multimodal data. Explainable XR addresses these challenges by providing a virtuality-agnostic solution for the collection, analysis, and visualization of immersive sessions. We propose three main components in our framework: (1) a novel user data recording schema, called User Action Descriptor (UAD), that can capture the users' multimodal actions, along with their intents and contexts; (2) a platform-agnostic XR session recorder; and (3) a visual analytics interface that offers LLM-assisted insights tailored to the analysts' perspectives, facilitating the exploration and analysis of the recorded XR session data. We demonstrate the versatility of Explainable XR through five use-case scenarios, in both individual and collaborative XR applications across virtualities. Our technical evaluation and user studies show that Explainable XR provides a highly usable analytics solution for understanding user actions and delivering multifaceted, actionable insights into user behaviors in immersive environments.

Carve3D: Improving Multi-view Reconstruction Consistency for Diffusion Models with RL Finetuning

Dec 21, 2023

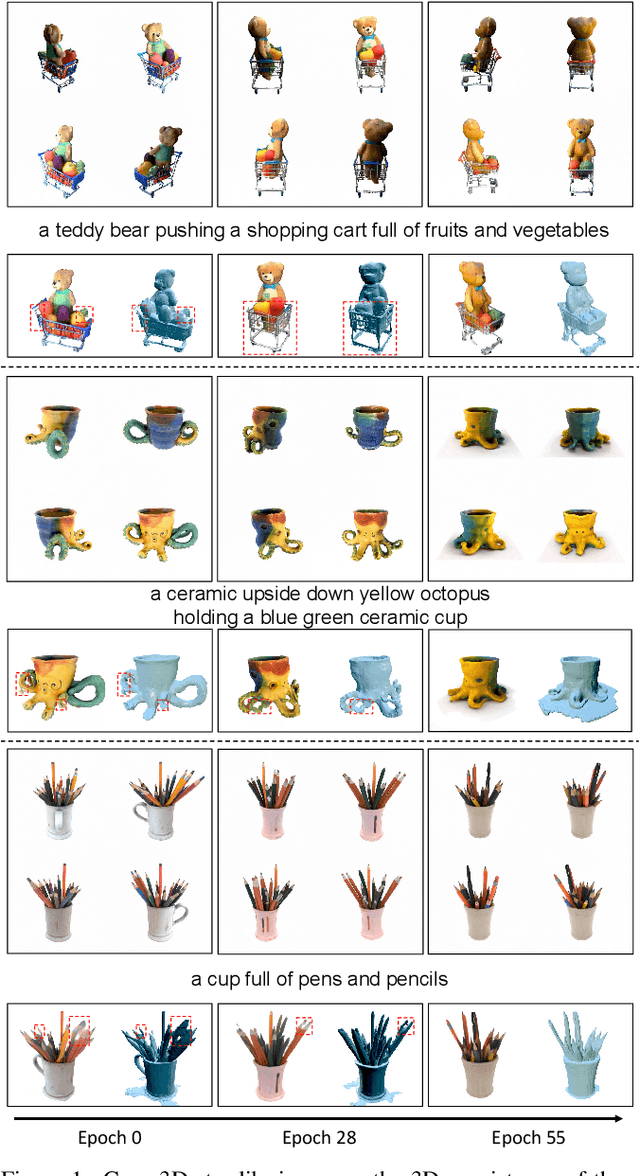

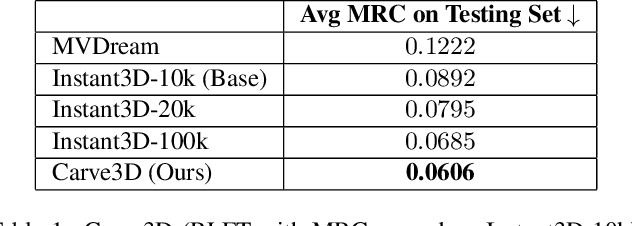

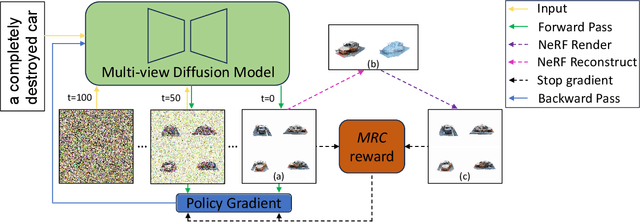

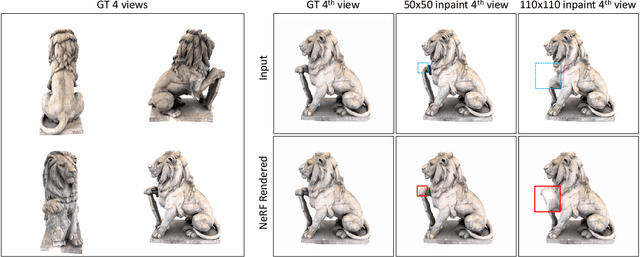

Abstract: Recent advancements in the text-to-3D task leverage finetuned text-to-image diffusion models to generate multi-view images, followed by NeRF reconstruction. Yet, existing supervised finetuned (SFT) diffusion models still suffer from multi-view inconsistency and the resulting NeRF artifacts. Although training longer with SFT improves consistency, it also causes distribution shift, which reduces diversity and realistic details. We argue that the SFT of multi-view diffusion models resembles the instruction finetuning stage of the LLM alignment pipeline and can benefit from RL finetuning (RLFT) methods. Essentially, RLFT methods optimize models beyond their SFT data distribution by using their own outputs, effectively mitigating distribution shift. To this end, we introduce Carve3D, an RLFT method coupled with the Multi-view Reconstruction Consistency (MRC) metric, to improve the consistency of multi-view diffusion models. To compute MRC on a set of multi-view images, we compare them with their corresponding renderings of the reconstructed NeRF at the same viewpoints. We validate the robustness of MRC with extensive experiments conducted under controlled inconsistency levels. We enhance the base RLFT algorithm to stabilize the training process, reduce distribution shift, and identify scaling laws. Through qualitative and quantitative experiments, along with a user study, we demonstrate Carve3D's improved multi-view consistency, the resulting superior NeRF reconstruction quality, and minimal distribution shift compared to longer SFT. Project webpage: https://desaixie.github.io/carve-3d.
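The MRC idea described in the abstract (compare each generated view with a rendering of the reconstructed NeRF at the same viewpoint) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `view_distance` and `mrc_score` are hypothetical, mean squared error is used as a stand-in because the abstract does not name the image distance, and NeRF reconstruction and rendering are assumed to have happened elsewhere, so the renderings are passed in as plain pixel lists.

```python
from typing import Sequence


def view_distance(generated: Sequence[float], rendered: Sequence[float]) -> float:
    """Mean squared error between two images given as flat pixel sequences.

    Assumption: MSE is only a placeholder for whatever image distance
    the actual metric uses.
    """
    if len(generated) != len(rendered) or len(generated) == 0:
        raise ValueError("images must be non-empty and the same size")
    return sum((g - r) ** 2 for g, r in zip(generated, rendered)) / len(generated)


def mrc_score(
    generated_views: Sequence[Sequence[float]],
    rendered_views: Sequence[Sequence[float]],
) -> float:
    """Average per-view distance between generated images and the
    renderings of the reconstructed scene at the same viewpoints.

    Lower values mean the generated views agree better with a single
    3D reconstruction, i.e. they are more multi-view consistent.
    """
    if len(generated_views) != len(rendered_views) or len(generated_views) == 0:
        raise ValueError("need one rendering per generated view")
    distances = [view_distance(g, r) for g, r in zip(generated_views, rendered_views)]
    return sum(distances) / len(distances)


# Two toy 2-pixel "views"; the first view disagrees with its rendering.
generated = [[0.0, 1.0], [0.5, 0.5]]
rendered = [[0.0, 0.0], [0.5, 0.5]]
score = mrc_score(generated, rendered)
```

In an RL finetuning setting, a score of this kind (negated, so that higher is better) could serve as the reward signal, which is the role the abstract assigns to MRC.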

NeuRegenerate: A Framework for Visualizing Neurodegeneration

Feb 02, 2022

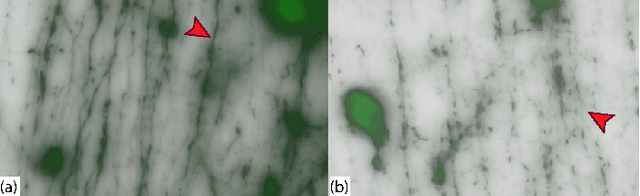

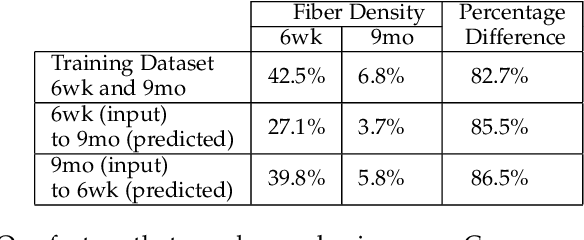

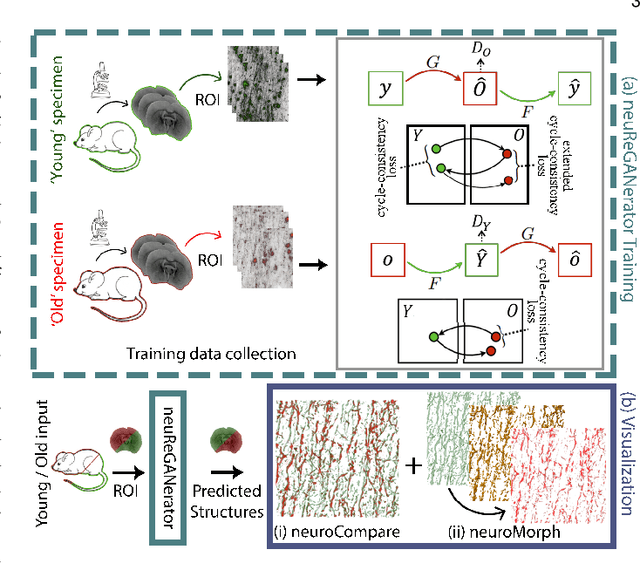

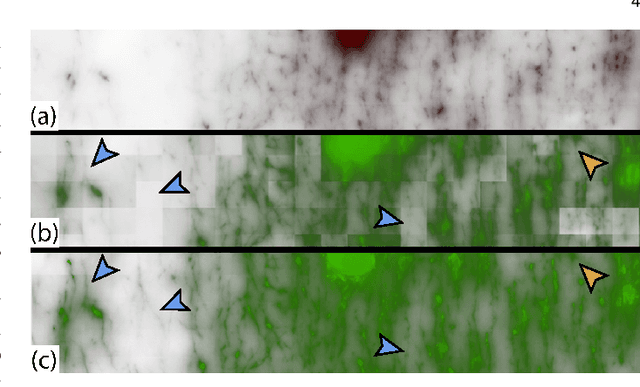

Abstract: Recent advances in high-resolution microscopy have allowed scientists to better understand the underlying brain connectivity. However, because biological specimens can only be imaged at a single timepoint, studying changes to neural projections is limited to general observations using population analysis. In this paper, we introduce NeuRegenerate, a novel end-to-end framework for the prediction and visualization of changes in neural fiber morphology within a subject, for specified age-timepoints. To predict projections, we present neuReGANerator, a deep-learning network based on a cycle-consistent generative adversarial network (cycleGAN) that translates features of neuronal structures in a region, across age-timepoints, for large brain microscopy volumes. We improve the reconstruction quality of neuronal structures by implementing a density multiplier and a new loss function, called the hallucination loss. Moreover, to alleviate artifacts that occur due to tiling of large input volumes, we introduce a spatial-consistency module in the training pipeline of neuReGANerator. We show that neuReGANerator has a reconstruction accuracy of 94% in predicting neuronal structures. Finally, to visualize the predicted change in projections, NeuRegenerate offers two modes: (1) neuroCompare, to simultaneously visualize the difference in the structures of the neuronal projections across the age-timepoints, and (2) neuroMorph, a vesselness-based morphing technique to interactively visualize the transformation of the structures from one age-timepoint to the other. Our framework is designed specifically for volumes acquired using wide-field microscopy. We demonstrate our framework by visualizing the structural changes in neuronal fibers within the cholinergic system of the mouse brain between a young and an old specimen.

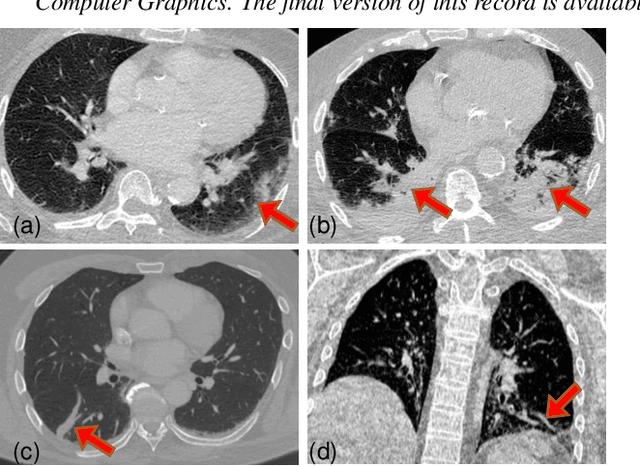

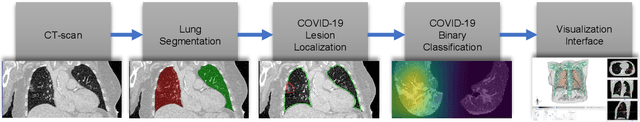

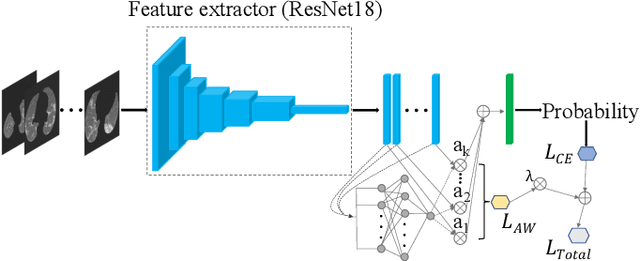

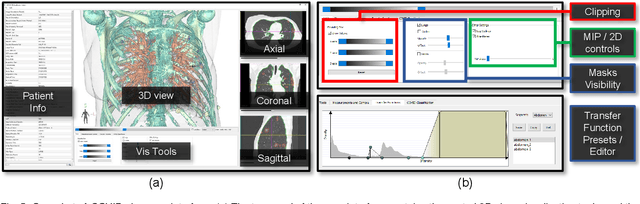

COVID-view: Diagnosis of COVID-19 using Chest CT

Aug 09, 2021

Abstract: Significant work has been done towards deep learning (DL) models for automatic lung and lesion segmentation and classification of COVID-19 on chest CT data. However, comprehensive visualization systems focused on supporting the dual visual+DL diagnosis of COVID-19 are non-existent. We present COVID-view, a visualization application specially tailored for radiologists to diagnose COVID-19 from chest CT data. The system incorporates a complete pipeline of automatic lung segmentation and localization/isolation of lung abnormalities, followed by visualization, visual and DL analysis, and measurement/quantification tools. Our system combines the traditional 2D workflow of radiologists with newer 2D and 3D visualization techniques and DL support for a more comprehensive diagnosis. COVID-view incorporates a novel DL model for classifying patients into positive/negative COVID-19 cases, which acts as a reading aid for the radiologist using COVID-view and provides an attention heatmap as explainable DL for the model output. We designed and evaluated COVID-view through suggestions, close feedback, and case studies of real-world patient data conducted by expert radiologists who have substantial experience diagnosing chest CT scans for COVID-19, pulmonary embolism, and other forms of lung infections. We present requirements and task analysis for the diagnosis of COVID-19 that motivate our design choices and result in a practical system that is capable of handling real-world patient cases.
