Saeed Boorboor

Explainable XR: Understanding User Behaviors of XR Environments using LLM-assisted Analytics Framework

Jan 23, 2025

Abstract: We present Explainable XR, an end-to-end framework for analyzing user behavior in diverse eXtended Reality (XR) environments by leveraging Large Language Models (LLMs) for data interpretation assistance. Existing XR user analytics frameworks face challenges in handling cross-virtuality (AR, VR, MR) transitions, multi-user collaborative application scenarios, and the complexity of multimodal data. Explainable XR addresses these challenges by providing a virtuality-agnostic solution for the collection, analysis, and visualization of immersive sessions. We propose three main components in our framework: (1) a novel user data recording schema, called User Action Descriptor (UAD), that captures the users' multimodal actions, along with their intents and contexts; (2) a platform-agnostic XR session recorder; and (3) a visual analytics interface that offers LLM-assisted insights tailored to the analysts' perspectives, facilitating the exploration and analysis of the recorded XR session data. We demonstrate the versatility of Explainable XR through five use-case scenarios, in both individual and collaborative XR applications across virtualities. Our technical evaluation and user studies show that Explainable XR provides a highly usable analytics solution for understanding user actions and delivering multifaceted, actionable insights into user behaviors in immersive environments.

NeuRegenerate: A Framework for Visualizing Neurodegeneration

Feb 02, 2022

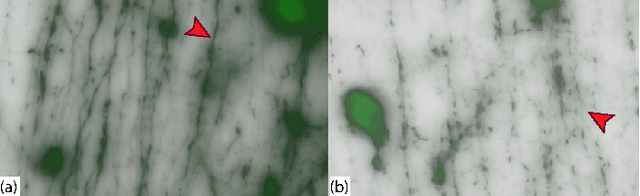

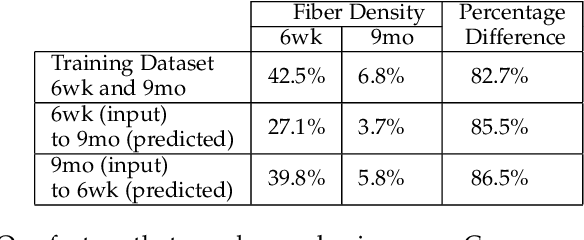

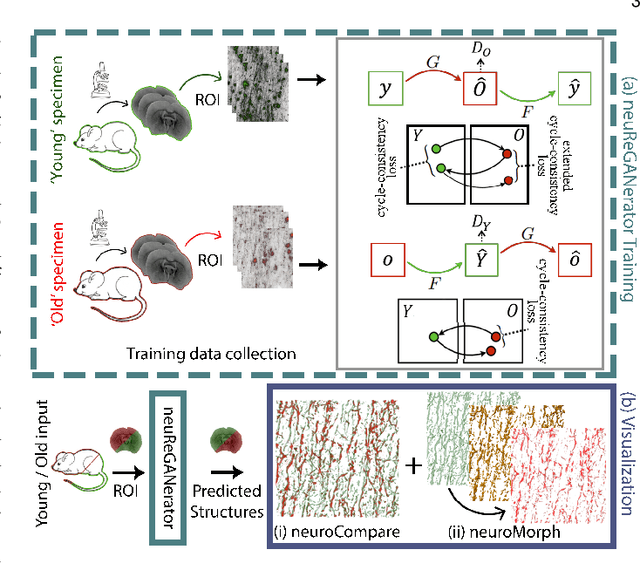

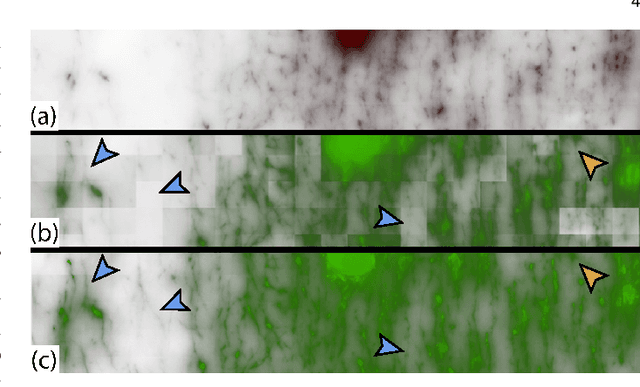

Abstract: Recent advances in high-resolution microscopy have allowed scientists to better understand the underlying brain connectivity. However, because biological specimens can only be imaged at a single timepoint, studying changes to neural projections is limited to general observations using population analysis. In this paper, we introduce NeuRegenerate, a novel end-to-end framework for the prediction and visualization of changes in neural fiber morphology within a subject, for specified age-timepoints. To predict projections, we present neuReGANerator, a deep-learning network based on a cycle-consistent generative adversarial network (cycleGAN) that translates features of neuronal structures in a region, across age-timepoints, for large brain microscopy volumes. We improve the reconstruction quality of neuronal structures by implementing a density multiplier and a new loss function, called the hallucination loss. Moreover, to alleviate artifacts that occur due to tiling of large input volumes, we introduce a spatial-consistency module in the training pipeline of neuReGANerator. We show that neuReGANerator has a reconstruction accuracy of 94% in predicting neuronal structures. Finally, to visualize the predicted change in projections, NeuRegenerate offers two modes: (1) neuroCompare, to simultaneously visualize the difference in the structures of the neuronal projections across the age-timepoints, and (2) neuroMorph, a vesselness-based morphing technique to interactively visualize the transformation of the structures from one age-timepoint to the other. Our framework is designed specifically for volumes acquired using wide-field microscopy. We demonstrate our framework by visualizing the structural changes in neuronal fibers within the cholinergic system of the mouse brain between a young and an old specimen.

Crowdsourcing Lung Nodules Detection and Annotation

Sep 17, 2018

Abstract: We present crowdsourcing as an additional modality to aid radiologists in the diagnosis of lung cancer from clinical chest computed tomography (CT) scans. More specifically, a complete workflow is introduced which can help maximize the sensitivity of lung nodule detection by utilizing the collective intelligence of the crowd. We combine the concept of overlapping thin-slab maximum intensity projections (TS-MIPs) and cine viewing to render short videos that can be outsourced as an annotation task to the crowd. These videos are generated by linearly interpolating overlapping TS-MIPs of CT slices through the depth of each quadrant of a patient's lung. The resultant videos are outsourced to an online community of non-expert users who, after a brief tutorial, annotate suspected nodules in these video segments. Using our crowdsourcing workflow, we achieved a lung nodule detection sensitivity of over 90% for 20 patient CT datasets (containing 178 lung nodules with sizes between 1-30 mm), and only 47 false positives from a total of 1021 annotations on nodules of all sizes (96% sensitivity for nodules >4 mm). These results show that crowdsourcing can be a robust and scalable modality to aid radiologists in screening for lung cancer, directly or in combination with computer-aided detection (CAD) algorithms. For CAD algorithms, the presented workflow can provide highly accurate training data to overcome the high false-positive rate (per scan) problem. We also provide, for the first time, analysis on nodule size and position which can help improve CAD algorithms.
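The video-generation step described in this abstract (overlapping TS-MIPs, then linear interpolation between them) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the slab thickness, the step size, and the number of in-between frames are all assumptions chosen for illustration, and lung segmentation and the per-quadrant split are omitted.

```python
import numpy as np

def thin_slab_mips(volume, slab_thickness=10, step=5):
    """Overlapping thin-slab maximum intensity projections along axis 0.

    volume is a (slices, height, width) array. slab_thickness and step are
    illustrative values, not taken from the paper; step < slab_thickness
    makes consecutive slabs overlap.
    """
    if step >= slab_thickness:
        raise ValueError("step must be smaller than slab_thickness to overlap")
    depth = volume.shape[0]
    starts = range(0, max(depth - slab_thickness, 0) + 1, step)
    # Each projection keeps the brightest voxel along the depth of its slab.
    return np.stack([volume[s:s + slab_thickness].max(axis=0) for s in starts])

def interpolate_frames(mips, frames_between=3):
    """Linearly blend consecutive MIPs into a smooth cine sequence."""
    frames = []
    for a, b in zip(mips[:-1], mips[1:]):
        for k in range(frames_between + 1):
            w = k / (frames_between + 1)  # w = 0 reproduces a; b starts the next pair
            frames.append((1 - w) * a + w * b)
    frames.append(mips[-1].astype(float))  # close the sequence on the last MIP
    return np.stack(frames)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ct = rng.integers(0, 1000, size=(20, 8, 8))  # synthetic stand-in for CT slices
    video = interpolate_frames(thin_slab_mips(ct))
    print(video.shape)
```

Under these assumptions, each video frame is either a slab projection or a weighted average of two neighbouring projections, so a viewer sees structures fade in and out with depth.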
