Antonio Artés-Rodríguez

Sleep Activity Recognition and Characterization from Multi-Source Passively Sensed Data

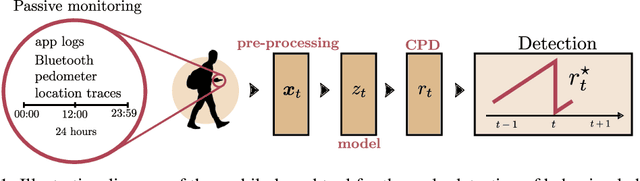

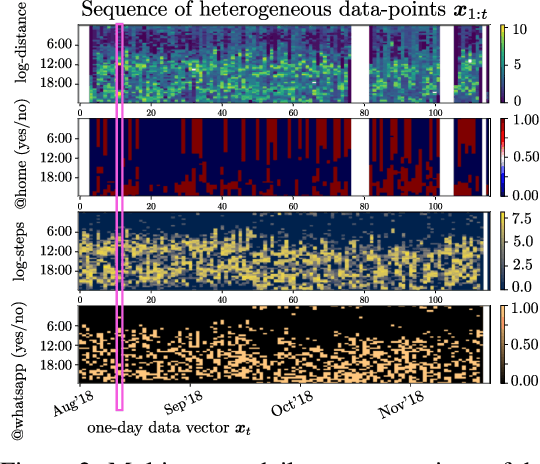

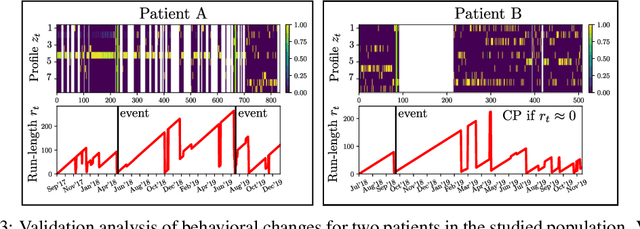

Jan 17, 2023

Abstract: Sleep constitutes a key indicator of human health, performance, and quality of life. Sleep deprivation has long been related to the onset, development, and worsening of several mental and metabolic disorders, making sleep an essential marker for preventing, evaluating, and treating different health conditions. Sleep Activity Recognition methods can provide indicators to assess, monitor, and characterize subjects' sleep-wake cycles and detect behavioral changes. In this work, we propose a general method that continuously operates on passively sensed data from smartphones to characterize sleep and identify significant sleep episodes. Thanks to their ubiquity, these devices constitute an excellent alternative data source to profile subjects' biorhythms in a continuous, objective, and non-invasive manner, in contrast to traditional sleep assessment methods that usually rely on intrusive and subjective procedures. A Heterogeneous Hidden Markov Model is used to model a discrete latent variable process associated with the Sleep Activity Recognition task in a self-supervised way. We validate our results against sleep metrics reported by tested wearables, demonstrating the effectiveness of the proposed approach and advocating its use to assess sleep when more reliable sources are unavailable.

Heterogeneous Hidden Markov Models for Sleep Activity Recognition from Multi-Source Passively Sensed Data

Nov 08, 2022

Abstract: Passive activity monitoring of psychiatric patients is crucial for detecting behavioural shifts in real time, providing a tool that helps clinicians supervise patients' evolution over time and improve treatment outcomes. Sleep disturbances and mental health deterioration are often closely related, as worsening mental health regularly entails shifts in patients' circadian rhythms. Therefore, Sleep Activity Recognition constitutes a behavioural marker to portray patients' activity cycles and to detect behavioural changes among them. Moreover, passively sensed data captured from smartphones, thanks to these devices' ubiquity, constitute an excellent alternative for profiling patients' biorhythms. In this work, we aim to identify major sleep episodes based on passively sensed data. To do so, a Heterogeneous Hidden Markov Model is proposed to model a discrete latent variable process associated with the Sleep Activity Recognition task in a self-supervised way. We validate our results against sleep metrics reported by clinically tested wearables, demonstrating the effectiveness of the proposed approach.

PyHHMM: A Python Library for Heterogeneous Hidden Markov Models

Jan 12, 2022

Abstract: We introduce PyHHMM, an object-oriented open-source Python implementation of Heterogeneous Hidden Markov Models (HHMMs). In addition to the core functionalities of HMMs, such as different initialization algorithms and classical observation models, i.e., continuous and multinoulli, PyHHMM distinctively emphasizes features not supported in similar available frameworks: a heterogeneous observation model, missing data inference, different model order selection criteria, and semi-supervised training. These characteristics result in a feature-rich implementation for researchers working with sequential data. PyHHMM relies on the numpy, scipy, scikit-learn, and seaborn Python packages, and is distributed under the Apache-2.0 License. PyHHMM's source code is publicly available on GitHub (https://github.com/fmorenopino/HeterogeneousHMM) to facilitate adoption and future contributions. Detailed documentation (https://pyhhmm.readthedocs.io/en/latest), which covers usage examples and theoretical explanations of the models, is available. The package can be installed through the Python Package Index (PyPI), via 'pip install pyhhmm'.
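To make the idea of a heterogeneous observation model concrete, here is a minimal NumPy sketch in which each hidden state emits one Gaussian feature and one categorical feature, assumed conditionally independent given the state, and the most likely state path is decoded with the Viterbi algorithm. It deliberately does not use PyHHMM's API: the function names (`emission_logprob`, `viterbi`), the two-state awake/asleep setup, and all parameter values are hypothetical choices made for illustration.

```python
import numpy as np

def emission_logprob(x_cont, x_disc, means, variances, cat_probs):
    """Log p(x_t | state) for one Gaussian plus one categorical feature.

    Assumes the two features are independent given the hidden state.
    Returns an array of shape (T, n_states).
    """
    x_cont = np.asarray(x_cont, dtype=float)[:, None]            # (T, 1)
    log_gauss = -0.5 * (np.log(2 * np.pi * variances)
                        + (x_cont - means) ** 2 / variances)      # (T, K)
    log_cat = np.log(cat_probs[:, x_disc]).T                      # (T, K)
    return log_gauss + log_cat

def viterbi(log_pi, log_A, log_B):
    """Most likely state sequence given log initial, transition, emission terms."""
    T, K = log_B.shape
    delta = np.empty((T, K))
    psi = np.zeros((T, K), dtype=int)
    delta[0] = log_pi + log_B[0]
    for t in range(1, T):
        scores = delta[t - 1][:, None] + log_A    # scores[i, j]: state i -> j
        psi[t] = scores.argmax(axis=0)
        delta[t] = scores.max(axis=0) + log_B[t]
    path = np.empty(T, dtype=int)
    path[-1] = delta[-1].argmax()
    for t in range(T - 2, -1, -1):                # backtrack
        path[t] = psi[t + 1, path[t + 1]]
    return path

# Hypothetical parameters: state 0 = awake, state 1 = asleep.
means = np.array([5.0, 0.0])            # activity level per state
variances = np.array([1.0, 1.0])
cat_probs = np.array([[0.2, 0.8],       # P(screen off / on | awake)
                      [0.9, 0.1]])      # P(screen off / on | asleep)
log_pi = np.log(np.array([0.5, 0.5]))
log_A = np.log(np.array([[0.9, 0.1],
                         [0.1, 0.9]]))

x_cont = [5.1, 4.8, 0.2, -0.1, 0.3, 5.2]   # continuous feature (activity)
x_disc = np.array([1, 1, 0, 0, 0, 1])      # categorical feature (screen on = 1)

log_B = emission_logprob(x_cont, x_disc, means, variances, cat_probs)
path = viterbi(log_pi, log_A, log_B)
print(path.tolist())  # [0, 0, 1, 1, 1, 0]
```

The parameters are fixed by hand here; in practice they would be learned, for example with expectation-maximization.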

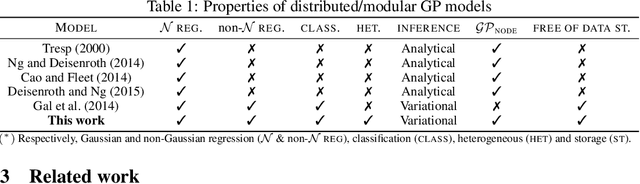

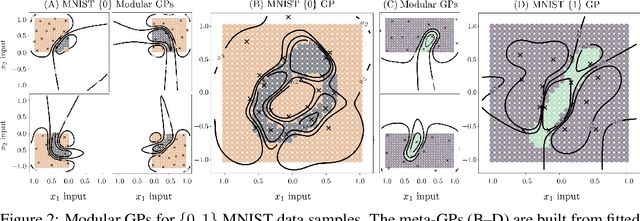

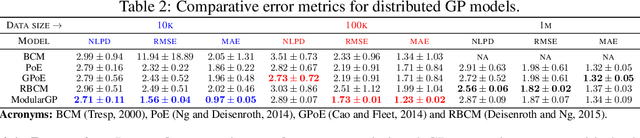

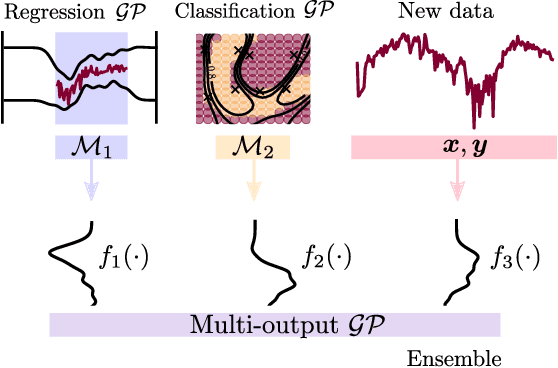

Modular Gaussian Processes for Transfer Learning

Oct 26, 2021

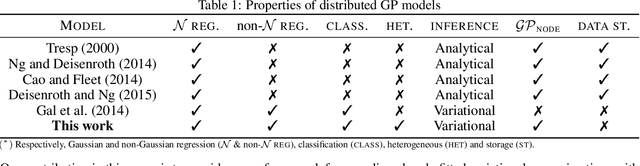

Abstract: We present a framework for transfer learning based on modular variational Gaussian processes (GP). We develop a module-based method in which, given a dictionary of well-fitted GPs, one can build ensemble GP models without revisiting any data. Each model is characterised by its hyperparameters, pseudo-inputs and their corresponding posterior densities. Our method avoids undesired data centralisation, reduces rising computational costs and allows the transfer of learned uncertainty metrics after training. We exploit the augmentation of high-dimensional integral operators based on the Kullback-Leibler divergence between stochastic processes to introduce an efficient lower bound under all the sparse variational GPs, with different complexities and even different likelihood distributions. The method is also valid for multi-output GPs, learning correlations a posteriori between independent modules. Extensive results illustrate the usability of our framework in large-scale and multi-task experiments, including comparisons with the exact inference methods in the literature.

Regularizing Transformers With Deep Probabilistic Layers

Aug 23, 2021

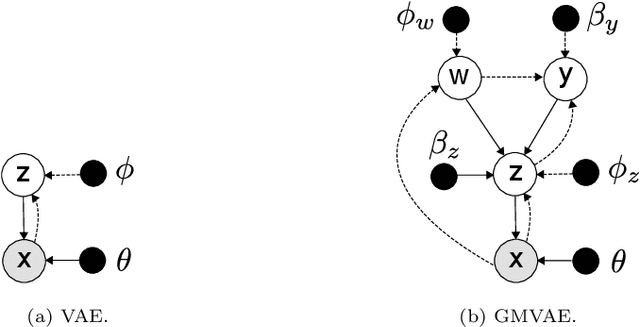

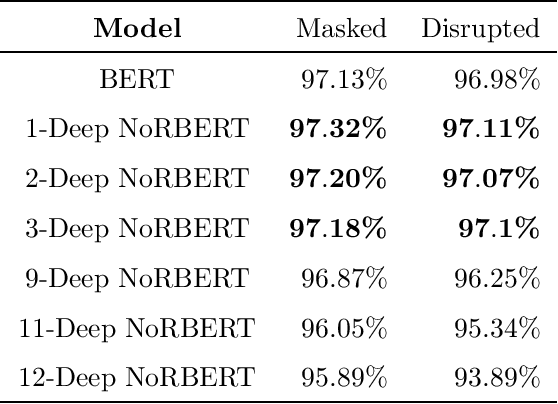

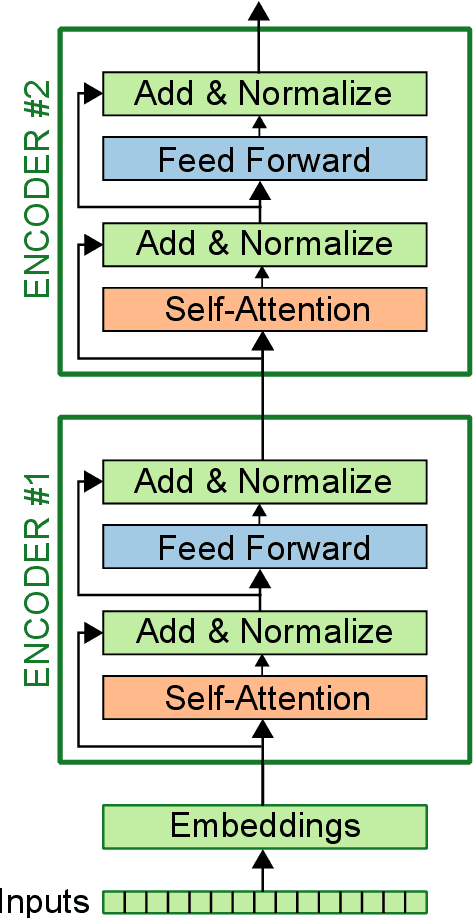

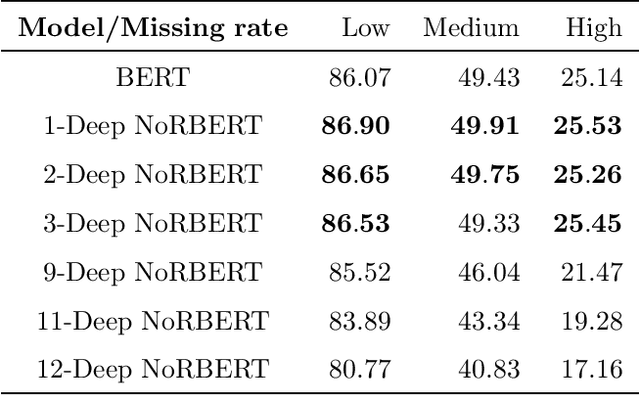

Abstract: Language models (LM) have grown non-stop in the last decade, from sequence-to-sequence architectures to the state-of-the-art, fully attention-based Transformers. In this work, we demonstrate how the inclusion of deep generative models within BERT can yield more versatile models, able to impute missing/noisy words with richer text or even improve the BLEU score. More precisely, we use a Gaussian Mixture Variational Autoencoder (GMVAE) as a regularizer layer and show its effectiveness not only in Transformers but also in the most relevant encoder-decoder based LM, seq2seq with and without attention.

Deep Autoregressive Models with Spectral Attention

Jul 13, 2021

Abstract: Time series forecasting is an important problem across many domains, playing a crucial role in multiple real-world applications. In this paper, we propose a forecasting architecture that combines deep autoregressive models with a Spectral Attention (SA) module, which merges global and local frequency domain information in the model's embedded space. By characterizing in the spectral domain the embedding of the time series as occurrences of a random process, our method can identify global trends and seasonality patterns. Two spectral attention models, global and local to the time series, integrate this information within the forecast and perform spectral filtering to remove noise from the time series. The proposed architecture has a number of useful properties: it can be effectively incorporated into well-known forecast architectures, requiring a low number of parameters and producing interpretable results that improve forecasting accuracy. We test the Spectral Attention Autoregressive Model (SAAM) on several well-known forecast datasets, consistently demonstrating that our model compares favorably to state-of-the-art approaches.
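As a rough illustration of what spectral filtering of a time series means, the sketch below applies a fixed low-pass mask in the frequency domain to a noisy seasonal signal. This is not the SAAM architecture: in the paper the filtering is learned by attention modules acting on the model's embedding, whereas here the mask is hand-picked and applied directly to the raw series. The function name `lowpass_filter`, the cutoff `keep=8`, and the synthetic data are assumptions made for the example.

```python
import numpy as np

def lowpass_filter(series, keep):
    """Keep only the `keep` lowest-frequency rFFT coefficients of `series`."""
    spectrum = np.fft.rfft(series)
    mask = np.zeros_like(spectrum)
    mask[:keep] = 1.0                        # hand-picked mask, not learned
    return np.fft.irfft(spectrum * mask, n=len(series))

rng = np.random.default_rng(0)
t = np.arange(256)
clean = np.sin(2 * np.pi * 4 * t / 256)      # seasonal pattern, 4 full cycles
noisy = clean + 0.5 * rng.standard_normal(256)
filtered = lowpass_filter(noisy, keep=8)

# Mean squared error against the clean signal, before and after filtering.
err_noisy = np.mean((noisy - clean) ** 2)
err_filtered = np.mean((filtered - clean) ** 2)
print(err_noisy, err_filtered)
```

Because the seasonal component sits in a low-frequency bin that the mask keeps, while most of the white-noise energy lies in the discarded bins, the filtered series ends up much closer to the clean signal than the noisy input.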

Medical data wrangling with sequential variational autoencoders

Mar 12, 2021

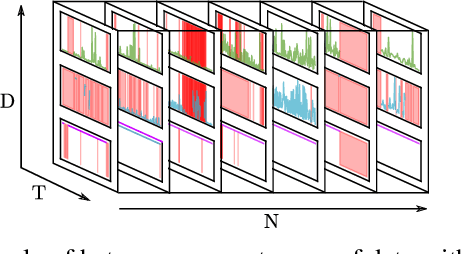

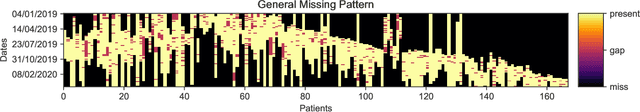

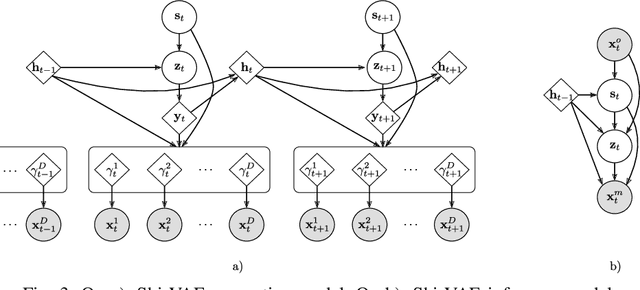

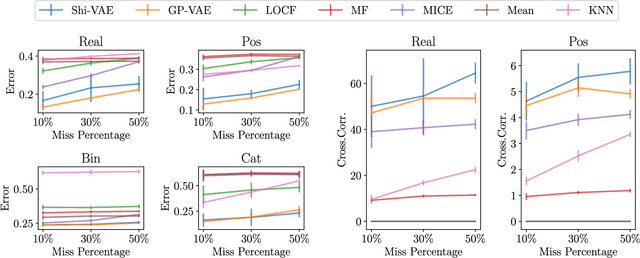

Abstract: Medical data sets are usually corrupted by noise and missing data. These missing patterns are commonly assumed to be completely random, but in medical scenarios, the reality is that these patterns occur in bursts due to sensors that are off for some time or data collected in a misaligned, uneven fashion, among other causes. This paper proposes to model medical data records with heterogeneous data types and bursty missing data using sequential variational autoencoders (VAEs). In particular, we propose a new methodology, the Shi-VAE, which extends the capabilities of VAEs to sequential streams of data with missing observations. We compare our model against state-of-the-art solutions on an intensive care unit (ICU) database and a dataset of passive human monitoring. Furthermore, we find that standard error metrics such as RMSE are not conclusive enough to assess temporal models and include in our analysis the cross-correlation between the ground truth and the imputed signal. We show that Shi-VAE achieves the best performance in terms of both metrics, with lower computational complexity than the GP-VAE model, which is the state-of-the-art method for medical records.
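The point about RMSE being inconclusive for temporal models can be illustrated with a small synthetic example. The sketch below compares two hypothetical imputations of the same signal that have nearly the same RMSE but very different temporal shape. The abstract does not state how the cross-correlation is computed, so a zero-lag normalised cross-correlation (equivalent to the Pearson correlation) is assumed here; the function names and signals are likewise illustrative.

```python
import numpy as np

def rmse(truth, imputed):
    """Root mean squared error between two equally long signals."""
    return float(np.sqrt(np.mean((truth - imputed) ** 2)))

def zero_lag_xcorr(truth, imputed):
    """Zero-lag normalised cross-correlation (assumed definition)."""
    a = truth - truth.mean()
    b = imputed - imputed.mean()
    return float(np.sum(a * b) / np.sqrt(np.sum(a ** 2) * np.sum(b ** 2)))

t = np.linspace(0, 4 * np.pi, 400, endpoint=False)   # two full periods
truth = np.sin(t)

wrong_shape = 0.1 * np.cos(t)    # small error magnitude, wrong dynamics
biased = truth + 0.7             # right dynamics, constant offset

for name, imputed in [("wrong_shape", wrong_shape), ("biased", biased)]:
    print(name, rmse(truth, imputed), zero_lag_xcorr(truth, imputed))
```

Both imputations have an RMSE of about 0.7, yet the cross-correlation is close to 0 for the first and close to 1 for the second, so only the second metric reveals which imputation follows the temporal structure of the ground truth.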

Unsupervised Learning of Global Factors in Deep Generative Models

Dec 16, 2020

Abstract: We present a novel deep generative model based on non-i.i.d. variational autoencoders that captures global dependencies among observations in a fully unsupervised fashion. In contrast to the recent semi-supervised alternatives for global modeling in deep generative models, our approach combines a mixture model in the local or data-dependent space and a global Gaussian latent variable, which leads us to three particular insights. First, the induced latent global space captures interpretable disentangled representations with no user-defined regularization in the evidence lower bound (as in $\beta$-VAE and its generalizations). Second, we show that the model performs domain alignment to find correlations and interpolate between different databases. Finally, we study the ability of the global space to discriminate between groups of observations with non-trivial underlying structures, such as face images with shared attributes or defined sequences of digit images.

Passive detection of behavioral shifts for suicide attempt prevention

Nov 14, 2020

Abstract: More than one million people die by suicide every year worldwide. The costs of daily care, social stigma and treatment issues are still hard barriers to overcome in mental health. Most symptoms of mental disorders are related to the behavioral state of a patient, such as mobility or social activity. Mobile-based technologies allow the passive collection of patients' data, which supplements conventional assessments that rely on biased questionnaires and occasional medical appointments. In this work, we present a non-invasive machine learning (ML) model to detect behavioral shifts in psychiatric patients from unobtrusive data collected by a smartphone app. Our clinically validated results shed light on the idea of an early detection mobile tool for the task of suicide attempt prevention.

Recyclable Gaussian Processes

Oct 06, 2020

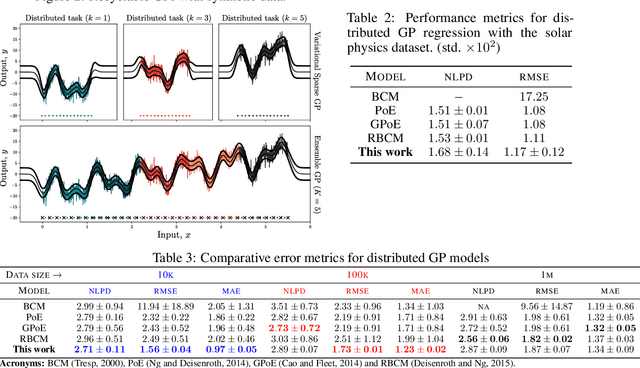

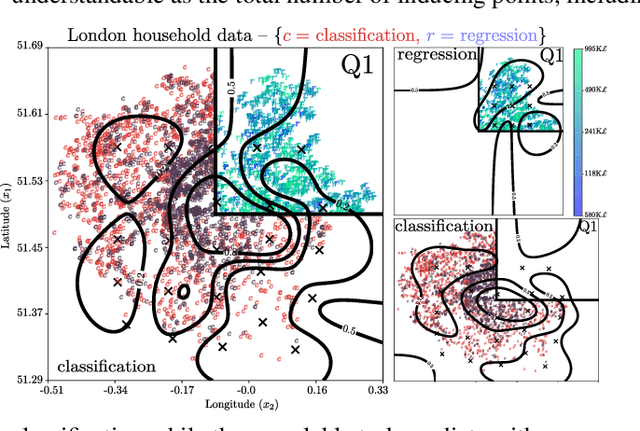

Abstract: We present a new framework for recycling independent variational approximations to Gaussian processes. The main contribution is the construction of variational ensembles given a dictionary of fitted Gaussian processes without revisiting any subset of observations. Our framework allows for regression, classification and heterogeneous tasks, i.e., a mix of continuous and discrete variables over the same input domain. We exploit infinite-dimensional integral operators based on the Kullback-Leibler divergence between stochastic processes to re-combine arbitrary amounts of variational sparse approximations with different complexities, likelihood models and locations of the pseudo-inputs. Extensive results illustrate the usability of our framework in large-scale distributed experiments, including comparisons with the exact inference models in the literature.
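The Kullback-Leibler divergence between stochastic processes mentioned above is infinite-dimensional, but in sparse variational GPs it is typically evaluated through a finite-dimensional KL between Gaussian distributions over the inducing variables at the pseudo-inputs. The sketch below shows only that standard closed-form building block for two multivariate Gaussians; it is not the paper's recombination bound, and the function name `gaussian_kl` is a hypothetical choice.

```python
import numpy as np

def gaussian_kl(m0, S0, m1, S1):
    """KL( N(m0, S0) || N(m1, S1) ) for full-covariance Gaussians."""
    k = m0.shape[0]
    diff = m1 - m0
    trace_term = np.trace(np.linalg.solve(S1, S0))     # tr(S1^{-1} S0)
    maha = diff @ np.linalg.solve(S1, diff)            # Mahalanobis term
    _, logdet0 = np.linalg.slogdet(S0)
    _, logdet1 = np.linalg.slogdet(S1)
    return 0.5 * (trace_term + maha - k + logdet1 - logdet0)

m = np.zeros(2)
S = np.eye(2)
kl_same = gaussian_kl(m, S, m, S)                          # identical Gaussians
kl_shift = gaussian_kl(m, S, np.array([1.0, 0.0]), S)      # unit mean shift
print(kl_same, kl_shift)
```

Using `solve` and `slogdet` instead of explicit inverses and determinants keeps the computation numerically stable for larger covariance matrices.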
