Ignacio Peis

Hyper-Transforming Latent Diffusion Models

Apr 24, 2025

Abstract:We introduce a novel generative framework for functions by integrating Implicit Neural Representations (INRs) and Transformer-based hypernetworks into latent variable models. Unlike prior approaches that rely on MLP-based hypernetworks with scalability limitations, our method employs a Transformer-based decoder to generate INR parameters from latent variables, addressing both representation capacity and computational efficiency. Our framework extends latent diffusion models (LDMs) to INR generation by replacing standard decoders with a Transformer-based hypernetwork, which can be trained either from scratch or via hyper-transforming, a strategy that fine-tunes only the decoder while freezing the pre-trained latent space. This enables efficient adaptation of existing generative models to INR-based representations without requiring full retraining.
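The core idea of a hypernetwork that emits INR parameters from a latent variable can be sketched in a few lines. This is only an illustration and not the paper's architecture: the abstract describes a Transformer-based hypernetwork, whereas the sketch below substitutes a single random linear map, and every name in it (`make_hypernetwork`, `generate_inr_params`, `inr`, the sine activation, the parameter layout) is a hypothetical choice made for the example.

```python
import math
import random


def make_hypernetwork(latent_dim, n_params, seed=0):
    """Hypothetical stand-in for a hypernetwork: one random linear map."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.5) for _ in range(latent_dim)]
            for _ in range(n_params)]


def generate_inr_params(hyper, z):
    """Map a latent vector z to a flat list of INR parameters."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in hyper]


def inr(params, x, y, hidden=4):
    """Tiny one-hidden-layer INR evaluated at the 2D coordinate (x, y).

    Assumed flat layout: 2*hidden input weights, hidden biases,
    hidden output weights, then one output bias.
    """
    w1 = params[: 2 * hidden]
    b1 = params[2 * hidden: 3 * hidden]
    w2 = params[3 * hidden: 4 * hidden]
    b2 = params[4 * hidden]
    h = [math.sin(w1[2 * i] * x + w1[2 * i + 1] * y + b1[i])
         for i in range(hidden)]
    return sum(w * hi for w, hi in zip(w2, h)) + b2


HIDDEN = 4
N_PARAMS = 4 * HIDDEN + 1  # total number of INR parameters in this layout

hyper = make_hypernetwork(latent_dim=3, n_params=N_PARAMS)
z = [0.2, -1.0, 0.7]                    # one latent sample
params = generate_inr_params(hyper, z)  # the INR "weights" for this sample
value = inr(params, 0.25, 0.75, hidden=HIDDEN)  # query any continuous coordinate
```

Each latent sample yields a different function, and that function can be queried at arbitrary coordinates rather than on a fixed grid, which is what distinguishes an INR decoder from a standard pixel-space decoder.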

Scalable physical source-to-field inference with hypernetworks

May 07, 2024

Abstract:We present a generative model that amortises computation for the field around e.g. gravitational or magnetic sources. Exact numerical calculation has either computational complexity $\mathcal{O}(M\times{}N)$ in the number of sources and field evaluation points, or requires a fixed evaluation grid to exploit fast Fourier transforms. Using an architecture where a hypernetwork produces an implicit representation of the field around a source collection, our model instead performs as $\mathcal{O}(M + N)$, achieves accuracy of $\sim\!4\%-6\%$, and allows evaluation at arbitrary locations for arbitrary numbers of sources, greatly increasing the speed of e.g. physics simulations. We also examine a model relating to the physical properties of the output field and develop two-dimensional examples to demonstrate its application. The code for these models and experiments is available at https://github.com/cmt-dtu-energy/hypermagnetics.
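To make the $\mathcal{O}(M\times{}N)$ cost of exact calculation concrete, here is a minimal sketch of a naive pairwise sum over $M$ sources and $N$ evaluation points. It is not taken from the linked repository: the function name `direct_potential` and the two-dimensional logarithmic kernel are assumptions chosen purely for illustration.

```python
import math


def direct_potential(sources, strengths, points):
    """Naive pairwise sum: one kernel evaluation per (source, point) pair."""
    values = []
    evaluations = 0
    for px, py in points:
        total = 0.0
        for (sx, sy), q in zip(sources, strengths):
            r = math.hypot(px - sx, py - sy)
            # Illustrative 2D logarithmic kernel (an assumption, not the paper's model).
            total += -q * math.log(r)
            evaluations += 1
        values.append(total)
    return values, evaluations


sources = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]                 # M = 3
strengths = [1.0, 0.5, 2.0]
points = [(2.0, 2.0), (3.0, 1.0), (-1.0, -1.0), (0.5, 0.5)]    # N = 4

values, evaluations = direct_potential(sources, strengths, points)
print(evaluations)  # 12 kernel evaluations, i.e. M * N
```

The nested loop is the source of the multiplicative cost. An amortised model such as the one described in the abstract would instead process the $M$ sources once and then evaluate the resulting field representation at each of the $N$ points.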

Variational Mixture of HyperGenerators for Learning Distributions Over Functions

Feb 13, 2023

Abstract:Recent approaches build on implicit neural representations (INRs) to propose generative models over function spaces. However, they are either computationally intensive on inference tasks, such as missing data imputation, or cannot tackle them at all. In this work, we propose a novel deep generative model, named VAMoH. VAMoH combines the capabilities of modeling continuous functions using INRs and the inference capabilities of Variational Autoencoders (VAEs). In addition, VAMoH relies on a normalizing flow to define the prior, and a mixture of hypernetworks to parametrize the data log-likelihood. This gives VAMoH a high expressive capability and interpretability. Through experiments on a diverse range of data types, such as images, voxels, and climate data, we show that VAMoH can effectively learn rich distributions over continuous functions. Furthermore, it can perform inference-related tasks, such as conditional super-resolution generation and in-painting, as well or better than previous approaches, while being less computationally demanding.

Missing Data Imputation and Acquisition with Deep Hierarchical Models and Hamiltonian Monte Carlo

Feb 09, 2022

Abstract:Variational Autoencoders (VAEs) have recently been highly successful at imputing and acquiring heterogeneous missing data and identifying outliers. However, within this specific application domain, existing VAE methods are restricted by using only one layer of latent variables and strictly Gaussian posterior approximations. To address these limitations, we present HH-VAEM, a Hierarchical VAE model for mixed-type incomplete data that uses Hamiltonian Monte Carlo with automatic hyper-parameter tuning for improved approximate inference. Our experiments show that HH-VAEM outperforms existing baselines in the tasks of missing data imputation, supervised learning and outlier identification with missing features. Finally, we also present a sampling-based approach for efficiently computing the information gain when missing features are to be acquired with HH-VAEM. Our experiments show that this sampling-based approach is superior to alternatives based on Gaussian approximations.

Unsupervised Learning of Global Factors in Deep Generative Models

Dec 16, 2020

Abstract:We present a novel deep generative model based on non-i.i.d. variational autoencoders that captures global dependencies among observations in a fully unsupervised fashion. In contrast to the recent semi-supervised alternatives for global modeling in deep generative models, our approach combines a mixture model in the local or data-dependent space and a global Gaussian latent variable, which leads us to three particular insights. First, the induced latent global space captures interpretable disentangled representations with no user-defined regularization in the evidence lower bound (as in $\beta$-VAE and its generalizations). Second, we show that the model performs domain alignment to find correlations and interpolate between different databases. Finally, we study the ability of the global space to discriminate between groups of observations with non-trivial underlying structures, such as face images with shared attributes or defined sequences of digit images.

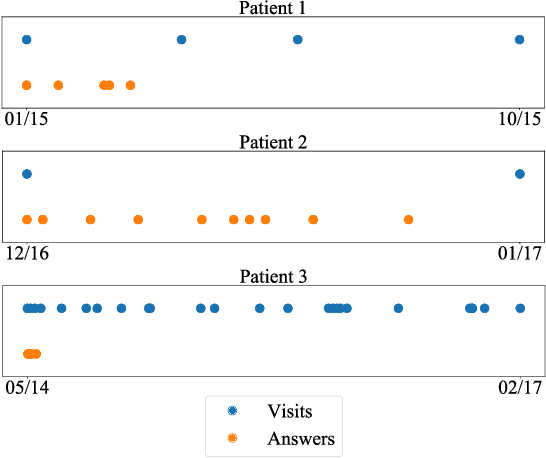

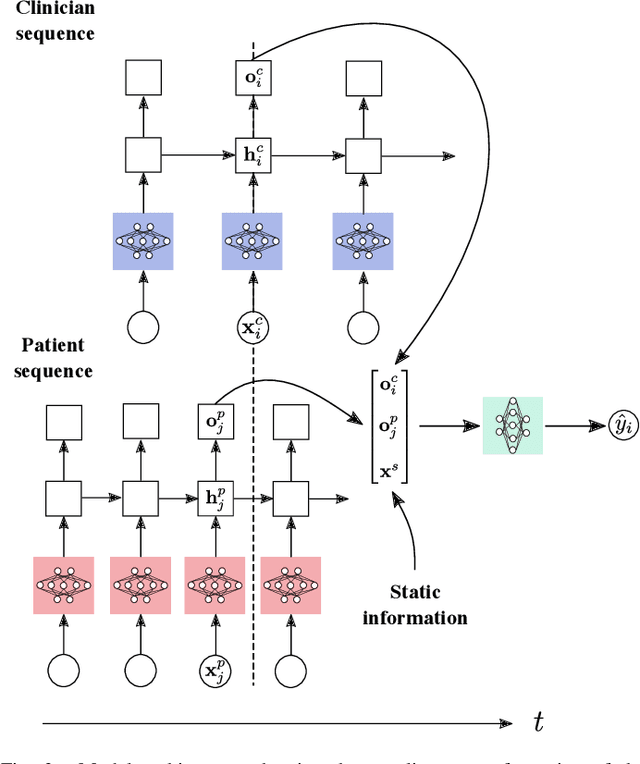

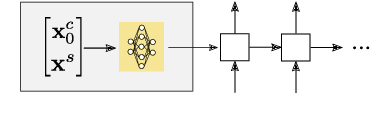

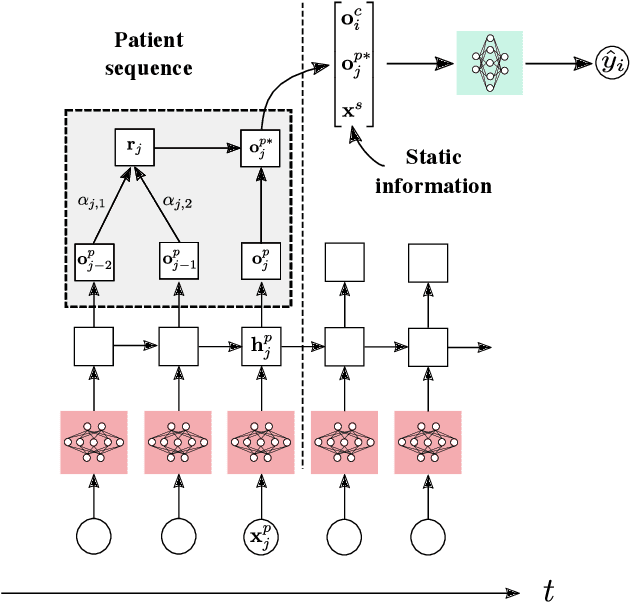

Deep Sequential Models for Suicidal Ideation from Multiple Source Data

Nov 06, 2019

Abstract:This article presents a novel method for predicting suicidal ideation from Electronic Health Records (EHR) and Ecological Momentary Assessment (EMA) data using deep sequential models. Both EHR longitudinal data and EMA question forms are defined by asynchronous, variable length, randomly-sampled data sequences. In our method, we model each of them with a Recurrent Neural Network (RNN), and both sequences are aligned by concatenating the hidden state of each of them using temporal marks. Furthermore, we incorporate attention schemes to improve performance in long sequences and time-independent pre-trained schemes to cope with very short sequences. Using a database of 1023 patients, our experimental results show that the addition of EMA records boosts the system recall to predict the suicidal ideation diagnosis from 48.13% obtained exclusively from EHR-based state-of-the-art methods to 67.78%. Additionally, our method provides interpretability through the t-SNE representation of the latent space. Further, the most relevant input features are identified and interpreted medically.

* Accepted for publication in IEEE Journal of Biomedical and Health Informatics (JBHI)
