Antoine Cully

AIRL, Imperial College London

From Tabula Rasa to Emergent Abilities: Discovering Robot Skills via Real-World Unsupervised Quality-Diversity

Aug 28, 2025

Abstract:Autonomous skill discovery aims to enable robots to acquire diverse behaviors without explicit supervision. Learning such behaviors directly on physical hardware remains challenging due to safety and data efficiency constraints. Existing methods, including Quality-Diversity Actor-Critic (QDAC), require manually defined skill spaces and carefully tuned heuristics, limiting real-world applicability. We propose Unsupervised Real-world Skill Acquisition (URSA), an extension of QDAC that enables robots to autonomously discover and master diverse, high-performing skills directly in the real world. We demonstrate that URSA successfully discovers diverse locomotion skills on a Unitree A1 quadruped in both simulation and the real world. Our approach supports both heuristic-driven skill discovery and fully unsupervised settings. We also show that the learned skill repertoire can be reused for downstream tasks such as real-world damage adaptation, where URSA outperforms all baselines in 5 out of 9 simulated and 3 out of 5 real-world damage scenarios. Our results establish a new framework for real-world robot learning that enables continuous skill discovery with limited human intervention, representing a significant step toward more autonomous and adaptable robotic systems. Demonstration videos are available at https://adaptive-intelligent-robotics.github.io/URSA.

A Path to Universal Neural Cellular Automata

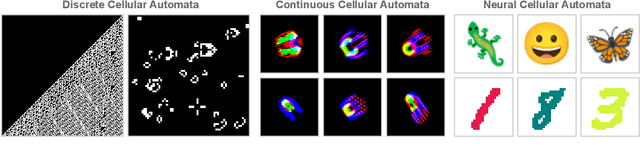

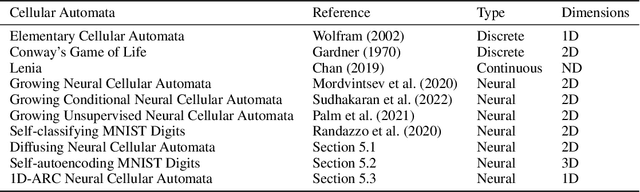

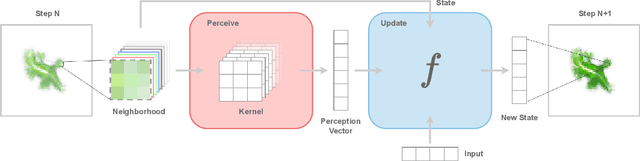

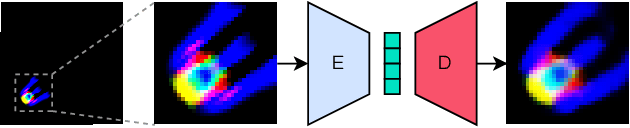

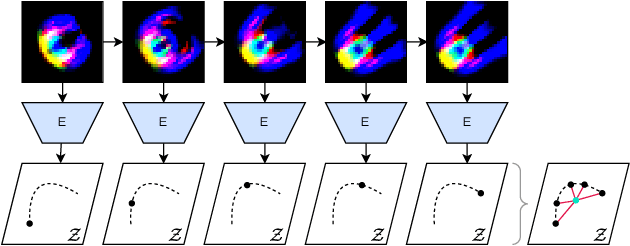

May 19, 2025

Abstract:Cellular automata have long been celebrated for their ability to generate complex behaviors from simple, local rules, with well-known discrete models like Conway's Game of Life proven capable of universal computation. Recent advancements have extended cellular automata into continuous domains, raising the question of whether these systems retain the capacity for universal computation. In parallel, neural cellular automata have emerged as a powerful paradigm where rules are learned via gradient descent rather than manually designed. This work explores the potential of neural cellular automata to develop a continuous Universal Cellular Automaton through training by gradient descent. We introduce a cellular automaton model, objective functions and training strategies to guide neural cellular automata toward universal computation in a continuous setting. Our experiments demonstrate the successful training of fundamental computational primitives - such as matrix multiplication and transposition - culminating in the emulation of a neural network solving the MNIST digit classification task directly within the cellular automata state. These results represent a foundational step toward realizing analog general-purpose computers, with implications for understanding universal computation in continuous dynamics and advancing the automated discovery of complex cellular automata behaviors via machine learning.
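As a point of reference for the "simple, local rules" mentioned in this abstract, the following is a minimal sketch of one update step of Conway's Game of Life. It is not the neural cellular automaton from the paper: the function name `life_step`, the list-of-lists grid, and the wrap-around (toroidal) boundary are assumptions chosen for illustration.

```python
def life_step(grid):
    """Advance a Game of Life grid (list of lists of 0/1) by one step.

    Assumption for this sketch: the grid wraps around at the edges.
    """
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count the eight neighbours of cell (r, c), with wrap-around.
            n = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth with exactly 3 neighbours; survival with 2 or 3.
            alive = grid[r][c] == 1
            new[r][c] = 1 if n == 3 or (alive and n == 2) else 0
    return new


# A vertical "blinker", a pattern that oscillates with period 2.
blinker = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
```

Every cell applies the same rule to its own neighbourhood only, which is the locality property that neural cellular automata keep while replacing the hand-written rule with a learned one.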

Overcoming Deceptiveness in Fitness Optimization with Unsupervised Quality-Diversity

Apr 02, 2025

Abstract:Policy optimization seeks the best solution to a control problem according to an objective or fitness function, serving as a fundamental field of engineering and research with applications in robotics. Traditional optimization methods like reinforcement learning and evolutionary algorithms struggle with deceptive fitness landscapes, where following immediate improvements leads to suboptimal solutions. Quality-diversity (QD) algorithms offer a promising approach by maintaining diverse intermediate solutions as stepping stones for escaping local optima. However, QD algorithms require domain expertise to define hand-crafted features, limiting their applicability where characterizing solution diversity remains unclear. In this paper, we show that unsupervised QD algorithms - specifically the AURORA framework, which learns features from sensory data - efficiently solve deceptive optimization problems without domain expertise. By enhancing AURORA with contrastive learning and periodic extinction events, we propose AURORA-XCon, which outperforms all traditional optimization baselines and matches, in some cases even improving by up to 34%, the best QD baseline with domain-specific hand-crafted features. This work establishes a novel application of unsupervised QD algorithms, shifting their focus from discovering novel solutions toward traditional optimization and expanding their potential to domains where defining feature spaces poses challenges.

Extract-QD Framework: A Generic Approach for Quality-Diversity in Noisy, Stochastic or Uncertain Domains

Feb 10, 2025

Abstract:Quality-Diversity (QD) has demonstrated potential in discovering collections of diverse solutions to optimisation problems. Originally designed for deterministic environments, QD has been extended to noisy, stochastic, or uncertain domains through various Uncertain-QD (UQD) methods. However, the large number of UQD methods, each with unique constraints, makes selecting the most suitable one challenging. To remedy this situation, we present two contributions: first, the Extract-QD Framework (EQD Framework), and second, Extract-ME (EME), a new method derived from it. The EQD Framework unifies existing approaches within a modular view, and facilitates developing novel methods by interchanging modules. We use it to derive EME, a novel method that consistently outperforms or matches the best existing methods on standard benchmarks, while previous methods show varying performance. In a second experiment, we show how our EQD Framework can be used to augment existing QD algorithms and in particular the well-established Policy-Gradient-Assisted-MAP-Elites method, and demonstrate improved performance in uncertain domains at no additional evaluation cost. For any new uncertain task, our contributions now provide EME as a reliable "first guess" method, and the EQD Framework as a tool for developing task-specific approaches. Together, these contributions aim to lower the cost of adopting UQD insights in QD applications.

Discovering Quality-Diversity Algorithms via Meta-Black-Box Optimization

Feb 04, 2025

Abstract:Quality-Diversity has emerged as a powerful family of evolutionary algorithms that generate diverse populations of high-performing solutions by implementing local competition principles inspired by biological evolution. While these algorithms successfully foster diversity and innovation, their specific mechanisms rely on heuristics, such as grid-based competition in MAP-Elites or nearest-neighbor competition in unstructured archives. In this work, we propose a fundamentally different approach: using meta-learning to automatically discover novel Quality-Diversity algorithms. By parameterizing the competition rules using attention-based neural architectures, we evolve new algorithms that capture complex relationships between individuals in the descriptor space. Our discovered algorithms demonstrate competitive or superior performance compared to established Quality-Diversity baselines while exhibiting strong generalization to higher dimensions, larger populations, and out-of-distribution domains like robot control. Notably, even when optimized solely for fitness, these algorithms naturally maintain diverse populations, suggesting meta-learning rediscovers that diversity is fundamental to effective optimization.

Scaling Policy Gradient Quality-Diversity with Massive Parallelization via Behavioral Variations

Jan 30, 2025

Abstract:Quality-Diversity optimization comprises a family of evolutionary algorithms aimed at generating a collection of diverse and high-performing solutions. MAP-Elites (ME), a notable example, is used effectively in fields like evolutionary robotics. However, the reliance of ME on random mutations from Genetic Algorithms limits its ability to evolve high-dimensional solutions. Methods proposed to overcome this include using gradient-based operators like policy gradients or natural evolution strategies. While successful at scaling ME for neuroevolution, these methods often suffer from slow training speeds, or difficulties in scaling with massive parallelization due to high computational demands or reliance on centralized actor-critic training. In this work, we introduce a fast, sample-efficient ME-based algorithm capable of scaling up with massive parallelization, significantly reducing runtimes without compromising performance. Our method, ASCII-ME, unlike existing policy gradient quality-diversity methods, does not rely on centralized actor-critic training. It performs behavioral variations based on time step performance metrics and maps these variations to solutions using policy gradients. Our experiments show that ASCII-ME can generate a diverse collection of high-performing deep neural network policies in less than 250 seconds on a single GPU. Additionally, it operates, on average, five times faster than state-of-the-art algorithms while still maintaining competitive sample efficiency.
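To make the baseline concrete, here is a minimal sketch of plain MAP-Elites with random Gaussian mutations, the variation operator this abstract identifies as a limitation. It is not ASCII-ME and uses no policy gradients. The toy task in `evaluate`, the grid resolution, the 10% random-restart rate, and all function names are assumptions made for illustration.

```python
import random


def evaluate(genome):
    """Hypothetical toy task: fitness is the negative squared norm,
    and the descriptor is the first two genes."""
    fitness = -sum(g * g for g in genome)
    descriptor = (genome[0], genome[1])
    return fitness, descriptor


def cell_index(descriptor, bins=10, low=-1.0, high=1.0):
    """Map a descriptor in [low, high]^d to a tuple of grid cell indices."""
    index = []
    for d in descriptor:
        d = min(max(d, low), high)
        i = int((d - low) / (high - low) * bins)
        index.append(min(i, bins - 1))  # keep d == high inside the last bin
    return tuple(index)


def map_elites(iterations=2000, dim=4, sigma=0.1, seed=0):
    """Run a basic MAP-Elites loop; returns {cell: (fitness, genome)}."""
    rng = random.Random(seed)
    archive = {}
    for _ in range(iterations):
        if archive and rng.random() < 0.9:
            # Select a random elite and perturb it with Gaussian noise.
            _, parent = rng.choice(list(archive.values()))
            child = [min(max(g + rng.gauss(0.0, sigma), -1.0), 1.0) for g in parent]
        else:
            # Otherwise sample a fresh random genome.
            child = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        fitness, descriptor = evaluate(child)
        cell = cell_index(descriptor)
        # Local competition: a child only competes with the elite of its own cell.
        if cell not in archive or fitness > archive[cell][0]:
            archive[cell] = (fitness, child)
    return archive
```

Calling `map_elites()` returns a dictionary with at most 100 entries, one elite per occupied cell of the 10 by 10 descriptor grid. Gradient-based variants keep this archive logic and replace the mutation step.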

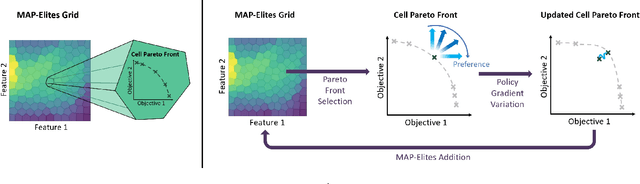

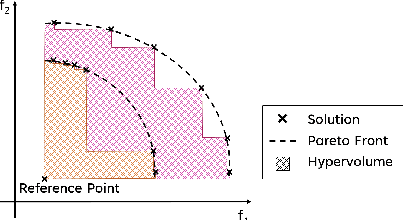

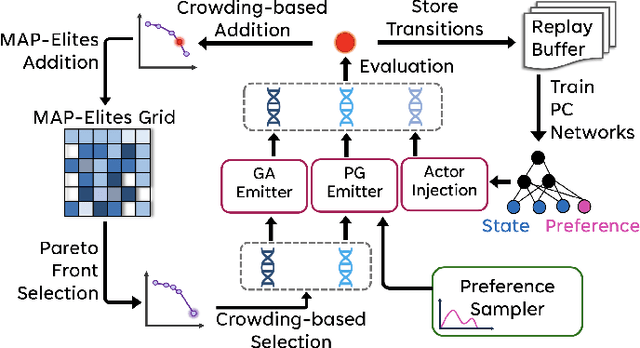

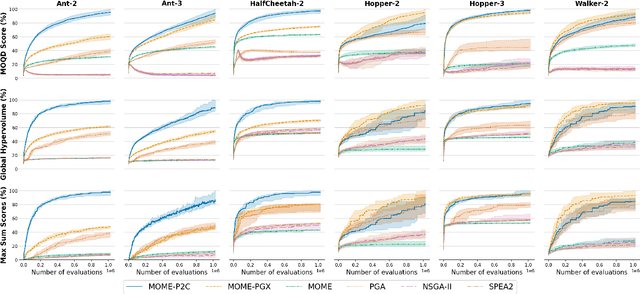

Preference-Conditioned Gradient Variations for Multi-Objective Quality-Diversity

Nov 19, 2024

Abstract:In a variety of domains, from robotics to finance, Quality-Diversity algorithms have been used to generate collections of both diverse and high-performing solutions. Multi-Objective Quality-Diversity algorithms have emerged as a promising approach for applying these methods to complex, multi-objective problems. However, existing methods are limited by their search capabilities. For example, Multi-Objective Map-Elites depends on random genetic variations which struggle in high-dimensional search spaces. Despite efforts to enhance search efficiency with gradient-based mutation operators, existing approaches consider updating solutions to improve on each objective separately rather than achieving desired trade-offs. In this work, we address this limitation by introducing Multi-Objective Map-Elites with Preference-Conditioned Policy-Gradient and Crowding Mechanisms: a new Multi-Objective Quality-Diversity algorithm that uses preference-conditioned policy-gradient mutations to efficiently discover promising regions of the objective space and crowding mechanisms to promote a uniform distribution of solutions on the Pareto front. We evaluate our approach on six robotics locomotion tasks and show that our method outperforms or matches all state-of-the-art Multi-Objective Quality-Diversity methods in all six, including two newly proposed tri-objective tasks. Importantly, our method also achieves a smoother set of trade-offs, as measured by newly-proposed sparsity-based metrics. This performance comes at a lower computational storage cost compared to previous methods.
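The notion of a Pareto front used in this abstract can be illustrated with a short sketch of Pareto dominance under maximization. This is the generic textbook definition, not the paper's preference-conditioned mutation or its crowding mechanism; the function names `dominates` and `pareto_front` are assumptions.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives maximized):
    a is at least as good everywhere and strictly better somewhere."""
    at_least_as_good = all(x >= y for x, y in zip(a, b))
    strictly_better = any(x > y for x, y in zip(a, b))
    return at_least_as_good and strictly_better


def pareto_front(points):
    """Return the points that no other point dominates, in input order."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Two objectives per solution, e.g. (forward speed, energy efficiency).
solutions = [(1, 5), (2, 4), (3, 3), (2, 2), (1, 1)]
front = pareto_front(solutions)
```

Here `front` keeps `(1, 5)`, `(2, 4)`, and `(3, 3)`, each a different trade-off between the two objectives, while `(2, 2)` and `(1, 1)` are dominated. A Multi-Objective Quality-Diversity archive typically maintains one such front per cell.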

CAX: Cellular Automata Accelerated in JAX

Oct 03, 2024

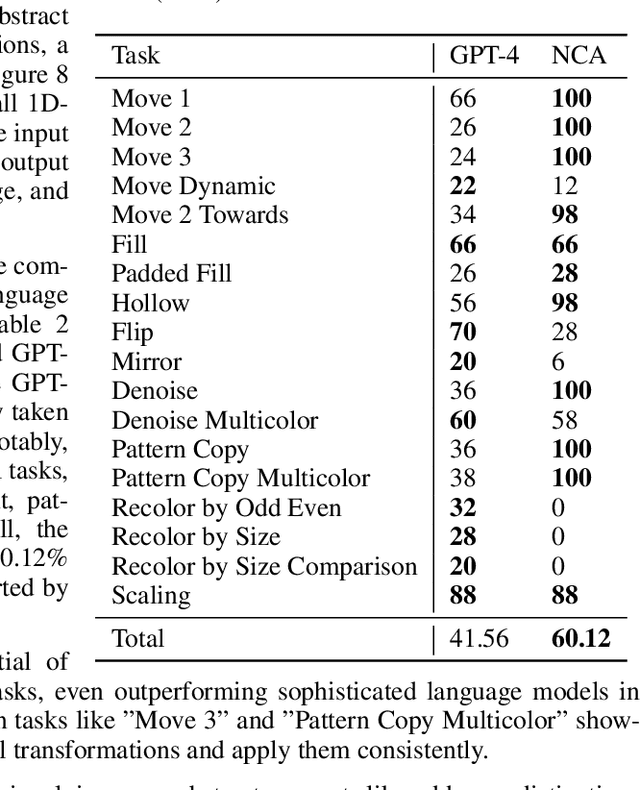

Abstract:Cellular automata have become a cornerstone for investigating emergence and self-organization across diverse scientific disciplines, spanning neuroscience, artificial life, and theoretical physics. However, the absence of a hardware-accelerated cellular automata library limits the exploration of new research directions, hinders collaboration, and impedes reproducibility. In this work, we introduce CAX (Cellular Automata Accelerated in JAX), a high-performance and flexible open-source library designed to accelerate cellular automata research. CAX offers cutting-edge performance and a modular design through a user-friendly interface, and can support both discrete and continuous cellular automata with any number of dimensions. We demonstrate CAX's performance and flexibility through a wide range of benchmarks and applications. From classic models like elementary cellular automata and Conway's Game of Life to advanced applications such as growing neural cellular automata and self-classifying MNIST digits, CAX speeds up simulations by up to 2,000 times. Furthermore, we demonstrate CAX's potential to accelerate research by presenting a collection of three novel cellular automata experiments, each implemented in just a few lines of code thanks to the library's modular architecture. Notably, we show that a simple one-dimensional cellular automaton can outperform GPT-4 on the 1D-ARC challenge.
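For readers unfamiliar with the elementary cellular automata named in this abstract, the sketch below shows one update step under the standard Wolfram rule numbering. It is plain Python and does not use or reproduce the CAX API; the function name `eca_step` and the periodic boundary are assumptions.

```python
def eca_step(cells, rule=110):
    """Apply one step of an elementary cellular automaton.

    `cells` is a list of 0/1 values; `rule` is a Wolfram rule number (0-255).
    Assumption for this sketch: the row wraps around at both ends.
    """
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        # The three-cell neighbourhood read as a binary number selects a bit of the rule.
        pattern = (left << 2) | (center << 1) | right
        out.append((rule >> pattern) & 1)
    return out


row = [0, 0, 0, 1, 0]
next_row = eca_step(row, rule=110)
```

With rule 110, `next_row` is `[0, 0, 1, 1, 0]`. A hardware-accelerated library would apply the same lookup to all cells in parallel rather than in a Python loop.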

Toward Artificial Open-Ended Evolution within Lenia using Quality-Diversity

Jun 06, 2024

Abstract:From the formation of snowflakes to the evolution of diverse life forms, emergence is ubiquitous in our universe. In the quest to understand how complexity can arise from simple rules, abstract computational models, such as cellular automata, have been developed to study self-organization. However, the discovery of self-organizing patterns in artificial systems is challenging and has largely relied on manual or semi-automatic search in the past. In this paper, we show that Quality-Diversity, a family of Evolutionary Algorithms, is an effective framework for the automatic discovery of diverse self-organizing patterns in complex systems. Quality-Diversity algorithms aim to evolve a large population of diverse individuals, each adapted to its ecological niche. Combined with Lenia, a family of continuous cellular automata, we demonstrate that our method is able to evolve a diverse population of lifelike self-organizing autonomous patterns. Our framework, called Leniabreeder, can leverage both manually defined diversity criteria to guide the search toward interesting areas, as well as unsupervised measures of diversity to broaden the scope of discoverable patterns. We demonstrate both qualitatively and quantitatively that Leniabreeder offers a powerful solution for discovering self-organizing patterns. The effectiveness of unsupervised Quality-Diversity methods combined with the rich landscape of Lenia exhibits a sustained generation of diversity and complexity characteristic of biological evolution. We provide empirical evidence that suggests unbounded diversity and argue that Leniabreeder is a step toward replicating open-ended evolution in silico.

OMNI-EPIC: Open-endedness via Models of human Notions of Interestingness with Environments Programmed in Code

May 24, 2024

Abstract:Open-ended and AI-generating algorithms aim to continuously generate and solve increasingly complex tasks indefinitely, offering a promising path toward more general intelligence. To accomplish this grand vision, learning must occur within a vast array of potential tasks. Existing approaches to automatically generating environments are constrained within manually predefined, often narrow distributions of environments, limiting their ability to create any learning environment. To address this limitation, we introduce a novel framework, OMNI-EPIC, that augments previous work in Open-endedness via Models of human Notions of Interestingness (OMNI) with Environments Programmed in Code (EPIC). OMNI-EPIC leverages foundation models to autonomously generate code specifying the next learnable (i.e., not too easy or difficult for the agent's current skill set) and interesting (e.g., worthwhile and novel) tasks. OMNI-EPIC generates both environments (e.g., an obstacle course) and reward functions (e.g., progress through the obstacle course quickly without touching red objects), enabling it, in principle, to create any simulatable learning task. We showcase the explosive creativity of OMNI-EPIC, which continuously innovates to suggest new, interesting learning challenges. We also highlight how OMNI-EPIC can adapt to reinforcement learning agents' learning progress, generating tasks that are of suitable difficulty. Overall, OMNI-EPIC can endlessly create learnable and interesting environments, further propelling the development of self-improving AI systems and AI-Generating Algorithms. Project website with videos: https://dub.sh/omniepic
