Anqi Chen

Cross-subject Brain Functional Connectivity Analysis for Multi-task Cognitive State Evaluation

Aug 27, 2024

Abstract: Cognition refers to the function of information perception and processing, which is the fundamental psychological essence of human beings. It is responsible for reasoning and decision-making, and its evaluation is significant for the aviation domain in mitigating potential safety risks. Existing studies use varied methods for cognitive state evaluation yet have limitations in timeliness, generalisation, and interpretability. Accordingly, this study adopts brain functional connectivity with electroencephalography signals to capture associations among brain regions across multiple subjects for evaluating real-time cognitive states. Specifically, a virtual reality-based flight platform with multiple embedded screens is constructed. Three distinct cognitive tasks are designed, each with three degrees of difficulty. Thirty subjects are recruited for analysis and evaluation. The results are interpreted from different perspectives, including inner-subject and cross-subject analyses of task-wise and gender-wise underlying brain functional connectivity. Additionally, this study incorporates questionnaire-based, task performance-based, and physiological measure-based approaches to fairly label the trials. A multi-class cognitive state evaluation is further conducted with the active brain connections. Benchmarking results demonstrate that the identified brain regions have considerable influence on cognition, with a multi-class accuracy rate of 95.83% surpassing existing studies. The derived findings are significant for understanding the dynamic relationships among human brain functional regions, cross-subject cognitive behaviours, and decision-making, and they have promising practical application value.

Experimental Security Analysis of DNN-based Adaptive Cruise Control under Context-Aware Perception Attacks

Jul 18, 2023

Abstract: Adaptive Cruise Control (ACC) is a widely used driver assistance feature for maintaining desired speed and safe distance to the leading vehicles. This paper evaluates the security of the deep neural network (DNN) based ACC systems under stealthy perception attacks that strategically inject perturbations into camera data to cause forward collisions. We present a combined knowledge-and-data-driven approach to design a context-aware strategy for the selection of the most critical times for triggering the attacks and a novel optimization-based method for the adaptive generation of image perturbations at run-time. We evaluate the effectiveness of the proposed attack using an actual driving dataset and a realistic simulation platform with the control software from a production ACC system and a physical-world driving simulator while considering interventions by the driver and safety features such as Automatic Emergency Braking (AEB) and Forward Collision Warning (FCW). Experimental results show that the proposed attack achieves 142.9x higher success rate in causing accidents than random attacks and is mitigated 89.6% less by the safety features while being stealthy and robust to real-world factors and dynamic changes in the environment. This study provides insights into the role of human operators and basic safety interventions in preventing attacks.

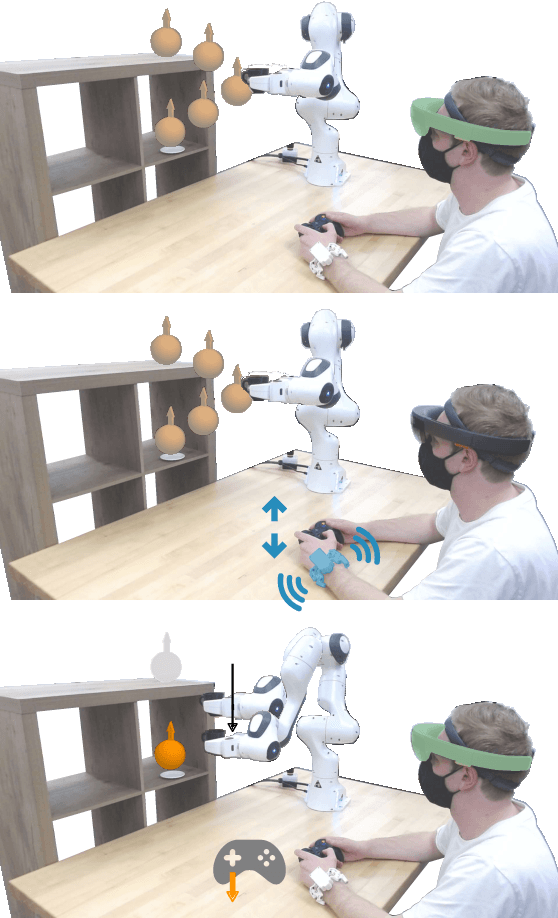

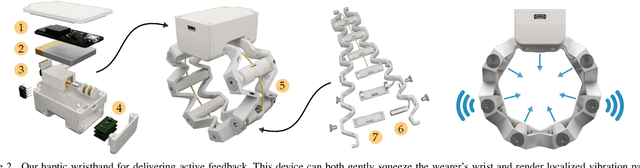

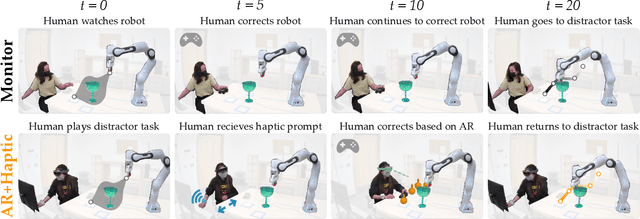

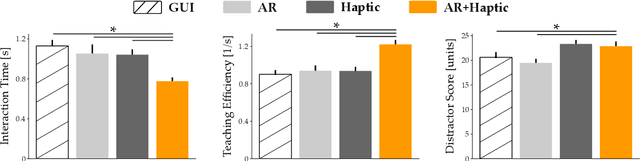

Communicating Inferred Goals with Passive Augmented Reality and Active Haptic Feedback

Sep 03, 2021

Abstract: Robots learn as they interact with humans. Consider a human teleoperating an assistive robot arm: as the human guides and corrects the arm's motion, the robot gathers information about the human's desired task. But how does the human know what their robot has inferred? Today's approaches often focus on conveying intent: for instance, using legible motions or gestures to indicate what the robot is planning. However, closing the loop on robot inference requires more than just revealing the robot's current policy: the robot should also display the alternatives it thinks are likely, and prompt the human teacher when additional guidance is necessary. In this paper we propose a multimodal approach for communicating robot inference that combines both passive and active feedback. Specifically, we leverage information-rich augmented reality to passively visualize what the robot has inferred, and attention-grabbing haptic wristbands to actively prompt and direct the human's teaching. We apply our system to shared autonomy tasks where the robot must infer the human's goal in real-time. Within this context, we integrate passive and active modalities into a single algorithmic framework that determines when and which type of feedback to provide. Combining both passive and active feedback experimentally outperforms single modality baselines; during an in-person user study, we demonstrate that our integrated approach increases how efficiently humans teach the robot while simultaneously decreasing the amount of time humans spend interacting with the robot. Videos here: https://youtu.be/swq_u4iIP-g
