Maxfield Kouzel

KnowSafe: Combined Knowledge and Data Driven Hazard Mitigation in Artificial Pancreas Systems

Nov 13, 2023

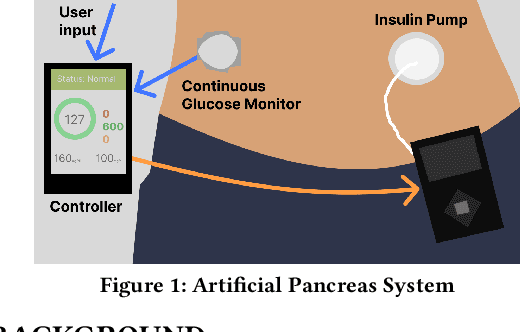

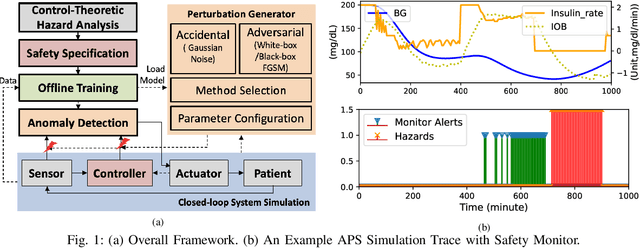

Abstract: Significant progress has been made in anomaly detection and run-time monitoring to improve the safety and security of cyber-physical systems (CPS). However, less attention has been paid to hazard mitigation. This paper proposes a combined knowledge and data driven approach, KnowSafe, for the design of safety engines that can predict and mitigate safety hazards resulting from safety-critical malicious attacks or accidental faults targeting a CPS controller. We integrate domain-specific knowledge of safety constraints and context-specific mitigation actions with machine learning (ML) techniques to estimate system trajectories in the far and near future, infer potential hazards, and generate optimal corrective actions to keep the system safe. Experimental evaluation on two realistic closed-loop testbeds for artificial pancreas systems (APS) and a real-world clinical trial dataset for diabetes treatment demonstrates that KnowSafe outperforms the state-of-the-art by achieving higher accuracy in predicting system state trajectories and potential hazards, a low false positive rate, and no false negatives. It also maintains the safe operation of the simulated APS despite faults or attacks without introducing any new hazards, with a hazard mitigation success rate of 92.8%, which is at least 76% higher than solely rule-based (50.9%) and data-driven (52.7%) methods.
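The "at least 76% higher" figure appears to be a relative improvement over the stronger of the two baselines. The short check below assumes that reading; the function name `relative_gain_percent` is illustrative and does not come from the paper.

```python
def relative_gain_percent(new_rate: float, baseline_rate: float) -> float:
    """Relative improvement of new_rate over baseline_rate, in percent."""
    return (new_rate - baseline_rate) / baseline_rate * 100.0

# Success rates quoted in the abstract (percent).
knowsafe = 92.8
rule_based = 50.9
data_driven = 52.7

gain_vs_rule_based = relative_gain_percent(knowsafe, rule_based)
gain_vs_data_driven = relative_gain_percent(knowsafe, data_driven)

# Assumed reading: "at least" refers to the smaller of the two gains.
smallest_gain = min(gain_vs_rule_based, gain_vs_data_driven)
print(round(gain_vs_rule_based, 1), round(gain_vs_data_driven, 1))
```

Under this reading the smaller gain is the one over the data-driven baseline, which matches the quoted lower bound.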

Experimental Security Analysis of DNN-based Adaptive Cruise Control under Context-Aware Perception Attacks

Jul 18, 2023

Abstract: Adaptive Cruise Control (ACC) is a widely used driver assistance feature for maintaining a desired speed and a safe distance to leading vehicles. This paper evaluates the security of deep neural network (DNN) based ACC systems under stealthy perception attacks that strategically inject perturbations into camera data to cause forward collisions. We present a combined knowledge-and-data-driven approach to design a context-aware strategy for the selection of the most critical times for triggering the attacks and a novel optimization-based method for the adaptive generation of image perturbations at run-time. We evaluate the effectiveness of the proposed attack using an actual driving dataset and a realistic simulation platform with the control software from a production ACC system and a physical-world driving simulator while considering interventions by the driver and safety features such as Automatic Emergency Braking (AEB) and Forward Collision Warning (FCW). Experimental results show that the proposed attack achieves a 142.9x higher success rate in causing accidents than random attacks and is mitigated 89.6% less by the safety features while being stealthy and robust to real-world factors and dynamic changes in the environment. This study provides insights into the role of human operators and basic safety interventions in preventing attacks.

Short: Basal-Adjust: Trend Prediction Alerts and Adjusted Basal Rates for Hyperglycemia Prevention

Mar 16, 2023

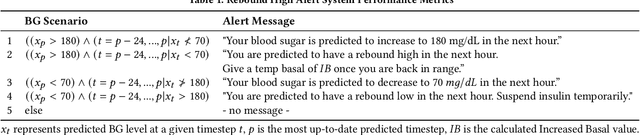

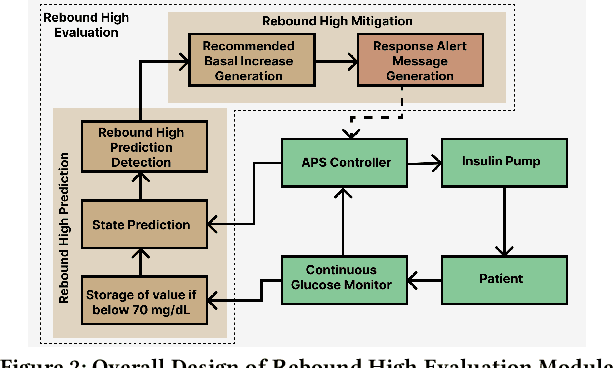

Abstract: Significant advancements in type 1 diabetes treatment have been made in the development of state-of-the-art Artificial Pancreas Systems (APS). However, lapses currently exist in the timely treatment of unsafe blood glucose (BG) levels, especially in the case of rebound hyperglycemia. We propose a machine learning (ML) method for predictive BG scenario categorization that outputs messages alerting the patient to upcoming BG trends to allow for earlier, educated treatment. In addition to standard notifications of predicted hypoglycemia and hyperglycemia, we introduce BG scenario-specific alert messages and the preliminary steps toward precise basal suggestions for the prevention of rebound hyperglycemia. Experimental evaluation on the DCLP3 clinical dataset achieves >98% accuracy and >79% precision for predicting rebound high events for patient alerts.

Robustness Testing of Data and Knowledge Driven Anomaly Detection in Cyber-Physical Systems

Apr 20, 2022

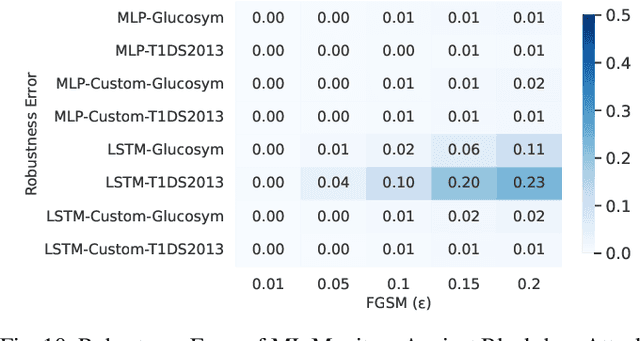

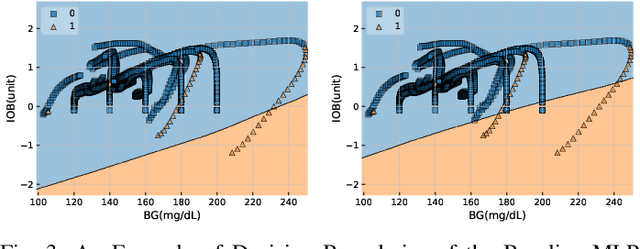

Abstract: The growing complexity of Cyber-Physical Systems (CPS) and challenges in ensuring safety and security have led to the increasing use of deep learning methods for accurate and scalable anomaly detection. However, machine learning (ML) models often perform poorly on unexpected data and are vulnerable to accidental or malicious perturbations. Although robustness testing of deep learning models has been extensively explored in applications such as image classification and speech recognition, less attention has been paid to ML-driven safety monitoring in CPS. This paper presents preliminary results on evaluating the robustness of ML-based anomaly detection methods in safety-critical CPS against two types of accidental and malicious input perturbations, generated using a Gaussian-based noise model and the Fast Gradient Sign Method (FGSM). We test the hypothesis that integrating domain knowledge (e.g., on unsafe system behavior) with the ML models can improve the robustness of anomaly detection without sacrificing accuracy and transparency. Experimental results with two case studies of Artificial Pancreas Systems (APS) for diabetes management show that ML-based safety monitors trained with domain knowledge can reduce robustness error by up to 54.2% on average while keeping average F1 scores high and improving transparency.
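The two perturbation types named in the abstract can be illustrated on a toy model. The sketch below is not the paper's setup: it assumes a hypothetical logistic-regression "monitor" with made-up weights `w`, bias `b`, and input `x`, and shows only the standard FGSM step (move each input feature by `eps` in the direction of the sign of the loss gradient) next to additive Gaussian noise.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def bce_loss(w, b, x, y):
    """Binary cross-entropy of a logistic model on one sample."""
    p = sigmoid(logit(w, b, x))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(w, b, x, y, eps):
    """FGSM: x + eps * sign(dLoss/dx). For logistic regression with
    cross-entropy loss, dLoss/dx = (p - y) * w."""
    p = sigmoid(logit(w, b, x))
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]

def gaussian_perturb(x, sigma, rng):
    """Accidental-noise model: add zero-mean Gaussian noise per feature."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]

# Hypothetical toy monitor and input (illustrative values only).
w = [0.8, -0.5, 0.3]
b = -0.1
x = [1.2, 0.4, -0.7]
y = 1.0  # true label of this sample

x_fgsm = fgsm_perturb(w, b, x, y, eps=0.1)
x_noisy = gaussian_perturb(x, sigma=0.1, rng=random.Random(0))

loss_clean = bce_loss(w, b, x, y)
loss_fgsm = bce_loss(w, b, x_fgsm, y)
```

For a linear model like this one, the FGSM step always increases the loss on the perturbed sample, whereas Gaussian noise may push it either way. That difference is one reason the two are treated as separate perturbation types.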
