Anil Yaman

A Perturbation and Speciation-Based Algorithm for Dynamic Optimization Uninformed of Change

May 16, 2025

Abstract:Dynamic optimization problems (DOPs) are challenging due to their changing conditions. This requires algorithms to be highly adaptable and efficient at rapidly finding new optimal solutions under changing conditions. Traditional approaches often depend on explicit change detection, which can be impractical or inefficient when the change detection is unreliable or infeasible. We propose Perturbation and Speciation-Based Particle Swarm Optimization (PSPSO), a robust algorithm for uninformed dynamic optimization that requires no information about environmental changes. PSPSO combines speciation-based niching, deactivation, and a newly proposed random perturbation mechanism to handle DOPs. PSPSO leverages a cyclical multi-population framework, strategic resource allocation, and targeted noisy updates to adapt to dynamic environments. We compare PSPSO with several state-of-the-art algorithms on the Generalized Moving Peaks Benchmark (GMPB), which covers a variety of scenarios, including simple and multi-modal dynamic optimization, frequent and intense changes, and high-dimensional spaces. Our results show that PSPSO outperforms other state-of-the-art uninformed algorithms in all scenarios and leads to competitive results compared to informed algorithms. In particular, PSPSO shows strength in functions with high dimensionality or high frequency of change in the GMPB. The ablation study shows the importance of the random perturbation component.

An investigation on the use of Large Language Models for hyperparameter tuning in Evolutionary Algorithms

Aug 05, 2024

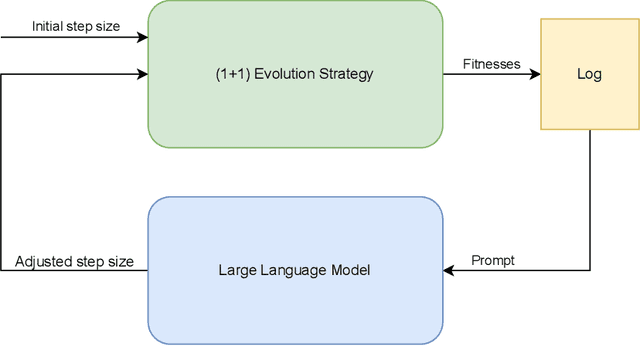

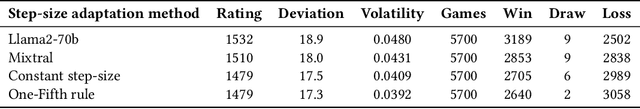

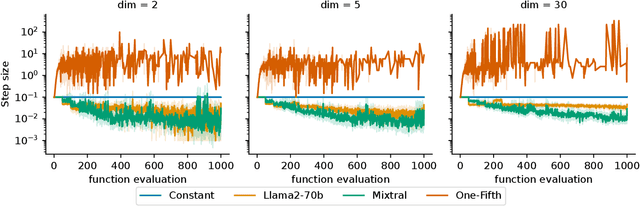

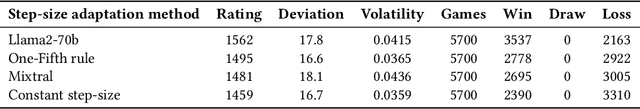

Abstract:Hyperparameter optimization is a crucial problem in Evolutionary Computation. In fact, the values of the hyperparameters directly impact the trajectory taken by the optimization process, and their choice requires extensive reasoning by human operators. Although a variety of self-adaptive Evolutionary Algorithms have been proposed in the literature, no definitive solution has been found. In this work, we perform a preliminary investigation to automate the reasoning process that leads to the choice of hyperparameter values. We employ two open-source Large Language Models (LLMs), namely Llama2-70b and Mixtral, to analyze the optimization logs online and provide novel real-time hyperparameter recommendations. We study our approach in the context of step-size adaptation for (1+1)-ES. The results suggest that LLMs can be an effective method for optimizing hyperparameters in Evolution Strategies, encouraging further research in this direction.
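For readers unfamiliar with the setting, the following is a minimal sketch of step-size adaptation in a (1+1)-ES. It uses the classic 1/5th success rule as the adaptation mechanism, which is an assumption made for illustration and is not the LLM-based recommender studied in the paper. The function names, the sphere objective, and the factor 1.5 are all hypothetical choices.

```python
import random


def sphere(x):
    """Toy objective (assumed for illustration): sum of squares, minimum at 0."""
    return sum(v * v for v in x)


def one_plus_one_es(f, x0, sigma0=1.0, iterations=500, seed=0):
    """(1+1)-ES minimizing f, with the step size adapted by the 1/5th success rule.

    This is a textbook baseline, not the LLM-driven tuner from the abstract.
    """
    rng = random.Random(seed)
    x, fx, sigma = list(x0), f(x0), sigma0
    grow = 1.5  # hypothetical adaptation factor
    for _ in range(iterations):
        # One parent produces one child by Gaussian mutation.
        child = [v + sigma * rng.gauss(0.0, 1.0) for v in x]
        fc = f(child)
        if fc <= fx:
            # Success: keep the child and enlarge the step size.
            x, fx = child, fc
            sigma *= grow
        else:
            # Failure: shrink the step size. With this exponent, sigma is
            # stationary when one mutation in five succeeds.
            sigma *= grow ** -0.25
    return x, fx, sigma


best_x, best_f, final_sigma = one_plus_one_es(sphere, [3.0] * 5)
```

In the paper's setup, the rule inside the `if`/`else` branches is the part an LLM would replace, proposing a new step size after reading the optimization log.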

The Effect of Training Schedules on Morphological Robustness and Generalization

Jul 19, 2024

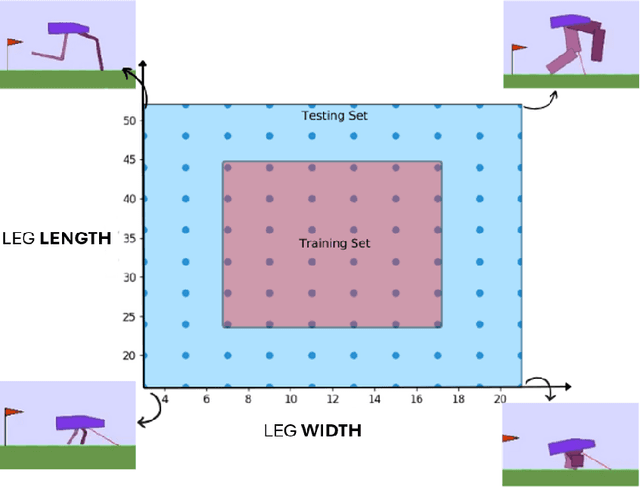

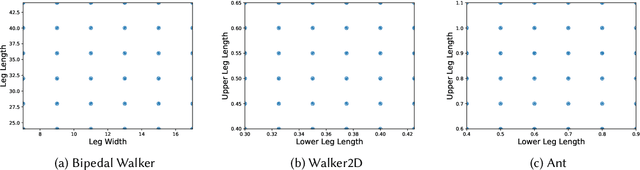

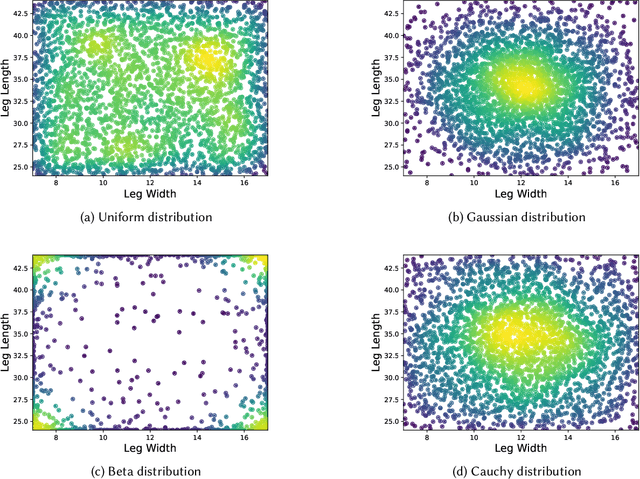

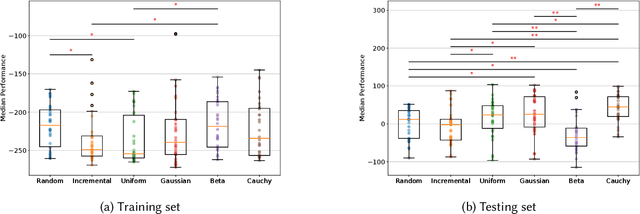

Abstract:Robustness and generalizability are key properties of artificial neural network (ANN)-based controllers for maintaining reliable performance in case of changes. It has been demonstrated that exposing ANNs to variations during training can improve their robustness and generalization capabilities. However, the way in which this variation is introduced can have a significant impact. In this paper, we define various training schedules to specify how these variations are introduced during an evolutionary learning process. In particular, we focus on morphological robustness and generalizability, which are concerned with finding an ANN-based controller that can provide sufficient performance on a range of physical variations. Then, we perform an extensive analysis of the effect of these training schedules on morphological generalization. Furthermore, we formalize the process of training sample selection (i.e., morphological variations) to improve generalization as a reinforcement learning problem. Overall, our results provide deeper insights into the role of variability and the ways of enhancing the generalization property of evolved ANN-based controllers.

Collaborative Interactive Evolution of Art in the Latent Space of Deep Generative Models

Mar 28, 2024

Abstract:Generative Adversarial Networks (GANs) have shown great success in generating high-quality images and are thus used as one of the main approaches to generate art images. However, the image generation process usually involves sampling from the latent space of the learned art representations, allowing little control over the output. In this work, we first employ GANs that are trained to produce creative images using an architecture known as Creative Adversarial Networks (CANs). We then employ an evolutionary approach to navigate within the latent space of the models to discover images. We use automatic aesthetic and collaborative interactive human evaluation metrics to assess the generated images. In the human interactive evaluation case, we propose a collaborative evaluation based on the assessments of several participants. Furthermore, we also experiment with an intelligent mutation operator that aims to improve the quality of the images through local search based on an aesthetic measure. We evaluate the effectiveness of this approach by comparing the results produced by the automatic and collaborative interactive evolution. The results show that the proposed approach can generate highly attractive art images when the evolution is guided by collaborative human feedback.

Evolving generalist controllers to handle a wide range of morphological variations

Sep 20, 2023

Abstract:Neuro-evolutionary methods have proven effective in addressing a wide range of tasks. However, the study of the robustness and generalisability of evolved artificial neural networks (ANNs) has remained limited. This has immense implications in fields like robotics, where such controllers are used in control tasks. Unexpected morphological or environmental changes during operation can risk failure if the ANN controllers are unable to handle these changes. This paper proposes an algorithm that aims to enhance the robustness and generalisability of the controllers. This is achieved by introducing morphological variations during the evolutionary process. As a result, it is possible to discover generalist controllers that can handle a wide range of morphological variations sufficiently well without needing information about their morphologies or adaptation of their parameters. We perform an extensive experimental analysis in simulation that demonstrates the trade-off between specialist and generalist controllers. The results show that generalists are able to control a range of morphological variations at the cost of underperforming on a specific morphology relative to a specialist. This research contributes to the field by addressing the limited understanding of robustness and generalisability in neuro-evolutionary methods and proposes a method by which to improve these properties.
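The specialist versus generalist trade-off described in this abstract can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the controller is reduced to a single gain, a morphology to a single limb length, and the generalist score to the mean over morphologies. The paper's actual controllers, simulator, and aggregation may differ.

```python
from statistics import mean


def evaluate(gain, limb_length):
    """Hypothetical task score (higher is better): best when gain * limb_length == 1."""
    return -((gain * limb_length - 1.0) ** 2)


def generalist_fitness(gain, morphologies):
    """Score a controller by its mean performance over a set of morphologies."""
    return mean(evaluate(gain, m) for m in morphologies)


morphologies = [0.5, 1.0, 1.5]  # assumed limb lengths

# Specialist: tuned for the single morphology with limb_length == 1.0.
specialist_gain = 1.0

# Generalist: the gain maximizing the mean score over all morphologies,
# which for this quadratic toy problem is sum(l) / sum(l^2).
generalist_gain = sum(morphologies) / sum(m * m for m in morphologies)

print("specialist on its own morphology:", evaluate(specialist_gain, 1.0))
print("generalist on that morphology:   ", evaluate(generalist_gain, 1.0))
print("specialist across morphologies:  ", generalist_fitness(specialist_gain, morphologies))
print("generalist across morphologies:  ", generalist_fitness(generalist_gain, morphologies))
```

In this toy setting the generalist scores higher on average across the three morphologies, while the specialist remains better on the one morphology it was tuned for, mirroring the trade-off the abstract reports.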

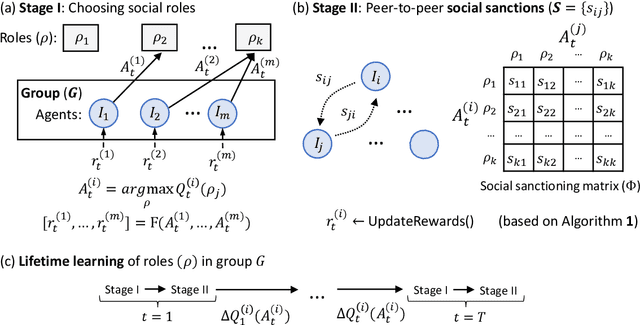

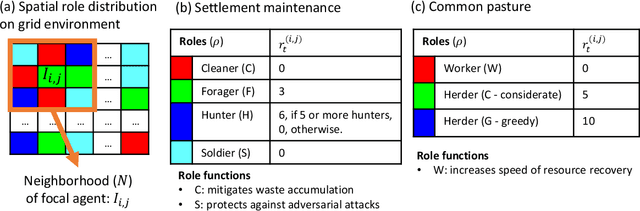

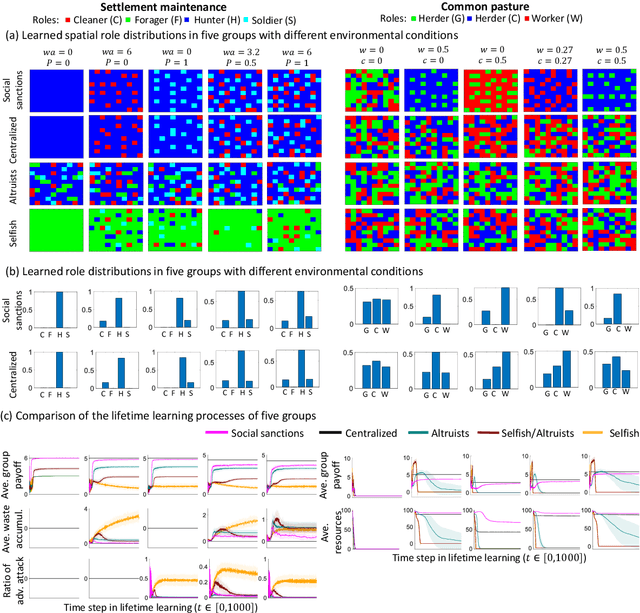

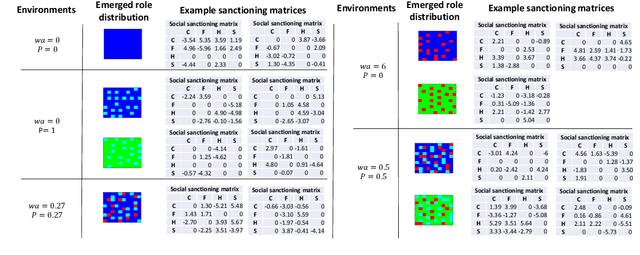

The emergence of division of labor through decentralized social sanctioning

Aug 12, 2022

Abstract:Human ecological success relies on our characteristic ability to flexibly self-organize in cooperative social groups. Successful groups employ substantial specialization and division of labor. Unlike most other animals, humans learn by trial and error during their lives what role to take on. However, when some critical roles are more attractive than others, and individuals are self-interested, then there is a social dilemma: each individual would prefer others take on the critical-but-unremunerative roles so they may remain free to take one that pays better. But disaster occurs if all act thusly and a critical role goes unfilled. In such situations learning an optimum role distribution may not be possible. Consequently, a fundamental question is: how can division of labor emerge in groups of self-interested lifetime-learning individuals? Here we show that by introducing a model of social norms, which we regard as patterns of decentralized social sanctioning, it becomes possible for groups of self-interested individuals to learn a productive division of labor involving all critical roles. Such social norms work by redistributing rewards within the population to disincentivize antisocial roles while incentivizing prosocial roles that do not intrinsically pay as well as others.

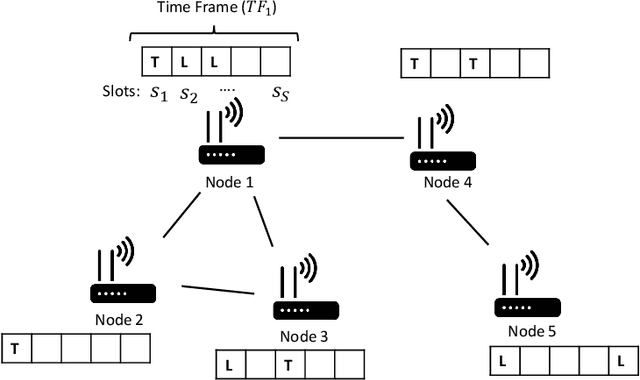

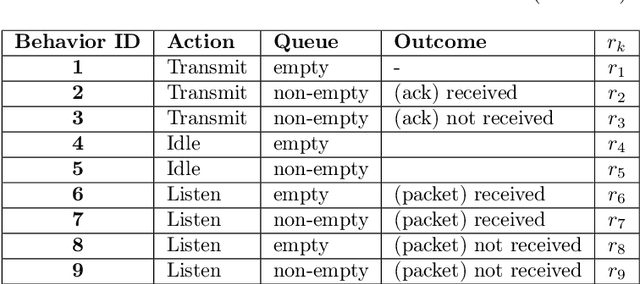

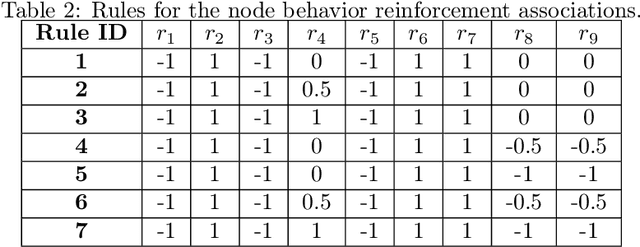

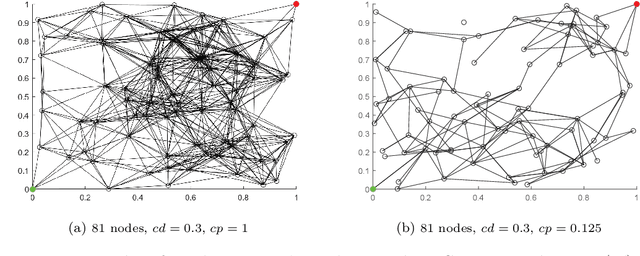

Online Distributed Evolutionary Optimization of Time Division Multiple Access Protocols

Apr 27, 2022

Abstract:With the advent of cheap, miniaturized electronics, ubiquitous networking has reached an unprecedented level of complexity, scale and heterogeneity, becoming the core of several modern applications such as smart industry, smart buildings and smart cities. A crucial element for network performance is the protocol stack, namely the sets of rules and data formats that determine how the nodes in the network exchange information. Great effort has been put into devising formal techniques to synthesize (offline) network protocols, starting from system specifications and strict assumptions on the network environment. However, offline design can be hard to apply in the most modern network applications, either due to numerical complexity, or to the fact that the environment might be unknown and the specifications might not be available. In these cases, online protocol design and adaptation have the potential to offer a much more scalable and robust solution. Nevertheless, so far only a few attempts have been made towards online automatic protocol design. Here, we envision a protocol as an emergent property of a network, obtained by an environment-driven Distributed Hill Climbing algorithm that uses node-local reinforcement signals to evolve, at runtime and without any central coordination, a network protocol from scratch. We test this approach with a 3-state Time Division Multiple Access (TDMA) Medium Access Control (MAC) protocol and we observe its emergence in networks of various scales and with various settings. We also show how Distributed Hill Climbing can reach different trade-offs in terms of energy consumption and protocol performance.

Meta-control of social learning strategies

Jun 18, 2021

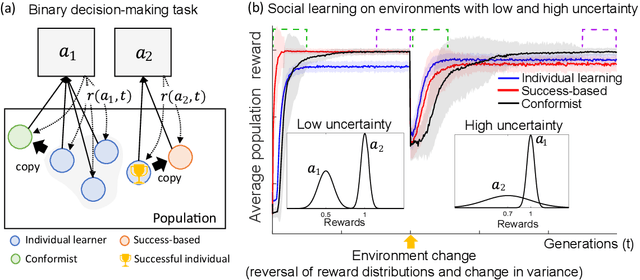

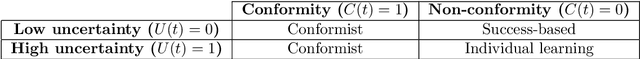

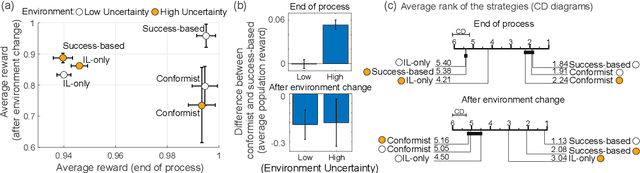

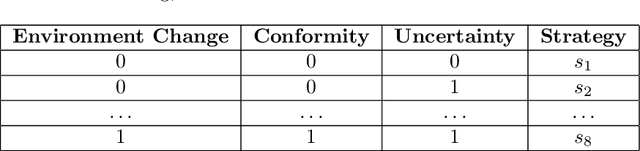

Abstract:Social learning, copying others' behavior without actual experience, offers a cost-effective means of knowledge acquisition. However, it raises the fundamental question of which individuals have reliable information: successful individuals versus the majority. The former and the latter are known respectively as success-based and conformist social learning strategies. We show here that while the success-based strategy fully exploits benign environments of low uncertainty, it fails in uncertain environments. On the other hand, the conformist strategy can effectively mitigate this adverse effect. Based on these findings, we hypothesized that meta-control of individual and social learning strategies provides effective and sample-efficient learning in volatile and uncertain environments. Simulations on a set of environments with various levels of volatility and uncertainty confirmed our hypothesis. The results imply that meta-control of social learning affords agents the leverage to resolve environmental uncertainty with minimal exploration cost, by exploiting others' learning as an external knowledge base.
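The two social learning strategies named in this abstract can be sketched in a few lines. This is a minimal illustration under assumed data structures: each observation is a hypothetical `(action, reward)` pair for one individual, and the function names are not taken from the paper. The meta-control mechanism itself is not shown, since the abstract does not specify it.

```python
from collections import Counter


def success_based_choice(observations):
    """Copy the action of the individual with the highest observed reward."""
    best_action, _ = max(observations, key=lambda pair: pair[1])
    return best_action


def conformist_choice(observations):
    """Copy the action taken by the largest number of individuals."""
    counts = Counter(action for action, _ in observations)
    return counts.most_common(1)[0][0]


# Hypothetical population: three individuals chose "A" with modest rewards,
# one individual chose "B" and received a single high (possibly lucky) reward.
observations = [("A", 0.2), ("A", 0.3), ("B", 0.9), ("A", 0.1)]

print(success_based_choice(observations))
print(conformist_choice(observations))
```

With noisy rewards, a single lucky outcome can mislead the success-based rule, while the majority vote is less sensitive to one outlier. This is the intuition behind the abstract's contrast between low-uncertainty and high-uncertainty environments.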

A Framework for Knowledge Integrated Evolutionary Algorithms

Mar 31, 2021

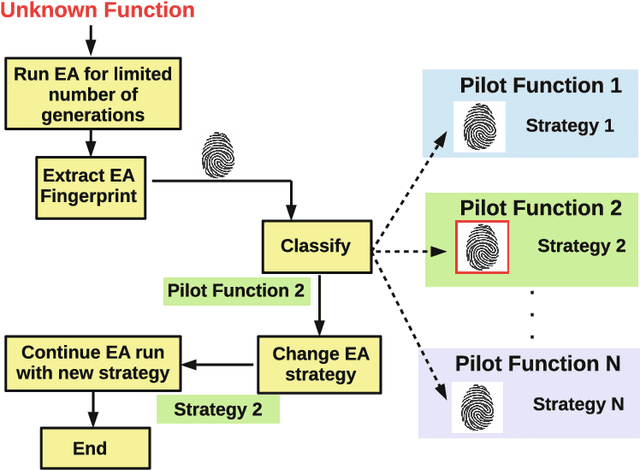

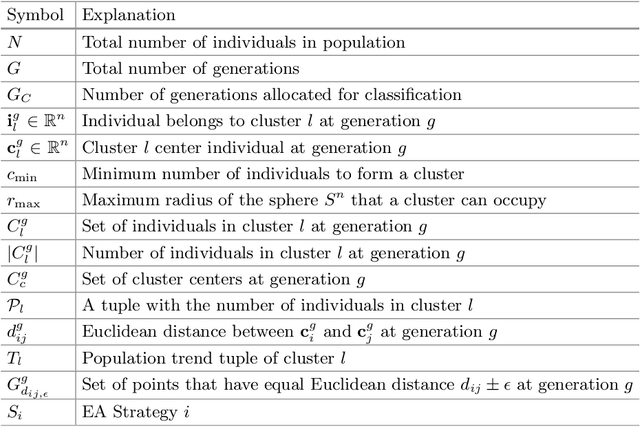

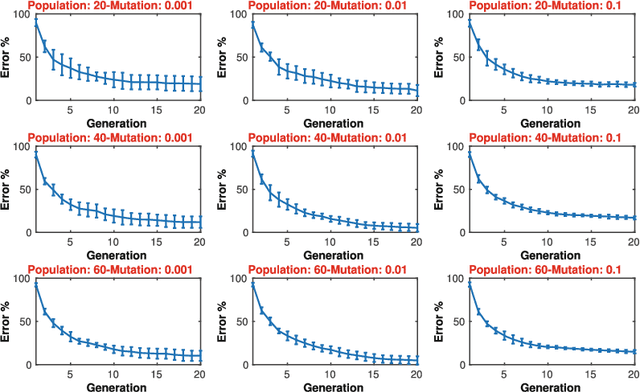

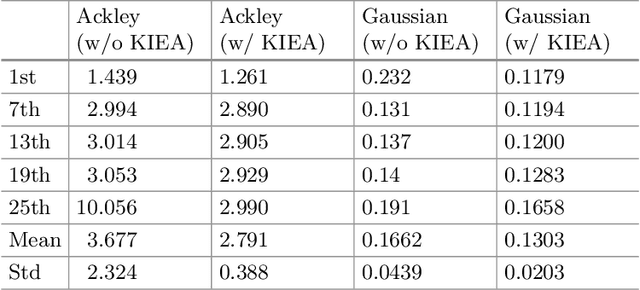

Abstract:One of the main reasons for the success of Evolutionary Algorithms (EAs) is their general-purposeness, i.e., the fact that they can be applied straightforwardly to a broad range of optimization problems, without any specific prior knowledge. On the other hand, it has been shown that incorporating a priori knowledge, such as expert knowledge or empirical findings, can significantly improve the performance of an EA. However, integrating knowledge in EAs poses numerous challenges. It is often the case that the features of the search space are unknown, hence any knowledge associated with the search space properties can hardly be used. In addition, a priori knowledge is typically problem-specific and hard to generalize. In this paper, we propose a framework, called Knowledge Integrated Evolutionary Algorithm (KIEA), which facilitates the integration of existing knowledge into EAs. Notably, the KIEA framework is EA-agnostic (i.e., it works with any evolutionary algorithm), problem-independent (i.e., it is not dedicated to a specific type of problem), and expandable (i.e., its knowledge base can grow over time). Furthermore, the framework integrates knowledge while the EA is running, thus optimizing the use of the needed computational power. In the preliminary experiments shown here, we observe that the KIEA framework produces in the worst case an 80% improvement in convergence time, w.r.t. the corresponding "knowledge-free" EA counterpart.

Topological Insights into Sparse Neural Networks

Jul 04, 2020

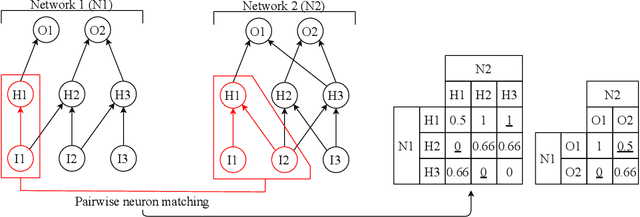

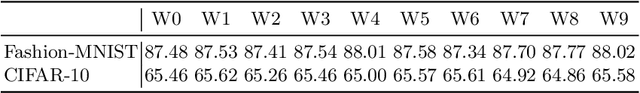

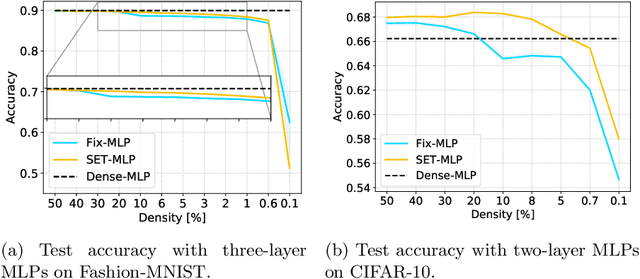

Abstract:Sparse neural networks are effective approaches to reduce the resource requirements for the deployment of deep neural networks. Recently, the concept of adaptive sparse connectivity has emerged to allow training sparse neural networks from scratch by optimizing the sparse structure during training. However, comparing different sparse topologies and determining how sparse topologies evolve during training, especially for the situation in which the sparse structure optimization is involved, remain challenging open questions. This comparison becomes increasingly complex as the number of possible topological comparisons increases exponentially with the size of networks. In this work, we introduce an approach to understand and compare sparse neural network topologies from the perspective of graph theory. We first propose Neural Network Sparse Topology Distance (NNSTD) to measure the distance between different sparse neural networks. Further, we demonstrate that sparse neural networks can outperform over-parameterized models in terms of performance, even without any further structure optimization. Finally, we also show that adaptive sparse connectivity can always unveil a plenitude of sparse sub-networks with very different topologies which outperform the dense model, by quantifying and comparing their topological evolutionary processes. The latter findings complement the Lottery Ticket Hypothesis by showing that there is a much more efficient and robust way to find "winning tickets". Altogether, our results begin to enable a better theoretical understanding of sparse neural networks, and demonstrate the utility of using graph theory to analyze them.
