Ang Bian

Make Continual Learning Stronger via C-Flat

Apr 01, 2024

Abstract: The ability of a model to generalize while incrementally acquiring dynamically updated knowledge from sequentially arriving tasks is crucial for tackling the sensitivity-stability dilemma in Continual Learning (CL). Minimizing the sharpness of the weight loss landscape, which seeks flat minima lying in neighborhoods with uniformly low loss or smooth gradients, has been shown to be a strong training regime that improves model generalization compared with loss-minimization-based optimizers such as SGD. Yet only a few works have discussed this training regime for CL, showing that a specifically designed zeroth-order sharpness optimizer can improve CL performance. In this work, we propose a Continual Flatness (C-Flat) method featuring a flatter loss landscape tailored for CL. C-Flat can be invoked with only one line of code and is plug-and-play with any CL method. We present a general framework of C-Flat applied to all CL categories and a thorough comparison with loss-minima optimizers and flat-minima-based CL approaches, showing that our method can boost CL performance in almost all cases. Code will be publicly available upon publication.
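To make the idea of sharpness minimization concrete, the sketch below shows one generic sharpness-aware (SAM-style) update step on a toy quadratic loss. It is not the C-Flat optimizer described in the abstract; the function names `sam_step` and `quad_grad`, the step size `lr`, and the neighborhood radius `rho` are assumptions chosen for illustration.

```python
import math


def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One generic sharpness-aware update (illustrative, not C-Flat itself).

    1. Compute the gradient at the current weights.
    2. Move to the approximate worst-case point within an L2 ball of radius rho.
    3. Use the gradient at that perturbed point to update the original weights.
    """
    g = grad_fn(w)
    norm = math.sqrt(sum(gi * gi for gi in g))
    if norm == 0.0:
        # Already at a stationary point; nothing to perturb or update.
        return list(w)
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]
    g_adv = grad_fn(w_adv)
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]


def quad_grad(w):
    """Gradient of the toy loss 0.5 * (w0**2 + 10 * w1**2)."""
    return [w[0], 10.0 * w[1]]


w = [1.0, 0.0]
w = sam_step(w, quad_grad)
```

A plain gradient step would use `g` directly; the only difference here is that the update direction is evaluated at the perturbed point `w_adv`, which penalizes minima whose neighborhood has high loss.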

Progressive Learning without Forgetting

Nov 28, 2022

Abstract: Learning from changing tasks and sequential experience without forgetting the obtained knowledge is a challenging problem for artificial neural networks. In this work, we focus on two challenging problems in the paradigm of Continual Learning (CL) without involving any old data: (i) the accumulation of catastrophic forgetting caused by the gradually fading knowledge space from which the model learns the previous knowledge; (ii) the uncontrolled tug-of-war dynamics in balancing stability and plasticity during the learning of new tasks. To tackle these problems, we present Progressive Learning without Forgetting (PLwF) and a credit assignment regime in the optimizer. PLwF densely introduces model functions from previous tasks to construct a knowledge space that contains the most reliable knowledge on each task and the distribution information of different tasks, while credit assignment controls the tug-of-war dynamics by removing gradient conflict through projection. Extensive ablative experiments demonstrate the effectiveness of PLwF and credit assignment. In comparison with other CL methods, we report notably better results even without relying on any raw data.
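As a rough illustration of "removing gradient conflict through projection", the sketch below applies a generic projection rule: when the gradient for the new task points against the gradient that protects old knowledge (negative dot product), the conflicting component is projected out. This is an assumed, simplified rule for illustration, not the exact credit assignment scheme of PLwF; `project_conflict`, `g_new`, and `g_old` are hypothetical names.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def project_conflict(g_new, g_old):
    """Remove from g_new the component that conflicts with g_old.

    If the two gradients agree (non-negative dot product) or g_old is zero,
    g_new is returned unchanged. Otherwise g_new is projected onto the
    plane orthogonal to g_old.
    """
    d = dot(g_new, g_old)
    old_sq = dot(g_old, g_old)
    if d >= 0.0 or old_sq == 0.0:
        return list(g_new)
    scale = d / old_sq
    return [gn - scale * go for gn, go in zip(g_new, g_old)]


# Conflicting case: the new-task gradient pushes against the old-task gradient.
resolved = project_conflict([1.0, -1.0], [0.0, 1.0])
```

After projection the resolved gradient is orthogonal to `g_old`, so a small step along it no longer undoes the old-task direction to first order.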

A Bhattacharyya Coefficient-Based Framework for Noise Model-Aware Random Walker Image Segmentation

Jun 02, 2022

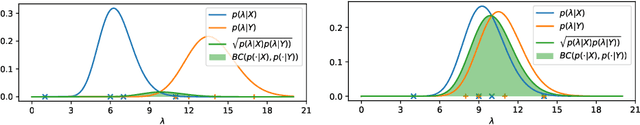

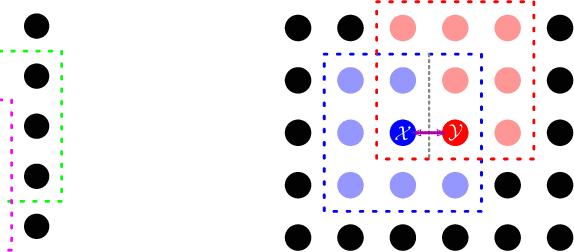

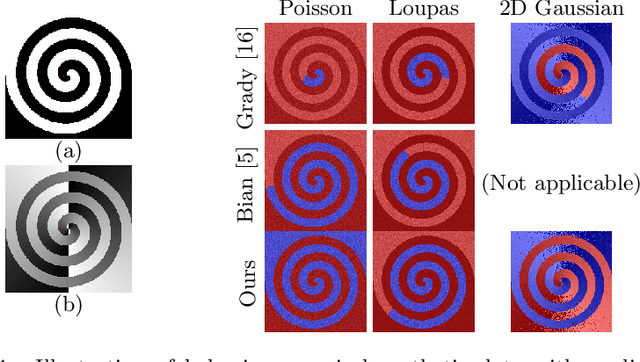

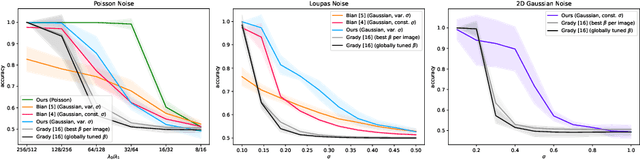

Abstract: One well-established method of interactive image segmentation is the random walker algorithm. Considerable research on this family of segmentation methods has been conducted in recent years, with numerous applications. These methods have in common the use of a simple Gaussian weight function that depends on a parameter which strongly influences the segmentation performance. In this work we propose a general framework for deriving weight functions based on probabilistic modeling. This framework can be concretized to cope with virtually any well-defined noise model. It eliminates the critical parameter and thus avoids a time-consuming parameter search. We derive the specific weight functions for common noise types and show their superior performance on synthetic data as well as on different biomedical image data (MRI images from the NYU fastMRI dataset, larvae images acquired with the FIM technique). Our framework can also be used in multiple other applications, e.g., the graph cut algorithm and its extensions.
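For orientation, the sketch below contrasts the classical Gaussian edge weight, whose parameter `beta` must be tuned, with the Bhattacharyya coefficient between two univariate Gaussian distributions, which has a standard closed form. How the paper turns this coefficient into edge weights for specific noise models is not stated in the abstract, so the second function is only an assumed building block; both function names are hypothetical.

```python
import math


def gaussian_weight(intensity_i, intensity_j, beta):
    """Classical random walker edge weight exp(-beta * (I_i - I_j)**2).

    beta is the free parameter that strongly influences segmentation quality.
    """
    return math.exp(-beta * (intensity_i - intensity_j) ** 2)


def bhattacharyya_gaussian(mu1, sigma1, mu2, sigma2):
    """Bhattacharyya coefficient of N(mu1, sigma1^2) and N(mu2, sigma2^2).

    Returns a value in (0, 1]; 1 means the two distributions are identical.
    """
    var_sum = sigma1 ** 2 + sigma2 ** 2
    prefactor = math.sqrt(2.0 * sigma1 * sigma2 / var_sum)
    return prefactor * math.exp(-((mu1 - mu2) ** 2) / (4.0 * var_sum))


w_classic = gaussian_weight(0.2, 0.5, beta=90.0)
bc = bhattacharyya_gaussian(0.2, 0.1, 0.5, 0.1)
```

With equal standard deviations the coefficient reduces to `exp(-(mu1 - mu2)**2 / (8 * sigma**2))`, so the scale is set by the noise level rather than by a hand-tuned `beta`.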
