Florian Eilers

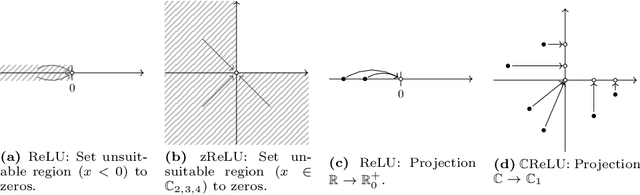

CVKAN: Complex-Valued Kolmogorov-Arnold Networks

Feb 04, 2025

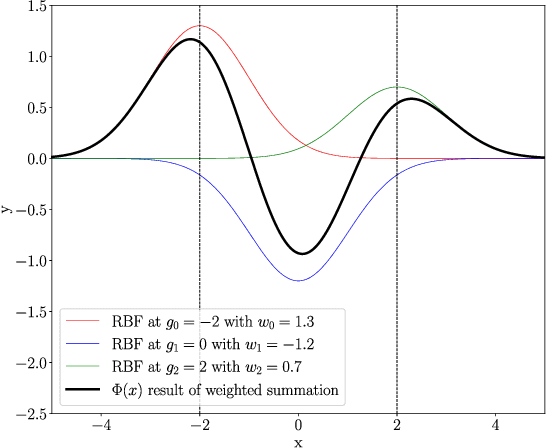

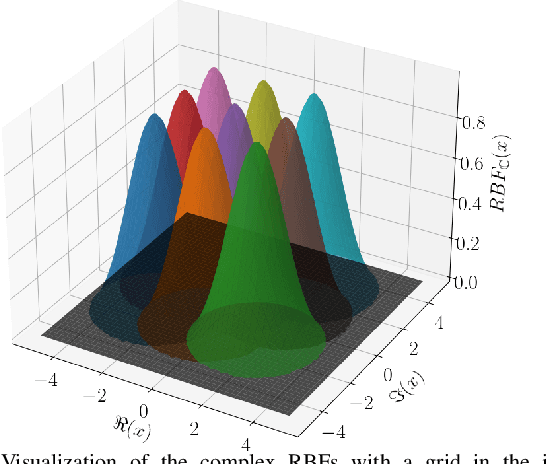

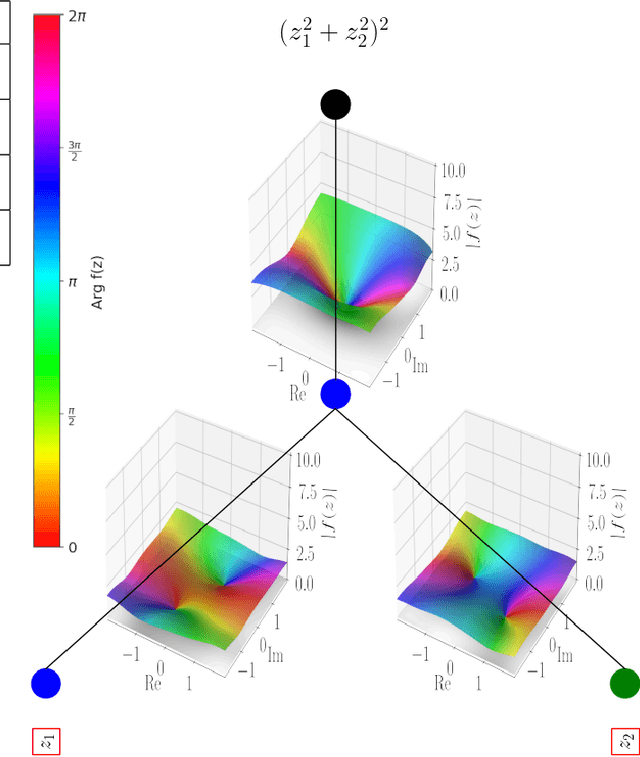

Abstract: In this work we propose CVKAN, a complex-valued KAN, to join the intrinsic interpretability of KANs and the advantages of Complex-Valued Neural Networks (CVNNs). We show how to transfer a KAN and the necessary associated mechanisms into the complex domain. To confirm that CVKAN meets expectations, we conduct experiments on symbolic complex-valued function fitting and physically meaningful formulae as well as on a more realistic dataset from knot theory. Our proposed CVKAN is more stable and performs on par with or better than real-valued KANs while requiring fewer parameters and a shallower network architecture, making it more explainable.
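To make the idea of a KAN layer over complex inputs concrete, here is a minimal sketch. The only part taken from the KAN definition is the layer structure: every edge carries its own univariate function, and each output node sums its incoming edges. The parametrization of each edge function as a sum of Gaussian radial basis functions with complex coefficients, and the names `rbf_edge` and `kan_layer`, are assumptions made for illustration and are not claimed to match the paper's construction.

```python
import math

def rbf_edge(z, centers, coeffs):
    """Hypothetical edge function C -> C: a sum of Gaussian bumps centred
    at complex grid points, weighted by complex coefficients."""
    return sum(c * math.exp(-abs(z - g) ** 2) for g, c in zip(centers, coeffs))

def kan_layer(inputs, centers, coeffs):
    """KAN-style layer: output_j = sum_i phi_{j,i}(input_i).

    coeffs[j][i] holds the complex coefficients of the edge function
    from input i to output j (an illustrative layout, not the paper's).
    """
    return [
        sum(rbf_edge(z, centers, coeffs[j][i]) for i, z in enumerate(inputs))
        for j in range(len(coeffs))
    ]

# One input, one output, two grid points in the complex plane.
centers = [0j, 1 + 0j]
coeffs = [[[1j, 0j]]]
outputs = kan_layer([0j], centers, coeffs)
```

With the input sitting exactly on the first grid point and only that coefficient non-zero, the single output equals the coefficient itself.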

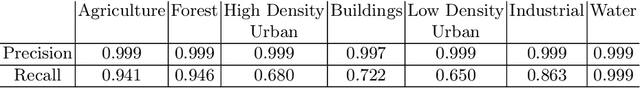

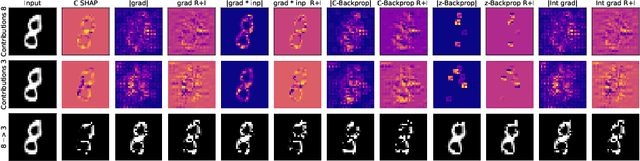

DeepCSHAP: Utilizing Shapley Values to Explain Deep Complex-Valued Neural Networks

Mar 13, 2024

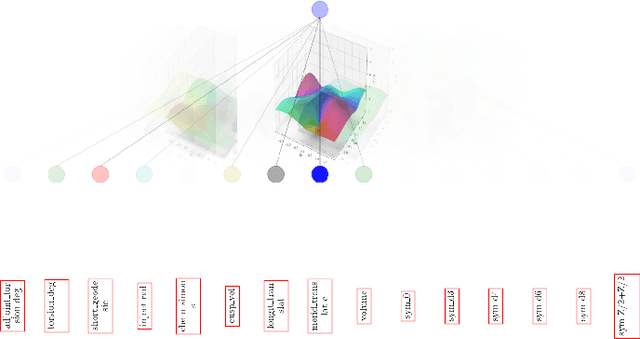

Abstract: Deep Neural Networks are widely used in academia as well as corporate and public applications, including safety-critical applications such as health care and autonomous driving. The ability to explain their output is critical for safety reasons as well as acceptance among users. A multitude of methods have been proposed to explain real-valued neural networks. Recently, complex-valued neural networks have emerged as a new class of neural networks dealing with complex-valued input data without the necessity of projecting them onto $\mathbb{R}^2$. This brings up the need to develop explanation algorithms for this kind of neural network. In this paper we provide these developments. While we focus on adapting the widely used DeepSHAP algorithm to the complex domain, we also present versions of four gradient-based explanation methods suitable for use in complex-valued neural networks. We evaluate the explanation quality of all presented algorithms and provide all of them as an open-source library adaptable to most recent complex-valued neural network architectures.
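As background for the Shapley values in the title, the following sketch computes exact Shapley attributions by brute force over all feature orderings for a toy function with complex inputs and a complex output. It is not DeepSHAP and not the paper's algorithm; the function `shapley_values`, the toy model `f`, and the zero baseline are assumptions chosen only to show that the attributions still sum to `f(x) - f(baseline)` when the values are complex.

```python
from itertools import permutations

def shapley_values(f, x, baseline):
    """Exact Shapley values via all feature orderings (feasible only for
    a handful of features). Works unchanged for complex-valued payoffs."""
    n = len(x)
    phi = [0j] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        prev = f(current)
        for i in order:
            current[i] = x[i]          # switch feature i from baseline to x
            new = f(current)
            phi[i] += new - prev       # marginal contribution of feature i
            prev = new
    return [p / len(orders) for p in phi]

# Toy complex-valued model: one interaction term and one additive term.
f = lambda z: z[0] * z[1] + 2 * z[2]
x = [1 + 1j, 2 - 1j, 0.5j]
baseline = [0j, 0j, 0j]
phi = shapley_values(f, x, baseline)
```

The two interacting features split their joint contribution equally, while the additive feature receives exactly its own term.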

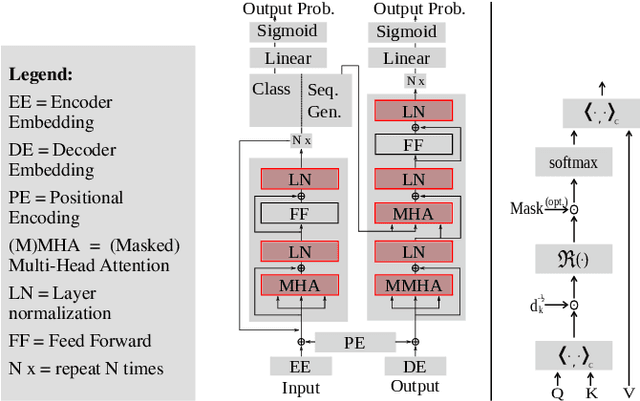

Building Blocks for a Complex-Valued Transformer Architecture

Jun 16, 2023

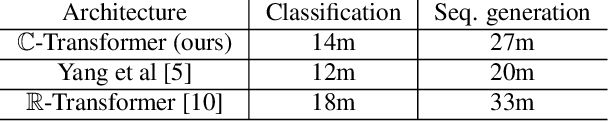

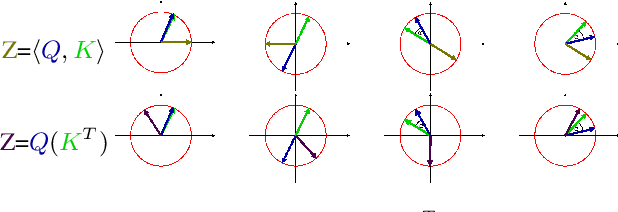

Abstract: Most deep learning pipelines are built on real-valued operations to deal with real-valued inputs such as images, speech or music signals. However, many applications naturally make use of complex-valued signals or images, such as MRI or remote sensing. Additionally, the Fourier transform of signals is complex-valued and has numerous applications. We aim to make deep learning directly applicable to these complex-valued signals without using projections into $\mathbb{R}^2$. Thus, we add to the recent developments of complex-valued neural networks by presenting building blocks to transfer the transformer architecture to the complex domain. We present multiple versions of a complex-valued Scaled Dot-Product Attention mechanism as well as a complex-valued layer normalization. We test on a classification and a sequence generation task on the MusicNet dataset and show improved robustness to overfitting while maintaining on-par performance when compared to the real-valued transformer architecture.
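One way a scaled dot-product attention could be carried over to complex inputs is sketched below. The abstract mentions multiple versions without specifying them here, so the choice made in this sketch (real part of the Hermitian inner product as the similarity score, followed by an ordinary real softmax) is an assumption for illustration and may differ from the variants in the paper. The name `complex_attention` is hypothetical.

```python
import math

def complex_attention(queries, keys, values):
    """Scaled dot-product attention on lists of complex vectors.

    Assumed score: Re(<q, k>) / sqrt(d), with <q, k> = sum_i q_i * conj(k_i).
    The softmax weights are real; the output stays complex.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki.conjugate() for qi, ki in zip(q, k)).real / math.sqrt(d)
            for k in keys
        ]
        top = max(scores)                       # subtract max for stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([
            sum(w * v[t] for w, v in zip(weights, values))
            for t in range(len(values[0]))
        ])
    return outputs

# Two identical keys give uniform weights, so the output is the mean value.
queries = [[1 + 1j, 0j]]
keys = [[1j, 1 + 0j], [1j, 1 + 0j]]
values = [[2 + 0j], [2j]]
attended = complex_attention(queries, keys, values)
```

Using only the real part discards the phase of the inner product; other choices, such as the magnitude, would weight the keys differently.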

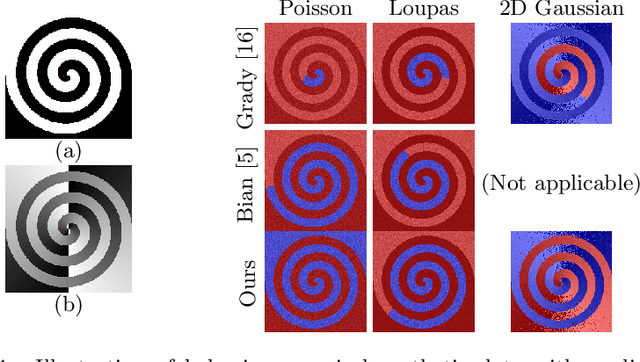

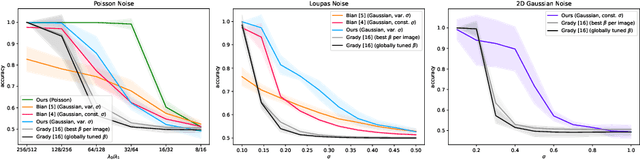

A Bhattacharyya Coefficient-Based Framework for Noise Model-Aware Random Walker Image Segmentation

Jun 02, 2022

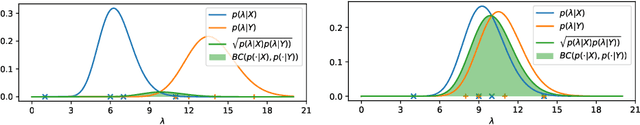

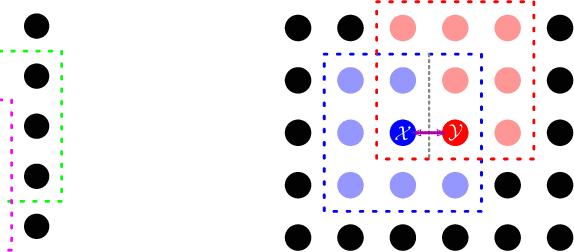

Abstract: One well-established method of interactive image segmentation is the random walker algorithm. Considerable research on this family of segmentation methods has been continuously conducted in recent years with numerous applications. These methods have in common that they use a simple Gaussian weight function which depends on a parameter that strongly influences the segmentation performance. In this work we propose a general framework for deriving weight functions based on probabilistic modeling. This framework can be concretized to cope with virtually any well-defined noise model. It eliminates the critical parameter and thus avoids time-consuming parameter search. We derive the specific weight functions for common noise types and show their superior performance on synthetic data as well as different biomedical image data (MRI images from the NYU fastMRI dataset, larvae images acquired with the FIM technique). Our framework can also be used in multiple other applications, e.g., the graph cut algorithm and its extensions.
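The contrast between a hand-tuned Gaussian weight and a coefficient derived from a noise model can be illustrated with two short functions. The Gaussian edge weight $\exp(-\beta (g_i - g_j)^2)$ is the form commonly used with the random walker, and the closed-form Bhattacharyya coefficient between two univariate Gaussians is a standard result. Treating each pixel as a single Gaussian with known standard deviation is an assumption of this sketch and is not claimed to reproduce the weight functions derived in the paper.

```python
import math

def gaussian_weight(g_i, g_j, beta):
    """Classical random walker edge weight; beta is the free parameter."""
    return math.exp(-beta * (g_i - g_j) ** 2)

def bhattacharyya_gaussian(mu1, sigma1, mu2, sigma2):
    """Bhattacharyya coefficient between N(mu1, sigma1^2) and N(mu2, sigma2^2)."""
    var_sum = sigma1 ** 2 + sigma2 ** 2
    scale = math.sqrt(2 * sigma1 * sigma2 / var_sum)
    return scale * math.exp(-((mu1 - mu2) ** 2) / (4 * var_sum))

# With equal noise levels the coefficient has Gaussian shape, and the
# role of beta is played by 1 / (8 * sigma^2) instead of a tuned value.
sigma = 0.5
bc = bhattacharyya_gaussian(0.2, sigma, 0.7, sigma)
gw = gaussian_weight(0.2, 0.7, beta=1 / (8 * sigma ** 2))
```

In this toy setting the two values coincide, which shows how a noise level can stand in for the parameter that would otherwise need a search.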
