Aojun Lu

CogFlow: Bridging Perception and Reasoning through Knowledge Internalization for Visual Mathematical Problem Solving

Jan 05, 2026

Abstract: Despite significant progress, multimodal large language models continue to struggle with visual mathematical problem solving. Some recent works recognize that visual perception is a bottleneck in visual mathematical reasoning, but their solutions are limited to improving the extraction and interpretation of visual inputs. Notably, they all ignore the key issue of whether the extracted visual cues are faithfully integrated and properly utilized in subsequent reasoning. Motivated by this, we present CogFlow, a novel cognitive-inspired three-stage framework that incorporates a knowledge internalization stage, explicitly simulating the hierarchical flow of human reasoning: perception $\Rightarrow$ internalization $\Rightarrow$ reasoning. In line with this hierarchical flow, we holistically enhance all its stages. We devise Synergistic Visual Rewards to boost perception capabilities in parametric and semantic spaces, jointly improving visual information extraction from symbols and diagrams. To guarantee faithful integration of extracted visual cues into subsequent reasoning, we introduce a Knowledge Internalization Reward model in the internalization stage, bridging perception and reasoning. Moreover, we design a Visual-Gated Policy Optimization algorithm to further ensure that reasoning is grounded in visual knowledge, preventing models from taking shortcuts in the form of reasoning chains that appear coherent but are visually ungrounded. In addition, we contribute a new dataset, MathCog, for model training, which contains samples with over 120K high-quality perception-reasoning aligned annotations. Comprehensive experiments and analysis on commonly used visual mathematical reasoning benchmarks validate the superiority of the proposed CogFlow.

C-Flat++: Towards a More Efficient and Powerful Framework for Continual Learning

Aug 26, 2025

Abstract: Balancing sensitivity to new tasks and stability for retaining past knowledge is crucial in continual learning (CL). Recently, sharpness-aware minimization has proven effective in transfer learning and has also been adopted in CL to improve memory retention and learning efficiency. However, relying on zeroth-order sharpness alone may favor sharper minima over flatter ones in certain settings, leading to less robust and potentially suboptimal solutions. In this paper, we propose Continual Flatness (C-Flat), a method that promotes flatter loss landscapes tailored for CL. C-Flat offers plug-and-play compatibility, enabling easy integration with minimal modifications to the code pipeline. We also present a general framework that integrates C-Flat into all major CL paradigms and conduct comprehensive comparisons with loss-minima optimizers and flat-minima-based CL methods. Our results show that C-Flat consistently improves performance across a wide range of settings. In addition, we introduce C-Flat++, an efficient yet effective framework that leverages selective flatness-driven promotion, significantly reducing the update cost required by C-Flat. Extensive experiments across multiple CL methods, datasets, and scenarios demonstrate the effectiveness and efficiency of our proposed approaches. Code is available at https://github.com/WanNaa/C-Flat.
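
For readers unfamiliar with the sharpness-aware minimization that this abstract builds on, the following is a minimal sketch of one generic SAM update on a toy quadratic loss. It is not the C-Flat or C-Flat++ optimizer; the function name `sam_step`, the loss, and the hyperparameters `lr` and `rho` are illustrative assumptions.

```python
import numpy as np

def sam_step(w, grad_fn, lr=0.05, rho=0.05):
    """One generic sharpness-aware update (illustrative, not C-Flat itself)."""
    g = grad_fn(w)
    # Move to the approximate worst-case point within an L2 ball of radius rho.
    eps = rho * g / (np.linalg.norm(g) + 1e-12)
    # Descend using the gradient evaluated at the perturbed weights.
    return w - lr * grad_fn(w + eps)

# Toy quadratic loss 0.5 * w^T A w (an assumption made for this sketch).
A = np.diag([1.0, 10.0])

def loss(w):
    return 0.5 * w @ A @ w

def grad(w):
    return A @ w

w = np.array([1.0, 1.0])
initial_loss = loss(w)
for _ in range(200):
    w = sam_step(w, grad)
final_loss = loss(w)
```

The gradient is taken at the perturbed point rather than at `w`, which is what biases the update toward regions where the loss stays low across a neighborhood.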

Adapt before Continual Learning

Jun 05, 2025

Abstract: Continual Learning (CL) seeks to enable neural networks to incrementally acquire new knowledge (plasticity) while retaining existing knowledge (stability). While pre-trained models (PTMs) have become pivotal in CL, prevailing approaches freeze the PTM backbone to preserve stability, limiting their plasticity, particularly when encountering significant domain gaps in incremental tasks. Conversely, sequentially finetuning the entire PTM risks catastrophic forgetting of generalizable knowledge, exposing a critical stability-plasticity trade-off. To address this challenge, we propose Adapting PTMs before the core CL process (ACL), a novel framework that refines the PTM backbone through a plug-and-play adaptation phase before learning each new task with existing CL approaches (e.g., prompt tuning). ACL enhances plasticity by aligning embeddings with their original class prototypes while distancing them from others, an objective shown theoretically and empirically to balance stability and plasticity. Extensive experiments demonstrate that ACL significantly improves CL performance across benchmarks and integrated methods, offering a versatile solution for PTM-based CL. Code is available at https://github.com/byyx666/ACL_code.
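
The idea of pulling embeddings toward their own class prototype while pushing them away from the others can be sketched as a cross-entropy over cosine similarities. This is only one plausible form of such an objective; the abstract does not state the exact ACL loss, and the function name, the `temperature` parameter, and the toy data are assumptions.

```python
import numpy as np

def prototype_alignment_loss(embeddings, labels, prototypes, temperature=0.1):
    """Cross-entropy over cosine similarities to class prototypes (illustrative)."""
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    p = prototypes / np.linalg.norm(prototypes, axis=1, keepdims=True)
    logits = z @ p.T / temperature
    # Subtract the row maximum for a numerically stable log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Average negative log-probability of each sample's own class prototype.
    return float(-log_probs[np.arange(len(labels)), labels].mean())

# Toy check: embeddings that coincide with their prototypes give a low loss.
protos = np.eye(3)
aligned = prototype_alignment_loss(protos, np.array([0, 1, 2]), protos)
misaligned = prototype_alignment_loss(protos, np.array([1, 2, 0]), protos)
```

Minimizing this quantity raises similarity to the matching prototype and lowers similarity to all others in a single term.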

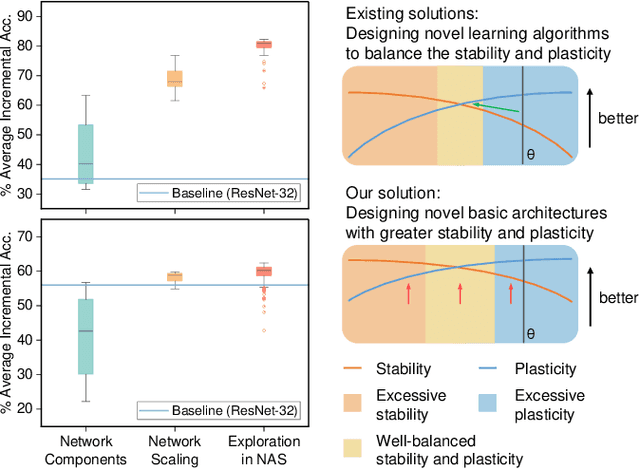

Rethinking the Stability-Plasticity Trade-off in Continual Learning from an Architectural Perspective

Jun 05, 2025

Abstract: The quest for Continual Learning (CL) seeks to empower neural networks with the ability to learn and adapt incrementally. Central to this pursuit is addressing the stability-plasticity dilemma, which involves striking a balance between two conflicting objectives: preserving previously learned knowledge and acquiring new knowledge. While numerous CL methods aim to achieve this trade-off, they often overlook the impact of network architecture on stability and plasticity, restricting the trade-off to the parameter level. In this paper, we delve into the conflict between stability and plasticity at the architectural level. We reveal that under an equal parameter constraint, deeper networks exhibit better plasticity, while wider networks are characterized by superior stability. To address this architectural-level dilemma, we introduce a novel framework denoted Dual-Arch, which serves as a plug-in component for CL. This framework leverages the complementary strengths of two distinct and independent networks: one dedicated to plasticity and the other to stability. Each network is designed with a specialized and lightweight architecture, tailored to its respective objective. Extensive experiments demonstrate that Dual-Arch enhances the performance of existing CL methods while being up to 87% more compact in terms of parameters. Code: https://github.com/byyx666/Dual-Arch.
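
To make the "equal parameter constraint" concrete, the sketch below counts the parameters of two fully connected networks, one wide and shallow, one narrow and deep, chosen so their totals nearly match. The use of plain MLPs and the specific layer sizes are assumptions for illustration only; the abstract does not say which architectures were compared.

```python
def mlp_param_count(in_dim, hidden, out_dim):
    """Weights plus biases of a fully connected network (illustrative helper)."""
    dims = [in_dim, *hidden, out_dim]
    return sum(a * b + b for a, b in zip(dims, dims[1:]))

# One wide hidden layer versus three narrow ones, at a similar budget.
wide = mlp_param_count(32, [256], 10)         # 11018 parameters
deep = mlp_param_count(32, [64, 64, 64], 10)  # 11082 parameters
```

The two budgets differ by well under 1%, so any difference in behavior between such a pair would come from shape rather than size.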

Position: Continual Learning Benefits from An Evolving Population over An Unified Model

Feb 10, 2025

Abstract: Deep neural networks have demonstrated remarkable success in machine learning; however, they remain fundamentally ill-suited for Continual Learning (CL). Recent research has increasingly focused on achieving CL without the need for rehearsal. Among these approaches, parameter isolation-based methods have proven particularly effective in enhancing CL by optimizing model weights for each incremental task. Despite their success, they fall short in optimizing architectures tailored to distinct incremental tasks. To address this limitation, updating a group of models with different architectures offers a promising alternative to the traditional CL paradigm that relies on a single unified model. Building on this insight, this study introduces a novel Population-based Continual Learning (PCL) framework. PCL extends CL to the architectural level by maintaining and evolving a population of neural network architectures, which are continually refined for the current task through neural architecture search (NAS). Importantly, the well-evolved population for the current incremental task is naturally inherited by the subsequent one, thereby facilitating forward transfer, a crucial objective in CL. Throughout the CL process, the population evolves, yielding task-specific architectures that collectively form a robust CL system. Experimental results demonstrate that PCL outperforms state-of-the-art rehearsal-free CL methods that employ a unified model, highlighting its potential as a new paradigm for CL.

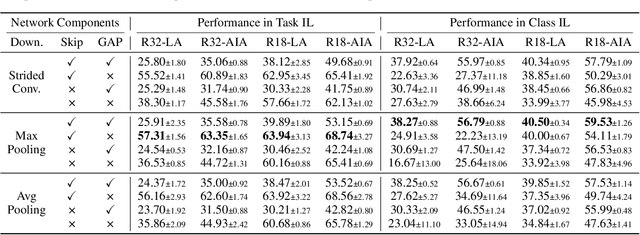

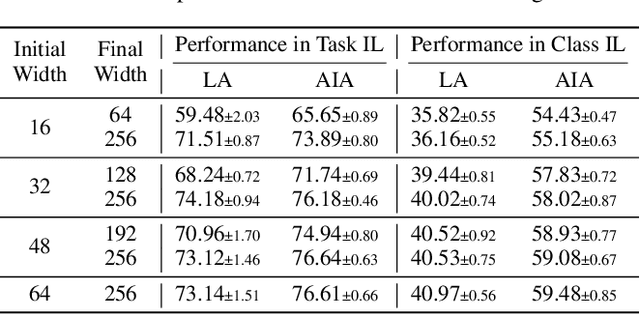

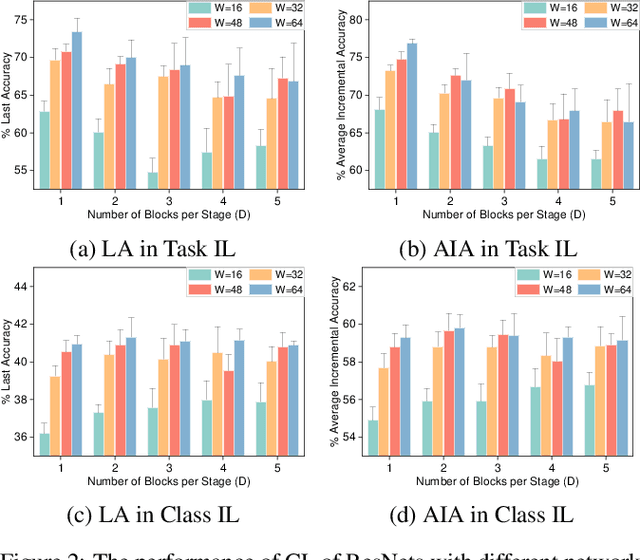

Revisiting Neural Networks for Continual Learning: An Architectural Perspective

Apr 28, 2024

Abstract: Efforts to overcome catastrophic forgetting have primarily centered around developing more effective Continual Learning (CL) methods. In contrast, less attention has been devoted to analyzing the role of network architecture design (e.g., network depth, width, and components) in contributing to CL. This paper seeks to bridge this gap between network architecture design and CL, and to present a holistic study on the impact of network architectures on CL. This work considers architecture design at the network scaling level, i.e., width and depth, and also at the network component level, i.e., skip connections, global pooling layers, and down-sampling. In both cases, we first derive insights by systematically exploring how architectural designs affect CL. Then, grounded in these insights, we craft a specialized search space for CL and further propose a simple yet effective ArchCraft method to steer toward a CL-friendly architecture; specifically, this method recrafts AlexNet/ResNet into AlexAC/ResAC. Experimental validation across various CL settings and scenarios demonstrates that the improved architectures are parameter-efficient, achieving state-of-the-art CL performance while being 86%, 61%, and 97% more compact in terms of parameters than the naive CL architecture in Task IL and Class IL. Code is available at https://github.com/byyx666/ArchCraft.

Make Continual Learning Stronger via C-Flat

Apr 01, 2024

Abstract: The ability of a model to generalize while incrementally acquiring dynamically updated knowledge from sequentially arriving tasks is crucial for tackling the sensitivity-stability dilemma in Continual Learning (CL). Minimizing the sharpness of the weight loss landscape, which seeks flat minima lying in neighborhoods with uniformly low loss or smooth gradients, has proven to be a strong training regime that improves model generalization compared with loss-minimization-based optimizers such as SGD. Yet only a few works have discussed this training regime for CL, showing that a dedicated zeroth-order sharpness optimizer can improve CL performance. In this work, we propose a Continual Flatness (C-Flat) method featuring a flatter loss landscape tailored for CL. C-Flat can be invoked with only one line of code and is plug-and-play with any CL method. A general framework of C-Flat applied to all CL categories and a thorough comparison with loss-minima optimizers and flat-minima-based CL approaches are presented in this paper, showing that our method can boost CL performance in almost all cases. Code will be publicly available upon publication.

Genetic Auto-prompt Learning for Pre-trained Code Intelligence Language Models

Mar 20, 2024

Abstract: As Pre-trained Language Models (PLMs), a popular approach for code intelligence, continue to grow in size, the computational cost of using them has become prohibitively high. Prompt learning, a recent development in the field of natural language processing, emerges as a potential solution to address this challenge. In this paper, we investigate the effectiveness of prompt learning in code intelligence tasks. We unveil its reliance on manually designed prompts, which often require significant human effort and expertise. Moreover, we discover that existing automatic prompt design methods are of limited use for code intelligence tasks due to factors including gradient dependence, high computational demands, and limited applicability. To effectively address both issues, we propose Genetic Auto Prompt (GenAP), which utilizes an elaborate genetic algorithm to automatically design prompts. With GenAP, non-experts can effortlessly generate prompts superior to meticulously hand-designed ones. GenAP operates without the need for gradients or additional computational costs, rendering it gradient-free and cost-effective. Moreover, GenAP supports both understanding and generation types of code intelligence tasks, exhibiting great applicability. We evaluate GenAP on three popular code intelligence PLMs with three canonical code intelligence tasks: defect prediction, code summarization, and code translation. The results suggest that GenAP can effectively automate the process of designing prompts. Specifically, GenAP outperforms all other methods across all three tasks (e.g., improving accuracy by an average of 2.13% for defect prediction). To the best of our knowledge, GenAP is the first work to automatically design prompts for code intelligence PLMs.
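
A gradient-free genetic search over prompt tokens can be sketched as follows. This is a generic genetic algorithm with elitism, one-point crossover, and random mutation, not GenAP's actual procedure; `evolve_prompt`, `toy_fitness`, the vocabulary, and all hyperparameters are hypothetical. In a real setting the fitness function would presumably score a prompt by the PLM's validation performance.

```python
import random

def evolve_prompt(vocab, fitness, prompt_len=4, pop_size=20,
                  generations=30, mutation_rate=0.2, seed=0):
    """Generic genetic search over token sequences (illustrative, not GenAP)."""
    rng = random.Random(seed)
    population = [[rng.choice(vocab) for _ in range(prompt_len)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]   # keep the fitter half as parents
        children = [ranked[0]]              # elitism: the best prompt survives
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, prompt_len)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Mutate each token independently with probability mutation_rate.
            child = [rng.choice(vocab) if rng.random() < mutation_rate else tok
                     for tok in child]
            children.append(child)
        population = children
    return max(population, key=fitness)

def toy_fitness(prompt):
    """Stand-in score: number of distinct target keywords in the prompt."""
    return len({"fix", "bug", "code"} & set(prompt))

vocab = ["fix", "bug", "code", "the", "a", "please", "this", "now"]
best = evolve_prompt(vocab, toy_fitness)
```

Only fitness evaluations are needed, so no gradients flow through the model; because of elitism, the best score never decreases from one generation to the next.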
