Andreas Karatzas

CarbonCall: Sustainability-Aware Function Calling for Large Language Models on Edge Devices

Apr 29, 2025

Abstract:Large Language Models (LLMs) enable real-time function calling in edge AI systems but introduce significant computational overhead, leading to high power consumption and carbon emissions. Existing methods optimize for performance while neglecting sustainability, making them inefficient for energy-constrained environments. We introduce CarbonCall, a sustainability-aware function-calling framework that integrates dynamic tool selection, carbon-aware execution, and quantized LLM adaptation. CarbonCall adjusts power thresholds based on real-time carbon intensity forecasts and switches between model variants to sustain high tokens-per-second throughput under power constraints. Experiments on an NVIDIA Jetson AGX Orin show that CarbonCall reduces carbon emissions by up to 52%, power consumption by 30%, and execution time by 30%, while maintaining high efficiency.

Ecomap: Sustainability-Driven Optimization of Multi-Tenant DNN Execution on Edge Servers

Mar 06, 2025

Abstract:Edge computing systems struggle to efficiently manage multiple concurrent deep neural network (DNN) workloads while meeting strict latency requirements, minimizing power consumption, and maintaining environmental sustainability. This paper introduces Ecomap, a sustainability-driven framework that dynamically adjusts the maximum power threshold of edge devices based on real-time carbon intensity. Ecomap incorporates the innovative use of mixed-quality models, allowing it to dynamically replace computationally heavy DNNs with lighter alternatives when latency constraints are violated, ensuring service responsiveness with minimal accuracy loss. Additionally, it employs a transformer-based estimator to guide efficient workload mappings. Experimental results using NVIDIA Jetson AGX Xavier demonstrate that Ecomap reduces carbon emissions by an average of 30% and achieves a 25% lower carbon delay product (CDP) compared to state-of-the-art methods, while maintaining comparable or better latency and power efficiency.

Multi-Agent Geospatial Copilots for Remote Sensing Workflows

Jan 27, 2025

Abstract:We present GeoLLM-Squad, a geospatial Copilot that introduces the novel multi-agent paradigm to remote sensing (RS) workflows. Unlike existing single-agent approaches that rely on monolithic large language models (LLMs), GeoLLM-Squad separates agentic orchestration from geospatial task-solving by delegating RS tasks to specialized sub-agents. Built on the open-source AutoGen and GeoLLM-Engine frameworks, our work enables the modular integration of diverse applications, spanning urban monitoring, forestry protection, climate analysis, and agriculture studies. Our results demonstrate that while single-agent systems struggle to scale with increasing RS task complexity, GeoLLM-Squad maintains robust performance, achieving a 17% improvement in agentic correctness over state-of-the-art baselines. Our findings highlight the potential of multi-agent AI in advancing RS workflows.

RankMap: Priority-Aware Multi-DNN Manager for Heterogeneous Embedded Devices

Nov 26, 2024

Abstract:Modern edge data centers simultaneously handle multiple Deep Neural Networks (DNNs), leading to significant challenges in workload management. Thus, current management systems must leverage the architectural heterogeneity of new embedded systems to efficiently handle multi-DNN workloads. This paper introduces RankMap, a priority-aware manager specifically designed for multi-DNN tasks on heterogeneous embedded devices. RankMap addresses the extensive solution space of multi-DNN mapping through stochastic space exploration combined with a performance estimator. Experimental results show that RankMap achieves 3.6x higher average throughput compared to existing methods, while preventing DNN starvation under heavy workloads and improving the prioritization of specified DNNs by 57.5x.

Less is More: Optimizing Function Calling for LLM Execution on Edge Devices

Nov 23, 2024

Abstract:The advanced function-calling capabilities of foundation models open up new possibilities for deploying agents to perform complex API tasks. However, managing large amounts of data and interacting with numerous APIs makes function calling hardware-intensive and costly, especially on edge devices. Current Large Language Models (LLMs) struggle with function calling at the edge because they cannot handle complex inputs or manage multiple tools effectively. This results in low task-completion accuracy, increased delays, and higher power consumption. In this work, we introduce Less-is-More, a novel fine-tuning-free function-calling scheme for dynamic tool selection. Our approach is based on the key insight that selectively reducing the number of tools available to LLMs significantly improves their function-calling performance, execution time, and power efficiency on edge devices. Experimental results with state-of-the-art LLMs on edge hardware show agentic success rate improvements, with execution time reduced by up to 70% and power consumption by up to 40%.

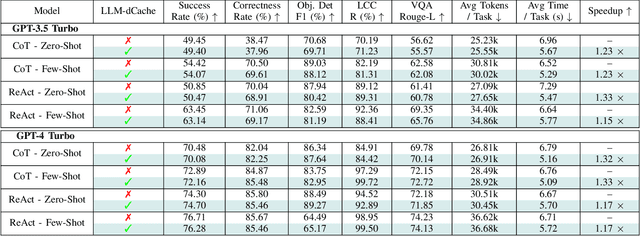

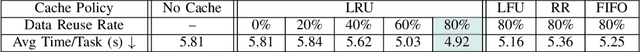

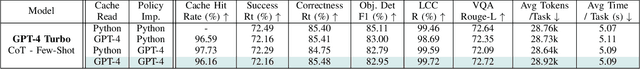

LLM-dCache: Improving Tool-Augmented LLMs with GPT-Driven Localized Data Caching

Jun 10, 2024

Abstract:As Large Language Models (LLMs) broaden their capabilities to manage thousands of API calls, they are confronted with complex data operations across vast datasets with significant overhead to the underlying system. In this work, we introduce LLM-dCache to optimize data accesses by treating cache operations as callable API functions exposed to the tool-augmented agent. We grant LLMs the autonomy to manage cache decisions via prompting, seamlessly integrating with existing function-calling mechanisms. Tested on an industry-scale massively parallel platform that spans hundreds of GPT endpoints and terabytes of imagery, our method improves Copilot times by an average of 1.24x across various LLMs and prompting techniques.

Hardware-Aware DNN Compression via Diverse Pruning and Mixed-Precision Quantization

Dec 23, 2023

Abstract:Deep Neural Networks (DNNs) have shown significant advantages in a wide variety of domains. However, DNNs are becoming computationally intensive and energy-hungry at an exponential pace, while at the same time there is vast demand for running sophisticated DNN-based services on resource-constrained embedded devices. In this paper, we target energy-efficient inference on embedded DNN accelerators. To that end, we propose an automated framework to compress DNNs in a hardware-aware manner by jointly employing pruning and quantization. We explore, for the first time, per-layer fine- and coarse-grained pruning in the same DNN architecture, in addition to low bit-width mixed-precision quantization for weights and activations. Reinforcement Learning (RL) is used to explore the associated design space and identify the pruning-quantization configuration that minimizes energy consumption while keeping the prediction accuracy loss at acceptable levels. Using our novel composite RL agent, we are able to extract energy-efficient solutions without requiring retraining and/or fine-tuning. Our extensive experimental evaluation over widely used DNNs and the CIFAR-10/100 and ImageNet datasets demonstrates that our framework achieves 39% average energy reduction for 1.7% average accuracy loss and significantly outperforms state-of-the-art approaches.

OmniBoost: Boosting Throughput of Heterogeneous Embedded Devices under Multi-DNN Workload

Jul 06, 2023

Abstract:Modern Deep Neural Networks (DNNs) exhibit profound efficiency and accuracy properties. This has introduced application workloads that comprise multiple DNN applications, raising new challenges regarding workload distribution. Equipped with a diverse set of accelerators, newer embedded systems present architectural heterogeneity, which current run-time controllers are unable to fully utilize. To enable high throughput in multi-DNN workloads, such a controller ought to explore hundreds of thousands of possible solutions to exploit the underlying heterogeneity. In this paper, we propose OmniBoost, a lightweight and extensible multi-DNN manager for heterogeneous embedded devices. We combine stochastic space exploration with a highly accurate performance estimator and observe a 4.6x average throughput boost compared to other state-of-the-art methods. The evaluation was performed on the HiKey970 development board.
