Amirali Abdolrashidi

Transferable Graph Optimizers for ML Compilers

Oct 21, 2020

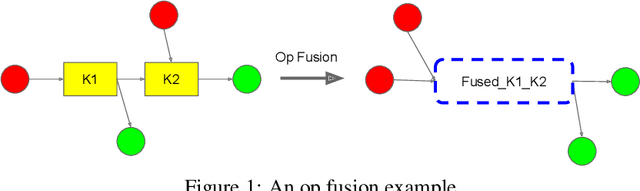

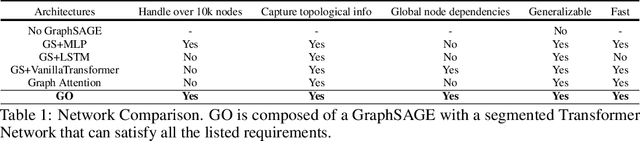

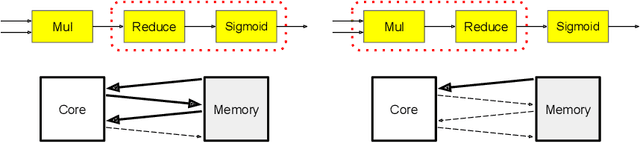

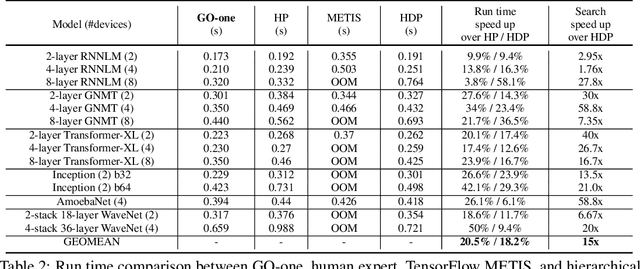

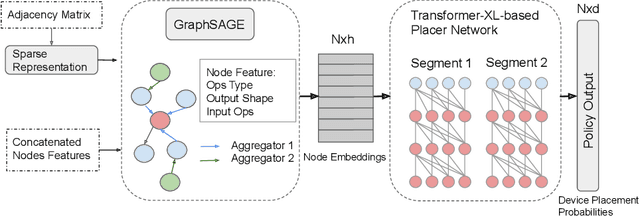

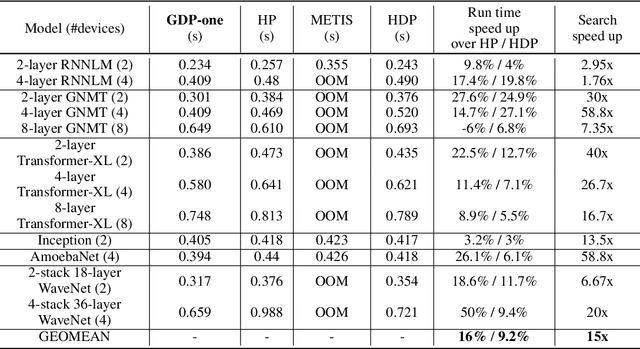

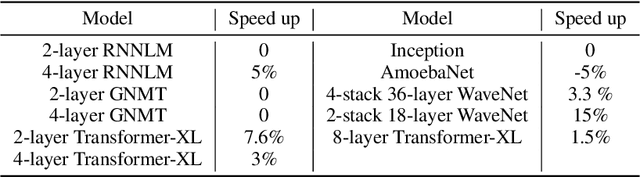

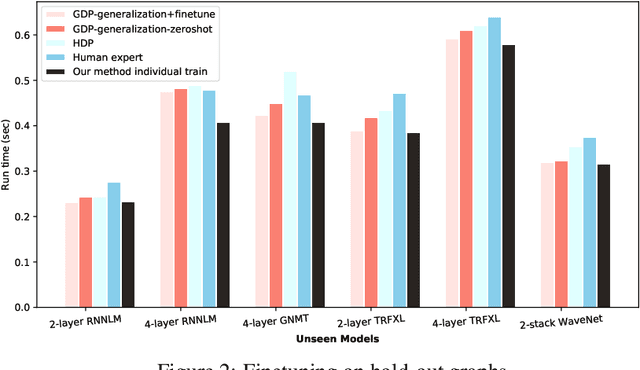

Abstract: Most compilers for machine learning (ML) frameworks need to solve many correlated optimization problems to generate efficient machine code. Current ML compilers rely on heuristics-based algorithms to solve these optimization problems one at a time. However, this approach is not only hard to maintain but also often leads to sub-optimal solutions, especially for newer model architectures. Existing learning-based approaches in the literature are sample inefficient, tackle a single optimization problem, and do not generalize to unseen graphs, making them infeasible to deploy in practice. To address these limitations, we propose an end-to-end, transferable deep reinforcement learning method for computational graph optimization (GO), based on a scalable sequential attention mechanism over an inductive graph neural network. GO generates decisions on the entire graph rather than on each individual node autoregressively, drastically speeding up the search compared to prior methods. Moreover, we propose recurrent attention layers to jointly optimize dependent graph optimization tasks and demonstrate 33%-60% speedup on three graph optimization tasks compared to TensorFlow default optimization. On a diverse set of representative graphs consisting of up to 80,000 nodes, including Inception-v3, Transformer-XL, and WaveNet, GO achieves on average 21% improvement over human experts and 18% improvement over the prior state of the art with 15x faster convergence, on a device placement task evaluated in real systems.

* arXiv admin note: text overlap with arXiv:1910.01578

Age and Gender Prediction From Face Images Using Attentional Convolutional Network

Oct 08, 2020

Abstract: Automatic prediction of age and gender from face images has drawn a lot of attention recently, due to its wide applications in various facial analysis problems. However, due to the large intra-class variation of face images (such as variation in lighting, pose, scale, and occlusion), existing models still fall short of the accuracy required for real-world applications. In this work, we propose a deep learning framework, based on an ensemble of attentional and residual convolutional networks, to predict the gender and age group of facial images with high accuracy. The attention mechanism enables our model to focus on the important and informative parts of the face, which helps it make more accurate predictions. We train our model in a multi-task learning fashion, augment the feature embedding of the age classifier with the predicted gender, and show that doing so further increases the accuracy of age prediction. Our model is trained on a popular face age and gender dataset and achieves promising results. Through visualization of the attention maps of the trained model, we show that our model has learned to become sensitive to the right regions of the face.

Palm-GAN: Generating Realistic Palmprint Images Using Total-Variation Regularized GAN

Mar 21, 2020

Abstract: Generating realistic palmprint (more generally, biometric) images has always been an interesting and, at the same time, challenging problem. Classical statistical models fail to generate realistic-looking palmprint images, as they are not powerful enough to capture the complicated texture representation of palmprint images. In this work, we present a deep learning framework based on generative adversarial networks (GAN), which is able to generate realistic palmprint images. To help the model learn more realistic images, we propose adding a suitable regularization to the loss function, which imposes line connectivity on the generated palmprint images. This is very desirable for palmprints, as the principal lines in the palm are usually connected. We apply this framework to a popular palmprint database, and generate images which look very realistic and similar to the samples in this database. Through experimental results, we show that the generated palmprint images look very realistic, have good diversity, and are able to capture different parts of the prior distribution. We also report the Frechet Inception distance (FID) of the proposed model, and show that our model achieves strong quantitative performance in terms of FID score.
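The abstract does not spell out the regularizer, but the title names total variation. As a rough illustration only, the sketch below computes an anisotropic total-variation penalty on a 2-D grayscale image and adds it to an adversarial loss. The function names, the `tv_weight` parameter, and the choice of the anisotropic form are assumptions made for this example, not details taken from the paper.

```python
import numpy as np


def total_variation(img: np.ndarray) -> float:
    """Anisotropic total variation of a 2-D grayscale image.

    Sums the absolute differences between vertically and horizontally
    adjacent pixels. Smooth, connected structures give a low value.
    """
    vertical = np.abs(np.diff(img, axis=0)).sum()
    horizontal = np.abs(np.diff(img, axis=1)).sum()
    return float(vertical + horizontal)


def regularized_generator_loss(adv_loss: float,
                               fake_img: np.ndarray,
                               tv_weight: float = 1e-4) -> float:
    """Hypothetical generator loss: adversarial term plus a weighted TV penalty.

    `tv_weight` is an illustrative hyperparameter, not a value from the paper.
    """
    return adv_loss + tv_weight * total_variation(fake_img)


# A flat image has no variation; a single vertical edge across 4 rows has TV 4.
flat = np.zeros((4, 4))
step = np.zeros((4, 4))
step[:, 2:] = 1.0
```

In a real GAN the penalty would be computed on the generator's output tensor inside an automatic-differentiation framework so that gradients flow through it; NumPy is used here only to keep the arithmetic visible.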

Biometric Recognition Using Deep Learning: A Survey

Nov 30, 2019

Abstract: Deep learning-based models have been very successful in achieving state-of-the-art results in many computer vision, speech recognition, and natural language processing tasks in the last few years. These models seem a natural fit for handling the ever-increasing scale of biometric recognition problems, from cellphone authentication to airport security systems. Deep learning-based models have increasingly been leveraged to improve the accuracy of different biometric recognition systems in recent years. In this work, we provide a comprehensive survey of more than 120 promising works on biometric recognition (including face, fingerprint, iris, palmprint, ear, voice, signature, and gait recognition) that deploy deep learning models, and show their strengths and potential in different applications. For each biometric, we first introduce the available datasets that are widely used in the literature and their characteristics. We then discuss several promising deep learning works developed for that biometric, and show their performance on popular public benchmarks. We also discuss some of the main challenges in using these models for biometric recognition, and possible future directions for research in this area.

GDP: Generalized Device Placement for Dataflow Graphs

Sep 28, 2019

Abstract: Runtime and scalability of large neural networks can be significantly affected by the placement of operations in their dataflow graphs on suitable devices. With increasingly complex neural network architectures and heterogeneous device characteristics, finding a reasonable placement is extremely challenging even for domain experts. Most existing automated device placement approaches are impractical due to the significant amount of compute required and their inability to generalize to new, previously held-out graphs. To address both limitations, we propose an efficient end-to-end method based on a scalable sequential attention mechanism over a graph neural network that is transferable to new graphs. On a diverse set of representative deep learning models, including Inception-v3, AmoebaNet, Transformer-XL, and WaveNet, our method achieves on average 16% improvement over human experts and 9.2% improvement over the prior art with 15 times faster convergence. To further reduce the computation cost, we pre-train the policy network on a set of dataflow graphs and use a superposition network to fine-tune it on each individual graph, achieving state-of-the-art performance on large hold-out graphs with over 50k nodes, such as an 8-layer GNMT.

FingerNet: Pushing The Limits of Fingerprint Recognition Using Convolutional Neural Network

Jul 28, 2019

Abstract: Fingerprint recognition has been utilized for cellphone authentication, airport security, and beyond. Many different features and algorithms have been proposed to improve fingerprint recognition. In this paper, we propose an end-to-end deep learning framework for fingerprint recognition using convolutional neural networks (CNNs), which can jointly learn the feature representation and perform recognition. We train our model on a large-scale fingerprint recognition dataset, and improve over previous approaches in terms of accuracy. Our proposed model is able to achieve a very high recognition accuracy on a well-known fingerprint dataset. We believe this framework can be widely used for biometric recognition tasks, making more scalable and accurate systems possible. We also use a visualization technique to highlight the areas in an input fingerprint image that most affect the recognition results.

DeepIris: Iris Recognition Using A Deep Learning Approach

Jul 22, 2019

Abstract: Iris recognition has been an active research area during the last few decades because of its wide applications in security, from airports to homeland security border control. Different features and algorithms have been proposed for iris recognition in the past. In this paper, we propose an end-to-end deep learning framework for iris recognition based on a residual convolutional neural network (CNN), which can jointly learn the feature representation and perform recognition. We train our model on a well-known iris recognition dataset using only a few training images from each class, and show promising results and improvements over previous approaches. We also present a visualization technique that is able to detect the areas in iris images that most affect the recognition results. We believe this framework can be widely used for other biometric recognition tasks, helping to build more scalable and accurate systems.

Deep-Emotion: Facial Expression Recognition Using Attentional Convolutional Network

Feb 04, 2019

Abstract: Facial expression recognition has been an active research area over the past few decades, and it is still challenging due to the high intra-class variation. Traditional approaches for this problem rely on hand-crafted features such as SIFT, HOG, and LBP, followed by a classifier trained on a database of images or videos. Most of these works perform reasonably well on datasets of images captured under controlled conditions, but fail to perform as well on more challenging datasets with more image variation and partial faces. In recent years, several works have proposed end-to-end frameworks for facial expression recognition using deep learning models. Despite the better performance of these works, there still seems to be considerable room for improvement. In this work, we propose a deep learning approach based on an attentional convolutional network, which is able to focus on important parts of the face, and achieves significant improvement over previous models on multiple datasets, including FER-2013, CK+, FERG, and JAFFE. We also use a visualization technique that is able to find important face regions for detecting different emotions, based on the classifier's output. Through experimental results, we show that different emotions seem to be sensitive to different parts of the face.

Iris-GAN: Learning to Generate Realistic Iris Images Using Convolutional GAN

Dec 25, 2018

Abstract: Generating iris images which look realistic is both an interesting and challenging problem. Most of the classical statistical models are not powerful enough to capture the complicated texture representation in iris images, and therefore fail to generate iris images which look realistic. In this work, we present a machine learning framework based on a generative adversarial network (GAN), which is able to generate iris images sampled from a prior distribution (learned from a set of training images). We apply this framework to two popular iris databases, and generate images which look very realistic and similar to the image distribution in those databases. Through experimental results, we show that the generated iris images have good diversity, and are able to capture different parts of the prior distribution.

Finger-GAN: Generating Realistic Fingerprint Images Using Connectivity Imposed GAN

Dec 25, 2018

Abstract: Generating realistic biometric images has been an interesting and, at the same time, challenging problem. Classical statistical models fail to generate realistic-looking fingerprint images, as they are not powerful enough to capture the complicated texture representation in fingerprint images. In this work, we present a machine learning framework based on generative adversarial networks (GAN), which is able to generate fingerprint images sampled from a prior distribution (learned from a set of training images). We also add a suitable regularization term to the loss function to impose connectivity on the generated fingerprint images. This is highly desirable for fingerprints, as the lines in each finger are usually connected. We apply this framework to two popular fingerprint databases, and generate images which look very realistic and similar to the samples in those databases. Through experimental results, we show that the generated fingerprint images have good diversity, and are able to capture different parts of the prior distribution. We also evaluate the Frechet Inception distance (FID) of our proposed model, and show that our model is able to achieve good quantitative performance in terms of this score.
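For reference, the Frechet Inception distance mentioned in the abstract is commonly defined as follows. The symbols are notation chosen for this note rather than taken from the paper: $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ denote the mean and covariance of Inception-network features computed over real and generated images, respectively.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

Lower values indicate that the generated feature distribution is closer to the real one; the score is zero when both means and covariances match.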
