Allen T. Newton

Inter-Scanner Harmonization of High Angular Resolution DW-MRI using Null Space Deep Learning

Oct 09, 2018

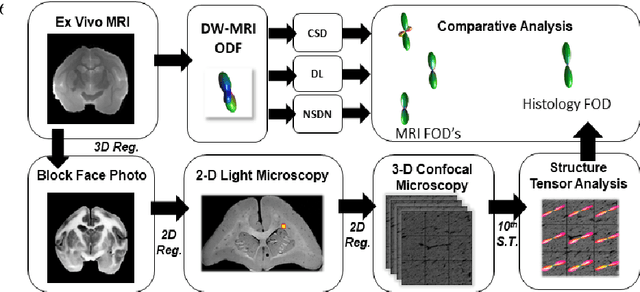

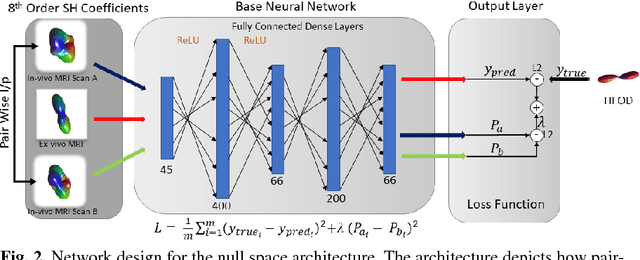

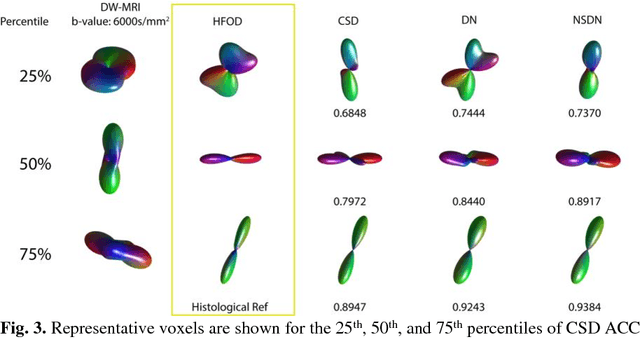

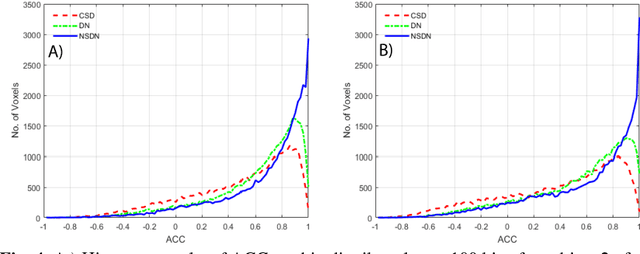

Abstract: Diffusion-weighted magnetic resonance imaging (DW-MRI) allows for non-invasive imaging of the local fiber architecture of the human brain at a millimetric scale. Multiple classical approaches have been proposed to detect both single (e.g., tensors) and multiple (e.g., constrained spherical deconvolution, CSD) fiber population orientations per voxel. However, existing techniques generally exhibit low reproducibility across MRI scanners. Herein, we propose a data-driven technique using a neural network design which exploits two categories of data. First, training data were acquired on three squirrel monkey brains using ex-vivo DW-MRI and histology of the brain. Second, repeated scans of human subjects were acquired on two different scanners to augment the learning of the network proposed. To use these data, we propose a new network architecture, the null space deep network (NSDN), to simultaneously learn on traditional observed/truth pairs (e.g., MRI-histology voxels) along with repeated observations without a known truth (e.g., scan-rescan MRI). The NSDN was tested on twenty percent of the histology voxels that were kept completely blind to the network. NSDN significantly improved absolute performance relative to histology by 3.87% over CSD and 1.42% over a recently proposed deep neural network approach. Moreover, it improved reproducibility on the paired data by 21.19% over CSD and 10.09% over a recently proposed deep approach. Finally, NSDN improved generalizability of the model to a third in vivo human scanner (which was not used in training) by 16.08% over CSD and 10.41% over a recently proposed deep learning approach. This work suggests that data-driven approaches for local fiber reconstruction are more reproducible, informative and precise and offers a novel, practical method for determining these models.
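The abstract does not spell out the NSDN objective, so the following is only a minimal sketch of one way to learn from both kinds of data at once, not the authors' implementation. It assumes a supervised error on observed/truth pairs plus a penalty on disagreement between predictions for repeated scans of the same subject. The names `combined_loss`, `labeled_pairs`, `repeat_pairs`, and `weight` are hypothetical.

```python
def mse(a, b):
    """Mean squared error between two equal-length sequences of floats."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def combined_loss(model, labeled_pairs, repeat_pairs, weight=1.0):
    """Hypothetical two-term objective (an assumption, not the paper's loss).

    labeled_pairs: list of (observation, truth), e.g. MRI voxel and histology.
    repeat_pairs:  list of (scan, rescan) observations with no known truth.
    """
    # Supervised term: prediction error against the known truth.
    supervised = sum(mse(model(x), y) for x, y in labeled_pairs)
    supervised /= max(len(labeled_pairs), 1)

    # Reproducibility term: predictions for scan and rescan should agree.
    reproducibility = sum(mse(model(a), model(b)) for a, b in repeat_pairs)
    reproducibility /= max(len(repeat_pairs), 1)

    return supervised + weight * reproducibility
```

With an identity `model`, a perfectly labeled pair contributes zero and the result is driven entirely by the scan-rescan disagreement, which makes the role of `weight` easy to inspect.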

Learning Implicit Brain MRI Manifolds with Deep Learning

Jan 05, 2018

Abstract: An important task in image processing and neuroimaging is to extract quantitative information from the acquired images in order to make observations about the presence of disease or markers of development in populations. Having a low-dimensional manifold of an image allows for easier statistical comparisons between groups and the synthesis of group representatives. Previous studies have sought to identify the best mapping of brain MRI to a low-dimensional manifold, but have been limited by assumptions of explicit similarity measures. In this work, we use deep learning techniques to investigate implicit manifolds of normal brains and generate new, high-quality images. We explore implicit manifolds by addressing the problems of image synthesis and image denoising as important tools in manifold learning. First, we propose the unsupervised synthesis of T1-weighted brain MRI using a Generative Adversarial Network (GAN) by learning from 528 examples of 2D axial slices of brain MRI. Synthesized images were first shown to be unique by performing a cross-correlation with the training set. Real and synthesized images were then assessed in a blinded manner by two imaging experts providing an image quality score of 1-5. The quality score of the synthetic image showed substantial overlap with that of the real images. Moreover, we use an autoencoder with skip connections for image denoising, showing that the proposed method results in higher PSNR than FSL SUSAN after denoising. This work shows the power of artificial networks to synthesize realistic imaging data, which can be used to improve image processing techniques and provide a quantitative framework to structural changes in the brain.
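For readers unfamiliar with the denoising metric mentioned above, PSNR (peak signal-to-noise ratio) is defined as 10 log10(MAX^2 / MSE), where MSE is the mean squared error against a reference image. The sketch below is illustrative only: it assumes images flattened to lists of intensities scaled to [0, `max_value`], and the function name `psnr` is hypothetical rather than taken from the paper.

```python
import math


def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two flattened images."""
    if len(reference) != len(test) or not reference:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher value means the denoised image is closer to the reference, so two denoisers can be compared by evaluating each output against the same reference.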
