Alessio Fagioli

Medicinal Boxes Recognition on a Deep Transfer Learning Augmented Reality Mobile Application

Mar 26, 2022

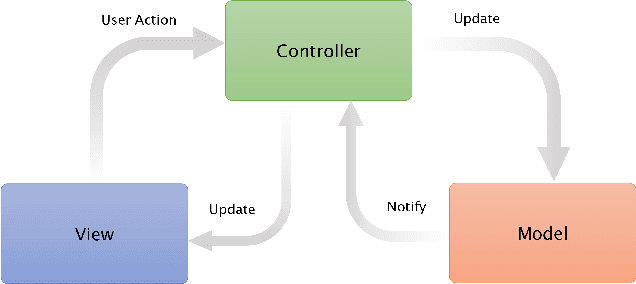

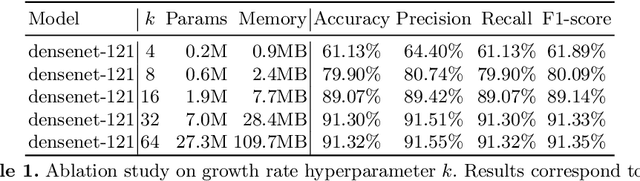

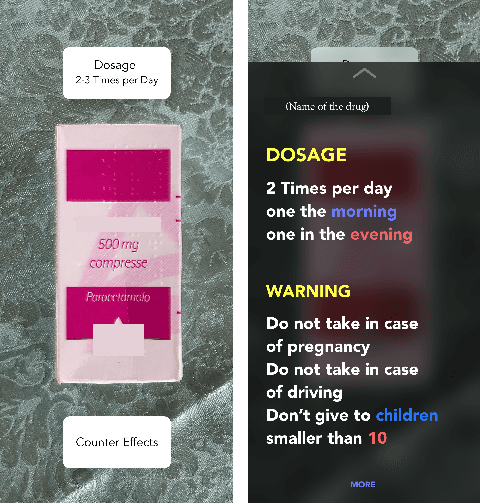

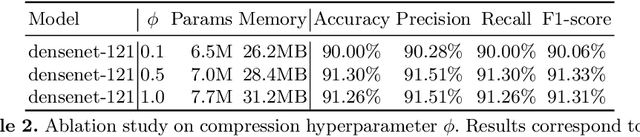

Abstract: Taking medicines is fundamental to curing illnesses. However, studies have shown that it can be hard for patients to remember the correct posology. Worse, a wrong dosage generally causes the disease to worsen. Although all relevant instructions for a medicine are summarized in the corresponding patient information leaflet, the latter is generally difficult to navigate and understand. To address this problem and help patients with their medication, in this paper we introduce an augmented reality mobile application that presents the user with important details on the framed medicine. In particular, the app implements an inference engine based on a deep neural network, i.e., a DenseNet, fine-tuned to recognize a medicine from its package. Subsequently, relevant information, such as posology or a simplified leaflet, is overlaid on the camera feed to help a patient when taking a medicine. Extensive experiments to select the best hyperparameters were performed on a dataset specifically collected to address this task, ultimately obtaining up to 91.30% accuracy as well as real-time capabilities.

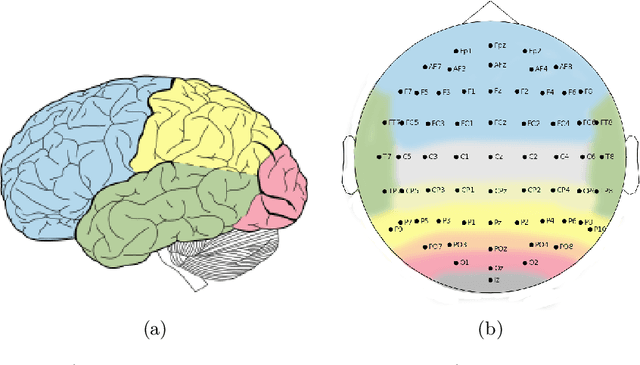

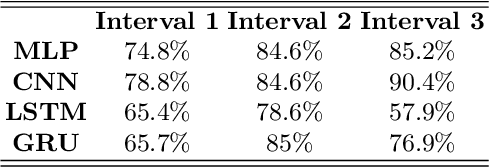

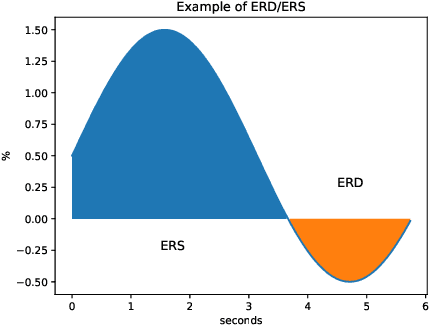

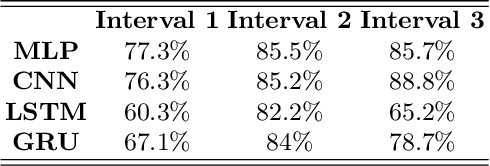

Analyzing EEG Data with Machine and Deep Learning: A Benchmark

Mar 18, 2022

Abstract: Nowadays, machine and deep learning techniques are widely used in different areas, ranging from economics to biology. In general, these techniques can be used in two ways: adapting well-known models and architectures to the available data, or designing custom architectures. In both cases, to speed up the research process, it is useful to know which types of models work best for a specific problem and/or data type. Focusing on EEG signal analysis, this paper proposes, for the first time in the literature, a benchmark of machine and deep learning for EEG signal classification. For our experiments we used the four most widespread models, i.e., multilayer perceptron, convolutional neural network, long short-term memory, and gated recurrent unit, highlighting which one can be a good starting point for developing EEG classification models.

Human Silhouette and Skeleton Video Synthesis through Wi-Fi signals

Mar 11, 2022

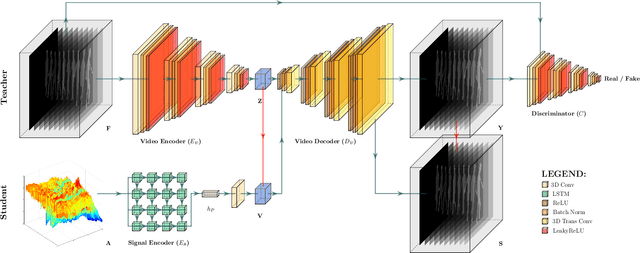

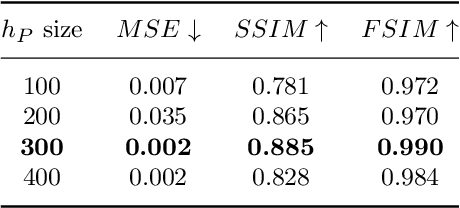

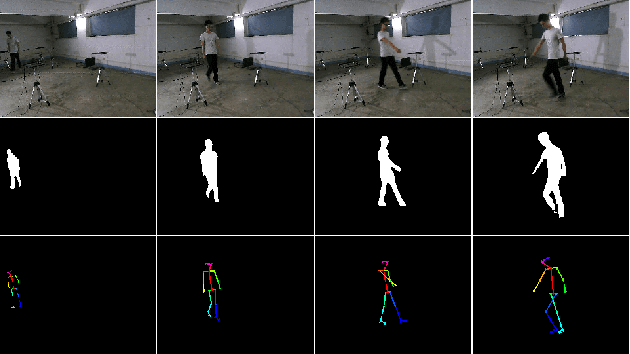

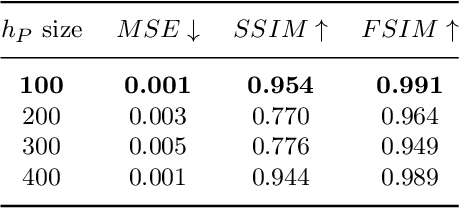

Abstract: The increasing availability of wireless access points (APs) is leading towards human sensing applications based on Wi-Fi signals as support or alternative tools to the widespread visual sensors, since such signals make it possible to address well-known vision-related problems such as illumination changes or occlusions. Indeed, using image synthesis techniques to translate radio frequencies to the visible spectrum can become essential to obtain otherwise unavailable visual data. This domain-to-domain translation is feasible because both objects and people affect electromagnetic waves, causing variations in both radio and optical frequencies. In the literature, models capable of inferring radio-to-visual feature mappings have gained momentum in the last few years, since frequency changes can be observed in the radio domain through the channel state information (CSI) of Wi-Fi APs, enabling the extraction of signal-based features, e.g., amplitude. On this account, this paper presents a novel two-branch generative neural network that effectively maps radio data into visual features, following a teacher-student design that exploits a cross-modality supervision strategy. The latter conditions signal-based features in the visual domain to completely replace visual data. Once trained, the proposed method synthesizes human silhouette and skeleton videos using exclusively Wi-Fi signals. The approach is evaluated on publicly available data, where it obtains remarkable results for both silhouette and skeleton video generation, demonstrating the effectiveness of the proposed cross-modality supervision strategy.
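The abstract mentions amplitude as a signal-based feature extracted from CSI. As a minimal sketch, assuming each CSI sample is a complex number per subcarrier (the usual representation, though the paper's exact preprocessing is not described here), amplitude and phase can be obtained as follows. The function name and the sample values are hypothetical.

```python
import cmath


def csi_amplitude_phase(csi_row):
    """Split complex CSI samples (one per subcarrier) into amplitude and phase."""
    amplitudes = [abs(h) for h in csi_row]        # magnitude of each complex sample
    phases = [cmath.phase(h) for h in csi_row]    # angle in radians, in [-pi, pi]
    return amplitudes, phases


# Hypothetical CSI values for three subcarriers (made-up numbers, not real data).
sample = [complex(3, 4), complex(0, 2), complex(-1, 0)]
amplitudes, phases = csi_amplitude_phase(sample)
print(amplitudes)
print(phases)
```

In practice, a sequence of such amplitude vectors over time would form the input to a model like the one described, but how the features are windowed or normalized is not stated in the abstract.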

Study on Transfer Learning Capabilities for Pneumonia Classification in Chest-X-Rays Image

Oct 06, 2021

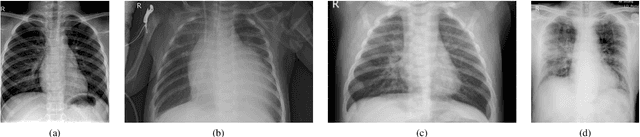

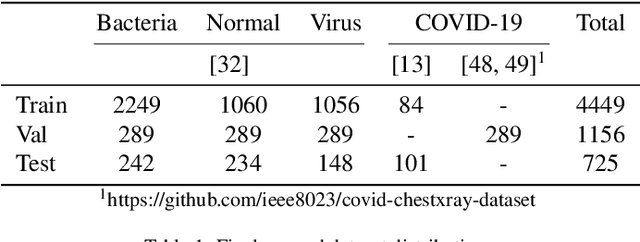

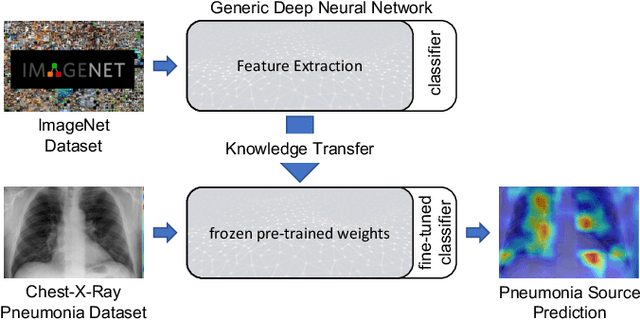

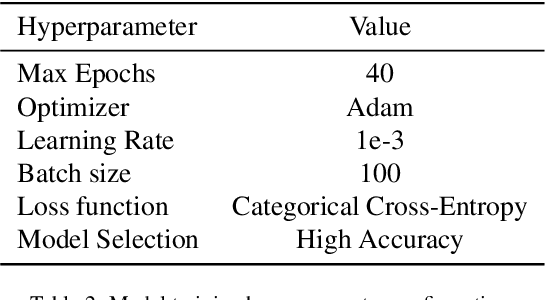

Abstract: Over the last year, the severe acute respiratory syndrome coronavirus-2 (SARS-CoV-2) and its variants have highlighted the importance of screening tools with high diagnostic accuracy for new illnesses such as COVID-19. In this regard, deep learning approaches have proven to be effective solutions for pneumonia classification, especially when considering chest X-ray images. However, this lung infection can also be caused by other viral, bacterial, or fungal pathogens. Consequently, efforts are being devoted to distinguishing the infection source to help clinicians diagnose the correct disease origin. Following this trend, this study further explores the effectiveness of established neural network architectures on the pneumonia classification task through the transfer learning paradigm. To present a comprehensive comparison, 12 well-known ImageNet pre-trained models were fine-tuned and used to discriminate among chest X-rays of healthy people and those showing pneumonia symptoms derived from either a viral (i.e., generic or SARS-CoV-2) or bacterial source. Furthermore, since a common public collection distinguishing between such categories is currently not available, two distinct datasets of chest X-ray images, describing the aforementioned sources, were combined and employed to evaluate the various architectures. The experiments were performed using a total of 6330 images split between train, validation, and test sets. For all models, common classification metrics were computed (e.g., precision, F1-score), and most architectures obtained significant performance, reaching up to 84.46% average F1-score when discriminating the 4 identified classes. Moreover, confusion matrices and activation maps computed via the Grad-CAM algorithm were also reported to present an informed discussion on the networks' classifications.
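The reported metric is an average F1-score over four classes, computed alongside confusion matrices. The sketch below shows one common way to derive such an average from a confusion matrix. It assumes macro-averaging (an unweighted mean of per-class F1), which the abstract does not confirm; the function name and the toy matrix are hypothetical.

```python
def macro_f1(confusion):
    """Macro-averaged F1 from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    f1_scores = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(confusion[k]) - tp                       # true k, predicted otherwise
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never appears.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / n


# Toy 4-class matrix with made-up counts (not the paper's results).
toy = [
    [8, 1, 1, 0],
    [2, 7, 1, 0],
    [0, 1, 9, 0],
    [0, 0, 1, 9],
]
print(round(macro_f1(toy), 4))
```

A weighted average (per-class F1 weighted by class support) would give a different number on imbalanced data, so the averaging scheme matters when comparing against published figures.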

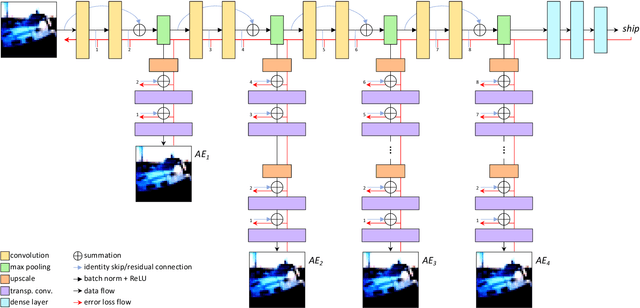

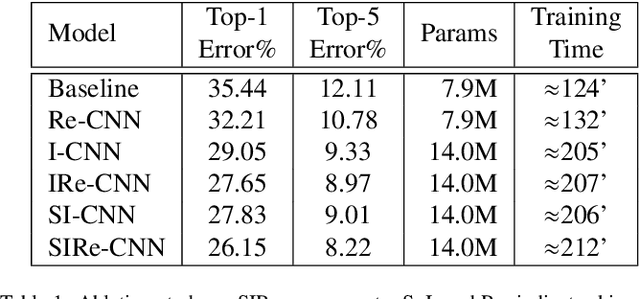

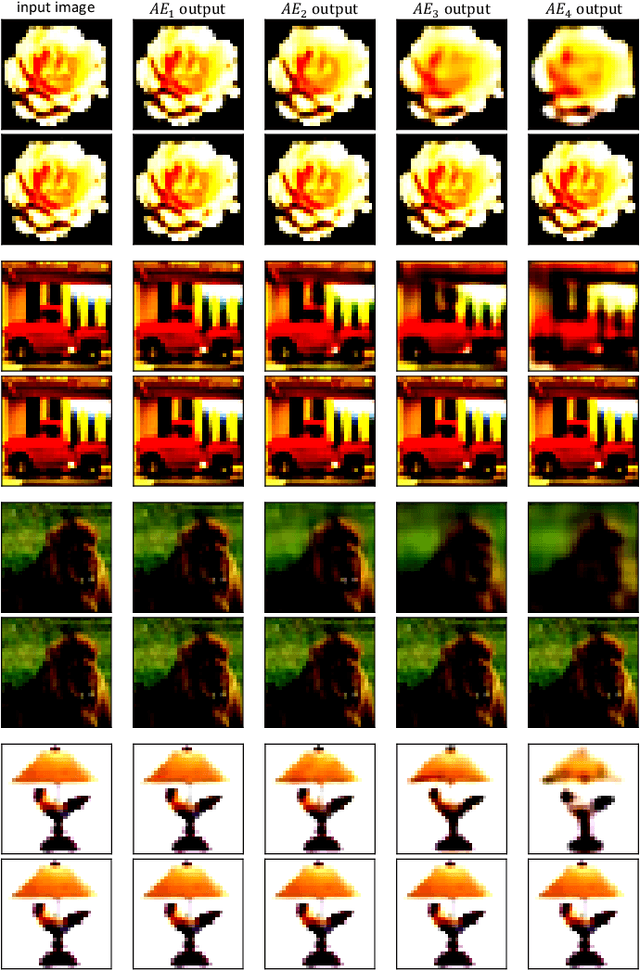

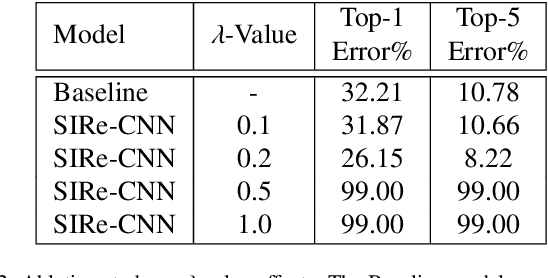

SIRe-Networks: Skip Connections over Interlaced Multi-Task Learning and Residual Connections for Structure Preserving Object Classification

Oct 06, 2021

Abstract: Improving existing neural network architectures can involve several design choices such as manipulating the loss functions, employing a diverse learning strategy, exploiting gradient evolution at training time, optimizing the network hyper-parameters, or increasing the architecture depth. The latter approach is a straightforward solution, since it directly enhances the representation capabilities of a network; however, the increased depth generally incurs the well-known vanishing gradient problem. In this paper, borrowing from different methods addressing this issue, we introduce an interlaced multi-task learning strategy, named SIRe, to reduce the vanishing gradient in relation to the object classification task. The presented methodology directly improves a convolutional neural network (CNN) by enforcing the preservation of the input image structure through interlaced auto-encoders, and further refines the base network architecture by means of skip and residual connections. To validate the presented methodology, a simple CNN and various implementations of famous networks are extended via the SIRe strategy and extensively tested on the CIFAR100 dataset, where the SIRe-extended architectures achieve significantly increased performance across all models, thus confirming the effectiveness of the presented approach.
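To see why residual connections help with vanishing gradients, consider a toy scalar chain of layers. This is only an illustration of the general mechanism, not the SIRe architecture: for a plain layer y = f(x) the local derivative is f'(x), whereas for a residual layer y = x + f(x) it is 1 + f'(x). The function names and the derivative values are hypothetical.

```python
def plain_gradient(local_derivs):
    """Gradient through a stack of plain layers: product of the local derivatives."""
    grad = 1.0
    for d in local_derivs:
        grad *= d
    return grad


def residual_gradient(local_derivs):
    """Gradient through a stack of residual layers y = x + f(x): product of (1 + f')."""
    grad = 1.0
    for d in local_derivs:
        grad *= 1.0 + d
    return grad


# Twenty layers, each with a small local derivative (made-up value).
derivs = [0.1] * 20
print(plain_gradient(derivs))     # shrinks towards zero as depth grows
print(residual_gradient(derivs))  # the identity path keeps the gradient from vanishing
```

The identity term keeps each factor away from zero when the local derivatives are small and non-negative, as here, which is the intuition behind adding skip and residual paths to deep networks.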

3D Hand Pose and Shape Estimation from RGB Images for Improved Keypoint-Based Hand-Gesture Recognition

Sep 28, 2021

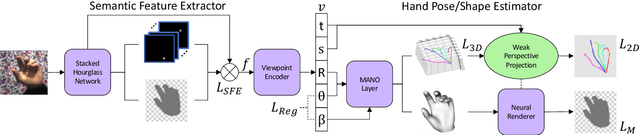

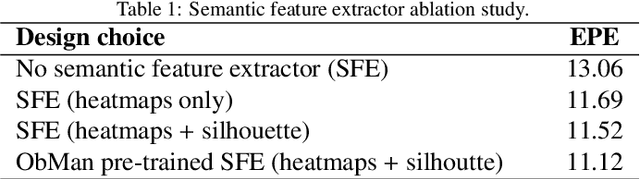

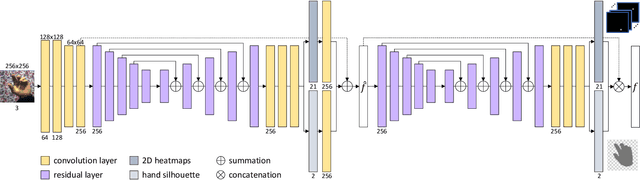

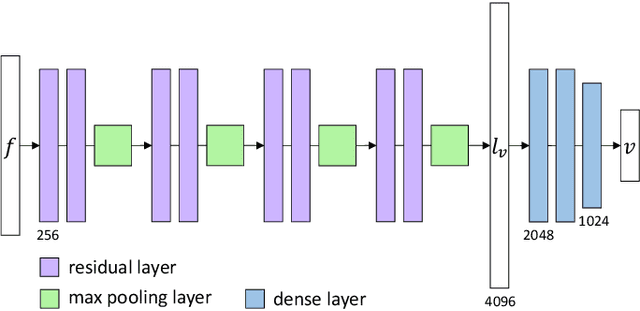

Abstract: Estimating the 3D hand pose from a 2D image is a well-studied problem and a requirement for several real-life applications such as virtual reality, augmented reality, and hand-gesture recognition. Currently, good estimations can be computed starting from single RGB images, especially when forcing the system to also consider, through a multi-task learning approach, the hand shape when the pose is determined. However, when addressing the aforementioned real-life tasks, performance can drop considerably depending on the hand representation, thus suggesting that stable descriptions are required to achieve satisfactory results. As a consequence, in this paper we present a keypoint-based end-to-end framework for 3D hand pose and shape estimation, and successfully apply it to the hand-gesture recognition task as a case study. Specifically, after a pre-processing step where the images are normalized, the proposed pipeline comprises a multi-task semantic feature extractor generating 2D heatmaps and hand silhouettes from RGB images; a viewpoint encoder predicting hand and camera view parameters; a stable hand estimator producing the 3D hand pose and shape; and a loss function designed to jointly guide all of the components during the learning phase. To assess the proposed framework, tests were performed on a 3D pose and shape estimation benchmark dataset, obtaining state-of-the-art performance. Moreover, the devised system was also evaluated on 2 hand-gesture recognition benchmark datasets, where the framework significantly outperforms other keypoint-based approaches, indicating that the presented method is an effective solution able to generate stable 3D estimates for the hand pose and shape.

Knowledge-Driven Learning via Experts Consult for Thyroid Nodule Classification

May 28, 2020

Abstract: Computer-aided diagnosis (CAD) is becoming a prominent approach to assist clinicians across multiple fields. These automated systems take advantage of various computer vision (CV) procedures, as well as artificial intelligence (AI) techniques, so that a diagnosis of a given image (e.g., computed tomography and ultrasound) can be formulated. Advances in both areas (CV and AI) are enabling ever-increasing performance of CAD systems, which can ultimately avoid performing invasive procedures such as fine-needle aspiration. In this study, we focus on thyroid ultrasonography to present a novel knowledge-driven classification framework. The proposed system leverages cues provided by an ensemble of experts in order to guide the learning phase of a densely connected convolutional network (DenseNet). The ensemble is composed of various networks pretrained on ImageNet, including AlexNet, ResNet, VGG, and others, so that previously computed feature parameters can be used to create ultrasonography domain experts via transfer learning, which also decreases the number of samples required for training. To validate the proposed method, extensive experiments were performed, providing detailed performance for both the experts ensemble and the knowledge-driven DenseNet. The obtained results show how the proposed system can become a great asset when formulating a diagnosis, by leveraging previous knowledge derived from a consult.

Deep Temporal Analysis for Non-Acted Body Affect Recognition

Jul 23, 2019

Abstract: Affective computing is a field of great interest in many computer vision applications, including video surveillance, behaviour analysis, and human-robot interaction. Most of the existing literature has addressed this field by analysing different sets of face features. However, in the last decade, several studies have shown how body movements can also play a key role in emotion recognition. The majority of these experiments on the body are performed by trained actors whose aim is to simulate emotional reactions. These unnatural expressions differ from the more challenging genuine emotions, thus invalidating the obtained results. In this paper, a solution for basic non-acted emotion recognition based on 3D skeleton and Deep Neural Networks (DNNs) is provided. The proposed work introduces three major contributions. First, unlike the current state-of-the-art in non-acted body affect recognition, where only static or global body features are considered, this work also examines the temporal local movements performed by subjects in each frame. Second, an original set of global and time-dependent features for body movement description is provided. Third, to the best of our knowledge, this is the first attempt to use deep learning methods for non-acted body affect recognition. Due to the novelty of the topic, only the UCLIC dataset is currently considered the benchmark for comparative tests. On the latter, the proposed method outperforms all the competitors.
