Alain Finkel

LMF, IUF

Text- and Feature-based Models for Compound Multimodal Emotion Recognition in the Wild

Jul 17, 2024

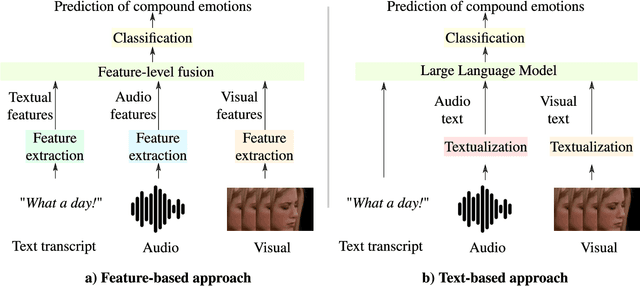

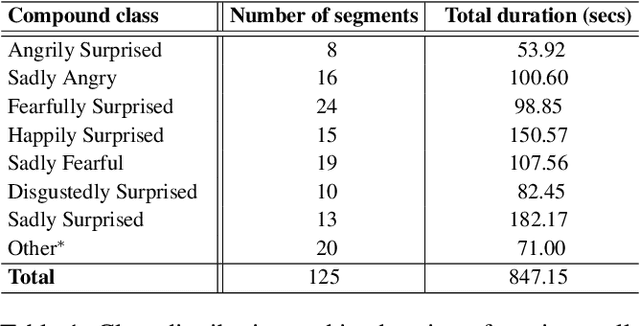

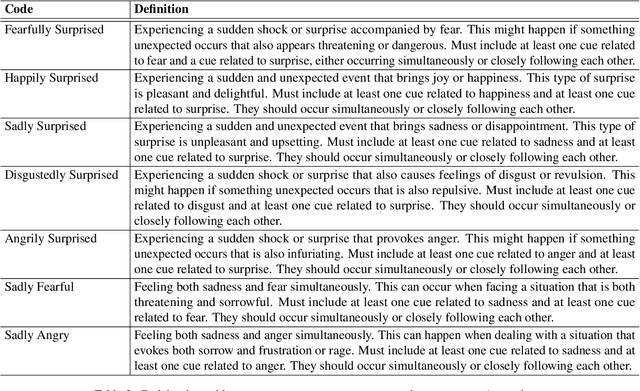

Abstract: Systems for multimodal Emotion Recognition (ER) commonly rely on features extracted from different modalities (e.g., visual, audio, and textual) to predict the seven basic emotions. However, compound emotions often occur in real-world scenarios and are more difficult to predict. Compound multimodal ER becomes more challenging in videos due to the added uncertainty of diverse modalities. In addition, standard feature-based models may not fully capture the complex and subtle cues needed to understand compound emotions. Since relevant cues can be extracted in the form of text, we advocate for textualizing all modalities, such as visual and audio, to harness the capacity of large language models (LLMs). These models may understand the complex interaction between modalities and the subtleties of complex emotions. Although training an LLM requires large-scale datasets, a recent surge of pre-trained LLMs, such as BERT and LLaMA, can be easily fine-tuned for downstream tasks like compound ER. This paper compares two multimodal modeling approaches for compound ER in videos: standard feature-based vs. text-based. Experiments were conducted on the challenging C-EXPR-DB dataset for compound ER, and contrasted with results on the MELD dataset for basic ER. Our code is available

Emotion Recognition based on Psychological Components in Guided Narratives for Emotion Regulation

May 15, 2023

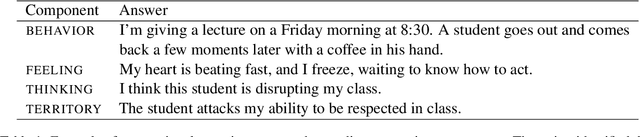

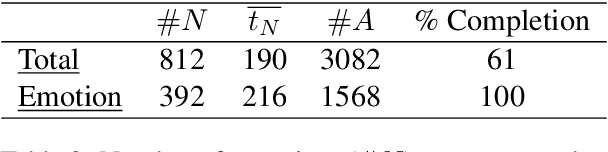

Abstract: Emotion regulation is a crucial element in dealing with emotional events and has positive effects on mental health. This paper aims to provide a more comprehensive understanding of emotional events by introducing a new French corpus of emotional narratives collected using a questionnaire for emotion regulation. We follow the theoretical framework of the Component Process Model, which considers emotions as dynamic processes composed of four interrelated components (behavior, feeling, thinking and territory). Each narrative is related to a discrete emotion and is structured by its writer according to all emotion components. We study the interaction of components and their impact on emotion classification with machine learning methods and pre-trained language models. Our results show that each component improves prediction performance, and that the best results are achieved by jointly considering all components. Our results also show the effectiveness of pre-trained language models in predicting discrete emotion from certain components, which reveals differences in how emotion components are expressed.

Natural Language Processing for Cognitive Analysis of Emotions

Oct 11, 2022

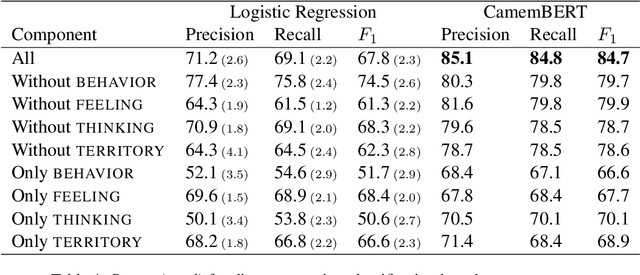

Abstract: Emotion analysis in texts suffers from two major limitations: annotated gold-standard corpora are mostly small and homogeneous, and emotion identification is often simplified as a sentence-level classification problem. To address these issues, we introduce a new annotation scheme for exploring emotions and their causes, along with a new French dataset composed of autobiographical accounts of an emotional scene. The texts were collected by applying the Cognitive Analysis of Emotions, a method developed by A. Finkel to help people improve their emotion management. The method requires the manual analysis of an emotional event by a coach trained in Cognitive Analysis. We present a rule-based approach to automatically annotate emotions and their semantic roles (e.g. emotion causes) to facilitate the identification of relevant aspects by the coach. We investigate future directions for emotion analysis using graph structures.

Property-Directed Verification of Recurrent Neural Networks

Sep 22, 2020

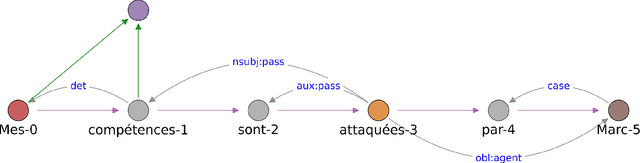

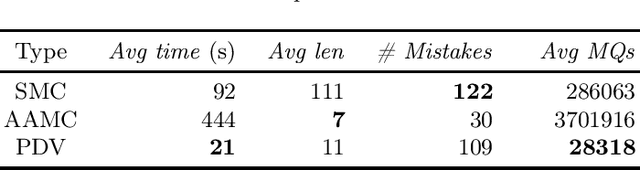

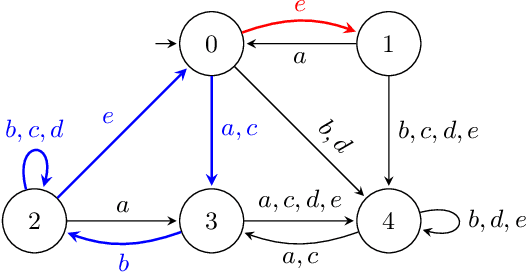

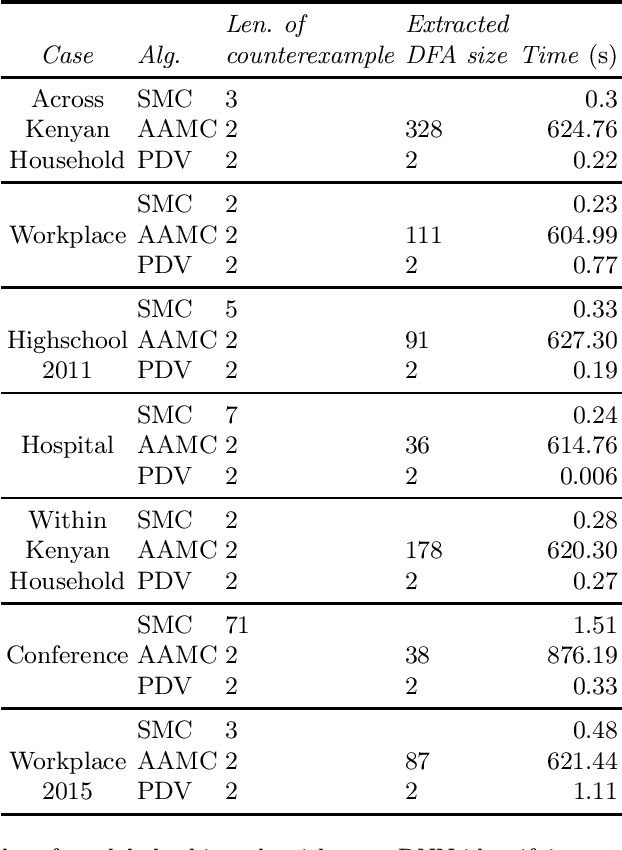

Abstract: This paper presents a property-directed approach to verifying recurrent neural networks (RNNs). To this end, we learn a deterministic finite automaton as a surrogate model from a given RNN using active automata learning. This model may then be analyzed using model checking as a verification technique. The term property-directed reflects the idea that our procedure is guided and controlled by the given property rather than performing the two steps separately. We show that this not only allows us to discover small counterexamples fast, but also to generalize them by pumping towards faulty flows hinting at the underlying error in the RNN.
