Aili Wang

Efficient ANN-Guided Distillation: Aligning Rate-based Features of Spiking Neural Networks through Hybrid Block-wise Replacement

Mar 20, 2025

Abstract:Spiking Neural Networks (SNNs) have garnered considerable attention as a potential alternative to Artificial Neural Networks (ANNs). Recent studies have highlighted SNNs' potential on large-scale datasets. For SNN training, two main approaches exist: direct training and ANN-to-SNN (ANN2SNN) conversion. To fully leverage existing ANN models in guiding SNN learning, either direct ANN-to-SNN conversion or ANN-SNN distillation training can be employed. In this paper, we propose an ANN-SNN distillation framework from the ANN-to-SNN perspective, designed with a block-wise replacement strategy for ANN-guided learning. By generating intermediate hybrid models that progressively align the SNN feature spaces to those of the ANN through rate-based features, our framework naturally incorporates rate-based backpropagation as a training method. Our approach achieves results comparable to or better than state-of-the-art SNN distillation methods, showing both training and learning efficiency.
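As a rough illustration of the block-wise replacement idea described in this abstract, the sketch below builds every hybrid model obtained by splicing student blocks into a teacher network. It is a toy under stated assumptions, not the authors' implementation: blocks are plain scalar functions standing in for network stages, the names `hybrid_forward` and `all_hybrid_outputs` are hypothetical, and the split order (student blocks first, teacher blocks after) is assumed rather than taken from the paper.

```python
from typing import Callable, List, Sequence

# A "block" here is just a function on a scalar; a real block would be a
# network stage operating on feature tensors (assumption for illustration).
Block = Callable[[float], float]


def hybrid_forward(
    student_blocks: Sequence[Block],
    teacher_blocks: Sequence[Block],
    k: int,
    x: float,
) -> float:
    """Run the first k student blocks, then the remaining teacher blocks."""
    if len(student_blocks) != len(teacher_blocks):
        raise ValueError("student and teacher must have the same number of blocks")
    if not 0 <= k <= len(student_blocks):
        raise ValueError("k must be between 0 and the number of blocks")
    for block in student_blocks[:k]:
        x = block(x)
    for block in teacher_blocks[k:]:
        x = block(x)
    return x


def all_hybrid_outputs(
    student_blocks: Sequence[Block],
    teacher_blocks: Sequence[Block],
    x: float,
) -> List[float]:
    """Outputs of every hybrid: k = 0 is the pure teacher, k = n the pure student."""
    n = len(student_blocks)
    return [hybrid_forward(student_blocks, teacher_blocks, k, x) for k in range(n + 1)]


# Toy stand-ins: the student differs from the teacher only in its second block.
teacher = [lambda v: v + 1, lambda v: v * 2, lambda v: v - 3]
student = [lambda v: v + 1, lambda v: v * 3, lambda v: v - 3]
outputs = all_hybrid_outputs(student, teacher, 1)
```

In a distillation setting, each hybrid output could be compared against the teacher's output to form a per-hybrid loss; how those losses are weighted is not stated in the abstract and is left open here.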

Efficient Distillation of Deep Spiking Neural Networks for Full-Range Timestep Deployment

Jan 27, 2025

Abstract:Spiking Neural Networks (SNNs) are emerging as a brain-inspired alternative to traditional Artificial Neural Networks (ANNs), prized for their potential energy efficiency on neuromorphic hardware. Despite this, SNNs often suffer from accuracy degradation compared to ANNs and face deployment challenges due to fixed inference timesteps, which require retraining for adjustments, limiting operational flexibility. To address these issues, our work considers the spatio-temporal property inherent in SNNs, and proposes a novel distillation framework for deep SNNs that optimizes performance across full-range timesteps without specific retraining, enhancing both efficacy and deployment adaptability. We provide both theoretical analysis and empirical validations to illustrate that training guarantees the convergence of all implicit models across full-range timesteps. Experimental results on CIFAR-10, CIFAR-100, CIFAR10-DVS, and ImageNet demonstrate state-of-the-art performance among distillation-based SNN training methods.

Improving Quantization-aware Training of Low-Precision Network via Block Replacement on Full-Precision Counterpart

Dec 20, 2024

Abstract:Quantization-aware training (QAT) is a common paradigm for network quantization, in which the training phase incorporates the simulation of the low-precision computation to optimize the quantization parameters in alignment with the task goals. However, direct training of low-precision networks generally faces two obstacles: 1. The low-precision model exhibits limited representation capabilities and cannot directly replicate full-precision calculations, which constitutes a deficiency compared to full-precision alternatives; 2. Non-ideal deviations during gradient propagation are a common consequence of employing pseudo-gradients as approximations in derived quantized functions. In this paper, we propose a general QAT framework for alleviating the aforementioned concerns by permitting the forward and backward processes of the low-precision network to be guided by the full-precision partner during training. In conjunction with the direct training of the quantization model, intermediate mixed-precision models are generated through block-by-block replacement on the full-precision model and work simultaneously with the low-precision backbone, which enables the integration of quantized low-precision blocks into full-precision networks throughout the training phase. Consequently, each quantized block is capable of: 1. simulating full-precision representation during forward passes; 2. obtaining gradients with improved estimation during backward passes. We demonstrate that the proposed method achieves state-of-the-art results for 4-, 3-, and 2-bit quantization on ImageNet and CIFAR-10. The proposed framework provides a compatible extension for most QAT methods and only requires a concise wrapper for existing code.
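To make the "simulation of low-precision computation" and the "pseudo-gradients" mentioned above concrete, here is a minimal sketch of uniform fake quantization together with a straight-through estimator (STE). The STE is a common pseudo-gradient choice and is assumed here for illustration; the abstract does not say which estimator the paper uses. The function names `fake_quantize` and `ste_grad` and the default range `[0, 1]` are hypothetical.

```python
import math


def fake_quantize(x: float, bits: int, lo: float = 0.0, hi: float = 1.0) -> float:
    """Clamp x to [lo, hi] and snap it to one of 2**bits uniformly spaced levels.

    The result is still a float, which is why this is called "fake"
    quantization: it simulates low-precision values inside float arithmetic.
    """
    if bits < 1:
        raise ValueError("bits must be at least 1")
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    steps = 2 ** bits - 1            # number of intervals between levels
    scale = (hi - lo) / steps        # width of one quantization step
    clamped = min(max(x, lo), hi)
    # floor(v + 0.5) rounds half up and avoids Python's banker's rounding.
    index = math.floor((clamped - lo) / scale + 0.5)
    return lo + index * scale


def ste_grad(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    """Straight-through estimator: treat rounding as identity in the backward
    pass, so the pseudo-gradient is 1 inside the clamp range and 0 outside."""
    return 1.0 if lo <= x <= hi else 0.0
```

With 2 bits on `[0, 1]` the representable levels are 0, 1/3, 2/3, and 1, so an input of 0.4 snaps to 1/3. The true derivative of the rounding step is zero almost everywhere, which is the mismatch that the abstract's second obstacle refers to.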

Decoupling Dark Knowledge via Block-wise Logit Distillation for Feature-level Alignment

Nov 03, 2024

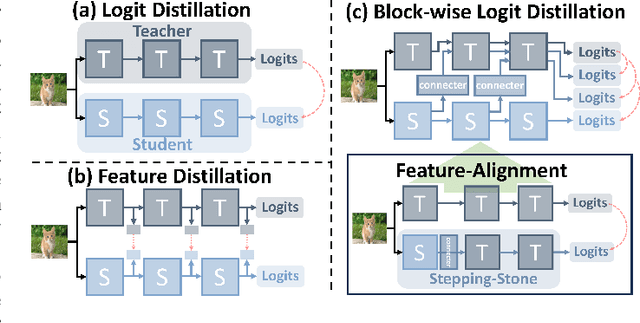

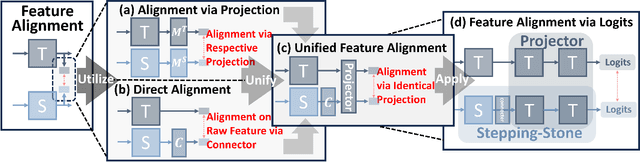

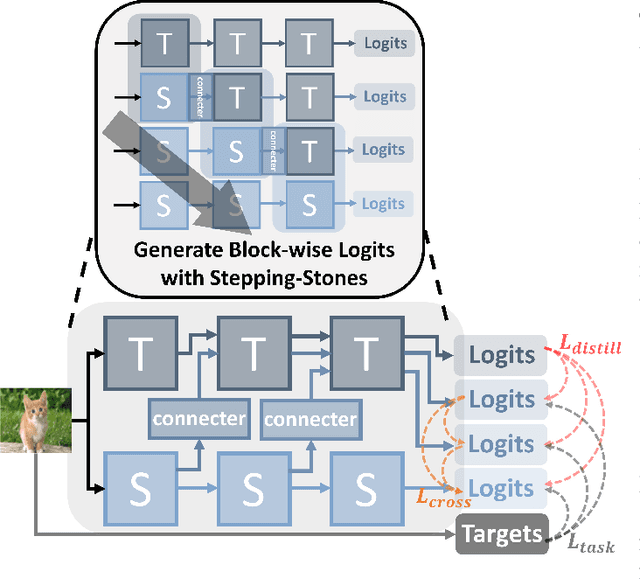

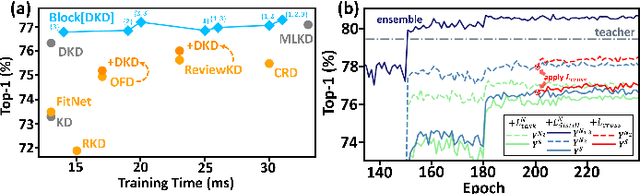

Abstract:Knowledge Distillation (KD), a learning paradigm in which a larger teacher network guides a smaller student network, transfers dark knowledge from the teacher to the student via logits or intermediate features, with the aim of producing a well-performing lightweight model. Notably, many subsequent feature-based KD methods outperformed the earliest logit-based KD method and iteratively generated numerous state-of-the-art distillation methods. Nevertheless, recent work has uncovered the potential of the logit-based method, bringing the simple KD form based on logits back into the limelight. Features or logits? Each partially implements KD from an entirely distinct perspective; therefore, choosing between logits and features is not straightforward. This paper provides a unified perspective of feature alignment in order to obtain a better comprehension of their fundamental distinction. Inheriting the design philosophy and insights of feature-based and logit-based methods, we introduce a block-wise logit distillation framework that applies implicit logit-based feature alignment by gradually replacing the teacher's blocks, using the resulting intermediate models as stepping stones to bridge the gap between the student and the teacher. Our method obtains comparable or superior results to state-of-the-art distillation methods. This paper demonstrates the great potential of combining logits and features, and we hope it will inspire future research to revisit KD from a higher vantage point.
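For readers unfamiliar with the logit-based form of KD that this abstract refers to, the following is a minimal sketch of the classic temperature-softened KL loss between teacher and student logits. It shows only the standard logit-based loss, not the block-wise framework proposed in the paper; the function names and the default temperature of 4.0 are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax of logits divided by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)                       # subtract the max for stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 4.0,
) -> float:
    """KL(teacher || student) on temperature-softened distributions.

    The T**2 factor is the usual rescaling that keeps gradient magnitudes
    comparable across temperatures. Assumes logits of moderate size so that
    no student probability underflows to zero.
    """
    if len(student_logits) != len(teacher_logits):
        raise ValueError("logit vectors must have the same length")
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return temperature ** 2 * kl
```

The loss is zero when the student reproduces the teacher's logits and grows as the two softened distributions diverge. A higher temperature flattens both distributions, exposing more of the teacher's relative preferences among non-target classes.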

Advancing Training Efficiency of Deep Spiking Neural Networks through Rate-based Backpropagation

Oct 15, 2024

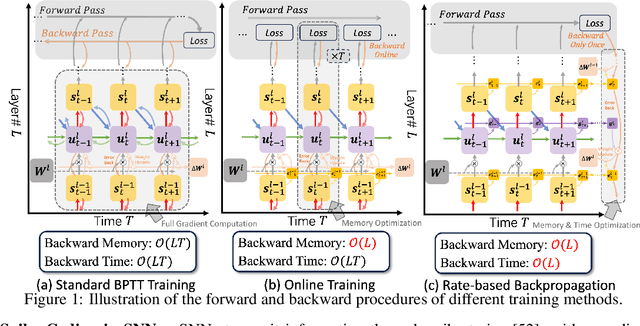

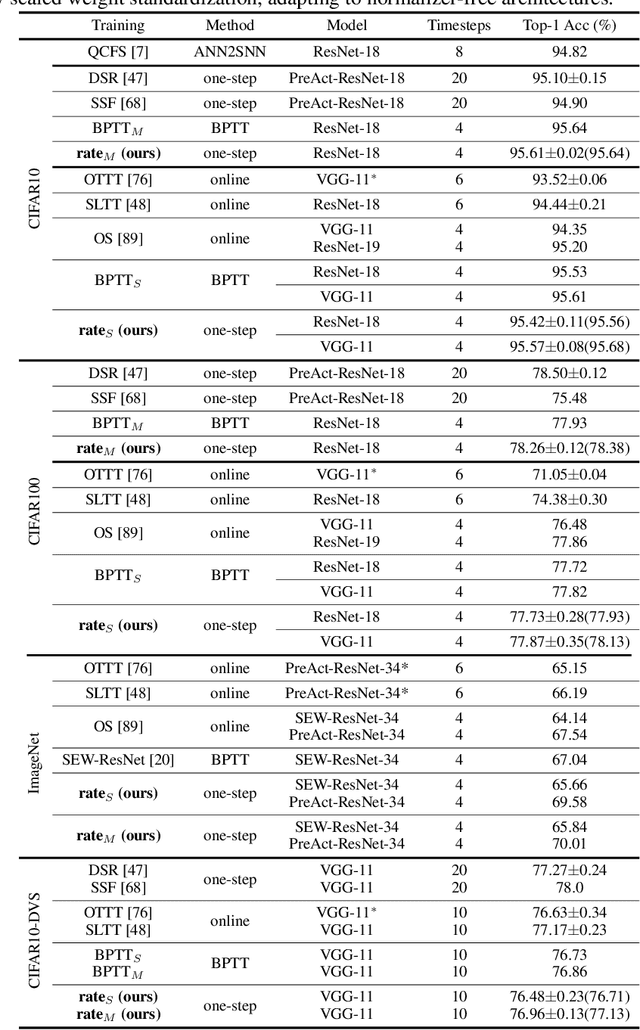

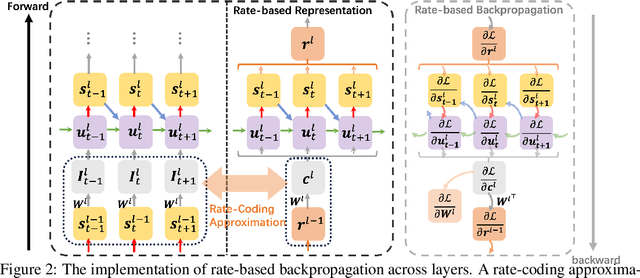

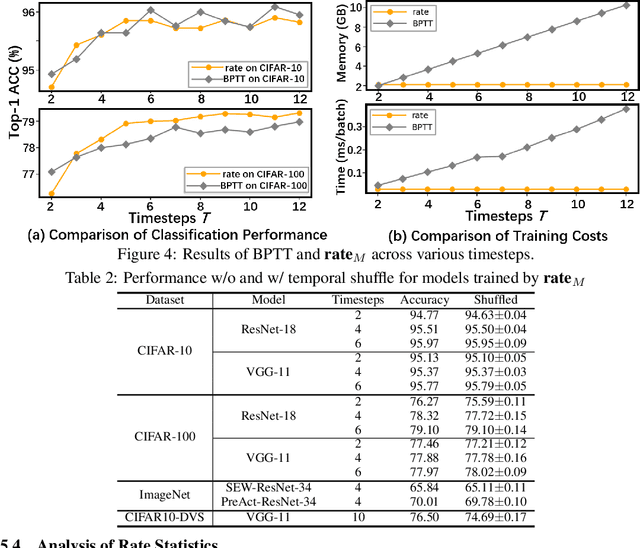

Abstract:Recent insights have revealed that rate-coding is a primary form of information representation captured by surrogate-gradient-based Backpropagation Through Time (BPTT) in training deep Spiking Neural Networks (SNNs). Motivated by these findings, we propose rate-based backpropagation, a training strategy specifically designed to exploit rate-based representations to reduce the complexity of BPTT. Our method minimizes reliance on detailed temporal derivatives by focusing on averaged dynamics, streamlining the computational graph to reduce the memory and computational demands of SNN training. We substantiate the rationality of the gradient approximation between BPTT and the proposed method through both theoretical analysis and empirical observations. Comprehensive experiments on CIFAR-10, CIFAR-100, ImageNet, and CIFAR10-DVS validate that our method achieves comparable performance to BPTT counterparts, and surpasses state-of-the-art efficient training techniques. By leveraging the inherent benefits of rate-coding, this work sets the stage for more scalable and efficient SNN training within resource-constrained environments. Our code is available at https://github.com/Tab-ct/rate-based-backpropagation.
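The notion of a rate-based representation can be illustrated with a single leaky integrate-and-fire (LIF) neuron: its output over T timesteps is a binary spike train, and the firing rate is the time average of that train. The sketch below shows only this forward-pass quantity and does not implement rate-based backpropagation itself. The neuron model (hard reset to zero, decay factor 0.5, threshold 1.0) and the function names are assumptions for illustration; the linked repository may use different dynamics.

```python
from typing import List, Sequence


def lif_spikes(
    inputs: Sequence[float],
    threshold: float = 1.0,
    decay: float = 0.5,
) -> List[int]:
    """Simulate one LIF neuron over len(inputs) timesteps.

    At each step the membrane potential leaks by `decay`, integrates the
    input current, and emits a spike with a hard reset to zero once it
    reaches the threshold.
    """
    potential = 0.0
    spikes: List[int] = []
    for current in inputs:
        potential = decay * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0          # hard reset after a spike
        else:
            spikes.append(0)
    return spikes


def firing_rate(spikes: Sequence[int]) -> float:
    """Average number of spikes per timestep: the rate-coded output."""
    if not spikes:
        raise ValueError("spike train must not be empty")
    return sum(spikes) / len(spikes)
```

For a constant input of 0.6 over four timesteps, the potential rises to about 0.6, 0.9, and 1.05, so the neuron fires once at the third step and the firing rate is 0.25. BPTT differentiates through every one of these timesteps, whereas a rate-based view works with the averaged quantity.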

SDiT: Spiking Diffusion Model with Transformer

Feb 24, 2024

Abstract:Spiking neural networks (SNNs) have low power consumption and bio-interpretable characteristics, and are considered to have tremendous potential for energy-efficient computing. However, the exploration of SNNs on image generation tasks remains very limited, and a unified and effective structure for SNN-based generative models has yet to be proposed. In this paper, we explore a novel diffusion model architecture within spiking neural networks. We use a transformer to replace the U-Net structure commonly used in mainstream diffusion models. The resulting model can generate higher quality images with relatively lower computational cost and shorter sampling time. It aims to provide an empirical baseline for research on generative models based on SNNs. Experiments on the MNIST, Fashion-MNIST, and CIFAR-10 datasets demonstrate that our work is highly competitive compared to existing SNN generative models.

Go beyond End-to-End Training: Boosting Greedy Local Learning with Context Supply

Dec 12, 2023

Abstract:Traditional end-to-end (E2E) training of deep networks necessitates storing intermediate activations for back-propagation, resulting in a large memory footprint on GPUs and restricted model parallelization. As an alternative, greedy local learning partitions the network into gradient-isolated modules and trains them in a supervised manner based on local preliminary losses, thereby providing asynchronous and parallel training methods that substantially reduce memory cost. However, empirical experiments reveal that as the number of gradient-isolated modules increases, the performance of the local learning scheme degrades substantially, severely limiting its scalability. To avoid this issue, we theoretically analyze greedy local learning from the standpoint of information theory and propose a ContSup scheme, which incorporates context supply between isolated modules to compensate for information loss. Experiments on benchmark datasets (i.e., CIFAR, SVHN, STL-10) achieve SOTA results and indicate that our proposed method can significantly improve the performance of greedy local learning with minimal memory and computational overhead, allowing the number of isolated modules to be increased. Our code is available at https://github.com/Tab-ct/ContSup.

STSC-SNN: Spatio-Temporal Synaptic Connection with Temporal Convolution and Attention for Spiking Neural Networks

Oct 11, 2022

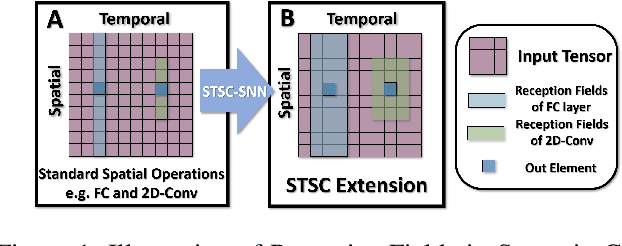

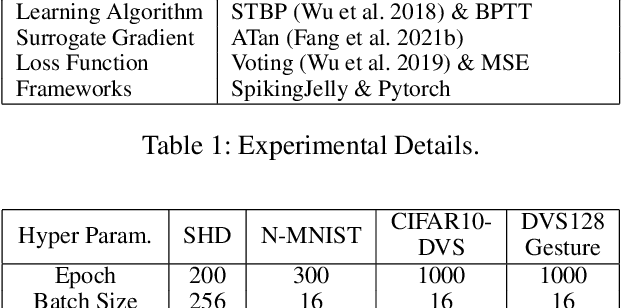

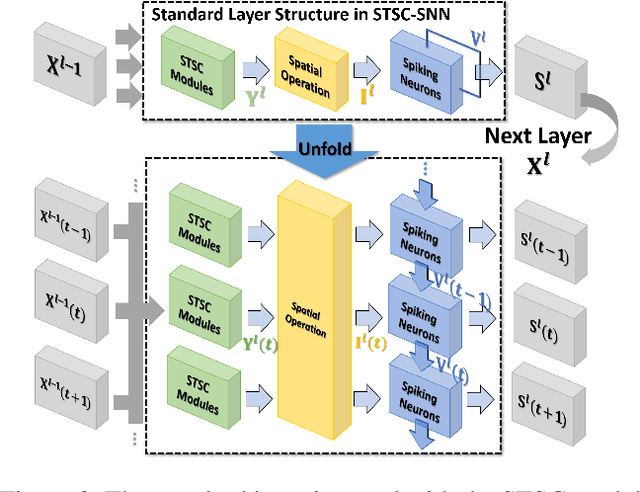

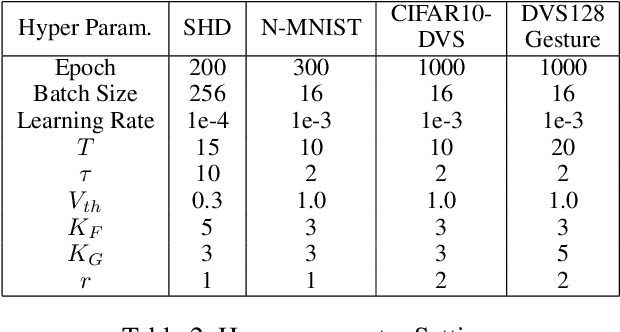

Abstract:Spiking Neural Networks (SNNs), as one of the algorithmic models in neuromorphic computing, have gained a great deal of research attention owing to their temporal information processing capability, low power consumption, and high biological plausibility. Their potential to efficiently extract spatio-temporal features makes them suitable for processing event streams. However, existing synaptic structures in SNNs are almost all full connections or spatial 2D convolutions, neither of which can extract temporal dependencies adequately. In this work, we take inspiration from biological synapses and propose a spatio-temporal synaptic connection SNN (STSC-SNN) model to enhance the spatio-temporal receptive fields of synaptic connections, thereby establishing temporal dependencies across layers. Concretely, we incorporate temporal convolution and attention mechanisms to implement synaptic filtering and gating functions. We show that endowing synaptic models with temporal dependencies can improve the performance of SNNs on classification tasks. In addition, we investigate the impact of varied spatio-temporal receptive fields on performance and reevaluate the temporal modules in SNNs. Our approach is tested on neuromorphic datasets, including DVS128 Gesture (gesture recognition), N-MNIST, CIFAR10-DVS (image classification), and SHD (speech digit recognition). The results show that the proposed model outperforms the state-of-the-art accuracy on nearly all datasets.

MAP-SNN: Mapping Spike Activities with Multiplicity, Adaptability, and Plasticity into Bio-Plausible Spiking Neural Networks

Apr 21, 2022

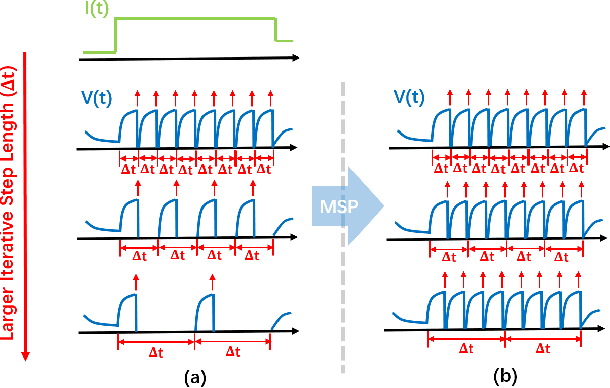

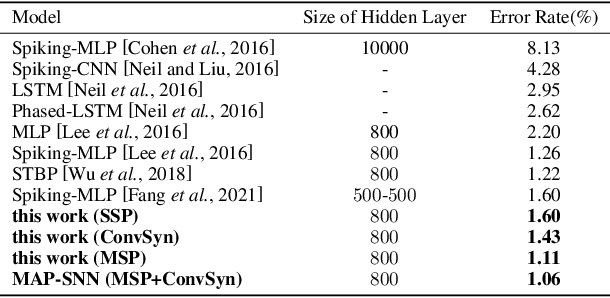

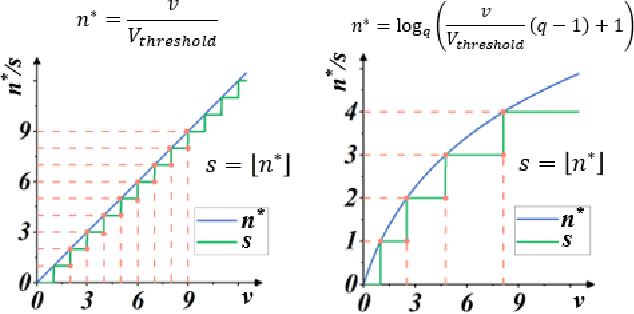

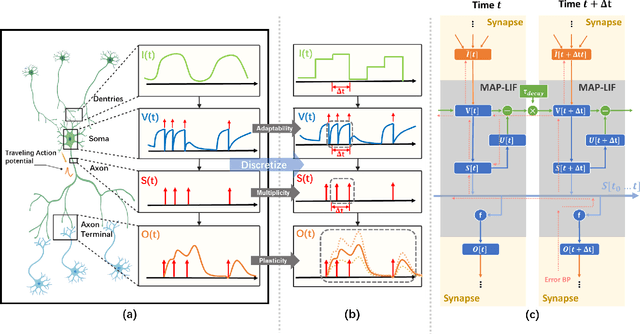

Abstract:Spiking Neural Networks (SNNs) are considered more biologically realistic and power-efficient, as they imitate the fundamental mechanism of the human brain. Recently, backpropagation (BP) based SNN learning algorithms that utilize deep learning frameworks have achieved good performance. However, bio-interpretability is partially neglected in those BP-based algorithms. Toward bio-plausible BP-based SNNs, we consider three properties in modeling spike activities: Multiplicity, Adaptability, and Plasticity (MAP). In terms of multiplicity, we propose a Multiple-Spike Pattern (MSP) with multiple spike transmission to strengthen model robustness in discrete time-iteration. To realize adaptability, we adopt Spike Frequency Adaptation (SFA) under MSP to decrease spike activities for improved efficiency. For plasticity, we propose a trainable convolutional synapse that models spike response current to enhance the diversity of spiking neurons for temporal feature extraction. The proposed SNN model achieves competitive performance on the neuromorphic datasets N-MNIST and SHD. Furthermore, experimental results demonstrate that the three proposed aspects are significant to the iterative robustness, spike efficiency, and temporal feature extraction capability of spike activities. In summary, this work proposes a feasible scheme for bio-inspired spike activities with MAP, offering a new neuromorphic perspective for embedding biological characteristics into spiking neural networks.
