Ahmed Hammam

Structuring a Training Strategy to Robustify Perception Models with Realistic Image Augmentations

Aug 30, 2024

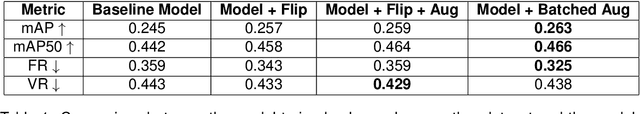

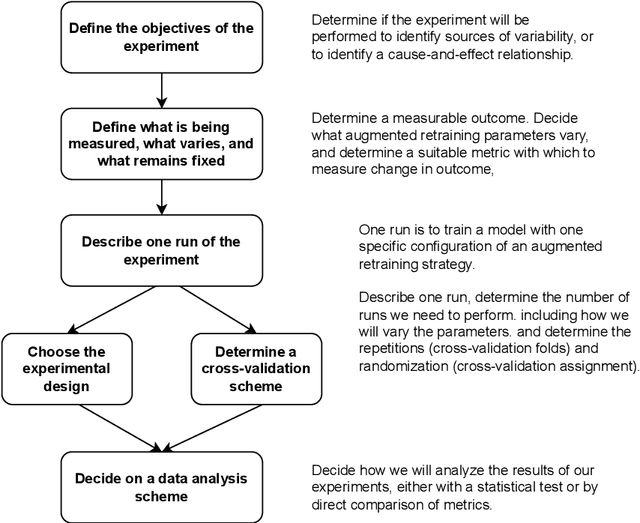

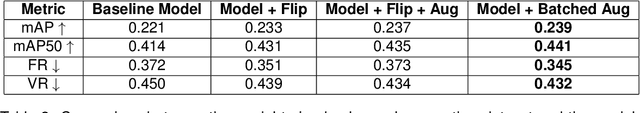

Abstract: Advancing Machine Learning (ML)-based perception models for autonomous systems necessitates addressing weak spots within the models, particularly in challenging Operational Design Domains (ODDs). ODDs are the environmental conditions under which an autonomous vehicle operates, and they can include difficult situations, e.g., lens flare at night or objects reflected in a wet street. This report introduces a novel methodology for training with augmentations to enhance model robustness and performance in such conditions. The proposed approach leverages customized physics-based augmentation functions to generate realistic training data that simulates diverse ODD scenarios. We present a comprehensive framework that includes identifying weak spots in ML models, selecting suitable augmentations, and devising effective training strategies. The methodology integrates hyperparameter optimization and latent space optimization to fine-tune augmentation parameters, ensuring they maximally improve the ML models' performance. Experimental results demonstrate improvements in model performance, as measured by commonly used metrics such as mean Average Precision (mAP) and mean Intersection over Union (mIoU) on open-source object detection and semantic segmentation models and datasets. Our findings emphasize that optimal training strategies are model- and data-specific and highlight the benefits of integrating augmentations into the training pipeline. By incorporating augmentations, we observe enhanced robustness of ML-based perception models, making them more resilient to edge cases encountered in real-world ODDs. This work underlines the importance of customized augmentations and offers an effective solution for improving the safety and reliability of autonomous driving functions.
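To make the idea of tuning augmentation parameters concrete, here is a minimal sketch of a random search over a single augmentation intensity. It is not the authors' method: the abstract does not say which optimizer or augmentation functions are used. The names `add_fog`, `random_search` and `toy_validation_score` are hypothetical, the fog blend is a toy stand-in for a physics-based augmentation, and the score function is an assumed placeholder for "train with this intensity, then measure mAP or mIoU".

```python
import random


def add_fog(pixels, intensity):
    """Blend grayscale pixels (0..1) toward white.

    Toy stand-in for a physics-based augmentation (assumption, not from the paper).
    """
    return [(1.0 - intensity) * p + intensity * 1.0 for p in pixels]


def random_search(score_fn, low, high, trials, seed=0):
    """Return the (intensity, score) pair with the highest score among random trials."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        intensity = rng.uniform(low, high)
        score = score_fn(intensity)
        if best is None or score > best[1]:
            best = (intensity, score)
    return best


def toy_validation_score(intensity):
    """Hypothetical placeholder for a train-and-evaluate run; peaks at intensity 0.4."""
    return 1.0 - (intensity - 0.4) ** 2


if __name__ == "__main__":
    best_intensity, best_score = random_search(toy_validation_score, 0.0, 1.0, trials=200)
    print(f"best intensity: {best_intensity:.3f}, score: {best_score:.4f}")
```

In a real pipeline each call to the score function would be an expensive training run, which is one reason a more sample-efficient optimizer than random search might be preferred.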

A-BDD: Leveraging Data Augmentations for Safe Autonomous Driving in Adverse Weather and Lighting

Aug 12, 2024

Abstract: High-autonomy vehicle functions rely on machine learning (ML) algorithms to understand the environment. Despite displaying remarkable performance in fair weather scenarios, perception algorithms are heavily affected by adverse weather and lighting conditions. To overcome these difficulties, ML engineers mainly rely on comprehensive real-world datasets. However, the difficulties in real-world data collection for critical areas of the operational design domain (ODD) often mean that synthetic data is required for perception training and safety validation. Thus, we present A-BDD, a large set of over 60,000 synthetically augmented images based on BDD100K that are equipped with semantic segmentation and bounding box annotations (inherited from the BDD100K dataset). The dataset contains augmented data for rain, fog, overcast and sunglare/shadow with varying intensity levels. We further introduce novel strategies utilizing feature-based image quality metrics like FID and CMMD, which help identify useful augmented and real-world data for ML training and testing. By conducting experiments on A-BDD, we provide evidence that data augmentations can play a pivotal role in closing performance gaps in adverse weather and lighting conditions.
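As a rough illustration of how a feature-based metric can rank augmented data against real data, the sketch below computes a diagonal-covariance simplification of the Fréchet distance that underlies FID. This is an assumption-laden toy: full FID uses Inception features and full covariance matrices, and the abstract does not describe the selection strategy in detail. The function name and the tiny feature lists are hypothetical.

```python
from statistics import fmean, pstdev


def diagonal_frechet_distance(feats_a, feats_b):
    """Squared Fréchet distance between two Gaussians with diagonal covariance.

    Each argument is a list of equal-length feature vectors. Per dimension the
    distance adds (mean difference)^2 + (std difference)^2, which is exact when
    both covariance matrices are diagonal (a simplification of full FID).
    """
    total = 0.0
    for col_a, col_b in zip(zip(*feats_a), zip(*feats_b)):
        total += (fmean(col_a) - fmean(col_b)) ** 2
        total += (pstdev(col_a) - pstdev(col_b)) ** 2
    return total


if __name__ == "__main__":
    # Hypothetical 2-D features for a real set and two augmented candidate sets.
    real = [[0.0, 1.0], [2.0, 3.0]]
    candidates = {
        "light_fog": [[0.5, 1.5], [2.5, 3.5]],
        "dense_fog": [[3.0, 4.0], [5.0, 6.0]],
    }
    ranked = sorted(candidates, key=lambda k: diagonal_frechet_distance(real, candidates[k]))
    print(ranked)  # candidates ordered from closest to farthest from the real set
```

A lower distance means the augmented features are statistically closer to the real ones under this simplified model; whether closer is "more useful" depends on the training goal.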

A self-supervised text-vision framework for automated brain abnormality detection

May 05, 2024

Abstract: Artificial neural networks trained on large, expert-labelled datasets are considered state-of-the-art for a range of medical image recognition tasks. However, categorically labelled datasets are time-consuming to generate and constrain classification to a pre-defined, fixed set of classes. For neuroradiological applications in particular, this represents a barrier to clinical adoption. To address these challenges, we present a self-supervised text-vision framework that learns to detect clinically relevant abnormalities in brain MRI scans by directly leveraging the rich information contained in accompanying free-text neuroradiology reports. Our training approach consisted of two steps. First, a dedicated neuroradiological language model - NeuroBERT - was trained to generate fixed-dimensional vector representations of neuroradiology reports (N = 50,523) via domain-specific self-supervised learning tasks. Next, convolutional neural networks (one per MRI sequence) learnt to map individual brain scans to their corresponding text vector representations by optimising a mean square error loss. Once trained, our text-vision framework can be used to detect abnormalities in unreported brain MRI examinations by scoring scans against suitable query sentences (e.g., 'there is an acute stroke', 'there is hydrocephalus', etc.), enabling a range of classification-based applications including automated triage. Potentially, our framework could also serve as a clinical decision support tool, not only by suggesting findings to radiologists and detecting errors in provisional reports, but also by retrieving and displaying examples of pathologies from historical examinations that could be relevant to the current case based on textual descriptors.
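The two ingredients described above, a mean square error objective between scan and report embeddings and scoring scans against a query sentence, can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not say which scoring function is used, so cosine similarity is assumed here, and the embeddings are made-up 2-D vectors rather than outputs of NeuroBERT or the image networks. All function names are hypothetical.

```python
import math


def mse(u, v):
    """Mean square error between a predicted scan embedding and its report embedding."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)


def cosine_similarity(u, v):
    """Cosine similarity; assumed scoring function (not confirmed by the abstract)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_scans(scan_embeddings, query_embedding):
    """Return scan ids ordered from most to least similar to the query embedding."""
    scores = {
        scan_id: cosine_similarity(emb, query_embedding)
        for scan_id, emb in scan_embeddings.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical embeddings: in practice these would come from the trained
    # image networks (scans) and the language model (query sentence).
    scans = {"scan_a": [1.0, 0.0], "scan_b": [0.0, 1.0], "scan_c": [1.0, 1.0]}
    query = [1.0, 0.1]  # stand-in for the embedding of a query sentence
    print(rank_scans(scans, query))
```

For triage, the top-ranked scans for a query such as 'there is an acute stroke' would be the ones flagged for earlier review; a threshold on the score would turn the ranking into a classifier.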

Inspect, Understand, Overcome: A Survey of Practical Methods for AI Safety

Apr 29, 2021

Abstract: The use of deep neural networks (DNNs) in safety-critical applications like mobile health and autonomous driving is challenging due to numerous model-inherent shortcomings. These shortcomings are diverse and range from a lack of generalization and insufficient interpretability to problems with malicious inputs. Cyber-physical systems employing DNNs are therefore likely to suffer from safety concerns. In recent years, a zoo of state-of-the-art techniques aiming to address these safety concerns has emerged. This work provides a structured and broad overview of them. We first identify categories of insufficiencies and then describe research activities aiming at their detection, quantification, or mitigation. Our paper addresses both machine learning experts and safety engineers: the former might profit from the broad range of machine learning topics covered and from discussions on limitations of recent methods, while the latter might gain insights into the specifics of modern ML methods. We moreover hope that our contribution fuels discussions on desiderata for ML systems and strategies on how to propel existing approaches accordingly.
