Adrian E. Bayer

The Denario project: Deep knowledge AI agents for scientific discovery

Oct 30, 2025

Abstract: We present Denario, an AI multi-agent system designed to serve as a scientific research assistant. Denario can perform many different tasks, such as generating ideas, checking the literature, developing research plans, writing and executing code, making plots, and drafting and reviewing a scientific paper. The system has a modular architecture, allowing it to handle specific tasks, such as generating an idea, or carrying out end-to-end scientific analysis using Cmbagent as a deep-research backend. In this work, we describe Denario and its modules in detail, and illustrate its capabilities by presenting multiple AI-generated papers across many scientific disciplines, such as astrophysics, biology, biophysics, biomedical informatics, chemistry, materials science, mathematical physics, medicine, neuroscience and planetary science. Denario also excels at combining ideas from different disciplines, and we illustrate this by showing a paper that applies methods from quantum physics and machine learning to astrophysical data. We report the evaluations performed on these papers by domain experts, who provided both numerical scores and review-like feedback. We then highlight the strengths, weaknesses, and limitations of the current system. Finally, we discuss the ethical implications of AI-driven research and reflect on how such technology relates to the philosophy of science. We publicly release the code at https://github.com/AstroPilot-AI/Denario. A Denario demo can also be run directly on the web at https://huggingface.co/spaces/astropilot-ai/Denario, and the full app will be deployed on the cloud.

Transfer Learning Beyond the Standard Model

Oct 22, 2025

Abstract: Machine learning enables powerful cosmological inference but typically requires many high-fidelity simulations covering many cosmological models. Transfer learning offers a way to reduce the simulation cost by reusing knowledge across models. We show that pre-training on the standard model of cosmology, $\Lambda$CDM, and fine-tuning on various beyond-$\Lambda$CDM scenarios -- including massive neutrinos, modified gravity, and primordial non-Gaussianities -- can enable inference with significantly fewer beyond-$\Lambda$CDM simulations. However, we also show that negative transfer can occur when strong physical degeneracies exist between $\Lambda$CDM and beyond-$\Lambda$CDM parameters. We consider various transfer architectures, finding that including bottleneck structures provides the best performance. Our findings illustrate the opportunities and pitfalls of foundation-model approaches in physics: pre-training can accelerate inference, but may also hinder learning new physics.

Initial Conditions from Galaxies: Machine-Learning Subgrid Correction to Standard Reconstruction

Apr 01, 2025

Abstract: We present a hybrid method for reconstructing the primordial density from late-time halos and galaxies. Our approach involves two steps: (1) apply standard Baryon Acoustic Oscillation (BAO) reconstruction to recover the large-scale features in the primordial density field and (2) train a deep learning model to learn small-scale corrections on partitioned subgrids of the full volume. At inference, this correction is then convolved across the full survey volume, enabling scaling to large survey volumes. We train our method on both mock halo catalogs and mock galaxy catalogs in both configuration and redshift space from the Quijote $1\,(h^{-1}\,\mathrm{Gpc})^3$ simulation suite. When evaluated on held-out simulations, our combined approach significantly improves the reconstruction cross-correlation coefficient with the true initial density field and remains robust to moderate model misspecification. Additionally, we show that models trained on $1\,(h^{-1}\,\mathrm{Gpc})^3$ can be applied to larger boxes--e.g., $(3\,h^{-1}\,\mathrm{Gpc})^3$--without retraining. Finally, we perform a Fisher analysis on our method's recovery of the BAO peak, and find that it significantly improves the error on the acoustic scale relative to standard BAO reconstruction. Ultimately, this method robustly captures nonlinearities and bias without sacrificing large-scale accuracy, and its flexibility to handle arbitrarily large volumes without escalating computational requirements makes it especially promising for large-volume surveys like DESI.
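
For readers unfamiliar with the metric, the cross-correlation coefficient named in the abstract above is conventionally defined per Fourier mode as shown below. This is the standard textbook form, not a formula quoted from the paper, and the symbols are assumptions chosen for illustration: $\hat{\delta}$ is the reconstructed field, $\delta_{\mathrm{ini}}$ is the true initial density field, and $P_{XY}(k)$ is the (cross-)power spectrum of fields $X$ and $Y$.

$$
r(k) = \frac{P_{\hat{\delta}\,\delta_{\mathrm{ini}}}(k)}{\sqrt{P_{\hat{\delta}\hat{\delta}}(k)\,P_{\delta_{\mathrm{ini}}\delta_{\mathrm{ini}}}(k)}}
$$

Under this definition, $r(k) = 1$ means the reconstruction is perfectly correlated with the true initial field at scale $k$, and $r(k) = 0$ means no correlation at that scale.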

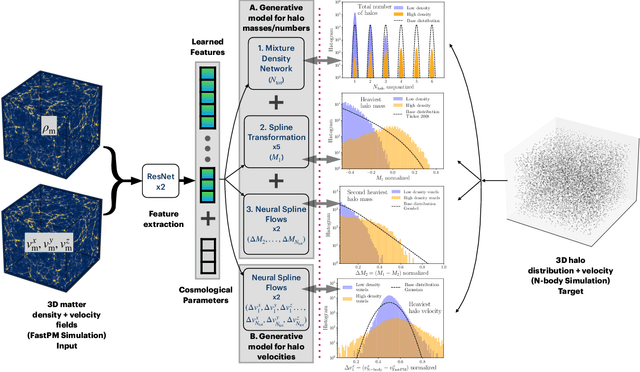

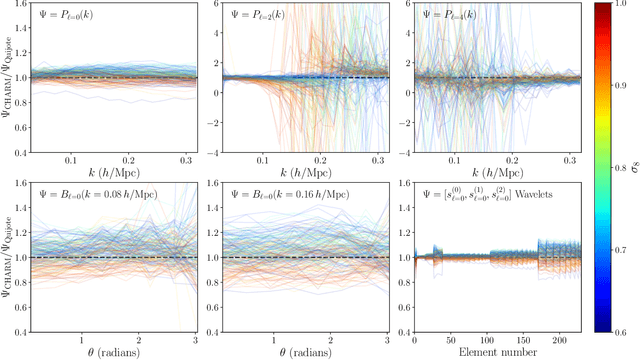

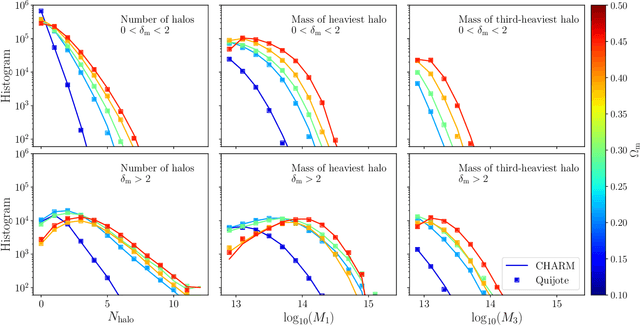

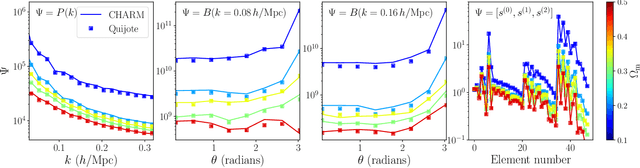

CHARM: Creating Halos with Auto-Regressive Multi-stage networks

Sep 13, 2024

Abstract: To maximize the amount of information extracted from cosmological datasets, simulations that accurately represent these observations are necessary. However, traditional simulations that evolve particles under gravity by estimating particle-particle interactions (N-body simulations) are computationally expensive and prohibitive to scale to the large volumes and resolutions necessary for the upcoming datasets. Moreover, modeling the distribution of galaxies typically involves identifying virialized dark matter halos, which is also a time- and memory-consuming process for large N-body simulations, further exacerbating the computational cost. In this study, we introduce CHARM, a novel method for creating mock halo catalogs by matching the spatial, mass, and velocity statistics of halos directly from the large-scale distribution of the dark matter density field. We develop multi-stage neural spline flow-based networks to learn this mapping at redshift $z=0.5$ directly with computationally cheaper low-resolution particle mesh simulations instead of relying on the high-resolution N-body simulations. We show that the mock halo catalogs and painted galaxy catalogs have the same statistical properties as obtained from N-body simulations in both real space and redshift space. Finally, we use these mock catalogs for cosmological inference using redshift-space galaxy power spectrum, bispectrum, and wavelet-based statistics using simulation-based inference, performing the first inference with accelerated forward model simulations and finding unbiased cosmological constraints with well-calibrated posteriors. The code was developed as part of the Simons Collaboration on Learning the Universe and is publicly available at https://github.com/shivampcosmo/CHARM.
