Adam Marblestone

NeuroAI for AI Safety

Nov 27, 2024

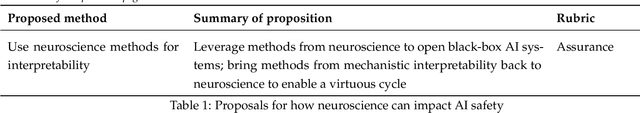

Abstract:As AI systems become increasingly powerful, the need for safe AI has become more pressing. Humans are an attractive model for AI safety: as the only known agents capable of general intelligence, they perform robustly even under conditions that deviate significantly from prior experiences, explore the world safely, understand pragmatics, and can cooperate to meet their intrinsic goals. Intelligence, when coupled with cooperation and safety mechanisms, can drive sustained progress and well-being. These properties are a function of the architecture of the brain and the learning algorithms it implements. Neuroscience may thus hold important keys to technical AI safety that are currently underexplored and underutilized. In this roadmap, we highlight and critically evaluate several paths toward AI safety inspired by neuroscience: emulating the brain's representations, information processing, and architecture; building robust sensory and motor systems from imitating brain data and bodies; fine-tuning AI systems on brain data; advancing interpretability using neuroscience methods; and scaling up cognitively-inspired architectures. We make several concrete recommendations for how neuroscience can positively impact AI safety.

Toward Next-Generation Artificial Intelligence: Catalyzing the NeuroAI Revolution

Oct 15, 2022Abstract:Neuroscience has long been an important driver of progress in artificial intelligence (AI). We propose that to accelerate progress in AI, we must invest in fundamental research in NeuroAI.

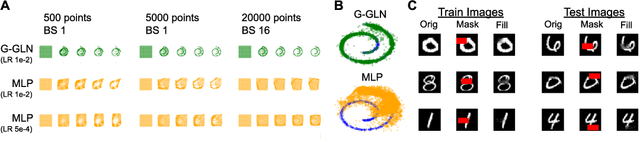

Gaussian Gated Linear Networks

Jun 10, 2020

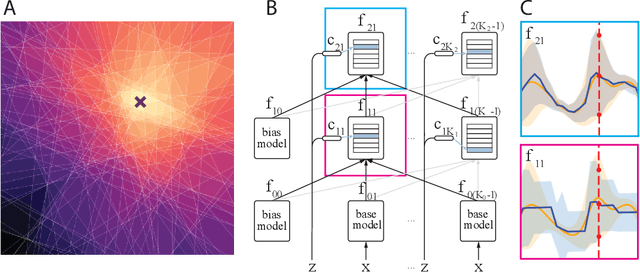

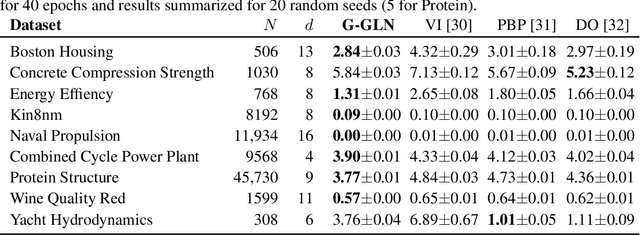

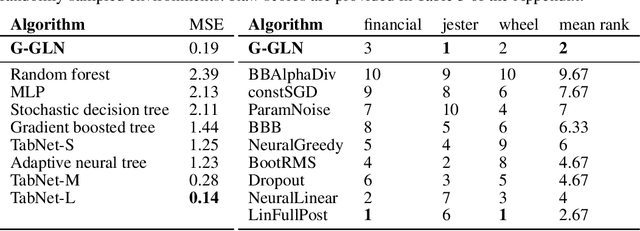

Abstract:We propose the Gaussian Gated Linear Network (G-GLN), an extension to the recently proposed GLN family of deep neural networks. Instead of using backpropagation to learn features, GLNs have a distributed and local credit assignment mechanism based on optimizing a convex objective. This gives rise to many desirable properties including universality, data-efficient online learning, trivial interpretability and robustness to catastrophic forgetting. We extend the GLN framework from classification to multiple regression and density modelling by generalizing geometric mixing to a product of Gaussian densities. The G-GLN achieves competitive or state-of-the-art performance on several univariate and multivariate regression benchmarks, and we demonstrate its applicability to practical tasks including online contextual bandits and density estimation via denoising.

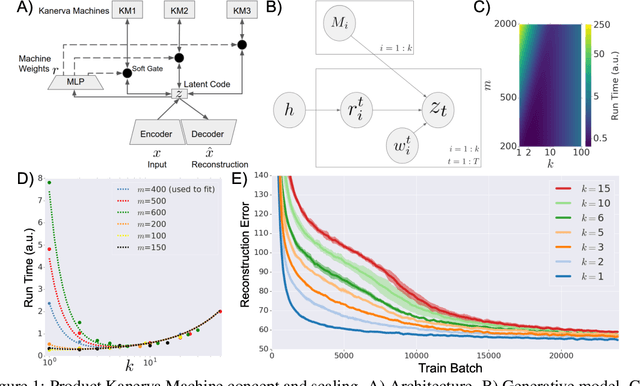

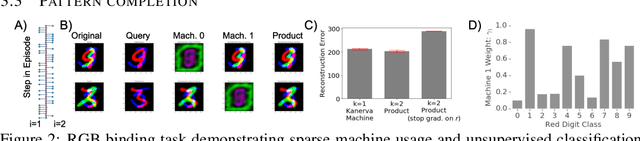

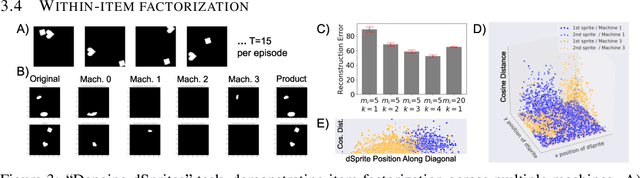

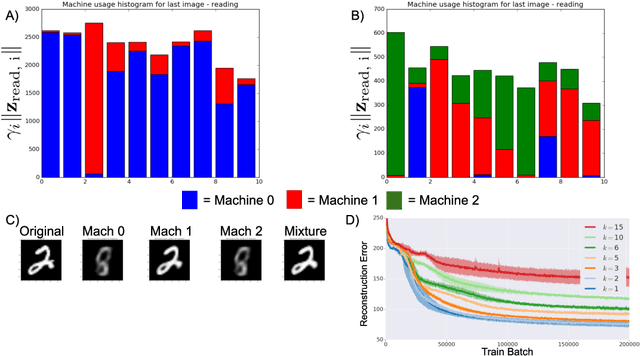

Product Kanerva Machines: Factorized Bayesian Memory

Feb 06, 2020

Abstract:An ideal cognitively-inspired memory system would compress and organize incoming items. The Kanerva Machine (Wu et al, 2018) is a Bayesian model that naturally implements online memory compression. However, the organization of the Kanerva Machine is limited by its use of a single Gaussian random matrix for storage. Here we introduce the Product Kanerva Machine, which dynamically combines many smaller Kanerva Machines. Its hierarchical structure provides a principled way to abstract invariant features and gives scaling and capacity advantages over single Kanerva Machines. We show that it can exhibit unsupervised clustering, find sparse and combinatorial allocation patterns, and discover spatial tunings that approximately factorize simple images by object.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge