Abdul Rashid Mussah

Application of 2D Homography for High Resolution Traffic Data Collection using CCTV Cameras

Jan 14, 2024

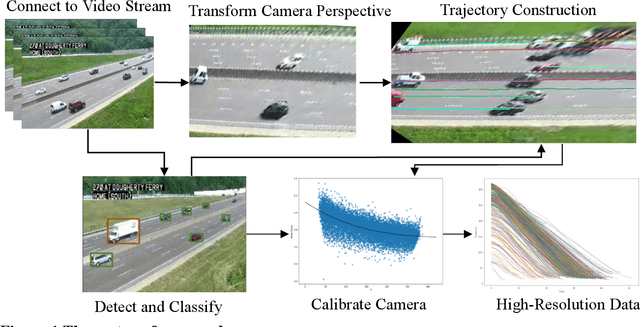

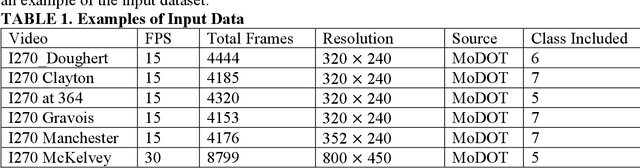

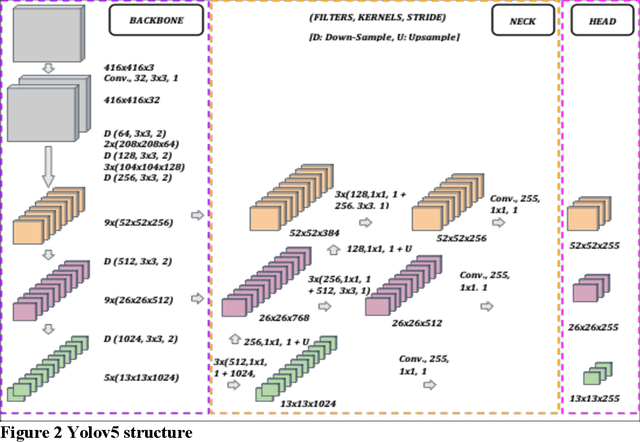

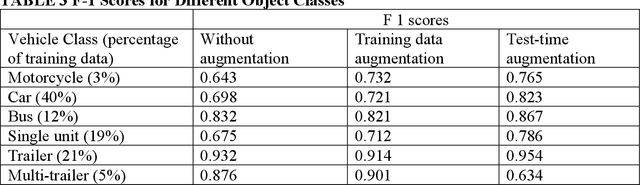

Abstract: Traffic cameras remain the primary source of data for surveillance activities such as congestion and incident monitoring. To date, state agencies continue to rely on manual effort to extract data from networked cameras because current automatic vision systems require complex camera calibration and cannot generate high-resolution data. This study implements a three-stage video analytics framework for extracting high-resolution traffic data, such as vehicle counts, speed, and acceleration, from infrastructure-mounted CCTV cameras. The key components of the framework are object recognition, perspective transformation, and vehicle trajectory reconstruction. First, a state-of-the-art vehicle recognition model is implemented to detect and classify vehicles. Next, to correct for camera distortion and reduce partial occlusion, an algorithm inspired by two-point linear perspective extracts the region of interest (ROI) automatically, while a 2D homography technique transforms the CCTV view to a bird's-eye view (BEV). Cameras are calibrated with a two-layer matrix system that converts image coordinates to real-world measurements, enabling the extraction of speed and acceleration. Individual vehicle trajectories are constructed and compared in BEV using two time-space-feature-based object trackers, namely Motpy and BYTETrack. The results showed an error rate of about +/- 4.5% for directional traffic counts and less than 10% MSE for the speed bias between camera estimates and estimates from probe data sources. Extracting high-resolution data from traffic cameras has several implications, ranging from improved traffic management to the identification of dangerous driving behavior, high-risk areas for accidents, and other safety concerns, enabling proactive measures to reduce accidents and fatalities.
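The perspective-transformation step described in the abstract can be illustrated with a minimal sketch. It assumes four hand-picked image points whose ground positions (in metres) are known, and it estimates a plain 2D homography with the direct linear transform. It does not reproduce the paper's automatic ROI extraction or its two-layer calibration matrices. The function names, the reference points, and the frame rate are all hypothetical.

```python
import numpy as np

def fit_homography(src, dst):
    """Estimate a 3x3 homography mapping src -> dst from >= 4 point pairs (DLT)."""
    rows = []
    for (x, y), (u, v) in zip(np.asarray(src, float), np.asarray(dst, float)):
        # Each correspondence contributes two linear constraints on H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    return vt[-1].reshape(3, 3)  # H is defined only up to scale

def to_ground(H, pts):
    """Map Nx2 image points to Nx2 ground-plane (bird's-eye) coordinates."""
    pts = np.asarray(pts, float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]  # divide out the projective scale

def speeds(track_px, H, fps):
    """Per-frame speed (ground units per second) of a tracked image-space path."""
    ground = to_ground(H, track_px)
    step = np.linalg.norm(np.diff(ground, axis=0), axis=1)
    return step * fps

# Hypothetical calibration: a trapezoid in the image that corresponds to a
# 7 m wide, 40 m long rectangle of roadway on the ground.
image_quad = [(500, 300), (780, 300), (1200, 700), (80, 700)]
ground_quad = [(0, 40), (7, 40), (7, 0), (0, 0)]
H = fit_homography(image_quad, ground_quad)
```

Once positions are expressed in ground coordinates, speed follows from the frame-to-frame displacement multiplied by the frame rate, and acceleration from differencing the speeds in the same way.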

DeepSegmenter: Temporal Action Localization for Detecting Anomalies in Untrimmed Naturalistic Driving Videos

Apr 13, 2023

Abstract: Identifying unusual behaviors exhibited by drivers is essential for understanding driver behavior and the underlying causes of crashes. Previous studies have primarily approached this problem as a classification task, assuming that naturalistic driving videos come discretized. However, because naturalistic driving videos are continuous, the task requires both activity segmentation and classification. The current study therefore departs from conventional approaches and introduces a novel methodological framework, DeepSegmenter, that performs activity segmentation and classification simultaneously. The proposed framework consists of four major modules: a Data Module, an Activity Segmentation Module, a Classification Module, and a Postprocessing Module. Our proposed method won 8th place in the 2023 AI City Challenge, Track 3, with an activity overlap score of 0.5426 on experimental validation data. The experimental results demonstrate the effectiveness, efficiency, and robustness of the proposed system.

AI-Based Framework for Understanding Car Following Behaviors of Drivers in A Naturalistic Driving Environment

Jan 23, 2023

Abstract: The most common type of accident on the road is a rear-end crash. These crashes have a significant negative impact on traffic flow and are frequently fatal. To gain a more practical understanding of these scenarios, it is necessary to accurately model the car-following behaviors that result in rear-end crashes. Numerous studies have modeled drivers' car-following behaviors; however, the majority have relied on simulated data, which may not accurately represent real-world incidents. Furthermore, most studies are restricted to modeling the ego vehicle's acceleration, which is insufficient to explain the behavior of the ego vehicle. The current study addresses these issues by developing an artificial intelligence framework for extracting features relevant to understanding driver behavior in a naturalistic environment. The study also modeled the acceleration of both the ego vehicle and the leading vehicle using information extracted from NDS videos. According to the study's findings, young people are more likely to be aggressive drivers than elderly people. In addition, when modeling the ego vehicle's acceleration, the relative velocity between the ego vehicle and the leading vehicle was found to be more important than the distance between the two vehicles.

Deep Learning Frameworks for Pavement Distress Classification: A Comparative Analysis

Oct 21, 2020

Abstract: Automatic detection and classification of pavement distresses is critical to the timely maintenance and rehabilitation of pavement surfaces. With the evolution of deep learning and high-performance computing, the feasibility of vision-based pavement defect assessments has significantly improved. In this study, the authors deploy state-of-the-art deep learning algorithms based on different network backbones to detect and characterize pavement distresses. The influence of different backbone models, such as CSPDarknet53, Hourglass-104, and EfficientNet, on classification performance was studied. The models were trained using 21,041 images captured across urban and rural streets of Japan, Czech Republic, and India. Finally, the models were assessed on their ability to predict and classify distresses, and tested using the F1 score computed from precision and recall. The best performing model achieved F1 scores of 0.58 and 0.57 on two test datasets released by the IEEE Global Road Damage Detection Challenge. The source code, including the trained models, is made available at [1].
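For reference, the F1 score is the harmonic mean of precision and recall. The sketch below computes it from detection counts. The function name and the counts are illustrative only and are not taken from the paper or the challenge data.

```python
def f1_score(true_pos, false_pos, false_neg):
    """Return (precision, recall, F1) from detection counts."""
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # Harmonic mean of precision and recall.
    return precision, recall, 2 * precision * recall / (precision + recall)

# Made-up counts: 6 correct detections, 2 spurious ones, 4 missed distresses.
precision, recall, f1 = f1_score(true_pos=6, false_pos=2, false_neg=4)
```

Because it is a harmonic mean, the F1 score is pulled toward the lower of the two values, so a model cannot score well by trading away recall for precision or the reverse.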

Artificial Intelligence Enabled Traffic Monitoring System

Oct 02, 2020

Abstract: Manual traffic surveillance can be a daunting task, as Traffic Management Centers operate a myriad of cameras installed over a network. Introducing some level of automation could lighten the workload of human operators performing manual surveillance and facilitate proactive decisions that reduce the impact of incidents and recurring congestion on roadways. This article presents a novel approach to automatically monitor real-time traffic footage using deep convolutional neural networks and a stand-alone graphical user interface. The authors describe the research results obtained while developing models that serve as an integrated framework for an artificial intelligence enabled traffic monitoring system. The proposed system deploys several state-of-the-art deep learning algorithms to automate different traffic monitoring needs. Taking advantage of a large database of annotated video surveillance data, deep learning-based models are trained to detect queues, track stationary vehicles, and tabulate vehicle counts. A pixel-level segmentation approach is applied to detect traffic queues and predict severity. Real-time object detection algorithms coupled with different tracking systems are deployed to automatically detect stranded vehicles and perform vehicle counts. At each stage of development, experimental results are presented to demonstrate the effectiveness of the proposed system. Overall, the results demonstrate that the proposed framework performs satisfactorily under varied conditions without being heavily affected by adverse conditions such as blurry camera views, low illumination, rain, or snow.
