Learning to Smooth and Fold Real Fabric Using Dense Object Descriptors Trained on Synthetic Color Images


Mar 28, 2020

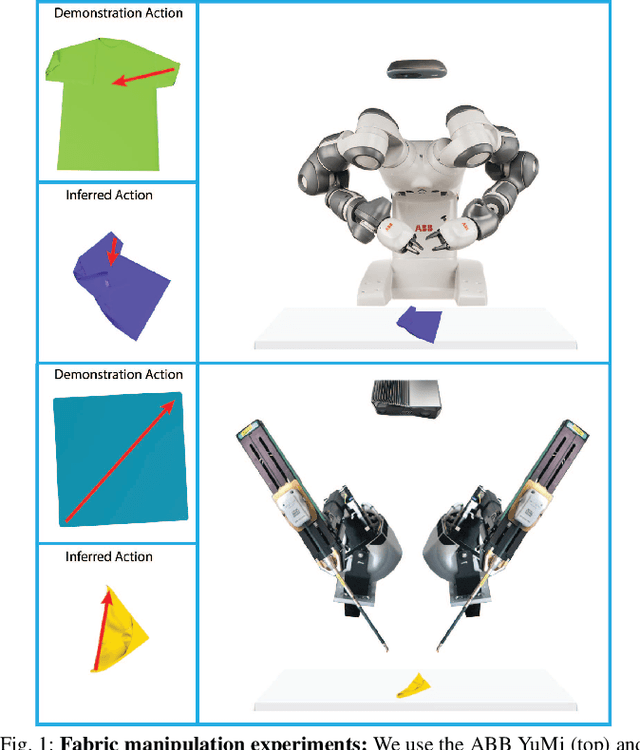

Robotic fabric manipulation is challenging due to the infinite-dimensional configuration space and complex dynamics. In this paper, we learn visual representations of deformable fabric by training dense object descriptors that capture correspondences across images of fabric in various configurations. The learned descriptors capture higher-level geometric structure, facilitating the design of explainable policies. We demonstrate that the learned representation facilitates multi-step fabric smoothing and folding tasks on two real physical systems, the da Vinci surgical robot and the ABB YuMi, given high-level demonstrations from a supervisor. The system achieves a 78.8% average task success rate across six fabric manipulation tasks. See https://tinyurl.com/fabric-descriptors for supplementary material and videos.
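
To illustrate what "correspondences across images" can mean in practice, the following is a minimal sketch, not the paper's implementation. It assumes each image has already been mapped to a per-pixel descriptor volume of shape (H, W, D) by some trained network, and it finds the pixel in a target image whose descriptor is nearest to that of a chosen source pixel. The function name `find_correspondence` and the array layout are hypothetical, and the random arrays stand in for real network outputs.

```python
import numpy as np


def find_correspondence(desc_src, desc_tgt, pixel_src):
    """Return the target pixel whose descriptor is closest to the source pixel's.

    desc_src, desc_tgt: float arrays of shape (H, W, D), one descriptor per pixel
        (assumed to come from a trained descriptor network; not computed here).
    pixel_src: (row, col) index into desc_src.
    """
    row, col = pixel_src
    query = desc_src[row, col]  # (D,) descriptor of the chosen source pixel
    # Euclidean distance from the query to every target descriptor: shape (H, W)
    dists = np.linalg.norm(desc_tgt - query, axis=-1)
    best = np.unravel_index(np.argmin(dists), dists.shape)
    return (int(best[0]), int(best[1])), float(dists[best])


# Toy check with synthetic descriptors: the "target" is the source shifted
# by 2 rows and 3 columns, so the true correspondence is known in advance.
rng = np.random.default_rng(0)
src = rng.normal(size=(8, 8, 3))
tgt = np.roll(src, shift=(2, 3), axis=(0, 1))
match, dist = find_correspondence(src, tgt, (1, 1))
print(match, dist)
```

In a setup like the one described above, a lookup of this kind could let a pick point annotated on a demonstration image be transferred to the same fabric location in a new configuration. Exhaustive nearest-neighbor search is used here only for clarity.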
