Zuowei Cao

Perceptual Quality Assessment for Video Frame Interpolation

Dec 25, 2023

Abstract: Frame quality matters for both research on and applications of video frame interpolation (VFI). Recent VFI studies have generally used full-reference image quality assessment methods to evaluate the quality of VFI frames. However, the high-frame-rate reference videos that full-reference methods require are difficult to obtain in most VFI applications. To evaluate the quality of VFI frames without reference videos, this paper proposes a no-reference perceptual quality assessment method. The method is better suited to VFI applications, and its evaluation scores are consistent with human subjective opinions. First, a new quality assessment dataset for VFI was constructed through subjective experiments to collect opinion scores for interpolated frames. The dataset was created from triplets of frames extracted from high-quality videos using 9 state-of-the-art VFI algorithms. The proposed method evaluates the perceptual coherence of an interpolated frame with respect to the original pair of VFI input frames. Specifically, the method applies a triplet network architecture with three parallel feature pipelines to extract deep perceptual features of the interpolated frame and of the original pair of frames. Coherence similarities of the two-way parallel features are jointly calculated and optimized as a perceptual metric. In the experiments, both full-reference and no-reference quality assessment methods were tested on the new quality dataset. The results show that the proposed method achieves the best performance among all compared quality assessment methods on the dataset.

NTIRE 2022 Challenge on Efficient Super-Resolution: Methods and Results

May 11, 2022

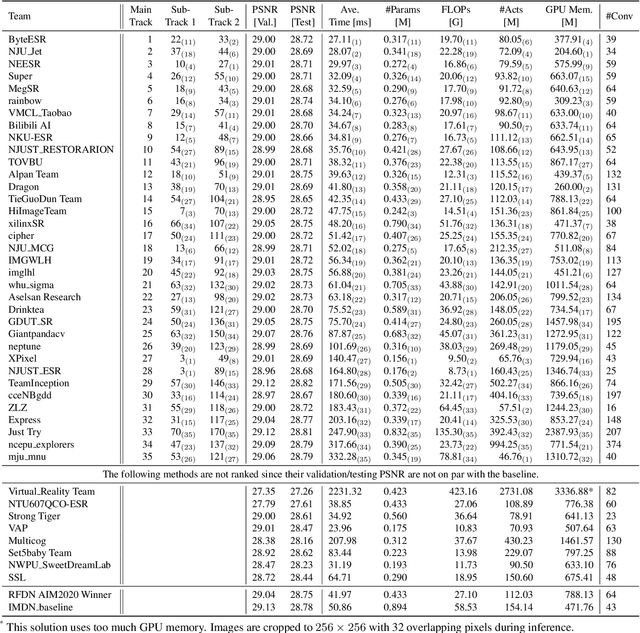

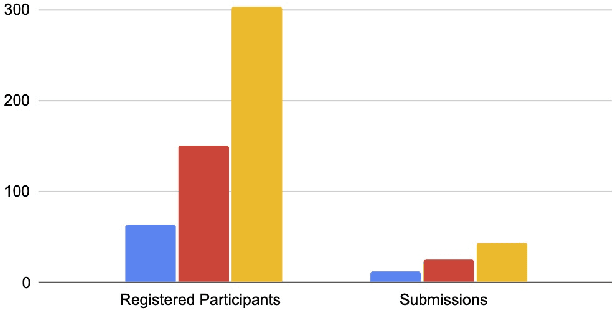

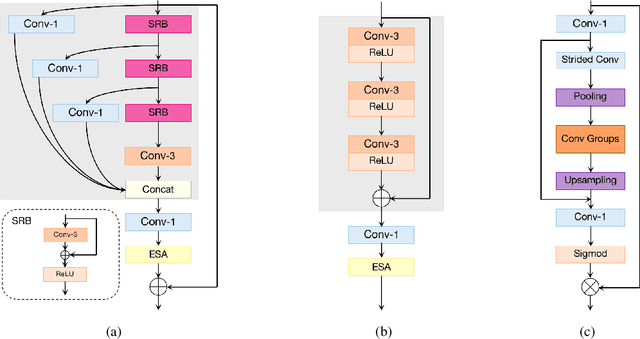

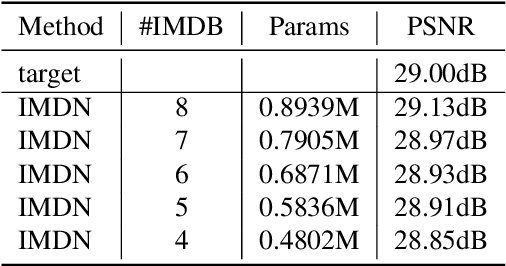

Abstract: This paper reviews the NTIRE 2022 challenge on efficient single image super-resolution, with a focus on the proposed solutions and results. The task of the challenge was to super-resolve an input image with a magnification factor of $\times$4 based on pairs of low-resolution and corresponding high-resolution images. The aim was to design a network for single image super-resolution that improved efficiency, measured by several metrics including runtime, parameters, FLOPs, activations, and memory consumption, while maintaining a PSNR of at least 29.00 dB on the DIV2K validation set. IMDN was set as the baseline for efficiency measurement. The challenge had 3 tracks: the main track (runtime), sub-track one (model complexity), and sub-track two (overall performance). In the main track, the practical runtime performance of the submissions was evaluated, and the team rankings were determined directly by the absolute value of the average runtime on the validation set and test set. In sub-track one, the number of parameters and FLOPs were considered, and the individual rankings on the two metrics were summed to determine a final ranking in this track. In sub-track two, all five metrics mentioned in the description of the challenge (runtime, parameter count, FLOPs, activations, and memory consumption) were considered. As in sub-track one, the rankings on the five metrics were summed to determine a final ranking. The challenge had 303 registered participants, and 43 teams made valid submissions. Together, the submissions gauge the state of the art in efficient single image super-resolution.
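The rank-sum scheme described for the two sub-tracks can be illustrated with a minimal Python sketch. The team names and metric values below are hypothetical, and the handling of ties (tied teams share the better rank) is an assumption; the abstract does not state how the challenge resolved ties.

```python
from typing import Dict, List, Tuple


def rank_positions(values: Dict[str, float]) -> Dict[str, int]:
    """Rank teams on one metric, lower is better (rank 1 is best).

    Assumption: tied teams share the better rank.
    """
    ordered = sorted(values.values())
    return {team: ordered.index(v) + 1 for team, v in values.items()}


def rank_sum(metrics: Dict[str, Dict[str, float]]) -> List[Tuple[str, int]]:
    """Sum each team's per-metric ranks and sort by the total (lowest first)."""
    totals: Dict[str, int] = {}
    for per_team in metrics.values():
        for team, rank in rank_positions(per_team).items():
            totals[team] = totals.get(team, 0) + rank
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical values in the style of sub-track one (parameters and FLOPs).
example = {
    "params_millions": {"A": 0.5, "B": 0.3, "C": 0.4},
    "gflops": {"A": 25.0, "B": 30.0, "C": 20.0},
}
ranking = rank_sum(example)
print(ranking)
```

For sub-track two, the same function would take five metric dictionaries instead of two. Because only ranks are summed, the size of the gap between teams on any single metric does not affect the final order.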
