Zhijin Wang

pyFAST: A Modular PyTorch Framework for Time Series Modeling with Multi-source and Sparse Data

Aug 26, 2025

Abstract: Modern time series analysis demands frameworks that are flexible, efficient, and extensible. However, many existing Python libraries exhibit limitations in modularity and in their native support for irregular, multi-source, or sparse data. We introduce pyFAST, a research-oriented PyTorch framework that explicitly decouples data processing from model computation, fostering a cleaner separation of concerns and facilitating rapid experimentation. Its data engine is engineered for complex scenarios, supporting multi-source loading, protein sequence handling, efficient sequence- and patch-level padding, dynamic normalization, and mask-based modeling for both imputation and forecasting. pyFAST integrates LLM-inspired architectures for the alignment-free fusion of sparse data sources and offers native sparse metrics, specialized loss functions, and flexible exogenous data fusion. Training utilities include batch-based streaming aggregation for evaluation and device synergy to maximize computational efficiency. A comprehensive suite of classical and deep learning models (Linears, CNNs, RNNs, Transformers, and GNNs) is provided within a modular architecture that encourages extension. Released under the MIT license at GitHub, pyFAST provides a compact yet powerful platform for advancing time series research and applications.
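To make the idea of a mask-aware ("sparse") metric concrete, here is a minimal sketch in plain Python. It is not pyFAST's API: the function name `masked_mse` and the convention that a mask value of 1 marks an observed entry are assumptions made only for illustration.

```python
import math


def masked_mse(pred, target, mask):
    """Mean squared error over observed entries only.

    Hypothetical helper, not part of pyFAST. Assumes mask[i] is truthy
    when target[i] was actually observed and falsy when it is missing.
    """
    total, count = 0.0, 0
    for p, t, m in zip(pred, target, mask):
        if m:  # skip missing entries so padding values do not bias the error
            total += (p - t) ** 2
            count += 1
    # With no observed entries the metric is undefined; return NaN.
    return total / count if count else math.nan


pred = [1.0, 2.0, 3.0, 4.0]
target = [1.0, 0.0, 5.0, 0.0]  # zeros at masked positions are placeholders
mask = [1, 0, 1, 0]
result = masked_mse(pred, target, mask)
```

Only the first and third positions contribute here, so the placeholder zeros in `target` have no effect on the score.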

BadDepth: Backdoor Attacks Against Monocular Depth Estimation in the Physical World

May 22, 2025

Abstract: In recent years, deep learning-based Monocular Depth Estimation (MDE) models have been widely applied in fields such as autonomous driving and robotics. However, their vulnerability to backdoor attacks remains unexplored. To fill this gap, we conduct a comprehensive investigation of backdoor attacks against MDE models. Existing backdoor attack methods typically cannot be applied to MDE models, because the label used in MDE is a depth map. To address this, we propose BadDepth, the first backdoor attack targeting MDE models. BadDepth overcomes this limitation by selectively manipulating the target object's depth using an image segmentation model and restoring the surrounding areas via depth completion, thereby generating poisoned datasets for object-level backdoor attacks. To improve robustness in physical-world scenarios, we further introduce digital-to-physical augmentation to bridge the domain gap between the physical world and the digital domain. Extensive experiments on multiple models validate the effectiveness of BadDepth in both the digital domain and the physical world, without being affected by environmental factors.

TransRec: Learning Transferable Recommendation from Mixture-of-Modality Feedback

Jun 13, 2022

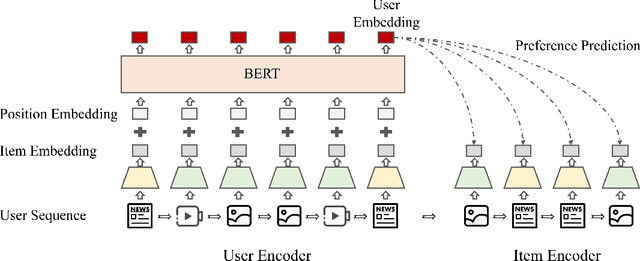

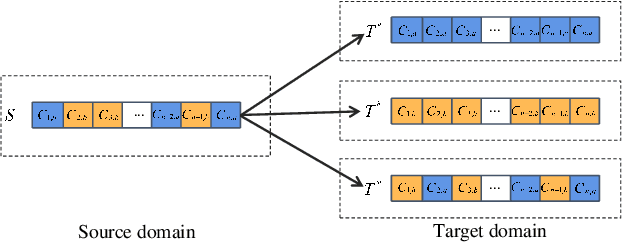

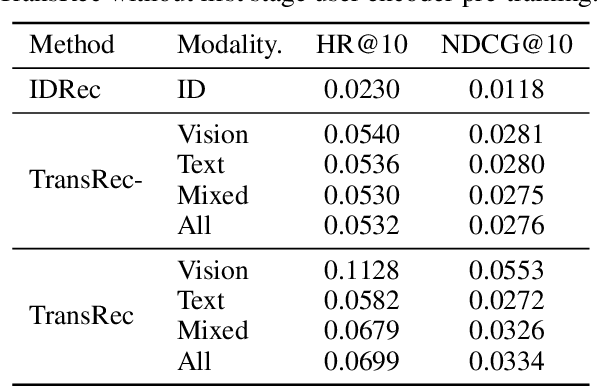

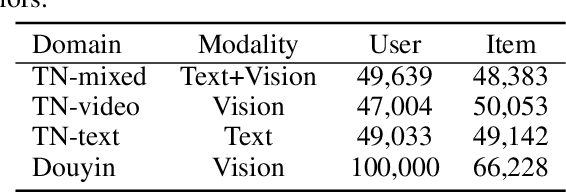

Abstract: Learning big models and then transferring them has become the de facto practice in computer vision (CV) and natural language processing (NLP). However, such a unified paradigm is uncommon for recommender systems (RS). A critical issue that hampers this is that standard recommendation models are built on unshareable identity data, where both users and the items they interact with are represented by unique IDs. In this paper, we study a novel scenario where users' interaction feedback involves mixture-of-modality (MoM) items. We present TransRec, a straightforward modification of the popular ID-based RS framework. TransRec directly learns from MoM feedback in an end-to-end manner, and thus enables effective transfer learning under various scenarios without relying on overlapping users or items. We empirically study the transfer ability of TransRec across four different real-world recommendation settings. In addition, we study its effects by scaling the size of the source and target data. Our results suggest that learning recommenders from MoM feedback provides a promising way to realize universal recommender systems. Our code and datasets will be made available.
