Zhi Guo

Rectify Evaluation Preference: Improving LLMs' Critique on Math Reasoning via Perplexity-aware Reinforcement Learning

Nov 13, 2025

Abstract:To improve the Multi-step Mathematical Reasoning (MsMR) of Large Language Models (LLMs), it is crucial to obtain scalable supervision from the corpus by automatically critiquing mistakes in the MsMR reasoning process and rendering a final verdict on each problem-solution pair. Most existing methods rely on crafting high-quality supervised fine-tuning demonstrations to enhance critiquing capability and pay little attention to the underlying reason for the poor critiquing performance of LLMs. In this paper, we take an orthogonal approach: we quantify and investigate a potential reason, imbalanced evaluation preference, and conduct a statistical preference analysis. Motivated by this analysis, we propose a novel perplexity-aware reinforcement learning algorithm that rectifies the evaluation preference and thereby improves critiquing capability. Specifically, to probe LLMs' critiquing characteristics, we construct a One-to-many Problem-Solution (OPS) benchmark that quantifies how LLMs behave differently when evaluating problem solutions generated by themselves and by others. Then, to investigate this behavior difference in depth, we conduct a perplexity-oriented statistical preference analysis and find an intriguing phenomenon: "LLMs tend to judge solutions with lower perplexity as correct", which we dub imbalanced evaluation preference. To rectify this preference, we use perplexity as the baton in the Group Relative Policy Optimization algorithm, encouraging the LLMs to explore trajectories that judge lower-perplexity solutions as wrong and higher-perplexity solutions as correct. Extensive experimental results on our OPS benchmark and on existing critic benchmarks demonstrate the validity of our method.
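The two quantities this abstract builds on, perplexity and the group-relative advantage used in Group Relative Policy Optimization, can be sketched in a few lines. This is a minimal illustration of the standard definitions only; the abstract does not give the paper's perplexity-aware reward, so none is modeled here. The function names `perplexity` and `group_relative_advantages`, the `eps` stabilizer, and the choice of population standard deviation are assumptions made for this example.

```python
import math
from statistics import mean, pstdev


def perplexity(token_logprobs):
    """Perplexity of a sequence: exp of the negative mean token log-probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and spread of its sampling group.

    Assumption: population standard deviation plus a small eps; real
    implementations may use the sample standard deviation instead.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# A solution whose tokens each had probability 0.5 has perplexity 2.
ppl = perplexity([math.log(0.5)] * 4)

# Four critiques sampled for one problem-solution pair, rewarded 1 if the verdict was right.
advantages = group_relative_advantages([1.0, 0.0, 1.0, 0.0])
```

Lower perplexity means the model finds the solution text more predictable, which is the property the abstract reports as being associated with "correct" verdicts.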

AiAds: Automated and Intelligent Advertising System for Sponsored Search

Jul 28, 2019

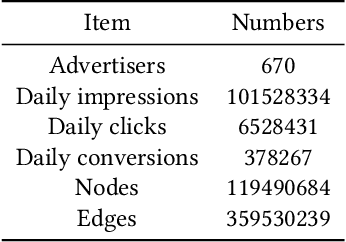

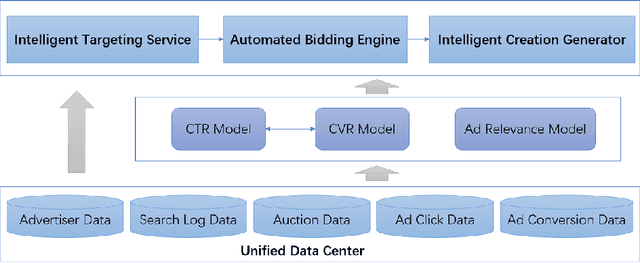

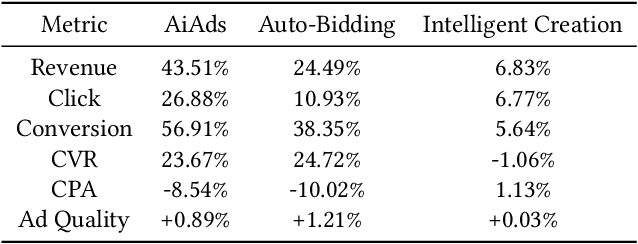

Abstract:Sponsored search has more than 20 years of history and has proven to be a successful business model for online advertising. Based on the pay-per-click pricing model and keyword targeting technology, the sponsored search system runs online auctions to determine the allocations and prices of search advertisements. In the traditional setting, advertisers must manually create many ad creatives and bid on relevant keywords to target their audience. Given the huge amount of search traffic and the wide variety of ad creatives, the limits of manual optimization by advertisers become the main bottleneck for improving the efficiency of this market. Moreover, as many emerging advertising forms and supplies grow, it is crucial for sponsored search platforms to pay more attention to the ROI metrics of ads in order to win advertisers' marketing budgets. In this paper, we present the AiAds system developed at Baidu, which uses machine learning techniques to build an automated and intelligent advertising system. By designing and implementing the automated bidding strategy, the intelligent targeting models and the intelligent creation models, the AiAds system transforms manual optimizations into multiple automated tasks and optimizes these tasks with advanced methods. AiAds is a brand-new architecture for sponsored search systems which changes the bidding language and allocation mechanism, breaks the limit of keyword targeting with an end-to-end ad retrieval framework, and provides global optimization of ad creation. This system can simultaneously and significantly increase the advertiser's campaign performance, the user experience and the revenue of the advertising platform. We present the overall architecture and modeling techniques for each module of the system and share the lessons we learned in solving several key challenges.

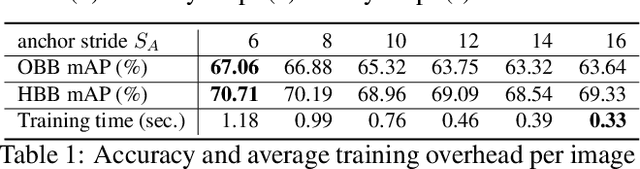

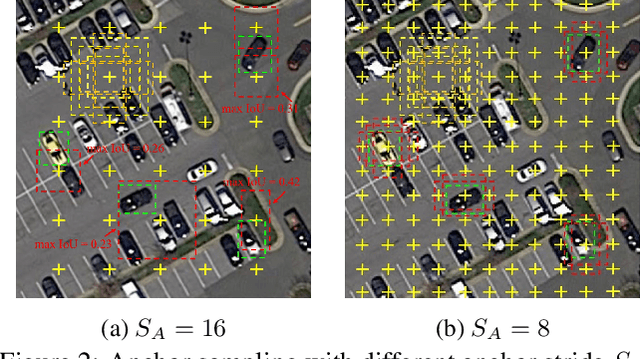

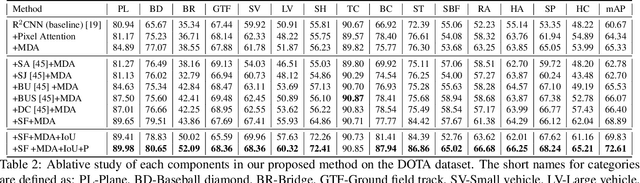

R2CNN++: Multi-Dimensional Attention Based Rotation Invariant Detector with Robust Anchor Strategy

Nov 20, 2018

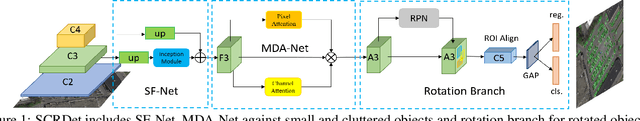

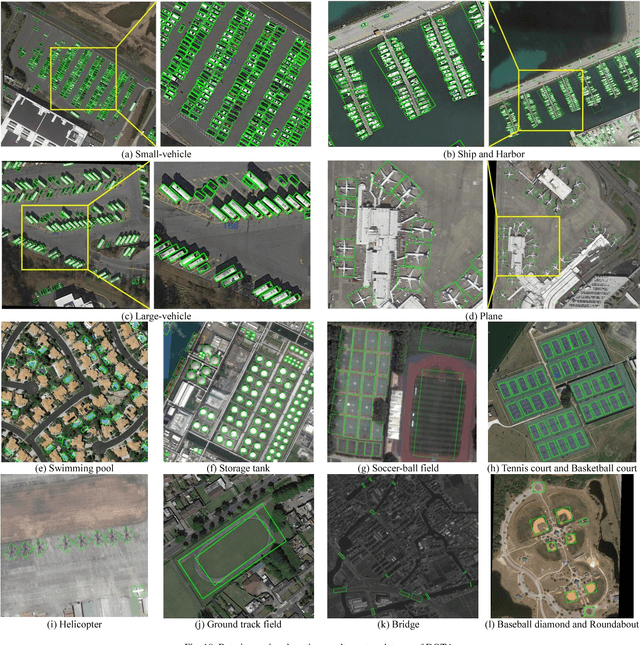

Abstract:Object detection plays a vital role in both natural and aerial scenes and is full of challenges. Although many advanced algorithms have succeeded in natural scenes, progress in aerial scenes has been slow due to the complexity of aerial images and the large degree of freedom of remote sensing objects in scale, orientation, and density. In this paper, a novel multi-category rotation detector is proposed, which can efficiently detect small objects, arbitrarily oriented objects, and dense objects in complex remote sensing images. Specifically, the proposed model adopts a targeted feature fusion strategy called the inception fusion network, which fully considers factors such as feature fusion, anchor sampling, and receptive field to improve the ability to handle small objects. Then we combine the pixel attention network and the channel attention network to weaken noise information and highlight object features. Finally, the rotational object detection algorithm is realized by redefining the rotating bounding box. Experiments on public datasets including DOTA and NWPU VHR-10 demonstrate that the proposed algorithm significantly outperforms state-of-the-art methods. The code and models will be available at https://github.com/DetectionTeamUCAS/R2CNN-Plus-Plus_Tensorflow.

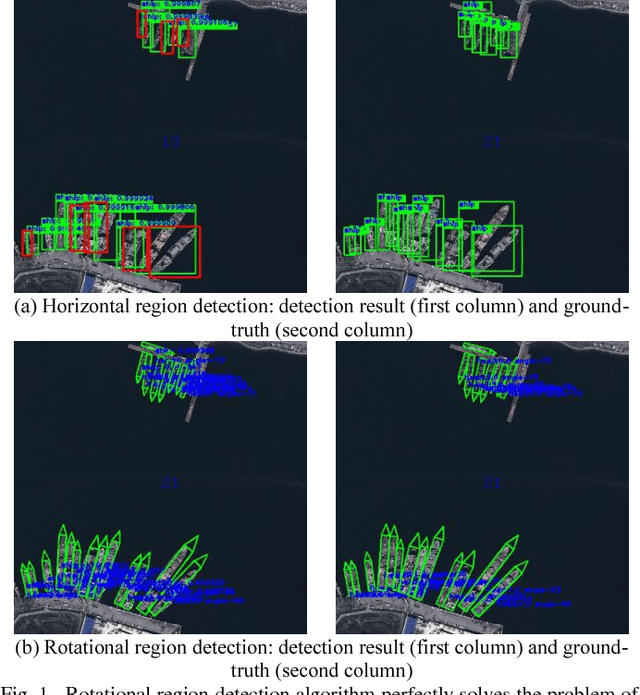

Position Detection and Direction Prediction for Arbitrary-Oriented Ships via Multiscale Rotation Region Convolutional Neural Network

Jun 13, 2018

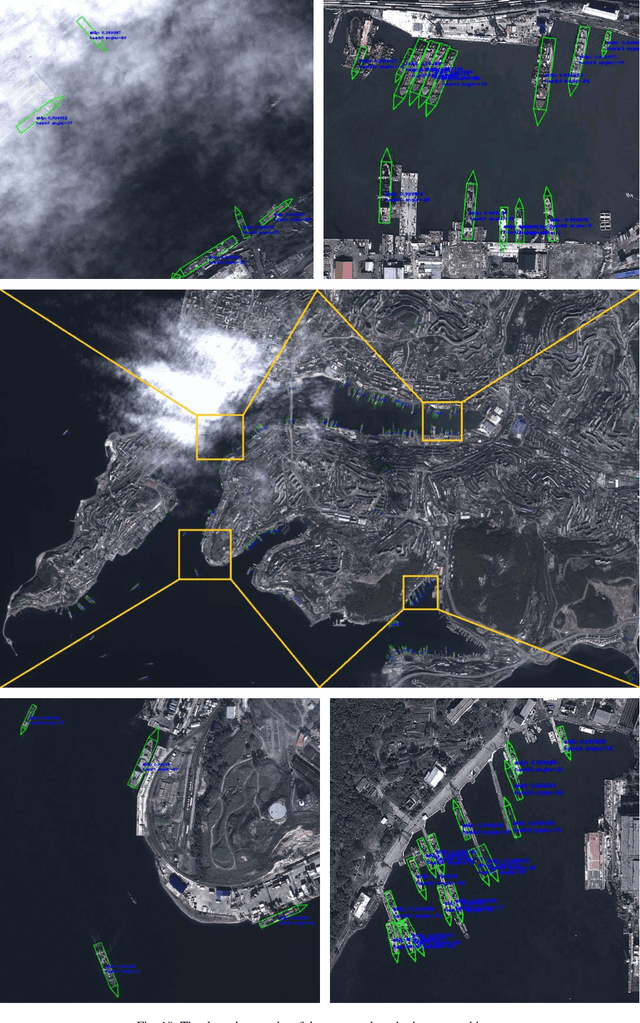

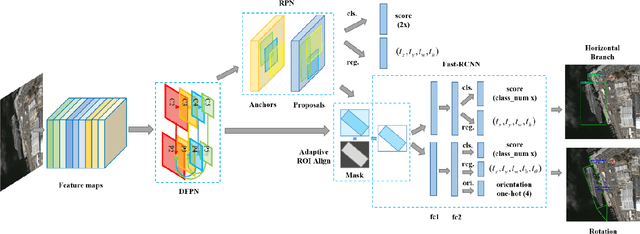

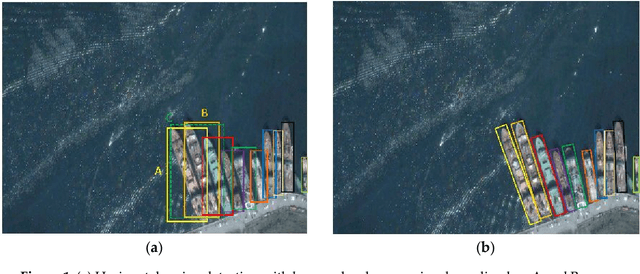

Abstract:Ship detection is of great importance and full of challenges in the field of remote sensing. The complexity of application scenarios, the redundancy of the detection region, and the difficulty of dense ship detection are the main obstacles that limit the success of traditional methods in ship detection. In this paper, we propose a brand new detection model based on a multiscale rotational region convolutional neural network to solve the problems above. This model mainly consists of five consecutive parts: Dense Feature Pyramid Network (DFPN), adaptive region of interest (ROI) Align, rotational bounding box regression, prow direction prediction and rotational non-maximum suppression (R-NMS). First of all, the low-level location information and high-level semantic information are fully utilized through multiscale feature networks. Then, we design adaptive ROI Align to obtain high-quality proposals which retain complete spatial and semantic information. Unlike most previous approaches, the prediction obtained by our method is the minimum bounding rectangle of the object, with fewer redundant regions. Therefore, the rotational region detection framework is more suitable for detecting dense objects than traditional detection models. Additionally, we can find the berthing and sailing direction of a ship through prediction. A detailed evaluation based on the SRSS and DOTA datasets for rotation detection shows that our detection method has competitive performance.
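The claim that a rotated minimum bounding rectangle carries less redundant region than an axis-aligned box can be checked with a small geometric sketch. The five-parameter box `(cx, cy, w, h, theta_deg)` and all function names below are assumptions chosen for illustration; the abstract does not state the paper's exact box parameterization.

```python
import math


def rotated_box_corners(cx, cy, w, h, theta_deg):
    """Corners of a w-by-h rectangle centered at (cx, cy), rotated by theta_deg."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in half]


def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def enclosing_axis_aligned_area(points):
    """Area of the smallest axis-aligned box that contains all points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# A long, narrow "ship" of 10 x 2 units lying at 45 degrees.
corners = rotated_box_corners(0.0, 0.0, 10.0, 2.0, 45.0)
rotated_area = polygon_area(corners)                 # area of the rotated box
aligned_area = enclosing_axis_aligned_area(corners)  # area of the horizontal box
```

For this example the rotated box covers about 20 square units while the enclosing horizontal box covers about 72, so most of the horizontal box is background. For densely berthed ships, that extra area is where neighboring detections overlap.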

Automatic Ship Detection of Remote Sensing Images from Google Earth in Complex Scenes Based on Multi-Scale Rotation Dense Feature Pyramid Networks

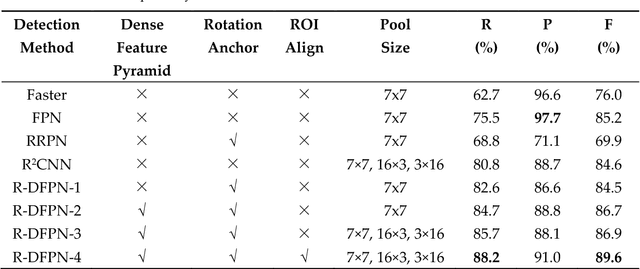

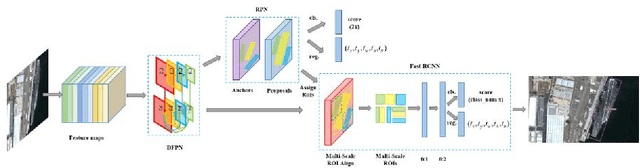

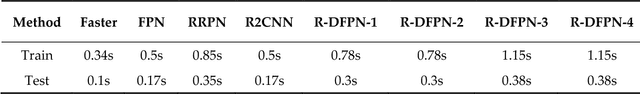

Jun 12, 2018

Abstract:Ship detection has long played a significant role in the field of remote sensing, but it is still full of challenges. The main limitations of traditional ship detection methods usually lie in the complexity of application scenarios, the difficulty of dense object detection and the redundancy of the detection region. To solve these problems, we propose a framework called Rotation Dense Feature Pyramid Networks (R-DFPN), which can effectively detect ships in different scenes including ocean and port. Specifically, we put forward the Dense Feature Pyramid Network (DFPN), which is aimed at solving the problem resulting from the narrow width of ships. Compared with previous multi-scale detectors such as Feature Pyramid Network (FPN), DFPN builds high-level semantic feature maps for all scales by means of dense connections, which enhance feature propagation and encourage feature reuse. Additionally, for the case of ship rotation and dense arrangement, we design a rotation anchor strategy to predict the minimum circumscribed rectangle of the object so as to reduce the redundant detection region and improve recall. Furthermore, we also propose multi-scale ROI Align for the purpose of maintaining the completeness of semantic and spatial information. Experiments on remote sensing images from Google Earth for ship detection show that our detection method based on the R-DFPN representation achieves state-of-the-art performance.

* 14 pages, 11 figures