Ze Cao

InDL: A New Dataset and Benchmark for In-Diagram Logic Interpretation based on Visual Illusion

Jun 05, 2023

Abstract: This paper introduces a novel approach to evaluating deep learning models' capacity for in-diagram logic interpretation. Leveraging the intriguing realm of visual illusions, we establish a unique dataset, InDL, designed to rigorously test and benchmark these models. Deep learning has witnessed remarkable progress in domains such as computer vision and natural language processing. However, models often stumble in tasks requiring logical reasoning due to their inherent 'black box' characteristics, which obscure the decision-making process. Our work presents a new lens to understand these models better by focusing on their handling of visual illusions -- a complex interplay of perception and logic. We utilize six classic geometric optical illusions to create a comparative framework between human and machine visual perception. This methodology offers a quantifiable measure to rank models, elucidating potential weaknesses and providing actionable insights for model improvements. Our experimental results affirm the efficacy of our benchmarking strategy, demonstrating its ability to effectively rank models based on their logic interpretation ability. As part of our commitment to reproducible research, the source code and datasets will be made publicly available at https://github.com/rabbit-magic-wh/InDL

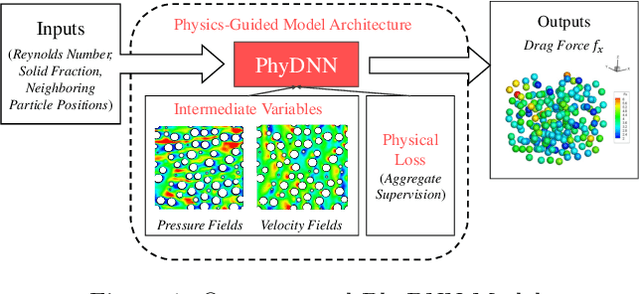

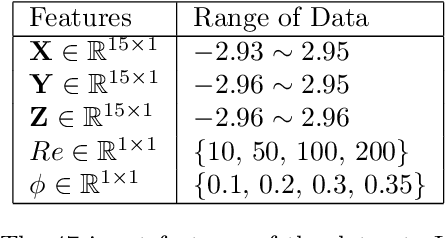

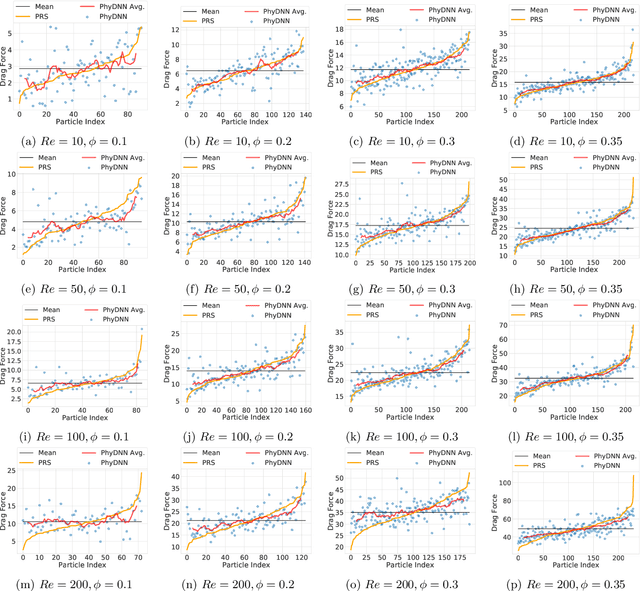

Physics-guided Design and Learning of Neural Networks for Predicting Drag Force on Particle Suspensions in Moving Fluids

Nov 06, 2019

Abstract: Physics-based simulations are often used to model and understand complex physical systems and processes in domains like fluid dynamics. Such simulations, although used frequently, have many limitations, which can arise either from the inability to accurately model a physical process owing to incomplete knowledge about certain facets of the process, or from the underlying process being too complex to accurately encode into a simulation model. In such situations, it is often useful to rely on machine learning methods to fill the gap by learning a model of the complex physical process directly from simulation data. However, as data generation through simulations is costly, we need to develop models that are cognizant of data paucity issues. In such scenarios it is often helpful if the rich physical knowledge of the application domain is incorporated in the architectural design of machine learning models. Further, we can also use information from physics-based simulations to guide the learning process, using aggregate supervision to favorably constrain it. In this paper, we propose PhyDNN, a deep learning model using physics-guided structural priors and physics-guided aggregate supervision for modeling the drag forces acting on each particle in a Computational Fluid Dynamics-Discrete Element Method (CFD-DEM) simulation. We conduct extensive experiments in the context of drag force prediction and showcase the usefulness of including physics knowledge in our deep learning formulation, both in the architectural design and in the learning process. Our proposed PhyDNN model has been compared to several state-of-the-art models and achieves a significant performance improvement of 8.46% on average across all baseline models. The source code has been made available and the dataset used is detailed in [1, 2].
