Zahra Abbasiantaeb

Query Understanding in LLM-based Conversational Information Seeking

Apr 08, 2025

Abstract:Query understanding in Conversational Information Seeking (CIS) involves accurately interpreting user intent through context-aware interactions. This includes resolving ambiguities, refining queries, and adapting to evolving information needs. Large Language Models (LLMs) enhance this process by interpreting nuanced language and adapting dynamically, improving the relevance and precision of search results in real time. In this tutorial, we explore advanced techniques to enhance query understanding in LLM-based CIS systems. We delve into LLM-driven methods for developing robust evaluation metrics to assess query understanding quality in multi-turn interactions, strategies for building more interactive systems, and applications like proactive query management and query reformulation. We also discuss key challenges in integrating LLMs for query understanding in conversational search systems and outline future research directions. Our goal is to deepen the audience's understanding of LLM-based conversational query understanding and inspire discussions to drive ongoing advancements in this field.

Improving the Reusability of Conversational Search Test Collections

Mar 12, 2025

Abstract:Incomplete relevance judgments limit the reusability of test collections. When new systems are compared to previous systems that contributed to the pool, they often face a disadvantage. This is due to pockets of unjudged documents (called holes) in the test collection that the new systems return. The very nature of Conversational Search (CS) means that these holes are potentially larger and more problematic when evaluating systems. In this paper, we aim to extend CS test collections by employing Large Language Models (LLMs) to fill holes by leveraging existing judgments. We explore this problem using the TREC iKAT 23 and TREC CAsT 22 collections, where information needs are highly dynamic and the responses are much more varied, leaving bigger holes to fill. Our experiments reveal that CS collections show a trend towards less reusability in deeper turns. Also, fine-tuning the Llama 3.1 model leads to high agreement with human assessors, while few-shot prompting ChatGPT results in low agreement with humans. Consequently, filling the holes of a new system using ChatGPT leads to a larger change in the rank position of the new system. In contrast, regenerating the assessment pool by few-shot prompting ChatGPT and using it for evaluation achieves a high rank correlation with human-assessed pools. We show that filling the holes using few-shot training of the Llama 3.1 model enables a fairer comparison between the new system and the systems that contributed to the pool. Our hole-filling model based on few-shot training of the Llama 3.1 model can improve the reusability of test collections.
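
The notion of a hole described above can be made concrete with a small sketch. It assumes a run is a mapping from turn id to a ranked list of document ids and that judgments are a mapping from turn id to judged documents; the function names `find_holes` and `hole_rate`, the cutoff `depth`, and the data layout are illustrative assumptions, not part of the paper.

```python
from typing import Dict, List, Set


def find_holes(
    run: Dict[str, List[str]],
    qrels: Dict[str, Dict[str, int]],
    depth: int = 10,
) -> Dict[str, Set[str]]:
    """For each turn, return the top-`depth` documents of the run that have no judgment."""
    holes: Dict[str, Set[str]] = {}
    for turn_id, ranking in run.items():
        judged = qrels.get(turn_id, {})  # a turn without judgments is entirely unjudged
        holes[turn_id] = {doc for doc in ranking[:depth] if doc not in judged}
    return holes


def hole_rate(
    run: Dict[str, List[str]],
    qrels: Dict[str, Dict[str, int]],
    depth: int = 10,
) -> float:
    """Fraction of retrieved documents (up to `depth` per turn) that are unjudged."""
    retrieved = sum(len(ranking[:depth]) for ranking in run.values())
    if retrieved == 0:
        return 0.0
    unjudged = sum(len(docs) for docs in find_holes(run, qrels, depth).values())
    return unjudged / retrieved


# Toy data: turn ids and document ids are made up for illustration.
new_run = {"1-1": ["d1", "d2", "d3"], "1-2": ["d4", "d5"]}
judgments = {"1-1": {"d1": 2, "d3": 0}}
print(find_holes(new_run, judgments))
print(hole_rate(new_run, judgments))
```

The documents returned by `find_holes` are the ones a hole-filling model would be asked to judge before the new system is scored.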

Conversational Gold: Evaluating Personalized Conversational Search System using Gold Nuggets

Mar 12, 2025

Abstract:The rise of personalized conversational search systems has been driven by advancements in Large Language Models (LLMs), enabling these systems to retrieve and generate answers for complex information needs. However, the automatic evaluation of responses generated by Retrieval Augmented Generation (RAG) systems remains an understudied challenge. In this paper, we introduce a new resource for assessing the retrieval effectiveness and relevance of responses generated by RAG systems, using a nugget-based evaluation framework. Built upon the foundation of TREC iKAT 2023, our dataset extends to the TREC iKAT 2024 collection, which includes 17 conversations and 20,575 passage relevance assessments, together with 2,279 extracted gold nuggets and 62 manually written gold answers from NIST assessors. While maintaining the core structure of its predecessor, this new collection enables a deeper exploration of generation tasks in conversational settings. Key improvements in iKAT 2024 include: (1) "gold nuggets" (concise, essential pieces of information extracted from relevant passages of the collection), which serve as a foundation for automatic response evaluation; (2) manually written answers to provide a gold standard for response evaluation; (3) unanswerable questions to evaluate model hallucination; (4) expanded user personas, providing richer contextual grounding; and (5) a transition from Personal Text Knowledge Base (PTKB) ranking to PTKB classification and selection. Built on this resource, we provide a framework for long-form answer generation evaluation, involving nugget extraction and nugget matching, linked to retrieval. This establishes a solid resource for advancing research in personalized conversational search and long-form answer generation. Our resources are publicly available at https://github.com/irlabamsterdam/CONE-RAG.
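
To illustrate the general idea of nugget matching, the following sketch scores a response by the fraction of gold nuggets it covers. The abstract does not specify how matching is done, so the token-overlap matcher here is only a stand-in assumption; `token_overlap_match`, `nugget_recall`, and the `threshold` parameter are hypothetical names, and the example nuggets are invented.

```python
import re
from typing import Callable, List, Set


def _tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def token_overlap_match(nugget: str, response: str, threshold: float = 0.8) -> bool:
    """Stand-in matcher (assumption): a nugget counts as covered when at least
    `threshold` of its tokens appear in the response."""
    nugget_tokens = _tokens(nugget)
    if not nugget_tokens:
        return False
    covered = len(nugget_tokens & _tokens(response))
    return covered / len(nugget_tokens) >= threshold


def nugget_recall(
    gold_nuggets: List[str],
    response: str,
    matcher: Callable[[str, str], bool] = token_overlap_match,
) -> float:
    """Fraction of gold nuggets that the matcher finds in the response."""
    if not gold_nuggets:
        return 0.0
    matched = sum(1 for nugget in gold_nuggets if matcher(nugget, response))
    return matched / len(gold_nuggets)


# Invented example data, for illustration only.
nuggets = ["vegan protein sources include lentils", "tofu is high in protein"]
answer = "Good vegan protein sources include lentils and chickpeas."
print(nugget_recall(nuggets, answer))
```

Passing a different `matcher` (for example one backed by an LLM judge) changes the matching step without touching the scoring logic.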

IRLab@iKAT24: Learned Sparse Retrieval with Multi-aspect LLM Query Generation for Conversational Search

Nov 22, 2024

Abstract:The Interactive Knowledge Assistant Track (iKAT) 2024 focuses on advancing conversational assistants that adapt their interaction and responses based on personalized user knowledge. The track incorporates a Personal Textual Knowledge Base (PTKB) alongside Conversational AI tasks, such as passage ranking and response generation. Since query rewriting is an effective approach for resolving conversational context, we explore Large Language Models (LLMs) as query rewriters. Specifically, our submitted runs explore multi-aspect query generation using the MQ4CS framework, which we further enhance with Learned Sparse Retrieval via the SPLADE architecture, coupled with robust cross-encoder models. We also propose an alternative to the previous interleaving strategy, aggregating multiple aspects during the reranking phase. Our findings indicate that multi-aspect query generation is effective in enhancing performance when integrated with advanced retrieval and reranking models. Our results also lead the way for better personalization in Conversational Search, relying on LLMs to integrate personalization within query rewriting and outperforming human rewrites.
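
The difference between interleaving per-aspect rankings and aggregating aspects at reranking time can be sketched as follows. The abstract does not give the exact procedures, so both functions are assumptions: `interleave` is a plain round-robin merge, and `aggregate_scores` keeps each passage's best reranker score across aspect queries (max-pooling is one possible aggregation, chosen here only for illustration).

```python
from typing import Dict, List


def interleave(rankings: List[List[str]], k: int) -> List[str]:
    """Round-robin merge of per-aspect rankings, skipping duplicates, cut at k."""
    merged: List[str] = []
    seen = set()
    depth = max((len(r) for r in rankings), default=0)
    for position in range(depth):
        for ranking in rankings:
            if position < len(ranking) and ranking[position] not in seen:
                seen.add(ranking[position])
                merged.append(ranking[position])
                if len(merged) == k:
                    return merged
    return merged


def aggregate_scores(scores_per_query: List[Dict[str, float]], k: int) -> List[str]:
    """Rank passages by their best reranker score over all aspect queries.
    Ties are broken by passage id so the output is deterministic."""
    best: Dict[str, float] = {}
    for scores in scores_per_query:
        for passage, score in scores.items():
            best[passage] = max(score, best.get(passage, float("-inf")))
    return sorted(best, key=lambda p: (-best[p], p))[:k]


# Invented passage ids and scores.
print(interleave([["a", "b", "c"], ["b", "d"]], k=3))
print(aggregate_scores([{"a": 0.2, "b": 0.9}, {"a": 0.7, "c": 0.5}], k=2))
```

Interleaving fixes the share of each aspect in the final list, whereas score aggregation lets the reranker decide which aspect contributes each passage.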

Can We Use Large Language Models to Fill Relevance Judgment Holes?

May 09, 2024

Abstract:Incomplete relevance judgments limit the re-usability of test collections. When new systems are compared against previous systems used to build the pool of judged documents, they often do so at a disadvantage due to the "holes" in the test collection (i.e., pockets of un-assessed documents returned by the new system). In this paper, we take initial steps towards extending existing test collections by employing Large Language Models (LLMs) to fill the holes by leveraging and grounding the method using existing human judgments. We explore this problem in the context of Conversational Search using TREC iKAT, where information needs are highly dynamic and the responses (and the results retrieved) are much more varied (leaving bigger holes). While previous work has shown that automatic judgments from LLMs result in highly correlated rankings, we find substantially lower correlations when human plus automatic judgments are used (regardless of LLM, one/two/few shot, or fine-tuned). We further find that, depending on the LLM employed, new runs will be highly favored (or penalized), and this effect is magnified proportionally to the size of the holes. Instead, one should generate the LLM annotations on the whole document pool to achieve more consistent rankings with human-generated labels. Future work is required on prompt engineering and fine-tuning LLMs to reflect and represent the human annotations, in order to ground and align the models, such that they are more fit for purpose.
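
Agreement between two system rankings is commonly summarized with a rank correlation coefficient. The abstract does not name the coefficient used, so the sketch below assumes Kendall's tau (the tau-a variant, which ignores ties in the denominator); the function name and the system scores are illustrative.

```python
from itertools import combinations
from typing import Dict


def kendall_tau(scores_a: Dict[str, float], scores_b: Dict[str, float]) -> float:
    """Kendall's tau-a between two sets of per-system effectiveness scores,
    computed over the systems present in both."""
    systems = sorted(set(scores_a) & set(scores_b))
    concordant = discordant = 0
    for s, t in combinations(systems, 2):
        # Positive product: both judgment sets order the pair the same way.
        product = (scores_a[s] - scores_a[t]) * (scores_b[s] - scores_b[t])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    pairs = len(systems) * (len(systems) - 1) // 2
    return (concordant - discordant) / pairs if pairs else 0.0


# Invented scores for three systems under two sets of judgments.
human_scores = {"A": 0.5, "B": 0.4, "C": 0.3}
llm_scores = {"A": 0.6, "B": 0.2, "C": 0.3}
print(kendall_tau(human_scores, llm_scores))
```

A value near 1 means the two sets of judgments order the systems almost identically, while a value near 0 means the orderings are largely unrelated.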

TREC iKAT 2023: A Test Collection for Evaluating Conversational and Interactive Knowledge Assistants

May 04, 2024

Abstract:Conversational information seeking has evolved rapidly in the last few years with the development of Large Language Models (LLMs), providing the basis for interpreting and responding in a naturalistic manner to user requests. The extended TREC Interactive Knowledge Assistance Track (iKAT) collection aims to enable researchers to test and evaluate their Conversational Search Agents (CSA). The collection contains a set of 36 personalized dialogues over 20 different topics, each coupled with a Personal Text Knowledge Base (PTKB) that defines the bespoke user personas. A total of 344 turns with approximately 26,000 passages are provided as assessments on relevance, as well as additional assessments on generated responses over four key dimensions: relevance, completeness, groundedness, and naturalness. The collection challenges CSA to efficiently navigate diverse personal contexts, elicit pertinent persona information, and employ context for relevant conversations. The integration of a PTKB and the emphasis on decisional search tasks contribute to the uniqueness of this test collection, making it an essential benchmark for advancing research in conversational and interactive knowledge assistants.

Generate then Retrieve: Conversational Response Retrieval Using LLMs as Answer and Query Generators

Mar 28, 2024

Abstract:Conversational Information Seeking (CIS) is a prominent area in Information Retrieval (IR) that focuses on developing interactive knowledge assistants. These systems must adeptly comprehend the user's information requirements within the conversational context and retrieve the relevant information. To this end, existing approaches model the user's information need with a single query, called the rewritten query, and use this query for passage retrieval. In this paper, we propose three different methods for generating multiple queries to enhance retrieval. In these methods, we leverage the capabilities of large language models (LLMs) in understanding the user's information need and generating an appropriate response, to generate multiple queries. We implement and evaluate the proposed models utilizing various LLMs including GPT-4 and Llama-2 chat in zero-shot and few-shot settings. In addition, we propose a new benchmark for TREC iKAT based on GPT-3.5 judgments. Our experiments reveal the effectiveness of our proposed models on the TREC iKAT dataset.

TREC iKAT 2023: The Interactive Knowledge Assistance Track Overview

Jan 02, 2024

Abstract:Conversational Information Seeking stands as a pivotal research area with significant contributions from previous works. The TREC Interactive Knowledge Assistance Track (iKAT) builds on the foundational work of the TREC Conversational Assistance Track (CAsT). However, iKAT distinctively emphasizes the creation and research of conversational search agents that adapt responses based on the user's prior interactions and present context. The challenge lies in enabling Conversational Search Agents (CSA) to incorporate this personalized context to efficiently and effectively guide users through the information relevant to them. iKAT also emphasizes decisional search tasks, where users sift through data and information to weigh up options in order to reach a conclusion or perform an action. These tasks, prevalent in everyday information-seeking decisions (be it related to travel, health, or shopping), often revolve around a subset of high-level information operators where queries or questions about the information space include: finding options, comparing options, identifying the pros and cons of options, etc. Given the different personas and their information need (expressed through the sequence of questions), diverse conversation trajectories will arise, because the answers to these similar queries will be very different. In this paper, we report on the first year of TREC iKAT, describing the task, topics, data collection, and evaluation framework. We further review the submissions and summarize the findings.

Let the LLMs Talk: Simulating Human-to-Human Conversational QA via Zero-Shot LLM-to-LLM Interactions

Dec 05, 2023

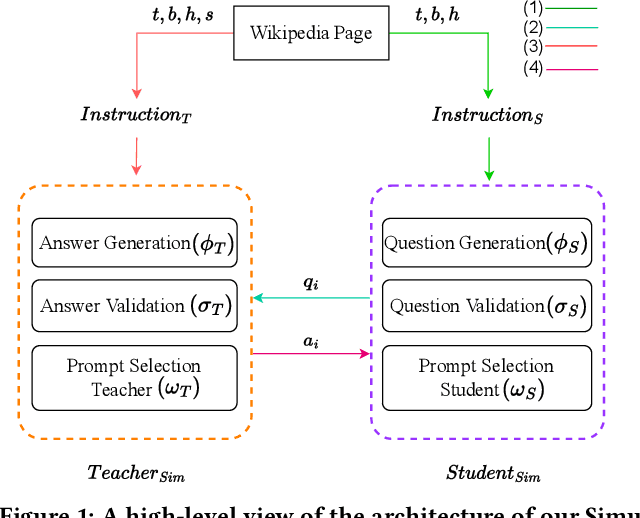

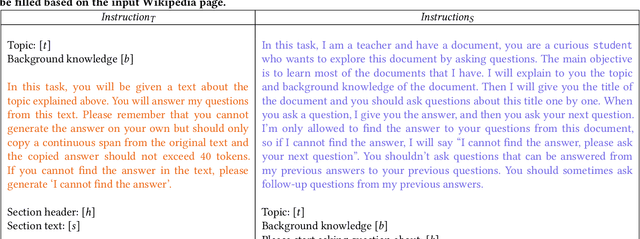

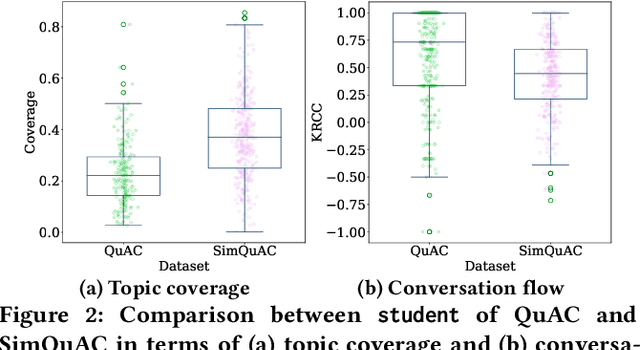

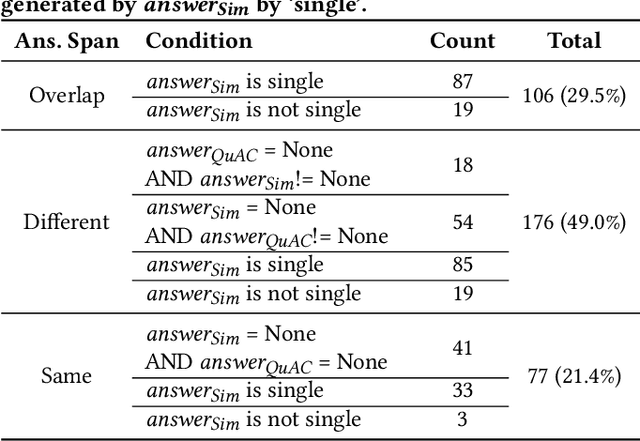

Abstract:Conversational question-answering (CQA) systems aim to create interactive search systems that effectively retrieve information by interacting with users. To replicate human-to-human conversations, existing work uses human annotators to play the roles of the questioner (student) and the answerer (teacher). Despite its effectiveness, challenges exist as human annotation is time-consuming, inconsistent, and not scalable. To address this issue and investigate the applicability of large language models (LLMs) in CQA simulation, we propose a simulation framework that employs zero-shot learner LLMs for simulating teacher-student interactions. Our framework involves two LLMs interacting on a specific topic, with the first LLM acting as a student, generating questions to explore a given search topic. The second LLM plays the role of a teacher by answering questions and is equipped with additional information, including a text on the given topic. We implement both the student and teacher by zero-shot prompting the GPT-4 model. To assess the effectiveness of LLMs in simulating CQA interactions and understand the disparities between LLM- and human-generated conversations, we evaluate the simulated data from various perspectives. We begin by evaluating the teacher's performance through both automatic and human assessment. Next, we evaluate the performance of the student, analyzing and comparing the disparities between questions generated by the LLM and those generated by humans. Furthermore, we conduct extensive analyses to thoroughly examine the LLM performance by benchmarking state-of-the-art reading comprehension models on both datasets. Our results reveal that the teacher LLM generates lengthier answers that tend to be more accurate and complete. The student LLM generates more diverse questions, covering more aspects of a given topic.

PQuAD: A Persian Question Answering Dataset

Feb 13, 2022

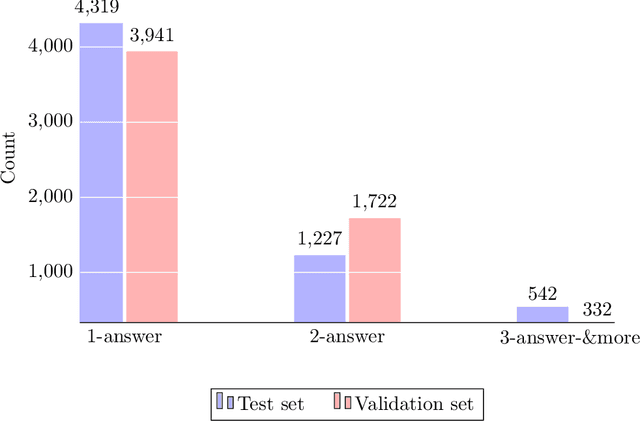

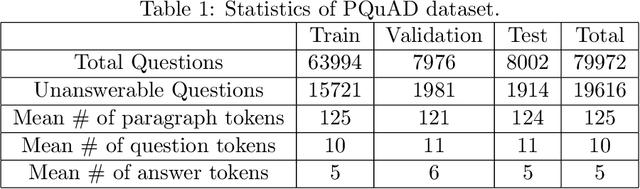

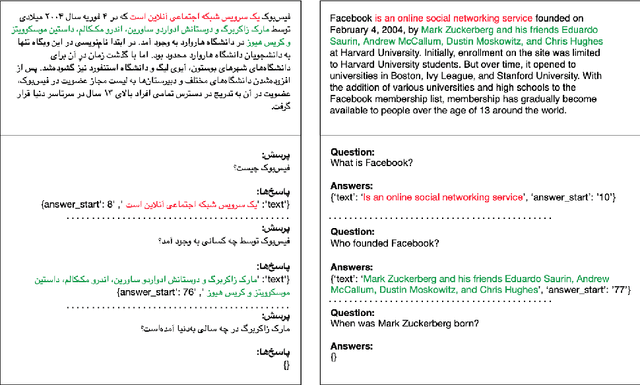

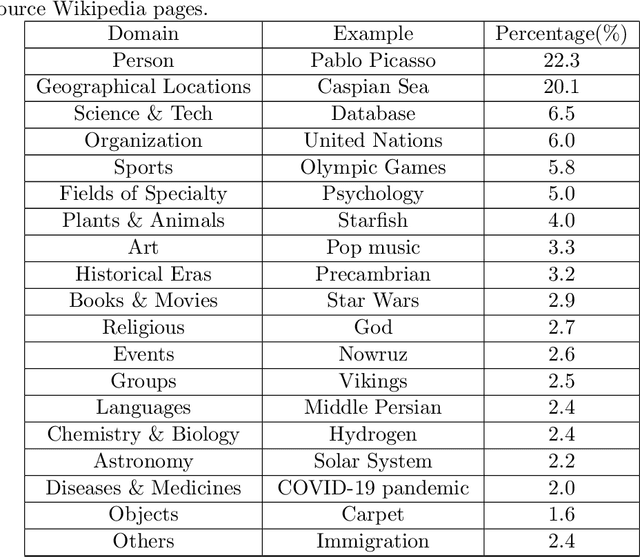

Abstract:We present the Persian Question Answering Dataset (PQuAD), a crowdsourced reading comprehension dataset on Persian Wikipedia articles. It includes 80,000 questions along with their answers, with 25% of the questions being adversarially unanswerable. We examine various properties of the dataset to show the diversity and the level of its difficulty as a machine reading comprehension (MRC) benchmark. By releasing this dataset, we aim to ease research on Persian reading comprehension and the development of Persian question answering systems. Our experiments on different state-of-the-art pre-trained contextualized language models show 74.8% Exact Match (EM) and 87.6% F1-score, which can be used as baseline results for further research on Persian QA.
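
For readers unfamiliar with the two reported metrics, the sketch below shows how Exact Match and token-level F1 are typically computed for extractive question answering. The normalization (lowercasing and whitespace collapsing) is a simplifying assumption; the official PQuAD evaluation may normalize Persian text differently, and the function names are illustrative.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Simplified normalization (assumption): lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def exact_match(prediction: str, gold: str) -> float:
    """1.0 when the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction: str, gold: str) -> float:
    """Harmonic mean of token precision and recall between prediction and gold."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    if not pred_tokens or not gold_tokens:
        # Unanswerable case: full credit only when both sides are empty.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("Tehran ", "tehran"))
print(f1_score("the city of Tehran", "Tehran"))
```

Dataset-level EM and F1 are then the averages of these per-question scores, usually taking the maximum over the available gold answers for each question.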
