Yung-Han Ho

Learned Video Compression for YUV 4:2:0 Content Using Flow-based Conditional Inter-frame Coding

Oct 15, 2022

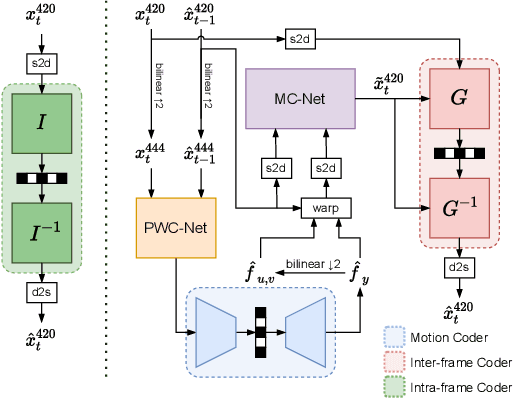

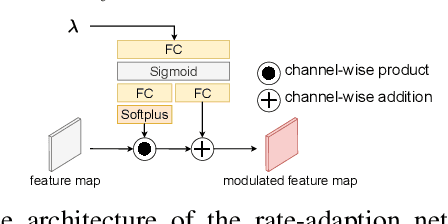

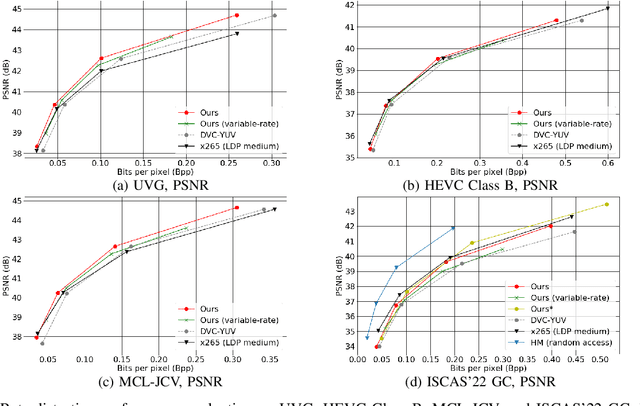

Abstract: This paper proposes a learning-based video compression framework for variable-rate coding on YUV 4:2:0 content. Most existing learning-based video compression models adopt the traditional hybrid-based coding architecture, which involves temporal prediction followed by residual coding. However, recent studies have shown that residual coding is sub-optimal from the information-theoretic perspective. In addition, most existing models are optimized with respect to RGB content. Furthermore, they require separate models for variable-rate coding. To address these issues, this work presents an attempt to incorporate conditional inter-frame coding for YUV 4:2:0 content. We introduce a conditional flow-based inter-frame coder to improve the inter-frame coding efficiency. To adapt our codec to YUV 4:2:0 content, we adopt a simple strategy of using space-to-depth and depth-to-space conversions. Lastly, we employ a rate-adaption net to achieve variable-rate coding without training multiple models. Experimental results show that our model performs better than x265 on the UVG and MCL-JCV datasets in terms of PSNR-YUV. However, on the more challenging datasets from ISCAS'22 GC, there is still ample room for improvement. This insufficient performance is due to the lack of inter-frame coding capability at a large GOP size and can be mitigated by increasing the model capacity and applying an error propagation-aware training strategy.
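The space-to-depth and depth-to-space conversions mentioned in the abstract can be illustrated with a minimal NumPy sketch. The abstract does not give the tensor layout, so the 6-channel packing below (four luma sub-planes followed by U and V) and the helper names `pack_yuv420` and `unpack_yuv420` are assumptions made for illustration only.

```python
import numpy as np

def space_to_depth(plane, block=2):
    """Rearrange an (H, W) plane into (block*block, H//block, W//block)."""
    h, w = plane.shape
    assert h % block == 0 and w % block == 0, "plane size must be divisible by block"
    x = plane.reshape(h // block, block, w // block, block)
    # Move the two intra-block offsets to the front and merge them into channels.
    return x.transpose(1, 3, 0, 2).reshape(block * block, h // block, w // block)

def depth_to_space(channels, block=2):
    """Inverse of space_to_depth: (block*block, h, w) -> (h*block, w*block)."""
    c, h, w = channels.shape
    assert c == block * block, "channel count must equal block*block"
    x = channels.reshape(block, block, h, w)
    return x.transpose(2, 0, 3, 1).reshape(h * block, w * block)

def pack_yuv420(y, u, v):
    """Hypothetical packing: 4 luma sub-planes + U + V at chroma resolution."""
    return np.concatenate([space_to_depth(y), u[None], v[None]], axis=0)

def unpack_yuv420(packed):
    """Recover the full-resolution Y plane and the two chroma planes."""
    return depth_to_space(packed[:4]), packed[4], packed[5]

# Toy 4x4 luma plane with 2x2 chroma planes, as in 4:2:0 subsampling.
y = np.arange(16, dtype=np.float32).reshape(4, 4)
u = np.zeros((2, 2), dtype=np.float32)
v = np.ones((2, 2), dtype=np.float32)
packed = pack_yuv420(y, u, v)
y_rec, u_rec, v_rec = unpack_yuv420(packed)
```

Packing luma this way brings all three components to the same spatial resolution, so a single network can process them as one tensor, and the rearrangement is lossless.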

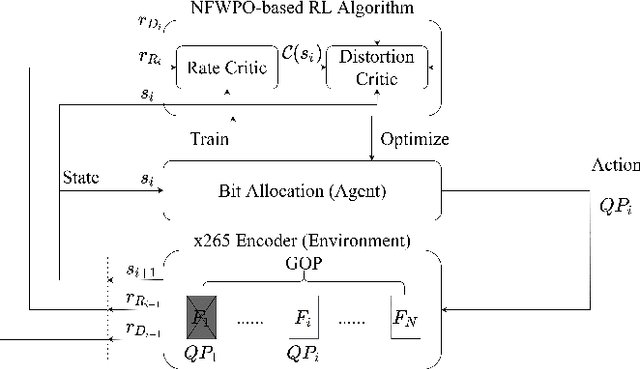

Neural Frank-Wolfe Policy Optimization for Region-of-Interest Intra-Frame Coding with HEVC/H.265

Sep 27, 2022

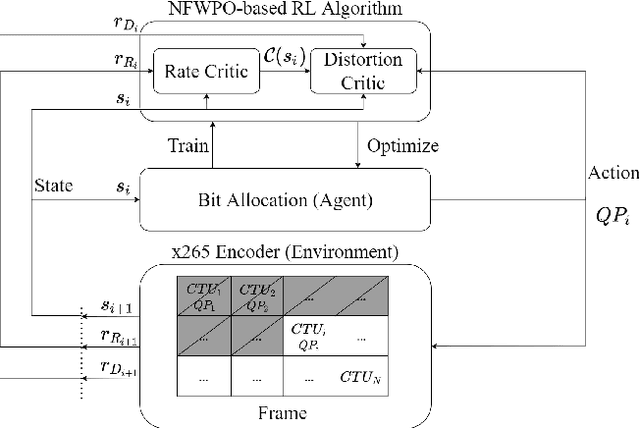

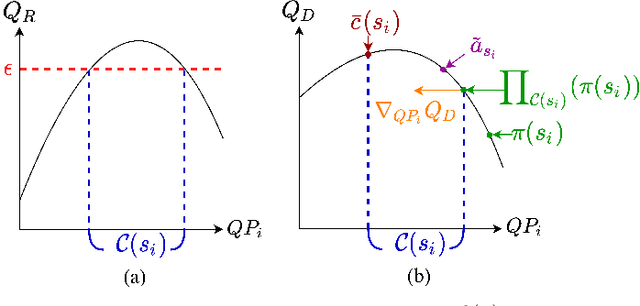

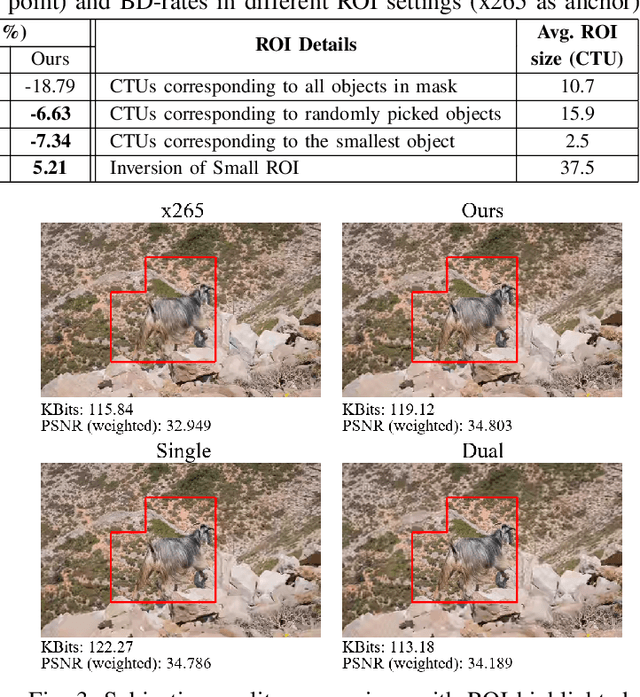

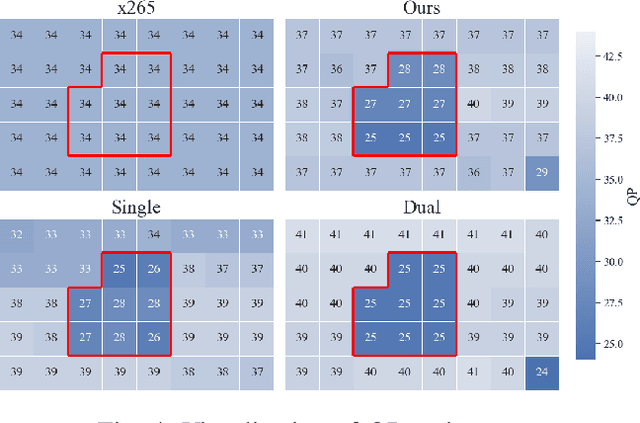

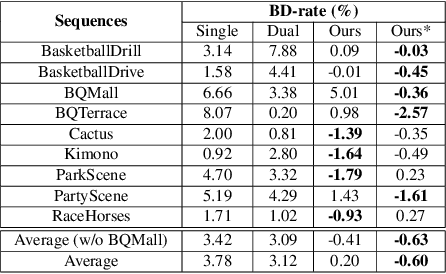

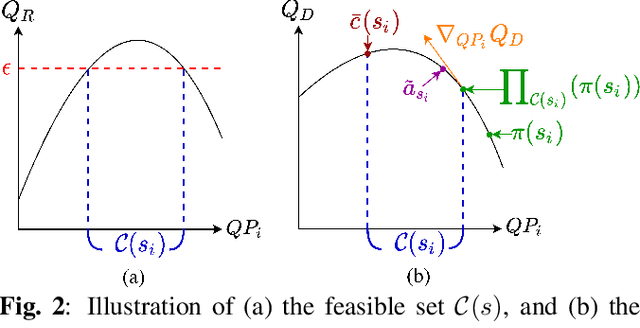

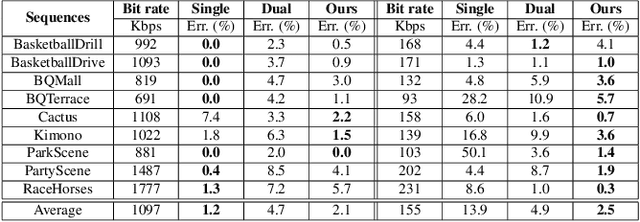

Abstract: This paper presents a reinforcement learning (RL) framework that utilizes Frank-Wolfe policy optimization to solve Coding-Tree-Unit (CTU) bit allocation for Region-of-Interest (ROI) intra-frame coding. Most previous RL-based methods employ the single-critic design, where the rewards for distortion minimization and rate regularization are weighted by an empirically chosen hyper-parameter. Recently, a dual-critic design was proposed to update the actor by alternating the rate and distortion critics. However, its convergence is not guaranteed. To address these issues, we introduce Neural Frank-Wolfe Policy Optimization (NFWPO) in formulating the CTU-level bit allocation as an action-constrained RL problem. In this new framework, we exploit a rate critic to predict a feasible set of actions. With this feasible set, a distortion critic is invoked to update the actor to maximize the ROI-weighted image quality subject to a rate constraint. Experimental results produced with x265 confirm the superiority of the proposed method over the other baselines.

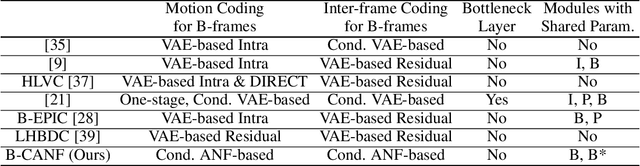

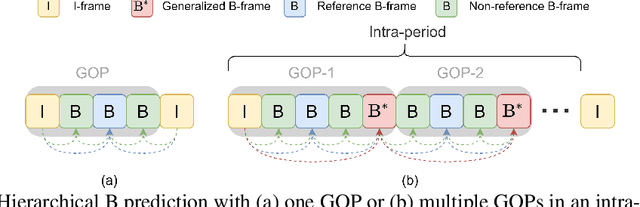

B-CANF: Adaptive B-frame Coding with Conditional Augmented Normalizing Flows

Sep 05, 2022

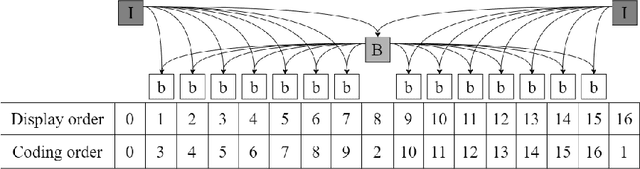

Abstract: This work introduces a B-frame coding framework, termed B-CANF, that exploits conditional augmented normalizing flows for B-frame coding. Learned B-frame coding is less explored and more challenging. Motivated by recent advances in conditional P-frame coding, B-CANF is the first attempt at applying flow-based models to both conditional motion and inter-frame coding. B-CANF features frame-type adaptive coding that learns better bit allocation for hierarchical B-frame coding. B-CANF also introduces a special type of B-frame, called B*-frame, to mimic P-frame coding. On commonly used datasets, B-CANF achieves state-of-the-art compression performance, showing comparable BD-rate results (in terms of PSNR-RGB) to HM-16.23 under the random access configuration.
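Hierarchical B-frame coding can be pictured as a recursive bisection of the GOP. The sketch below assumes a dyadic hierarchy in which frames 0 and `gop_size` are already-coded references; the exact prediction structure used by B-CANF is not stated in the abstract, and `hierarchical_b_order` is a hypothetical helper.

```python
def hierarchical_b_order(gop_size):
    """Return (frame, left_ref, right_ref, level) tuples in coding order.

    Assumes frames 0 and gop_size are already coded and serve as the
    outermost references; every B-frame is predicted from the two nearest
    frames coded before it.
    """
    order = []

    def split(left, right, level):
        if right - left < 2:
            return  # no frame strictly between the two references
        mid = (left + right) // 2
        order.append((mid, left, right, level))
        split(left, mid, level + 1)
        split(mid, right, level + 1)

    split(0, gop_size, 1)
    return order

schedule = hierarchical_b_order(8)
coded_frames = [frame for frame, _, _, _ in schedule]
```

Frames at deeper levels are never used as references by shallower ones, which is one reason a codec may spend fewer bits on them; frame-type adaptive coding learns such an allocation rather than fixing it by hand.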

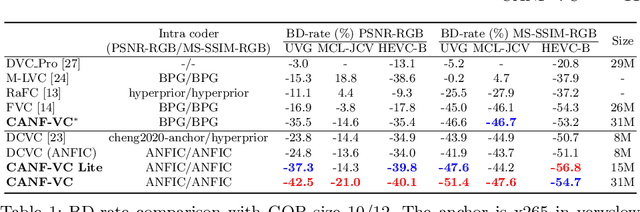

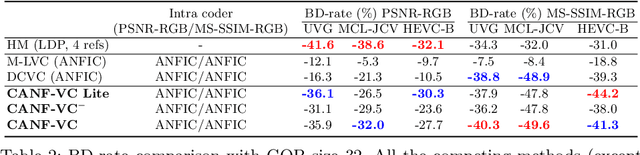

CANF-VC: Conditional Augmented Normalizing Flows for Video Compression

Jul 18, 2022

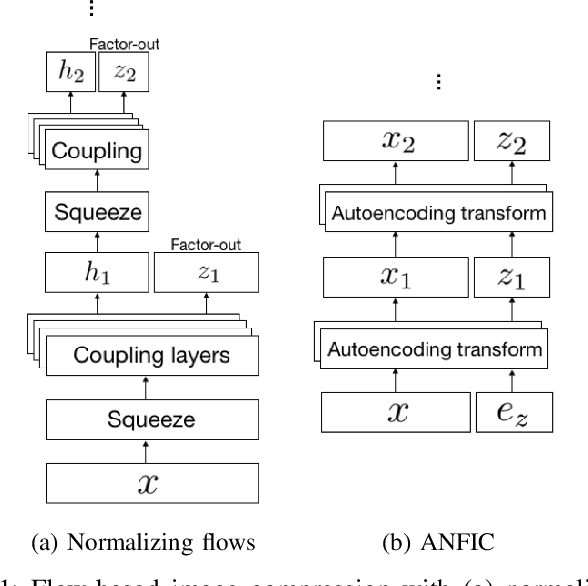

Abstract: This paper presents an end-to-end learning-based video compression system, termed CANF-VC, based on conditional augmented normalizing flows (CANF). Most learned video compression systems adopt the same hybrid-based coding architecture as the traditional codecs. Recent research on conditional coding has shown the sub-optimality of the hybrid-based coding and opens up opportunities for deep generative models to take a key role in creating new coding frameworks. CANF-VC represents a new attempt that leverages the conditional ANF to learn a video generative model for conditional inter-frame coding. We choose ANF because it is a special type of generative model, which includes the variational autoencoder as a special case and is able to achieve better expressiveness. CANF-VC also extends the idea of conditional coding to motion coding, forming a purely conditional coding framework. Extensive experimental results on commonly used datasets confirm the superiority of CANF-VC over the state-of-the-art methods. The source code of CANF-VC is available at https://github.com/NYCU-MAPL/CANF-VC.
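The sub-optimality of residual (hybrid-based) coding relative to conditional coding follows from a standard entropy argument. The notation here is assumed rather than taken from the abstract: $x_t$ is the current frame and $x_c$ its motion-compensated prediction, both treated as discrete random variables.

```latex
H(x_t - x_c) \;\ge\; H(x_t - x_c \mid x_c) \;=\; H(x_t \mid x_c)
```

The inequality holds because conditioning never increases entropy, and the equality holds because subtracting a known $x_c$ is a one-to-one mapping. An ideal conditional coder therefore needs no more bits than an ideal residual coder, and typically fewer.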

Action-Constrained Reinforcement Learning for Frame-Level Bit Allocation in HEVC/H.265 through Frank-Wolfe Policy Optimization

Mar 10, 2022

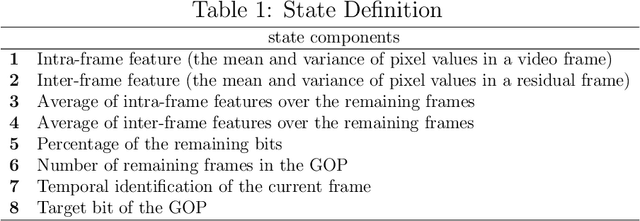

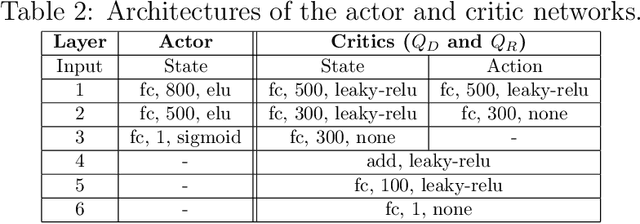

Abstract: This paper presents a reinforcement learning (RL) framework that leverages Frank-Wolfe policy optimization to address frame-level bit allocation for HEVC/H.265. Most previous RL-based approaches adopt the single-critic design, which weights the rewards for distortion minimization and rate regularization by an empirically chosen hyper-parameter. More recently, a dual-critic design was proposed to update the actor network by alternating the rate and distortion critics. However, the convergence of training is not guaranteed. To address this issue, we introduce Neural Frank-Wolfe Policy Optimization (NFWPO) in formulating the frame-level bit allocation as an action-constrained RL problem. In this new framework, the rate critic serves to specify a feasible action set, and the distortion critic updates the actor network towards maximizing the reconstruction quality while conforming to the action constraint. Experimental results show that when trained to optimize the video multi-method assessment fusion (VMAF) metric, our NFWPO-based model outperforms both the single-critic and the dual-critic methods. It also demonstrates comparable rate-distortion performance to the 2-pass average bit rate control of x265.
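The Frank-Wolfe idea behind action-constrained optimization can be sketched on a toy problem. This is not the NFWPO algorithm itself: in the paper the feasible set comes from a rate critic and the gradient from a distortion critic, whereas here both are stand-ins (a fixed probability simplex and a hand-written quadratic objective), and all function names are hypothetical.

```python
import numpy as np

def lmo_simplex(grad):
    """Linear maximization oracle over the probability simplex.

    A linear function over the simplex is maximized at a vertex, namely
    the coordinate with the largest gradient entry.
    """
    s = np.zeros_like(grad)
    s[np.argmax(grad)] = 1.0
    return s

def frank_wolfe_ascent(grad_fn, a0, steps=200):
    """Maximize a concave objective while staying inside the simplex."""
    a = a0.copy()
    for k in range(steps):
        s = lmo_simplex(grad_fn(a))   # best feasible point for the linearized objective
        gamma = 2.0 / (k + 2.0)       # standard diminishing step size
        a = a + gamma * (s - a)       # convex combination, so a stays feasible
    return a

# Toy stand-in for a quality objective: q(a) = -||a - target||^2.
target = np.array([0.5, 0.3, 0.2])
grad_q = lambda a: -2.0 * (a - target)
a_star = frank_wolfe_ascent(grad_q, np.array([1.0, 0.0, 0.0]))
```

Because each iterate is a convex combination of feasible points, the constraint is satisfied at every step without any projection, which is the property that makes Frank-Wolfe attractive for action-constrained problems.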

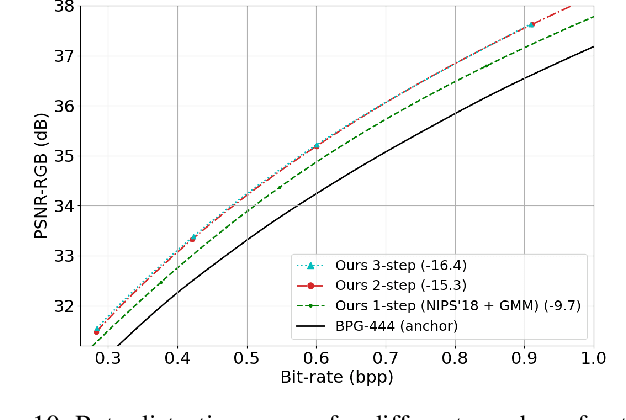

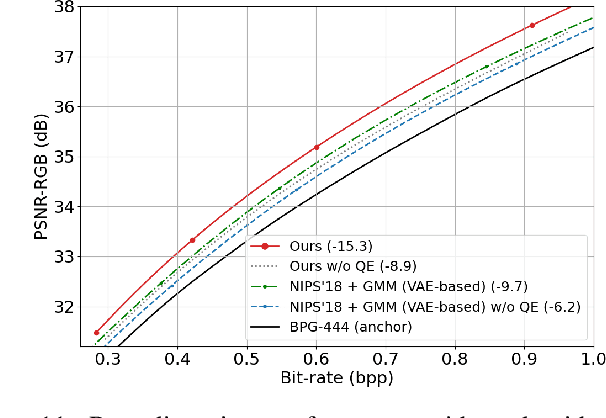

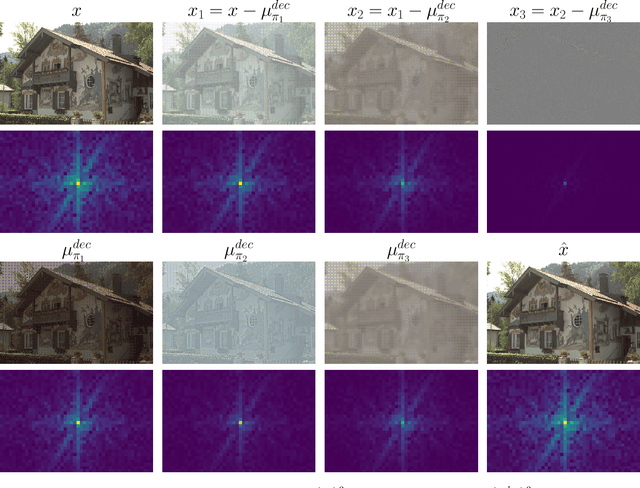

ANFIC: Image Compression Using Augmented Normalizing Flows

Jul 18, 2021

Abstract: This paper introduces an end-to-end learned image compression system, termed ANFIC, based on Augmented Normalizing Flows (ANF). ANF is a new type of flow model, which stacks multiple variational autoencoders (VAE) for greater model expressiveness. The VAE-based image compression has gone mainstream, showing promising compression performance. Our work presents the first attempt to leverage VAE-based compression in a flow-based framework. ANFIC further advances compression efficiency by hierarchically stacking and extending multiple VAEs. The invertibility of ANF, together with our training strategies, enables ANFIC to support a wide range of quality levels without changing the encoding and decoding networks. Extensive experimental results show that in terms of PSNR-RGB, ANFIC performs comparably to or better than the state-of-the-art learned image compression. Moreover, it performs close to VVC intra coding, from low-rate compression up to nearly-lossless compression. In particular, ANFIC achieves state-of-the-art performance when extended with conditional convolution for variable rate compression with a single model.
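The invertibility that the abstract relies on can be illustrated with additive coupling steps that alternate between updating a latent from the image and updating the image from the latent. This is a toy sketch, not ANFIC's architecture: `g_enc` and `g_dec` are arbitrary placeholder functions standing in for learned networks, and the number of steps is an assumption.

```python
import numpy as np

def g_enc(x):
    """Placeholder for a learned analysis transform (hypothetical)."""
    return np.tanh(x)

def g_dec(z):
    """Placeholder for a learned synthesis transform (hypothetical)."""
    return 0.5 * z

def forward(x, z, num_steps=2):
    """Stack of autoencoding steps acting on the augmented pair (x, z)."""
    for _ in range(num_steps):
        z = z + g_enc(x)   # encoding step: update the latent from the image
        x = x - g_dec(z)   # decoding step: remove what the latent explains
    return x, z

def inverse(x, z, num_steps=2):
    """Exact inverse: undo each step in reverse order."""
    for _ in range(num_steps):
        x = x + g_dec(z)
        z = z - g_enc(x)
    return x, z

rng = np.random.default_rng(0)
x0 = rng.normal(size=(4, 4))
z0 = rng.normal(size=(4, 4))
x1, z1 = forward(x0, z0)
x_back, z_back = inverse(x1, z1)
```

Each step changes one variable by a function of the other, so it can be undone exactly no matter how `g_enc` and `g_dec` are defined; the transforms themselves need not be invertible.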

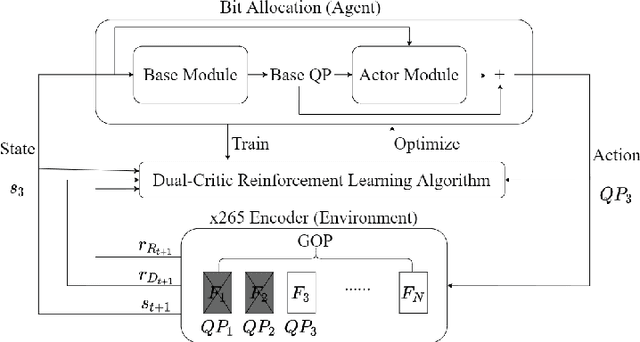

A Dual-Critic Reinforcement Learning Framework for Frame-level Bit Allocation in HEVC/H.265

Apr 05, 2021

Abstract: This paper introduces a dual-critic reinforcement learning (RL) framework to address the problem of frame-level bit allocation in HEVC/H.265. The objective is to minimize the distortion of a group of pictures (GOP) under a rate constraint. Previous RL-based methods tackle such a constrained optimization problem by maximizing a single reward function that often combines a distortion and a rate reward. However, the way these rewards are combined is usually ad hoc and may not generalize well to various coding conditions and video sequences. To overcome this issue, we adapt the deep deterministic policy gradient (DDPG) reinforcement learning algorithm for use with two critics, with one learning to predict the distortion reward and the other the rate reward. In particular, the distortion critic works to update the agent when the rate constraint is satisfied. By contrast, the rate critic makes the rate constraint a priority when the agent goes over the bit budget. Experimental results on commonly used datasets show that our method outperforms the bit allocation scheme in x265 and the single-critic baseline by a significant margin in terms of rate-distortion performance while offering fairly precise rate control.
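The critic-switching rule described in the abstract can be sketched as a simple selection step. The abstract does not specify the exact condition (for example, any tolerance around the budget), so the hard threshold below is an assumption, and `actor_update_direction` is a hypothetical helper that takes precomputed critic gradients.

```python
import numpy as np

def actor_update_direction(bits_used, bit_budget, grad_q_distortion, grad_q_rate):
    """Pick which critic's gradient drives the actor update.

    Assumed rule: follow the distortion critic while the bit budget is
    met, and switch to the rate critic once the budget is exceeded.
    """
    if bits_used <= bit_budget:
        return "distortion", np.asarray(grad_q_distortion, dtype=float)
    return "rate", np.asarray(grad_q_rate, dtype=float)

# Toy gradients of each critic's Q-value with respect to the action.
g_dist = [0.2, -0.1]
g_rate = [-0.4, 0.3]
within = actor_update_direction(950, 1000, g_dist, g_rate)
over = actor_update_direction(1100, 1000, g_dist, g_rate)
```

Keeping the two rewards in separate critics avoids choosing a weight to combine them, which is the ad hoc step the abstract criticizes in single-critic designs.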
