Mu-Jung Chen

Learned Hierarchical B-frame Coding with Adaptive Feature Modulation for YUV 4:2:0 Content

Dec 29, 2022

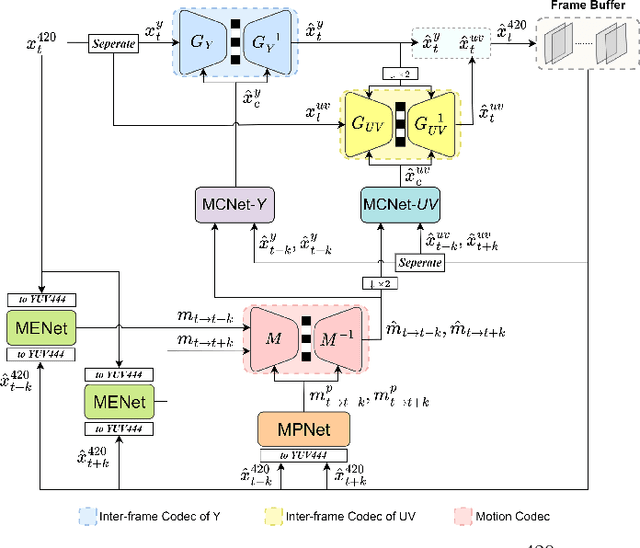

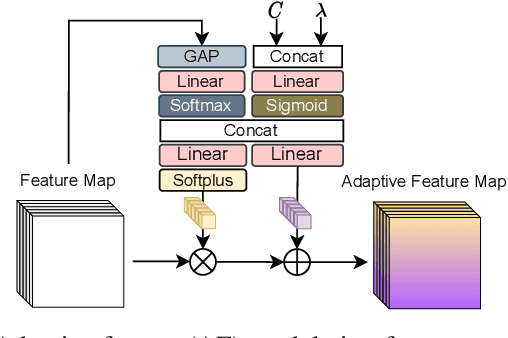

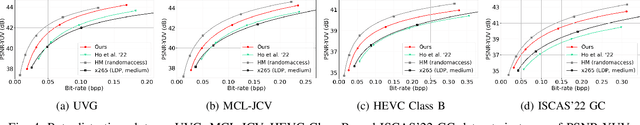

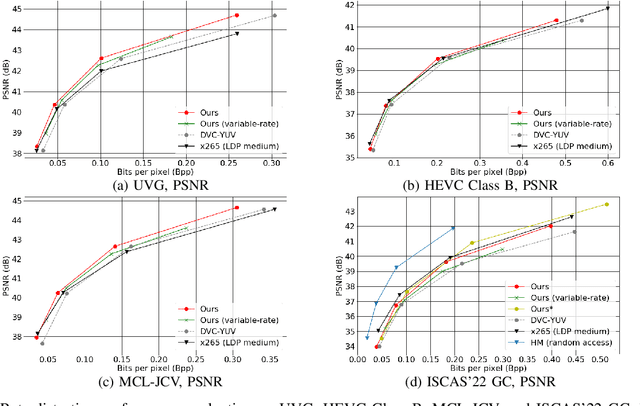

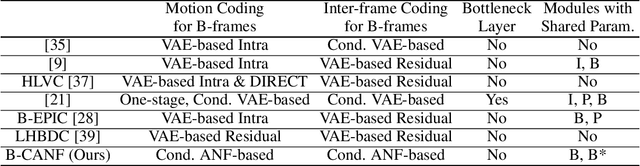

Abstract: This paper introduces a learned hierarchical B-frame coding scheme in response to the Grand Challenge on Neural Network-based Video Coding at ISCAS 2023. We specifically address three issues: (1) B-frame coding, (2) YUV 4:2:0 coding, and (3) content-adaptive variable-rate coding with a single model. Most learned video codecs operate internally in the RGB domain for P-frame coding. B-frame coding for YUV 4:2:0 content is largely under-explored. In addition, while there have been prior works on variable-rate coding with conditional convolution, most of them fail to consider the content information. We build our scheme on conditional augmented normalizing flows (CANF). It features conditional motion and inter-frame codecs for efficient B-frame coding. To cope with YUV 4:2:0 content, two conditional inter-frame codecs are used to process the Y and UV components separately, with the coding of the UV components conditioned additionally on the Y component. Moreover, we introduce adaptive feature modulation in every convolutional layer, taking into account both the content information and the coding levels of B-frames to achieve content-adaptive variable-rate coding. Experimental results show that our model outperforms x265 and the winner of last year's challenge on commonly used datasets in terms of PSNR-YUV.
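The abstract does not spell out how the adaptive feature modulation is computed. As a rough illustration only, the sketch below assumes a channel-wise affine modulation whose scale and shift are predicted from a one-hot B-frame coding level concatenated with a globally pooled content descriptor. The function `modulate`, its parameters `weight` and `bias`, and the choice of three coding levels are all hypothetical and are not taken from the paper.

```python
import numpy as np

def modulate(features, level, weight, bias, num_levels=3):
    """Channel-wise affine modulation of a (C, H, W) feature map.

    Assumed design (not from the paper): the condition vector is a one-hot
    coding level concatenated with the per-channel spatial mean of the
    features; a single linear map predicts a scale and a shift per channel.
    """
    c = features.shape[0]
    one_hot = np.eye(num_levels)[level]        # coding-level condition, (num_levels,)
    content = features.mean(axis=(1, 2))       # content descriptor, (C,)
    cond = np.concatenate([one_hot, content])  # (num_levels + C,)
    params = weight @ cond + bias              # (2C,)
    scale, shift = params[:c], params[c:]
    # Identity when scale == 0 and shift == 0.
    return features * (1.0 + scale)[:, None, None] + shift[:, None, None]
```

With `weight` of shape `(2C, num_levels + C)` and `bias` of shape `(2C,)` both set to zero, the layer leaves the features unchanged, which is a convenient initialisation when such a module is added to an existing network.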

Learned Video Compression for YUV 4:2:0 Content Using Flow-based Conditional Inter-frame Coding

Oct 15, 2022

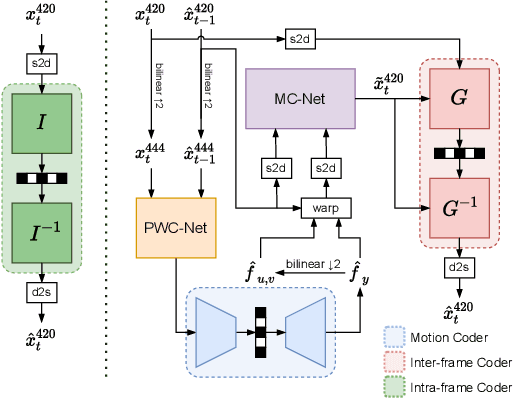

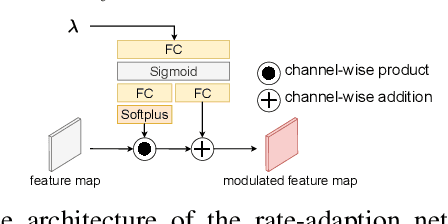

Abstract: This paper proposes a learning-based video compression framework for variable-rate coding on YUV 4:2:0 content. Most existing learning-based video compression models adopt the traditional hybrid-based coding architecture, which involves temporal prediction followed by residual coding. However, recent studies have shown that residual coding is sub-optimal from the information-theoretic perspective. In addition, most existing models are optimized with respect to RGB content. Furthermore, they require separate models for variable-rate coding. To address these issues, this work presents an attempt to incorporate conditional inter-frame coding for YUV 4:2:0 content. We introduce a conditional flow-based inter-frame coder to improve the inter-frame coding efficiency. To adapt our codec to YUV 4:2:0 content, we adopt a simple strategy of using space-to-depth and depth-to-space conversions. Lastly, we employ a rate-adaption net to achieve variable-rate coding without training multiple models. Experimental results show that our model performs better than x265 on the UVG and MCL-JCV datasets in terms of PSNR-YUV. However, on the more challenging datasets from ISCAS'22 GC, there is still ample room for improvement. This shortfall is due to the lack of inter-frame coding capability at a large GOP size and can be mitigated by increasing the model capacity and applying an error propagation-aware training strategy.
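The space-to-depth strategy mentioned above can be sketched as follows. This is a minimal NumPy illustration under an assumed layout: the full-resolution Y plane is rearranged into four half-resolution channels and stacked with the U and V planes to form a six-channel tensor. The helper names `pack_yuv420` and `unpack_yuv420` and the channel ordering are assumptions for illustration; the abstract does not state the exact arrangement used.

```python
import numpy as np

def space_to_depth(plane, block=2):
    """Rearrange an (H, W) plane into (block*block, H/block, W/block)."""
    h, w = plane.shape
    assert h % block == 0 and w % block == 0
    x = plane.reshape(h // block, block, w // block, block)
    # Move the two intra-block offsets to the front, then merge them.
    return x.transpose(1, 3, 0, 2).reshape(block * block, h // block, w // block)

def depth_to_space(channels, block=2):
    """Inverse of space_to_depth: (block*block, h, w) -> (h*block, w*block)."""
    c, h, w = channels.shape
    assert c == block * block
    x = channels.reshape(block, block, h, w)
    return x.transpose(2, 0, 3, 1).reshape(h * block, w * block)

def pack_yuv420(y, u, v):
    """Stack Y (H, W) with U and V (H/2, W/2) into a (6, H/2, W/2) tensor."""
    return np.concatenate([space_to_depth(y), u[None], v[None]], axis=0)

def unpack_yuv420(packed):
    """Recover the Y, U, V planes from the packed six-channel tensor."""
    return depth_to_space(packed[:4]), packed[4], packed[5]
```

Packing this way lets a codec designed for same-resolution multi-channel input handle 4:2:0 content without resampling the chroma planes, at the cost of the network seeing luma at half spatial resolution.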

B-CANF: Adaptive B-frame Coding with Conditional Augmented Normalizing Flows

Sep 05, 2022

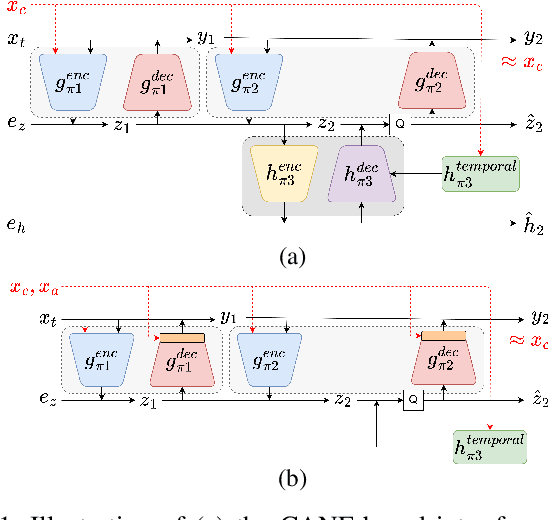

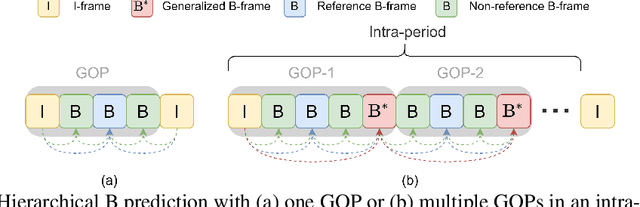

Abstract: This work introduces a B-frame coding framework, termed B-CANF, that exploits conditional augmented normalizing flows for B-frame coding. Learned B-frame coding is less explored and more challenging. Motivated by recent advances in conditional P-frame coding, B-CANF is the first attempt at applying flow-based models to both conditional motion and inter-frame coding. B-CANF features frame-type adaptive coding that learns better bit allocation for hierarchical B-frame coding. B-CANF also introduces a special type of B-frame, called B*-frame, to mimic P-frame coding. On commonly used datasets, B-CANF achieves state-of-the-art compression performance, showing comparable BD-rate results (in terms of PSNR-RGB) to HM-16.23 under the random access configuration.
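For readers unfamiliar with hierarchical B-frame coding, the sketch below enumerates a conventional dyadic coding order between two already-coded reference frames. It illustrates the general technique only; the GOP size, the depth-first traversal, and the function name `hierarchical_b_order` are assumptions and are not taken from the B-CANF abstract.

```python
def hierarchical_b_order(left, right, level=1):
    """List (frame, past_ref, future_ref, level) tuples in coding order.

    Frames `left` and `right` are assumed to be already coded. The middle
    frame is coded first as a B-frame referencing both, then each half is
    processed recursively at the next (deeper) temporal level.
    """
    if right - left < 2:
        return []  # no frame lies strictly between the two references
    mid = (left + right) // 2
    return (
        [(mid, left, right, level)]
        + hierarchical_b_order(left, mid, level + 1)
        + hierarchical_b_order(mid, right, level + 1)
    )

if __name__ == "__main__":
    for frame, past, future, lvl in hierarchical_b_order(0, 8):
        print(f"B-frame {frame}: refs ({past}, {future}), level {lvl}")
```

Frames at deeper levels are referenced by fewer other frames, which is why level-dependent bit allocation of the kind described in the abstract is usually worthwhile.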
