Yufeng Zhou

UI-Mem: Self-Evolving Experience Memory for Online Reinforcement Learning in Mobile GUI Agents

Feb 05, 2026

Abstract:Online Reinforcement Learning (RL) offers a promising paradigm for enhancing GUI agents through direct environment interaction. However, its effectiveness is severely hindered by inefficient credit assignment in long-horizon tasks and repetitive errors across tasks due to the lack of experience transfer. To address these challenges, we propose UI-Mem, a novel framework that enhances GUI online RL with a Hierarchical Experience Memory. Unlike traditional replay buffers, our memory accumulates structured knowledge, including high-level workflows, subtask skills, and failure patterns. These experiences are stored as parameterized templates that enable cross-task and cross-application transfer. To effectively integrate memory guidance into online RL, we introduce Stratified Group Sampling, which injects varying levels of guidance across trajectories within each rollout group to maintain outcome diversity, driving the unguided policy toward internalizing guided behaviors. Furthermore, a Self-Evolving Loop continuously abstracts novel strategies and errors to keep the memory aligned with the agent's evolving policy. Experiments on online GUI benchmarks demonstrate that UI-Mem significantly outperforms traditional RL baselines and static reuse strategies, with strong generalization to unseen applications. Project page: https://ui-mem.github.io

Seed1.5-VL Technical Report

May 11, 2025

Abstract:We present Seed1.5-VL, a vision-language foundation model designed to advance general-purpose multimodal understanding and reasoning. Seed1.5-VL is composed of a 532M-parameter vision encoder and a Mixture-of-Experts (MoE) LLM of 20B active parameters. Despite its relatively compact architecture, it delivers strong performance across a wide spectrum of public VLM benchmarks and internal evaluation suites, achieving state-of-the-art performance on 38 out of 60 public benchmarks. Moreover, in agent-centric tasks such as GUI control and gameplay, Seed1.5-VL outperforms leading multimodal systems, including OpenAI CUA and Claude 3.7. Beyond visual and video understanding, it also demonstrates strong reasoning abilities, making it particularly effective for multimodal reasoning challenges such as visual puzzles. We believe these capabilities will empower broader applications across diverse tasks. In this report, we mainly provide a comprehensive review of our experiences in building Seed1.5-VL across model design, data construction, and training at various stages, hoping that this report can inspire further research. Seed1.5-VL is now accessible at https://www.volcengine.com/ (Volcano Engine Model ID: doubao-1-5-thinking-vision-pro-250428)

H-SGANet: Hybrid Sparse Graph Attention Network for Deformable Medical Image Registration

Aug 29, 2024

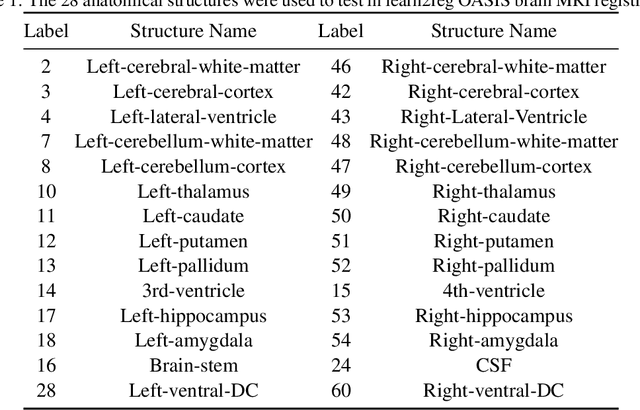

Abstract:The integration of Convolutional Neural Network (ConvNet) and Transformer has emerged as a strong candidate for image registration, leveraging the strengths of both models and a large parameter space. However, this hybrid model, treating brain MRI volumes as grid or sequence structures, faces challenges in accurately representing anatomical connectivity, diverse brain regions, and vital connections contributing to the brain's internal architecture. Concerns also arise regarding the computational expense and GPU memory usage associated with this model. To tackle these issues, a lightweight hybrid sparse graph attention network (H-SGANet) has been developed. This network incorporates a central mechanism, Sparse Graph Attention (SGA), based on a Vision Graph Neural Network (ViG) with predetermined anatomical connections. The SGA module expands the model's receptive field and seamlessly integrates into the network. To further amplify the advantages of the hybrid network, the Separable Self-Attention (SSA) is employed as an enhanced token mixer, integrated with depth-wise convolution to constitute SSAFormer. This strategic integration is designed to more effectively extract long-range dependencies. As a hybrid ConvNet-ViG-Transformer model, H-SGANet offers threefold benefits for volumetric medical image registration. It optimizes fixed and moving images concurrently through a hybrid feature fusion layer and an end-to-end learning framework. Compared to VoxelMorph, a model with a similar parameter count, H-SGANet demonstrates significant performance enhancements of 3.5% and 1.5% in Dice score on the OASIS dataset and LPBA40 dataset, respectively.
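The Dice score cited above measures the overlap between an anatomical label in the warped moving image and the same label in the fixed image, defined as 2|A∩B| / (|A| + |B|). The following is a minimal sketch of that metric only, not the authors' evaluation code; the function name `dice_score` and the toy label sequences are illustrative assumptions, and real volumes would be flattened 3D segmentation maps.

```python
def dice_score(seg_a, seg_b, label):
    """Dice overlap 2|A∩B| / (|A| + |B|) for one label.

    seg_a and seg_b are flat sequences of integer labels of equal length
    (hypothetical stand-ins for flattened segmentation volumes).
    """
    if len(seg_a) != len(seg_b):
        raise ValueError("segmentations must have the same number of voxels")
    in_a = [v == label for v in seg_a]
    in_b = [v == label for v in seg_b]
    intersection = sum(1 for x, y in zip(in_a, in_b) if x and y)
    total = sum(in_a) + sum(in_b)
    # Convention assumed here: a label absent from both maps counts as perfect overlap.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy example: warped moving segmentation vs. fixed segmentation.
warped = [0, 1, 1, 2, 2, 2]
fixed = [0, 1, 2, 2, 2, 2]
per_label = {label: dice_score(warped, fixed, label) for label in (1, 2)}
mean_dice = sum(per_label.values()) / len(per_label)
```

Registration papers typically report the mean of this score over a set of anatomical structures, which is what `mean_dice` illustrates for the two toy labels.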

Movable Antenna Empowered Downlink NOMA Systems: Power Allocation and Antenna Position Optimization

May 29, 2024

Abstract:This paper investigates a novel communication paradigm employing movable antennas (MAs) within a multiple-input single-output (MISO) non-orthogonal multiple access (NOMA) downlink framework, where users are equipped with MAs. Initially, leveraging the far-field response, we delineate the channel characteristics concerning both the power allocation coefficient and positions of MAs. Subsequently, we endeavor to maximize the channel capacity by jointly optimizing power allocation and antenna positions. To tackle the resultant non-convex problem, we propose an alternating optimization (AO) scheme underpinned by successive convex approximation (SCA) to converge towards a stationary point. Through numerical simulations, our findings substantiate the superiority of the MA-assisted NOMA system over both orthogonal multiple access (OMA) and conventional NOMA configurations in terms of average sum rate and outage probability.
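For readers unfamiliar with the sum-rate comparison mentioned above, the sketch below computes textbook two-user downlink rates for NOMA (superposition coding with successive interference cancellation at the strong user) and for OMA with an equal time split. It is a simplified single-antenna illustration under assumed channel gains, not the paper's MISO movable-antenna model or its AO/SCA optimization; all function names and numbers are hypothetical.

```python
import math


def noma_sum_rate(p_total, gain_strong, gain_weak, frac_weak, noise=1.0):
    """Two-user downlink NOMA sum rate in bit/s/Hz (textbook model).

    frac_weak is the fraction of transmit power given to the weak user.
    The strong user removes the weak user's signal via SIC; the weak user
    treats the strong user's signal as interference.
    """
    p_weak = frac_weak * p_total
    p_strong = (1.0 - frac_weak) * p_total
    rate_strong = math.log2(1.0 + p_strong * gain_strong / noise)
    rate_weak = math.log2(1.0 + p_weak * gain_weak / (p_strong * gain_weak + noise))
    return rate_strong + rate_weak


def oma_sum_rate(p_total, gain_strong, gain_weak, noise=1.0):
    """OMA baseline: each user gets half of the time at full power."""
    return 0.5 * (math.log2(1.0 + p_total * gain_strong / noise)
                  + math.log2(1.0 + p_total * gain_weak / noise))


# Assumed example values: unit power and noise, a 20 dB gap between the users.
noma = noma_sum_rate(1.0, gain_strong=100.0, gain_weak=1.0, frac_weak=0.8)
oma = oma_sum_rate(1.0, gain_strong=100.0, gain_weak=1.0)
```

In this toy setting the NOMA sum rate exceeds the OMA one. The paper's contribution is to additionally optimize the antenna positions, which changes the channel gains that are fixed inputs here.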

Power Efficient Video Super-Resolution on Mobile NPUs with Deep Learning, Mobile AI & AIM 2022 challenge: Report

Nov 07, 2022

Abstract:Video super-resolution is one of the most popular tasks on mobile devices, being widely used for an automatic improvement of low-bitrate and low-resolution video streams. While numerous solutions have been proposed for this problem, they are usually quite computationally demanding, demonstrating low FPS rates and power efficiency on mobile devices. In this Mobile AI challenge, we address this problem and ask the participants to design an end-to-end real-time video super-resolution solution for mobile NPUs optimized for low energy consumption. The participants were provided with the REDS training dataset containing video sequences for a 4X video upscaling task. The runtime and power efficiency of all models were evaluated on the powerful MediaTek Dimensity 9000 platform with a dedicated AI processing unit capable of accelerating floating-point and quantized neural networks. All proposed solutions are fully compatible with the above NPU, demonstrating an up to 500 FPS rate and 0.2 [Watt / 30 FPS] power consumption. A detailed description of all models developed in the challenge is provided in this paper.
