H-SGANet: Hybrid Sparse Graph Attention Network for Deformable Medical Image Registration

Aug 29, 2024

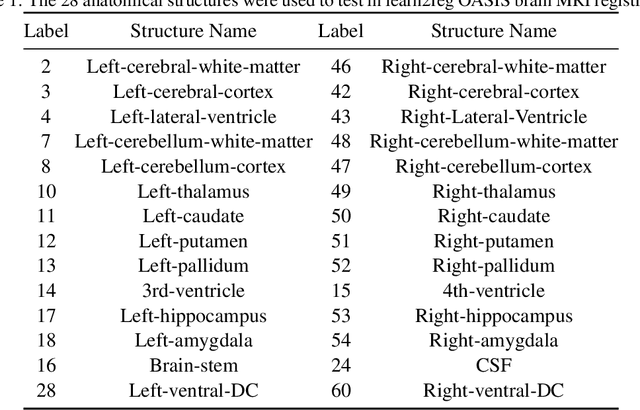

Hybrids of Convolutional Neural Networks (ConvNets) and Transformers have emerged as strong candidates for image registration, combining the strengths of both models with a large parameter space. However, such hybrid models treat brain MRI volumes as grid or sequence structures, which makes it difficult to accurately represent anatomical connectivity, diverse brain regions, and the vital connections that shape the brain's internal architecture. Their computational expense and GPU memory usage are also concerns. To tackle these issues, a lightweight hybrid sparse graph attention network (H-SGANet) has been developed. Its central mechanism, Sparse Graph Attention (SGA), is based on a Vision Graph Neural Network (ViG) with predetermined anatomical connections. The SGA module expands the model's receptive field and integrates seamlessly into the network. To further amplify the advantages of the hybrid network, Separable Self-Attention (SSA) is employed as an enhanced token mixer and combined with depth-wise convolution to form SSAFormer, a design intended to extract long-range dependencies more effectively. As a hybrid ConvNet-ViG-Transformer model, H-SGANet offers threefold benefits for volumetric medical image registration. It optimizes fixed and moving images concurrently through a hybrid feature fusion layer and an end-to-end learning framework. Compared to VoxelMorph, a model with a similar parameter count, H-SGANet significantly improves the Dice score, by 3.5% on the OASIS dataset and 1.5% on the LPBA40 dataset.
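For readers unfamiliar with the metric behind the reported gains, the Dice score measures the overlap between two segmentations as 2|A ∩ B| / (|A| + |B|). The following is a minimal NumPy sketch, not the authors' evaluation code: the function names `dice_score` and `mean_dice` are hypothetical, and the choice to average over a list of anatomical labels is an assumption, since the abstract does not state the evaluation protocol.

```python
import numpy as np


def dice_score(seg_a, seg_b, label):
    """Dice overlap for one anatomical label between two label maps."""
    a = seg_a == label
    b = seg_b == label
    denom = a.sum() + b.sum()
    if denom == 0:
        # Assumption: a label absent from both maps counts as perfect overlap.
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / denom


def mean_dice(seg_a, seg_b, labels):
    """Average Dice over the given labels (hypothetical averaging scheme)."""
    return float(np.mean([dice_score(seg_a, seg_b, l) for l in labels]))
```

In a registration setting, `seg_a` would typically be the segmentation of the fixed image and `seg_b` the moving image's segmentation after warping with the predicted deformation field; higher values indicate better anatomical alignment.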
