Yuchen Zhao

A Delay-free Control Method Based On Function Approximation And Broadcast For Robotic Surface And Multiactuator Systems

Nov 30, 2024

Abstract:Robotic surfaces consisting of many actuators can change shape to perform tasks, such as facilitating human-machine interactions and transporting objects. Increasing the number of actuators can enhance the robot's capacity, but controlling them requires communication bandwidth to increase equally in order to avoid time delays. We propose a novel control method that has constant time delays no matter how many actuators are in the robot. Having a distributed nature, the method first approximates target shapes, then broadcasts the approximation coefficients to the actuators, and relies on the actuators themselves to compute their inputs. We build a robotic pin array and measure the time delay as a function of the number of actuators to confirm the system size-independent scaling behavior. The shape-changing ability is achieved based on function approximation algorithms, i.e. discrete cosine transform or matching pursuit. We perform experiments to approximate target shapes and make a quantitative comparison with those obtained from a standard sequential control method. A good agreement between the experiments and theoretical predictions is achieved, and our method is more efficient in the sense that it requires fewer control messages to generate shapes with the same accuracy. Our method is also capable of dynamic tasks such as object manipulation.
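The approximate-then-broadcast idea in this abstract can be illustrated with a minimal sketch. It assumes a one-dimensional pin array and an orthonormal DCT-II; the function names (`dct_coefficients`, `top_k_message`, `actuator_height`) and the choice to keep the largest-magnitude coefficients are illustrative assumptions, not the paper's implementation.

```python
import math

def dct_coefficients(shape):
    """Orthonormal DCT-II of a 1D list of target heights (computed centrally)."""
    n = len(shape)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        total = sum(shape[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        coeffs.append(scale * total)
    return coeffs

def top_k_message(coeffs, k):
    """Keep the k largest-magnitude coefficients as (index, value) pairs.

    This list is the single message that would be broadcast to every actuator.
    """
    ranked = sorted(range(len(coeffs)), key=lambda j: abs(coeffs[j]), reverse=True)
    return [(j, coeffs[j]) for j in sorted(ranked[:k])]

def actuator_height(position, n, message):
    """Local computation on one actuator: evaluate the inverse DCT at its own position."""
    height = 0.0
    for k, c in message:
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        height += scale * c * math.cos(math.pi * (position + 0.5) * k / n)
    return height

def rms_error(a, b):
    """Root-mean-square difference between two equally long height lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

# Hypothetical target: a half-sine bump across 16 pins.
n = 16
target = [math.sin(math.pi * i / (n - 1)) for i in range(n)]
coeffs = dct_coefficients(target)

full = [actuator_height(i, n, top_k_message(coeffs, n)) for i in range(n)]
approx = [actuator_height(i, n, top_k_message(coeffs, 4)) for i in range(n)]
print("RMS error with all coefficients:", rms_error(target, full))
print("RMS error with 4 coefficients:  ", rms_error(target, approx))
```

In this sketch the message length depends only on the number of retained coefficients, not on the number of actuators, which is the property the abstract attributes to the broadcast scheme.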

Towards Low-Energy Adaptive Personalization for Resource-Constrained Devices

Mar 29, 2024

Abstract:The personalization of machine learning (ML) models to address data drift is a significant challenge in the context of Internet of Things (IoT) applications. Presently, most approaches focus on fine-tuning either the full base model or its last few layers to adapt to new data, while often neglecting energy costs. However, various types of data drift exist, and fine-tuning the full base model or the last few layers may not result in optimal performance in certain scenarios. We propose Target Block Fine-Tuning (TBFT), a low-energy adaptive personalization framework designed for resource-constrained devices. We categorize data drift and personalization into three types: input-level, feature-level, and output-level. For each type, we fine-tune different blocks of the model to achieve optimal performance with reduced energy costs. Specifically, input-, feature-, and output-level drift correspond to fine-tuning the front, middle, and rear blocks of the model, respectively. We evaluate TBFT on a ResNet model, three datasets, three different training sizes, and a Raspberry Pi. Compared with the $Block Avg$, where each block is fine-tuned individually and their performance improvements are averaged, TBFT exhibits an improvement in model accuracy by an average of 15.30% whilst saving 41.57% energy consumption on average compared with full fine-tuning.

MicroT: Low-Energy and Adaptive Models for MCUs

Mar 12, 2024

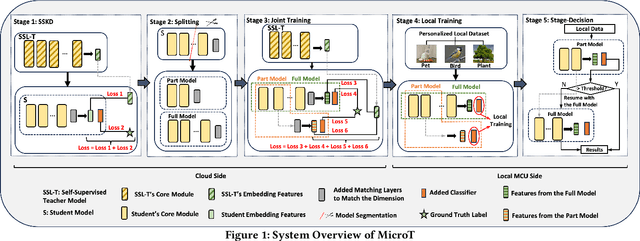

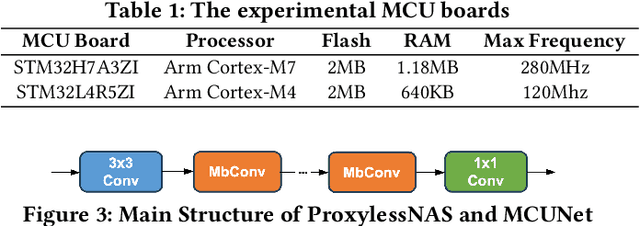

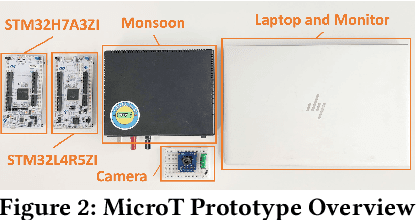

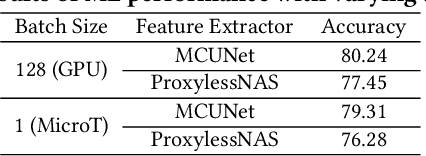

Abstract:We propose MicroT, a low-energy, multi-task adaptive model framework for resource-constrained MCUs. We divide the original model into a feature extractor and a classifier. The feature extractor is obtained through self-supervised knowledge distillation and further optimized into part and full models through model splitting and joint training. These models are then deployed on MCUs, with classifiers added and trained on local tasks, ultimately performing stage-decision for joint inference. In this process, the part model initially processes the sample, and if the confidence score falls below the set threshold, the full model will resume and continue the inference. We evaluate MicroT on two models, three datasets, and two MCU boards. Our experimental evaluation shows that MicroT effectively improves model performance and reduces energy consumption when dealing with multiple local tasks. Compared to the unoptimized feature extractor, MicroT can improve accuracy by up to 9.87%. On MCUs, compared to the standard full model inference, MicroT can save up to about 29.13% in energy consumption. MicroT also allows users to adaptively adjust the stage-decision ratio as needed, better balancing model performance and energy consumption. Under the standard stage-decision ratio configuration, MicroT can increase accuracy by 5.91% and save about 14.47% of energy consumption.
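The stage-decision step described above can be sketched as follows. This is a minimal illustration under stated assumptions: the four callables (`part_extractor`, `part_classifier`, `rest_extractor`, `full_classifier`) are hypothetical toy stand-ins for the deployed models, and the threshold value is arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy stand-ins for the deployed models (not MicroT's actual networks).
def part_extractor(sample):
    """Early layers: produce intermediate features from the raw sample."""
    return [sum(sample)]

def part_classifier(features):
    """Local classifier attached to the part model; returns class probabilities."""
    p = sigmoid(features[0])
    return [1.0 - p, p]

def rest_extractor(features):
    """Remaining layers of the full model, resuming from the part model's features."""
    return [4.0 * features[0]]

def full_classifier(features):
    """Local classifier attached to the full model."""
    p = sigmoid(features[0])
    return [1.0 - p, p]

def stage_decision_infer(sample, threshold):
    """Return (label, stage): accept the part model's answer if it is confident enough."""
    features = part_extractor(sample)
    probs = part_classifier(features)
    label = max(range(len(probs)), key=probs.__getitem__)
    if probs[label] >= threshold:
        return label, "part"
    # Confidence below threshold: continue inference with the rest of the full model.
    probs = full_classifier(rest_extractor(features))
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, "full"

print(stage_decision_infer([2.0, 1.0], threshold=0.8))   # confident early exit
print(stage_decision_infer([0.1, 0.1], threshold=0.8))   # falls through to the full model
```

Raising the threshold in this sketch sends more samples to the full model, which corresponds to the adjustable trade-off between accuracy and energy that the abstract mentions.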

Effective Abnormal Activity Detection on Multivariate Time Series Healthcare Data

Sep 11, 2023

Abstract:Multivariate time series (MTS) data collected from multiple sensors provide the potential for accurate abnormal activity detection in smart healthcare scenarios. However, anomalies exhibit diverse patterns and become unnoticeable in MTS data. Consequently, achieving accurate anomaly detection is challenging since we have to capture both temporal dependencies of time series and inter-relationships among variables. To address this problem, we propose a Residual-based Anomaly Detection approach, Rs-AD, for effective representation learning and abnormal activity detection. We evaluate our scheme on a real-world gait dataset and the experimental results demonstrate an F1 score of 0.839.

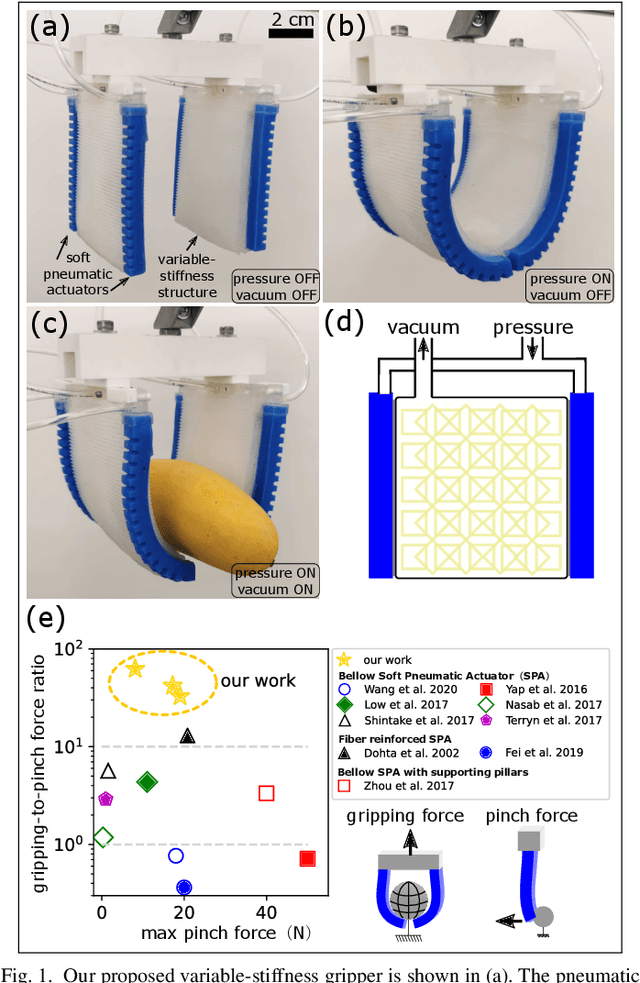

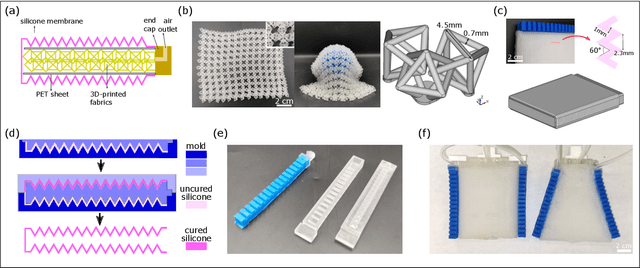

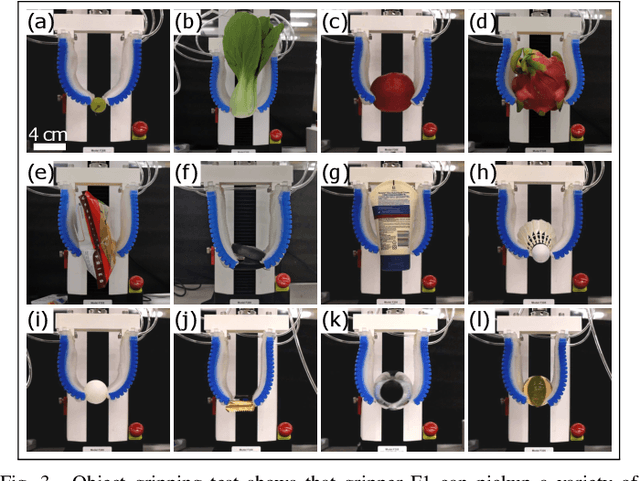

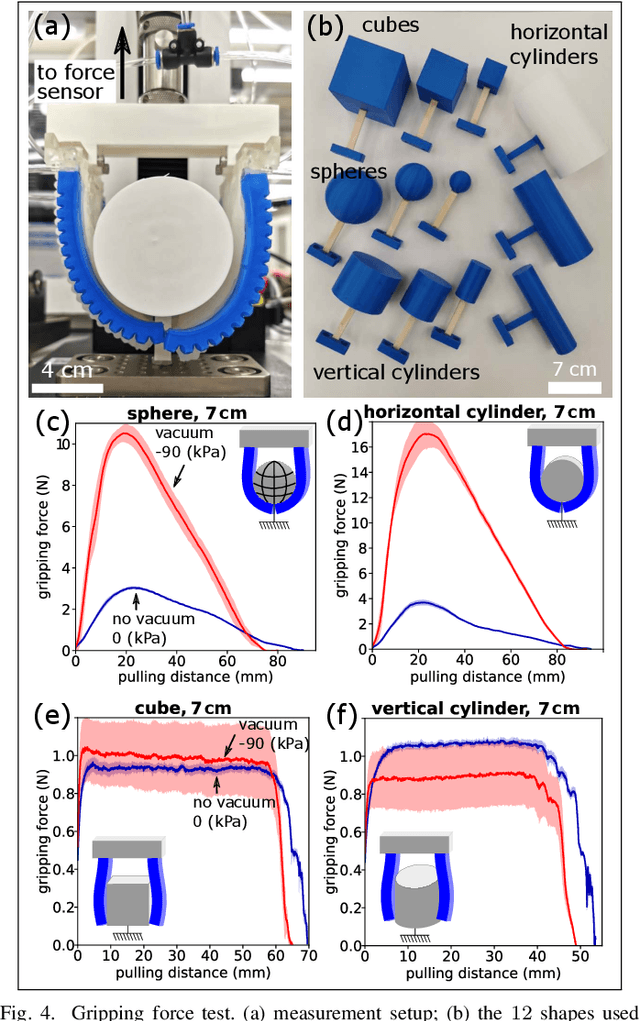

A Palm-Shape Variable-Stiffness Gripper based on 3D-Printed Fabric Jamming

Apr 12, 2023

Abstract:Soft grippers have excellent adaptability for a variety of objects and tasks. Jamming-based variable stiffness materials can further increase soft grippers' gripping force and capacity. Previous universal grippers enabled by granular jamming have shown great capability of handling objects with various shapes and weights. However, they require a large pushing force on the object during gripping, which is not suitable for very soft or free-hanging objects. In this paper, we create a novel palm-shape anthropomorphic variable-stiffness gripper enabled by jamming of 3D printed fabrics. This gripper is conformable and gentle to objects with different shapes, requires little pushing force, and increases gripping strength only when necessary. We present the design, fabrication and performance of this gripper and test its conformability and gripping capacity. Our design utilizes soft pneumatic actuators to drive two wide palms to enclose objects, thanks to the excellent conformability of the structured fabrics. While the pinch force is low, the palm can significantly increase stiffness to lift heavy objects with a maximum gripping force of $17\,$N and grip-to-pinch force ratio of $42$. We also explore different variable-stiffness materials in the gripper, including sheets for layer jamming, to compare their performances. We conduct gripping tests on standard objects and daily items to show the great capacity of our gripper design.

Measuring a Soft Resistive Strain Sensor Array by Solving the Resistor Network Inverse Problem

Apr 12, 2023

Abstract:Soft robotics is applicable to a variety of domains due to the adaptability offered by the soft and compliant materials. To develop future intelligent soft robots, soft sensors that can capture deformation with nearly infinite degree-of-freedom are necessary. Soft sensor networks can address this problem; however, measuring all sensor values throughout the body requires excessive wiring and complex fabrication that may hinder robot performance. We circumvent these challenges by developing a non-invasive measurement technique, which is based on an algorithm that solves the inverse problem of a resistor network, and implement this algorithm on a soft resistive strain sensor network. Our algorithm works by iteratively computing the resistor values based on the applied boundary voltage and current responses, and we analyze the reconstruction error of the algorithm as a function of network size and measurement error. We further develop an electronics setup to implement our algorithm on a stretchable resistive strain sensor network made of soft conductive silicone, and show the response of the measured network to different deformation modes. Our work opens a new path to address the challenge of measuring many sensor values in soft sensors, and could be applied to soft robotic sensor systems.

Information Theory Inspired Pattern Analysis for Time-series Data

Feb 22, 2023

Abstract:Current methods for pattern analysis in time series mainly rely on statistical features or probabilistic learning and inference methods to identify patterns and trends in the data. Such methods do not generalize well when applied to multivariate, multi-source, state-varying, and noisy time-series data. To address these issues, we propose a highly generalizable method that uses information theory-based features to identify and learn from patterns in multivariate time-series data. To demonstrate the proposed approach, we analyze pattern changes in human activity data. For applications with stochastic state transitions, features are developed based on Shannon's entropy of Markov chains, entropy rates of Markov chains, entropy production of Markov chains, and von Neumann entropy of Markov chains. For applications where state modeling is not applicable, we utilize five entropy variants, including approximate entropy, increment entropy, dispersion entropy, phase entropy, and slope entropy. The results show the proposed information theory-based features improve the recall rate, F1 score, and accuracy on average by up to 23.01% compared with the baseline models, while using a simpler model structure with an average reduction of 18.75 times in the number of model parameters.
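One of the Markov-chain features named above, the entropy rate, can be sketched in a few lines. This is a minimal illustration rather than the paper's feature pipeline: the state labels, the uniform fallback for states with no observed outgoing transitions, and the use of power iteration for the stationary distribution are all assumptions made here.

```python
import math
from collections import Counter

def transition_matrix(sequence, states):
    """Estimate row-stochastic transition probabilities from an observed state sequence."""
    counts = Counter(zip(sequence, sequence[1:]))
    matrix = []
    for a in states:
        row_total = sum(counts[(a, b)] for b in states)
        if row_total == 0:
            # Assumption: a state with no observed outgoing transitions gets a uniform row.
            matrix.append([1.0 / len(states)] * len(states))
        else:
            matrix.append([counts[(a, b)] / row_total for b in states])
    return matrix

def stationary_distribution(matrix, iterations=1000):
    """Approximate the stationary distribution by power iteration from a uniform start.

    Note: this may not converge for periodic chains in general.
    """
    n = len(matrix)
    pi = [1.0 / n] * n
    for _ in range(iterations):
        pi = [sum(pi[i] * matrix[i][j] for i in range(n)) for j in range(n)]
    return pi

def entropy_rate(matrix):
    """Entropy rate in bits per step: -sum_i pi_i sum_j P_ij log2 P_ij."""
    pi = stationary_distribution(matrix)
    return -sum(
        pi[i] * p * math.log2(p)
        for i, row in enumerate(matrix)
        for p in row
        if p > 0
    )

# Hypothetical activity sequence over three rooms.
states = ["kitchen", "lounge", "bedroom"]
sequence = ["kitchen", "lounge", "kitchen", "bedroom", "lounge", "kitchen", "lounge", "bedroom"]
P = transition_matrix(sequence, states)
print("Estimated entropy rate (bits/step):", entropy_rate(P))
```

A fully predictable routine gives an entropy rate of 0, while uniformly random transitions between two states give 1 bit per step, so the value can serve as a compact feature describing how regular a pattern is.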

Using Entropy Measures for Monitoring the Evolution of Activity Patterns

Oct 05, 2022

Abstract:In this work, we apply information theory inspired methods to quantify changes in daily activity patterns. We use in-home movement monitoring data and show how they can help indicate the occurrence of healthcare-related events. Three different types of entropy measures, namely Shannon's entropy, entropy rates for Markov chains, and entropy production rate, have been utilised. The measures are evaluated on a large-scale in-home monitoring dataset that has been collected within our dementia care clinical study. The study uses Internet of Things (IoT) enabled solutions for continuous monitoring of in-home activity, sleep, and physiology to develop care and early intervention solutions to support people living with dementia (PLWD) in their own homes. Our main goal is to show the applicability of the entropy measures to time-series activity data analysis and to use the extracted measures as new engineered features that can be fed into inference and analysis models. The results of our experiments show that in most cases the combination of these measures can indicate the occurrence of healthcare-related events. We also find that different participants with the same events may show different values for a single entropy measure, so using a combination of these measures in an inference model will be more effective than using any single measure.

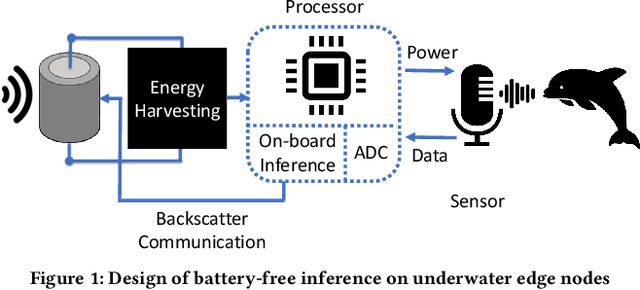

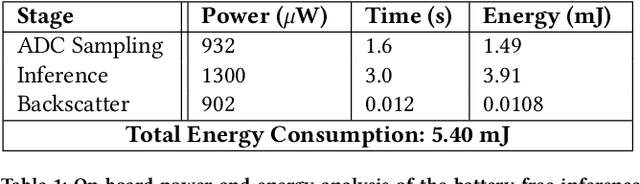

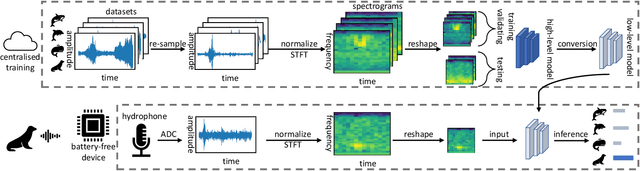

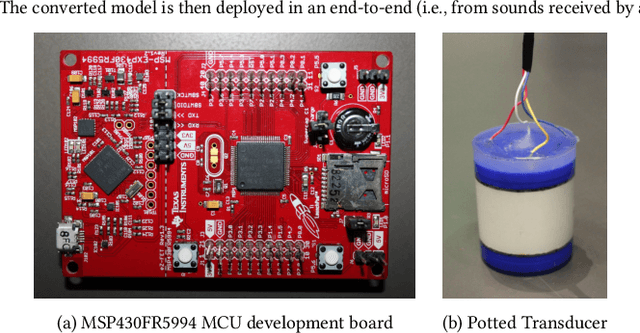

Towards Battery-Free Machine Learning and Inference in Underwater Environments

Feb 16, 2022

Abstract:This paper is motivated by a simple question: Can we design and build battery-free devices capable of machine learning and inference in underwater environments? An affirmative answer to this question would have significant implications for a new generation of underwater sensing and monitoring applications for environmental monitoring, scientific exploration, and climate/weather prediction. To answer this question, we explore the feasibility of bridging advances from the past decade in two fields: battery-free networking and low-power machine learning. Our exploration demonstrates that it is indeed possible to enable battery-free inference in underwater environments. We designed a device that can harvest energy from underwater sound, power up an ultra-low-power microcontroller and on-board sensor, perform local inference on sensed measurements using a lightweight Deep Neural Network, and communicate the inference result via backscatter to a receiver. We tested our prototype in an emulated marine bioacoustics application, demonstrating the potential to recognize underwater animal sounds without batteries. Through this exploration, we highlight the challenges and opportunities for making underwater battery-free inference and machine learning ubiquitous.

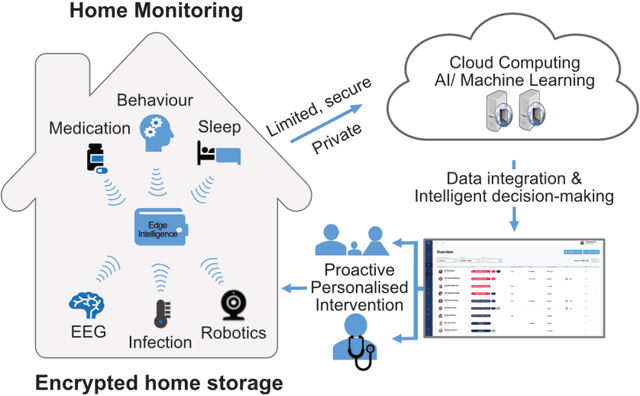

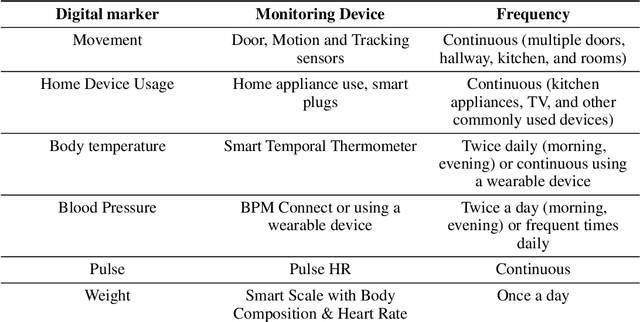

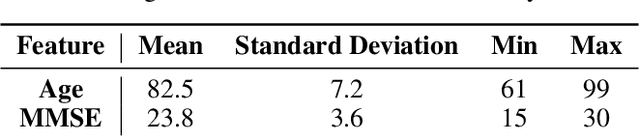

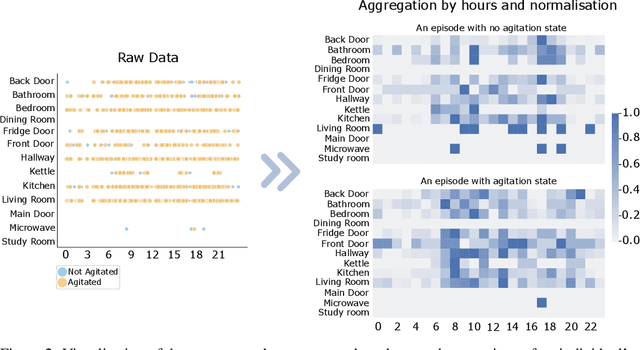

Designing A Clinically Applicable Deep Recurrent Model to Identify Neuropsychiatric Symptoms in People Living with Dementia Using In-Home Monitoring Data

Oct 19, 2021

Abstract:Agitation is one of the neuropsychiatric symptoms with high prevalence in dementia which can negatively impact the Activities of Daily Living (ADL) and the independence of individuals. Detecting agitation episodes can assist in providing People Living with Dementia (PLWD) with early and timely interventions. Analysing agitation episodes will also help identify modifiable factors such as ambient temperature and sleep as possible components causing agitation in an individual. This preliminary study presents a supervised learning model to analyse the risk of agitation in PLWD using in-home monitoring data. The in-home monitoring data includes motion sensors, physiological measurements, and the use of kitchen appliances from 46 homes of PLWD between April 2019 and June 2021. We apply a recurrent deep learning model to identify agitation episodes validated and recorded by a clinical monitoring team. We present the experiments to assess the efficacy of the proposed model. The proposed model achieves an average of 79.78% recall, 27.66% precision and 37.64% F1 scores when employing the optimal parameters, suggesting a good ability to recognise agitation events. We also discuss using machine learning models for analysing the behavioural patterns using continuous monitoring data and explore clinical applicability and the choices between sensitivity and specificity in in-home monitoring applications.
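For readers relating the recall, precision, and F1 figures above, the sketch below shows how the three metrics follow from confusion counts. The counts are hypothetical and are not taken from the study. It also illustrates a general point: averaging per-run F1 scores gives a different number than computing F1 from averaged precision and recall, so reported averages need not satisfy the harmonic-mean identity exactly.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 from true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical confusion counts for two evaluation runs: (tp, fp, fn).
runs = [(8, 20, 2), (2, 3, 8)]
metrics = [precision_recall_f1(*run) for run in runs]

# Option 1: average the per-run F1 scores.
mean_f1 = sum(m[2] for m in metrics) / len(metrics)

# Option 2: compute F1 from the averaged precision and recall.
mean_p = sum(m[0] for m in metrics) / len(metrics)
mean_r = sum(m[1] for m in metrics) / len(metrics)
f1_of_means = 2 * mean_p * mean_r / (mean_p + mean_r)

print("Mean of per-run F1:      ", round(mean_f1, 4))
print("F1 of mean precision/rec:", round(f1_of_means, 4))
```

A high-recall, low-precision operating point like the one in the first hypothetical run catches most events at the cost of many false alarms, which is the sensitivity versus specificity trade-off the abstract discusses.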
