Yu-Hsi Cheng

Automatic Concept Extraction for Concept Bottleneck-based Video Classification

Jun 21, 2022

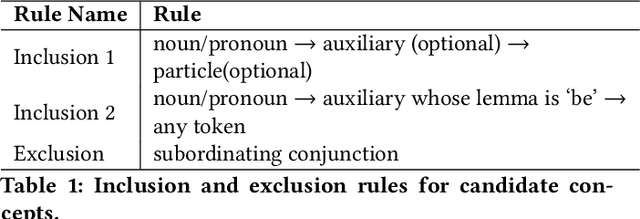

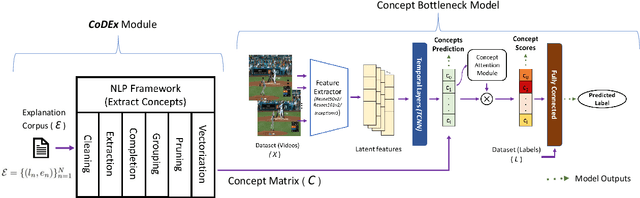

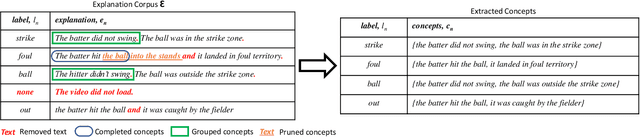

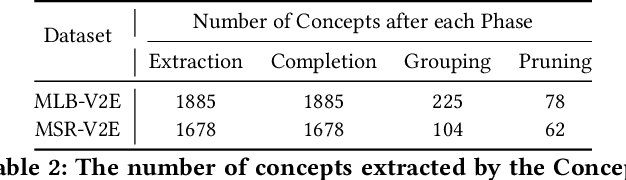

Abstract: Recent efforts in interpretable deep learning models have shown that concept-based explanation methods achieve competitive accuracy with standard end-to-end models and enable reasoning and intervention about extracted high-level visual concepts from images, e.g., identifying the wing color and beak length for bird-species classification. However, these concept bottleneck models rely on a necessary and sufficient set of predefined concepts, which is intractable for complex tasks such as video classification. For complex tasks, the labels and the relationship between visual elements span many frames, e.g., identifying a bird flying or catching prey, necessitating concepts with various levels of abstraction. To this end, we present CoDEx, an automatic Concept Discovery and Extraction module that rigorously composes a necessary and sufficient set of concept abstractions for concept-based video classification. CoDEx identifies a rich set of complex concept abstractions from natural language explanations of videos, obviating the need to predefine the amorphous set of concepts. To demonstrate our method's viability, we construct two new public datasets that combine existing complex video classification datasets with short, crowd-sourced natural language explanations for their labels. Our method elicits inherent complex concept abstractions in natural language to generalize concept-bottleneck methods to complex tasks.

flip-hoisting: Exploiting Repeated Parameters in Discrete Probabilistic Programs

Oct 19, 2021

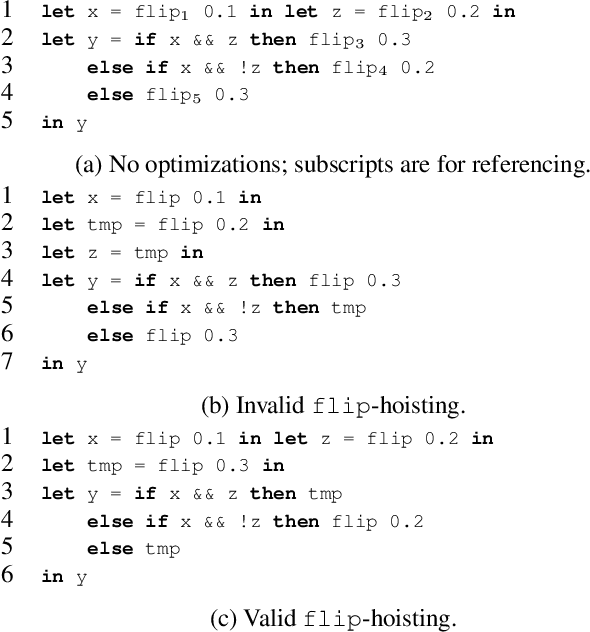

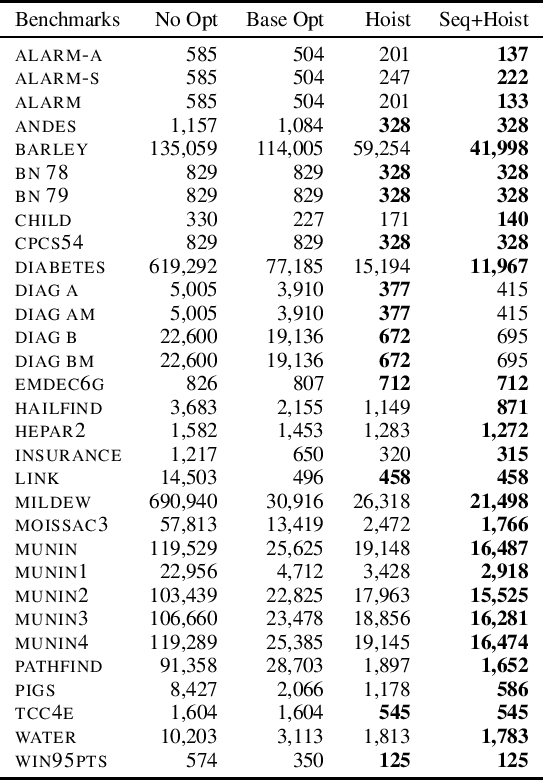

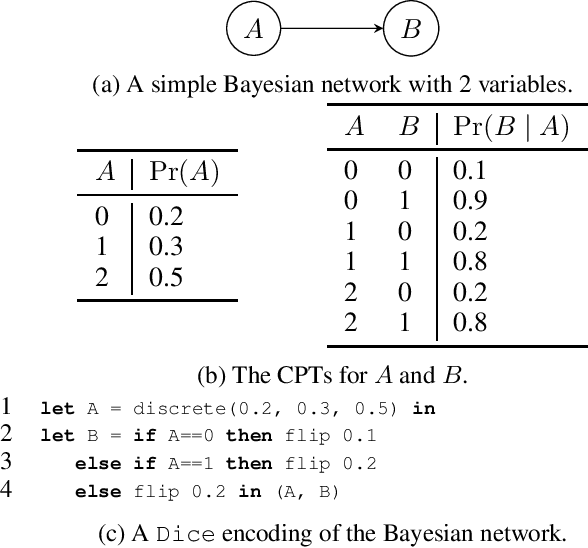

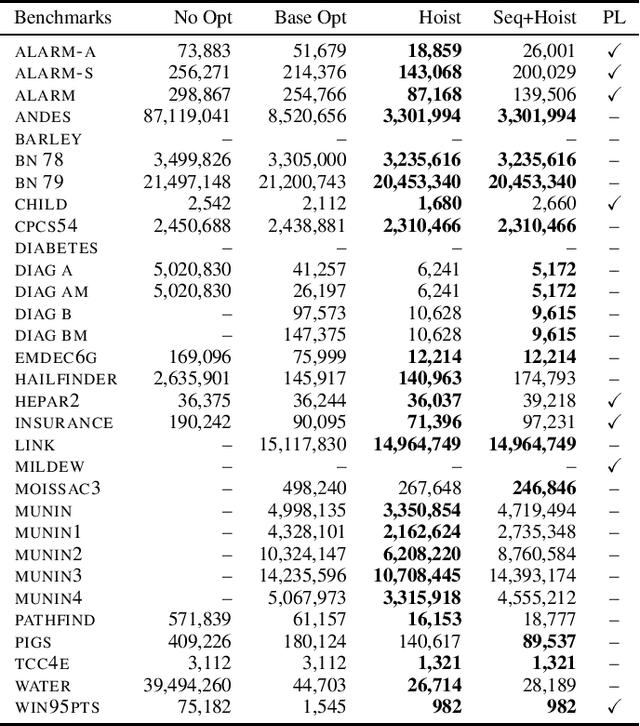

Abstract: Probabilistic programming is emerging as a popular and effective means of probabilistic modeling and an alternative to probabilistic graphical models. Probabilistic programs provide greater expressivity and flexibility in modeling probabilistic systems than graphical models, but this flexibility comes at a cost: there remains a significant disparity in performance between specialized Bayesian network solvers and probabilistic program inference algorithms. In this work we present a program analysis and associated optimization, flip-hoisting, that collapses repetitious parameters in discrete probabilistic programs to improve inference performance. flip-hoisting generalizes parameter sharing, a well-known and important optimization from discrete graphical models, to probabilistic programs. We implement flip-hoisting in an existing probabilistic programming language and show empirically that it significantly improves inference performance, narrowing the performance gap between probabilistic programs and probabilistic graphical models.
