flip-hoisting: Exploiting Repeated Parameters in Discrete Probabilistic Programs

Paper and Code

Oct 19, 2021

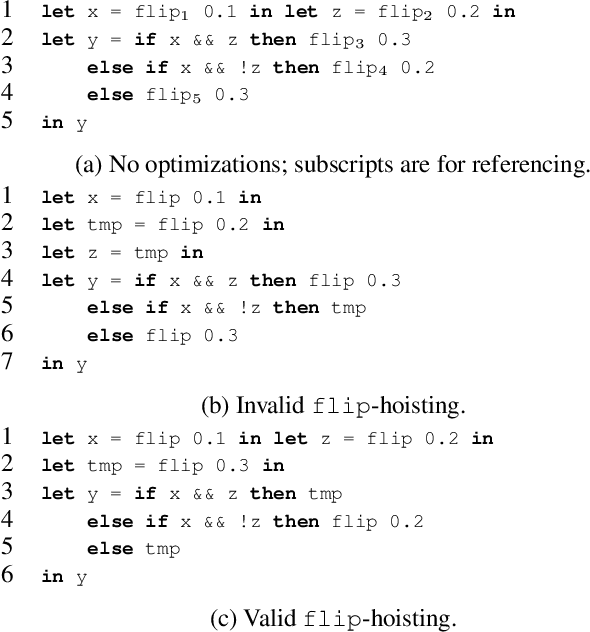

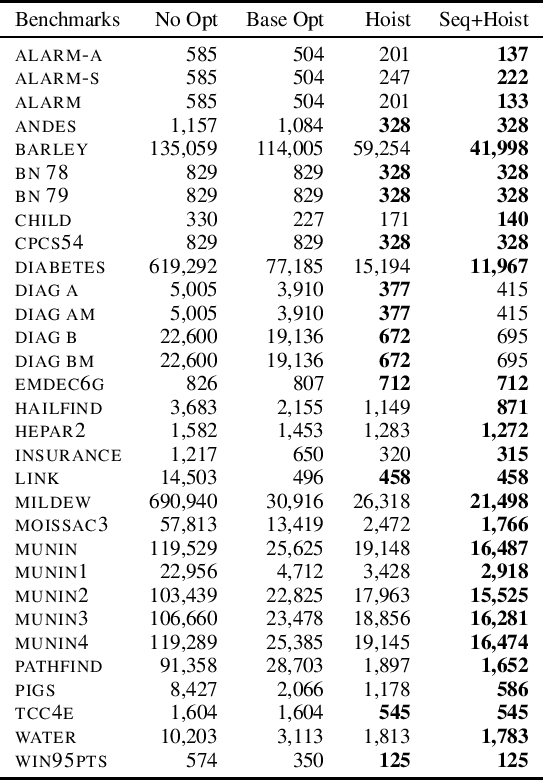

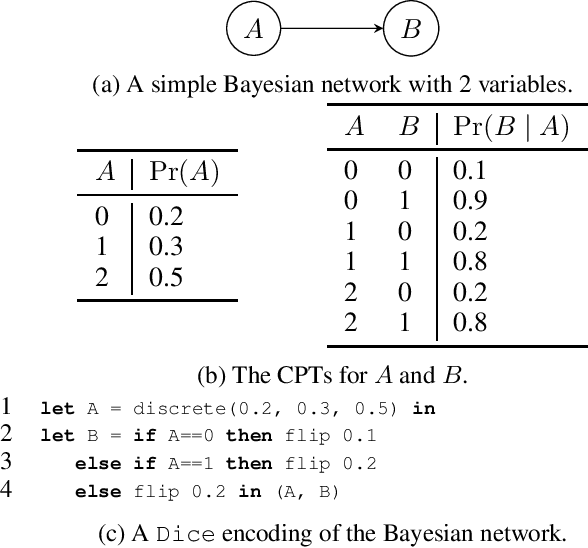

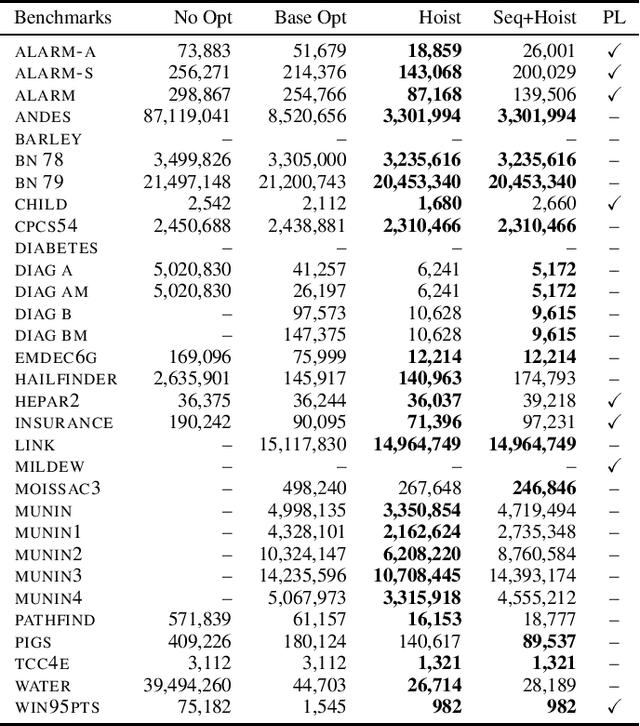

Probabilistic programming is emerging as a popular and effective means of probabilistic modeling and an alternative to probabilistic graphical models. Probabilistic programs provide greater expressivity and flexibility in modeling probabilistic systems than graphical models, but this flexibility comes at a cost: there remains a significant disparity in performance between specialized Bayesian network solvers and probabilistic program inference algorithms. In this work we present a program analysis and associated optimization, flip-hoisting, that collapses repetitious parameters in discrete probabilistic programs to improve inference performance. flip-hoisting generalizes parameter sharing - a well-known important optimization from discrete graphical models - to probabilistic programs. We implement flip-hoisting in an existing probabilistic programming language and show empirically that it significantly improves inference performance, narrowing the gap between the performances of probabilistic programs and probabilistic graphical models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge