Yordan Hristov

FAN-Trans: Online Knowledge Distillation for Facial Action Unit Detection

Nov 11, 2022

Abstract:Due to its importance in facial behaviour analysis, facial action unit (AU) detection has attracted increasing attention from the research community. Leveraging the online knowledge distillation framework, we propose the "FAN-Trans" method for AU detection. Our model consists of a hybrid network of convolution and transformer blocks to learn per-AU features and to model AU co-occurrences. The model uses a pre-trained face alignment network as the feature extractor. After further transformation by a small learnable add-on convolutional subnet, the per-AU features are fed into transformer blocks to enhance their representation. As multiple AUs often appear together, we propose a learnable attention drop mechanism in the transformer block to learn the correlation between the features for different AUs. We also design a classifier that predicts AU presence by considering all AUs' features, to explicitly capture label dependencies. Finally, we attempt to adapt online knowledge distillation in the training stage for this task, further improving the model's performance. Experiments on the BP4D and DISFA datasets demonstrate the effectiveness of the proposed method.

* 9 pages, 6 figures

Learning from Demonstration with Weakly Supervised Disentanglement

Jun 16, 2020

Abstract:Robotic manipulation tasks, such as wiping with a soft sponge, require control from multiple rich sensory modalities. Human-robot interaction, aimed at teaching robots, is difficult in this setting as there is potential for mismatch between human and machine comprehension of the rich data streams. We treat the task of interpretable learning from demonstration as an optimisation problem over a probabilistic generative model. To account for the high-dimensionality of the data, a high-capacity neural network is chosen to represent the model. The latent variables in this model are explicitly aligned with high-level notions and concepts that are manifested in a set of demonstrations. We show that such alignment is best achieved through the use of labels from the end user, in an appropriately restricted vocabulary, in contrast to the conventional approach of the designer picking a prior over the latent variables. Our approach is evaluated in the context of a table-top robot manipulation task performed by a PR2 robot -- that of dabbing liquids with a sponge (forcefully pressing a sponge and moving it along a surface). The robot provides visual information, arm joint positions and arm joint efforts. We have made videos of the task and data available - see supplementary materials at https://sites.google.com/view/weak-label-lfd

From Demonstrations to Task-Space Specifications: Using Causal Analysis to Extract Rule Parameterization from Demonstrations

Jun 08, 2020

Abstract:Learning models of user behaviour is an important problem that is broadly applicable across many application domains requiring human-robot interaction. In this work, we show that it is possible to learn generative models for distinct user behavioural types, extracted from human demonstrations, by enforcing clustering of preferred task solutions within the latent space. We use these models to differentiate between user types and to find cases with overlapping solutions. Moreover, we can alter an initially guessed solution to satisfy the preferences that constitute a particular user type by backpropagating through the learned differentiable models. An advantage of structuring generative models in this way is that we can extract causal relationships between symbols that might form part of the user's specification of the task, as manifested in the demonstrations. We further parameterize these specifications through constraint optimization in order to find a safety envelope under which motion planning can be performed. We show that the proposed method is capable of correctly distinguishing between three user types, who differ in degrees of cautiousness in their motion, while performing the task of moving objects with a kinesthetically driven robot in a tabletop environment. Our method successfully identifies the correct type, within the specified time, in 99% [97.8 - 99.8] of the cases, which outperforms an IRL baseline. We also show that our proposed method correctly changes a default trajectory to one satisfying a particular user specification even with unseen objects. The resulting trajectory is shown to be directly implementable on a PR2 humanoid robot completing the same task.

Hybrid system identification using switching density networks

Aug 06, 2019

Abstract:Behaviour cloning is a commonly used strategy for imitation learning and can be extremely effective in constrained domains. However, in cases where the dynamics of an environment may be state dependent and varying, behaviour cloning places a burden on model capacity and the number of demonstrations required. This paper introduces switching density networks, which rely on a categorical reparametrisation for hybrid system identification. This results in a network comprising a classification layer that is followed by a regression layer. We use switching density networks to predict the parameters of hybrid control laws, which are toggled by a switching layer to produce different controller outputs, when conditioned on an input state. This work shows how switching density networks can be used for hybrid system identification in a variety of tasks, successfully identifying the key joint angle goals that make up manipulation tasks, while simultaneously learning image-based goal classifiers and regression networks that predict joint angles from images. We also show that they can cluster the phase space of an inverted pendulum, identifying the balance, spin and pump controllers required to solve this task. Switching density networks can be difficult to train, but we introduce a cross entropy regularisation loss that stabilises training.
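The switching idea in the abstract above (a classification stage that toggles between control laws whose parameters come from a regression stage) can be illustrated with a minimal forward-pass sketch. This is not the paper's implementation: the proportional control law, the function name `switched_control`, and the fixed `gains` and `goals` lists are all assumptions chosen for illustration, and the hard arg-max selection shown here stands in for whatever categorical reparametrisation is used during training.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of mode logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def switched_control(
    state: float,
    mode_logits: List[float],
    gains: List[float],
    goals: List[float],
) -> Tuple[int, float]:
    """Pick one controller mode and evaluate its (assumed) proportional law.

    mode_logits: hypothetical output of a classification layer.
    gains, goals: hypothetical per-mode outputs of a regression layer.
    """
    probs = softmax(mode_logits)
    # Hard selection of the most probable mode (inference-time behaviour only).
    mode = max(range(len(probs)), key=probs.__getitem__)
    # Assumed control law: u = k * (goal - state) for the selected mode.
    u = gains[mode] * (goals[mode] - state)
    return mode, u


mode, u = switched_control(
    state=0.5,
    mode_logits=[0.1, 2.0, -1.0],
    gains=[1.0, 2.0, 3.0],
    goals=[0.0, 1.0, 2.0],
)
print(mode, u)
```

In this toy call the second mode has the largest logit, so its gain and goal determine the control output; the other modes' parameters are ignored.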

Disentangled Relational Representations for Explaining and Learning from Demonstration

Jul 31, 2019

Abstract:Learning from demonstration is an effective method for human users to instruct desired robot behaviour. However, for most non-trivial tasks of practical interest, efficient learning from demonstration depends crucially on inductive bias in the chosen structure for rewards/costs and policies. We address the case where this inductive bias comes from an exchange with a human user. We propose a method in which a learning agent utilizes the information bottleneck layer of a high-parameter variational neural model, with auxiliary loss terms, in order to ground abstract concepts such as spatial relations. The concepts are referred to in natural language instructions and are manifested in the high-dimensional sensory input stream the agent receives from the world. We evaluate the properties of the latent space of the learned model in a photorealistic synthetic environment and particularly focus on examining its usability for downstream tasks. Additionally, through a series of controlled table-top manipulation experiments, we demonstrate that the learned manifold can be used to ground demonstrations as symbolic plans, which can then be executed on a PR2 robot.

Composing Diverse Policies for Temporally Extended Tasks

Jul 18, 2019

Abstract:Temporally extended and sequenced robot motion tasks are often characterized by discontinuous switches between different types of local dynamics. These change-points can be exploited to build approximate models of the interleaving regions, which in turn allow the design of region-specific controllers. These can then be combined to create the initiation state-space of a final policy. However, such a pipeline can become challenging to implement for combinatorially complex, temporally extended tasks - especially so when sub-controllers work on different information streams, time scales and action spaces. In this paper, we introduce a method that can compose diverse policies based on scripted motion planning, dynamic motion primitives and neural networks. In order to do this, we extend the options framework to introduce a per-option dynamics module and a global function that evaluates a goal metric. Additionally, we can leverage expert demonstrations to sequence these local policies, converting the learning problem in hierarchical reinforcement learning to a planning problem at inference time. We first illustrate the core concepts with an MDP benchmark, and then with a physical gear assembly task solved on a PR2 robot. We show that the proposed approach successfully discovers the optimal sequence of policies and solves both tasks efficiently.

DynoPlan: Combining Motion Planning and Deep Neural Network based Controllers for Safe HRL

Jun 24, 2019

Abstract:Many realistic robotics tasks are best solved compositionally, through control architectures that sequentially invoke primitives and achieve error correction through the use of loops and conditionals taking the system back to alternative earlier states. Recent end-to-end approaches to task learning attempt to directly learn a single controller that solves an entire task, but this has been difficult for complex control tasks that would have otherwise required a diversity of local primitive moves, and the resulting solutions are also not easy to inspect for plan monitoring purposes. In this work, we aim to bridge the gap between hand designed and learned controllers, by representing each as an option in a hybrid hierarchical Reinforcement Learning framework - DynoPlan. We extend the options framework by adding a dynamics model and the use of a nearness-to-goal heuristic, derived from demonstrations. This translates the optimization of a hierarchical policy controller to a problem of planning with a model predictive controller. By unrolling the dynamics of each option and assessing the expected value of each future state, we can create a simple switching controller for choosing the optimal policy within a constrained time horizon similarly to hill climbing heuristic search. The individual dynamics model allows each option to iterate and be activated independently of the specific underlying instantiation, thus allowing for a mix of motion planning and deep neural network based primitives. We can assess the safety regions of the resulting hybrid controller by investigating the initiation sets of the different options, and also by reasoning about the completeness and performance guarantees of the underpinning motion planners.
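The switching controller described in the abstract above (unroll each option's dynamics model, score the predicted states with a nearness-to-goal heuristic, and activate the best option) can be sketched on a toy one-dimensional problem. Everything here is an illustrative assumption rather than the DynoPlan implementation: the scalar state, the function names `unroll` and `select_option`, the hand-written option dynamics, and the negative-distance goal metric (the abstract derives its heuristic from demonstrations).

```python
from typing import Callable, Dict, List

State = float
Dynamics = Callable[[State], State]


def unroll(dynamics: Dynamics, state: State, horizon: int) -> List[State]:
    """Predict the next `horizon` states under one option's dynamics model."""
    states = []
    for _ in range(horizon):
        state = dynamics(state)
        states.append(state)
    return states


def select_option(
    state: State,
    option_dynamics: Dict[str, Dynamics],
    goal_metric: Callable[[State], float],
    horizon: int,
) -> str:
    """Greedy, hill-climbing-style choice of the option to activate next.

    Each option is scored by the best goal-metric value among its predicted
    future states; ties keep the first option encountered.
    """
    best_name, best_value = "", float("-inf")
    for name, dynamics in option_dynamics.items():
        value = max(goal_metric(s) for s in unroll(dynamics, state, horizon))
        if value > best_value:
            best_name, best_value = name, value
    return best_name


# Hypothetical options: the real ones could be motion planners or neural policies.
options: Dict[str, Dynamics] = {
    "step_right": lambda s: s + 1.0,
    "step_left": lambda s: s - 1.0,
    "stay": lambda s: s,
}
goal = 5.0
nearness = lambda s: -abs(s - goal)  # assumed stand-in for a learned heuristic

chosen_far = select_option(0.0, options, nearness, horizon=3)
chosen_at_goal = select_option(5.0, options, nearness, horizon=1)
print(chosen_far, chosen_at_goal)
```

Because each option only needs to expose a dynamics model, the selection loop does not depend on how the option itself is implemented, which is the property the abstract relies on to mix planners and learned controllers.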

Using Causal Analysis to Learn Specifications from Task Demonstrations

Mar 04, 2019

Abstract:Learning models of user behaviour is an important problem that is broadly applicable across many application domains requiring human-robot interaction. In this work we show that it is possible to learn a generative model for distinct user behavioral types, extracted from human demonstrations, by enforcing clustering of preferred task solutions within the latent space. We use this model to differentiate between user types and to find cases with overlapping solutions. Moreover, we can alter an initially guessed solution to satisfy the preferences that constitute a particular user type by backpropagating through the learned differentiable model. An advantage of structuring generative models in this way is that it allows us to extract causal relationships between symbols that might form part of the user's specification of the task, as manifested in the demonstrations. We show that the proposed method is capable of correctly distinguishing between three user types, who differ in degrees of cautiousness in their motion, while performing the task of moving objects with a kinesthetically driven robot in a tabletop environment. Our method successfully identifies the correct type, within the specified time, in 99% [97.8 - 99.8] of the cases, which outperforms an IRL baseline. We also show that our proposed method correctly changes a default trajectory to one satisfying a particular user specification even with unseen objects. The resulting trajectory is shown to be directly implementable on a PR2 humanoid robot completing the same task.

Interpretable Latent Spaces for Learning from Demonstration

Oct 02, 2018

Abstract:Effective human-robot interaction, such as in robot learning from human demonstration, requires the learning agent to be able to ground abstract concepts (such as those contained within instructions) in a corresponding high-dimensional sensory input stream from the world. Models such as deep neural networks, with high capacity through their large parameter spaces, can be used to compress the high-dimensional sensory data to lower dimensional representations. These low-dimensional representations facilitate symbol grounding, but may not guarantee that the representation would be human-interpretable. We propose a method which utilises the grouping of user-defined symbols and their corresponding sensory observations in order to align the learnt compressed latent representation with the semantic notions contained in the abstract labels. We demonstrate this through experiments with both simulated and real-world object data, showing that such alignment can be achieved in a process of physical symbol grounding.

Grounding Symbols in Multi-Modal Instructions

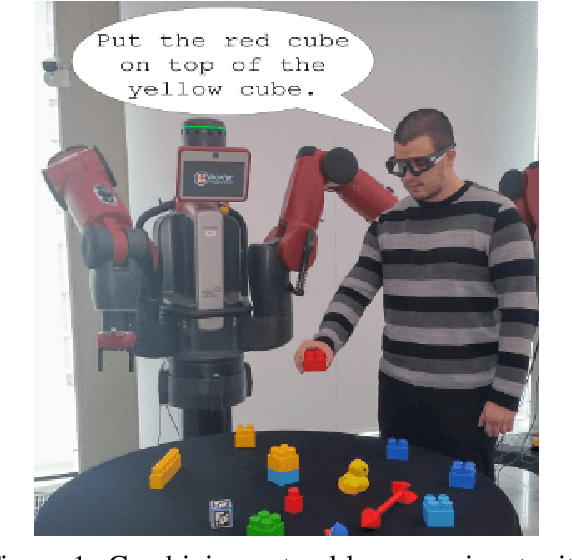

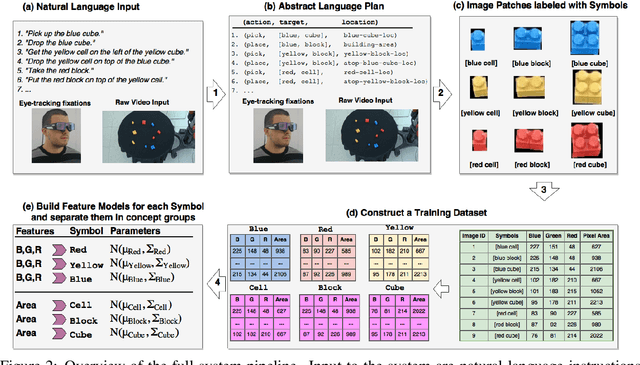

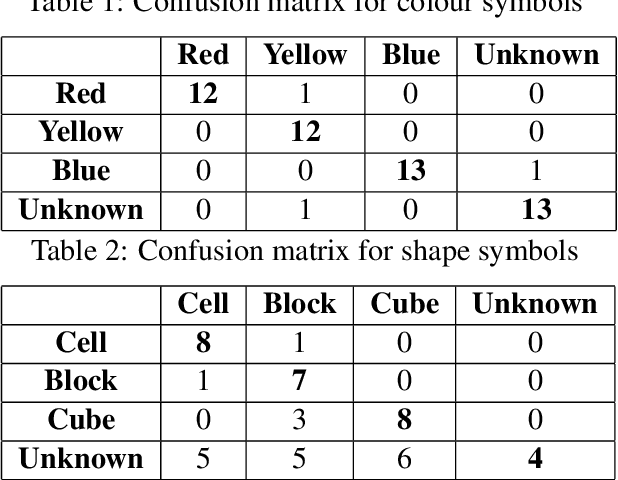

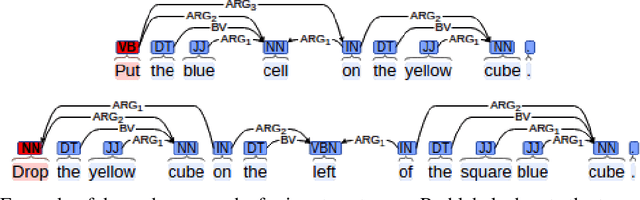

Jun 01, 2017

Abstract:As robots begin to cohabit with humans in semi-structured environments, the need arises to understand instructions involving rich variability---for instance, learning to ground symbols in the physical world. Realistically, this task must cope with small datasets consisting of a particular user's contextual assignment of meaning to terms. We present a method for processing a raw stream of cross-modal input---i.e., linguistic instructions, visual perception of a scene and a concurrent trace of 3D eye tracking fixations---to produce the segmentation of objects with a corresponding association to high-level concepts. To test our framework we present experiments in a table-top object manipulation scenario. Our results show our model learns the user's notion of colour and shape from a small number of physical demonstrations, generalising to identifying physical referents for novel combinations of the words.
