Svetlin Penkov

Neural Abstract Reasoner

Nov 12, 2020

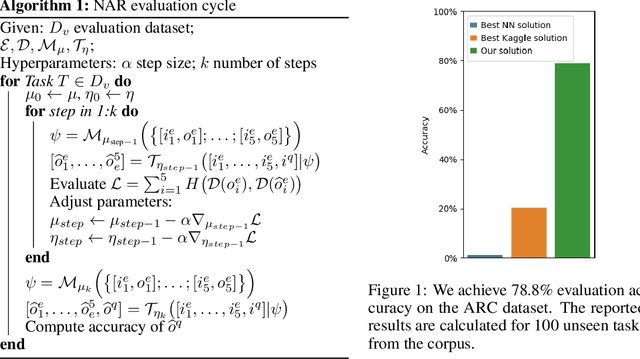

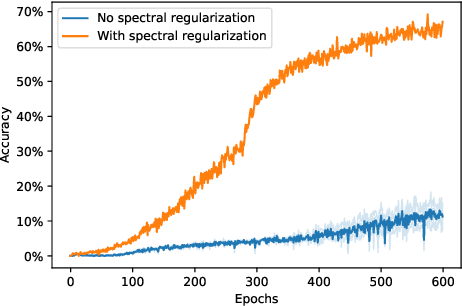

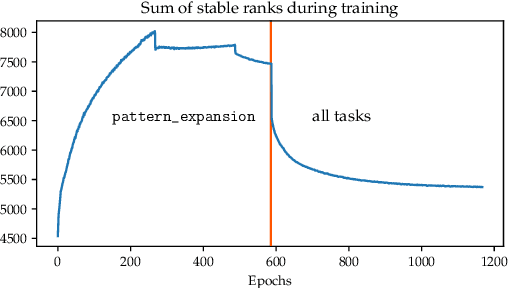

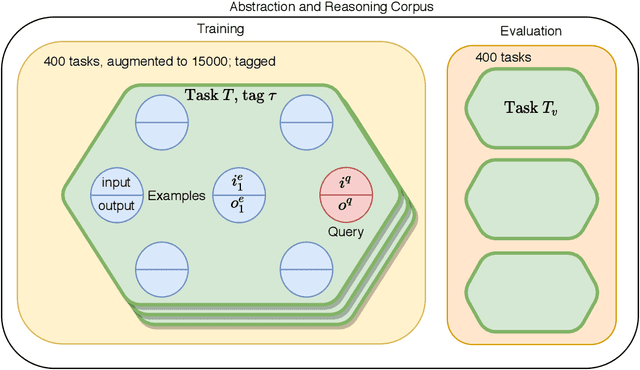

Abstract: Abstract reasoning and logic inference are difficult problems for neural networks, yet essential to their applicability in highly structured domains. In this work we demonstrate that a well-known technique, spectral regularization, can significantly boost the capabilities of a neural learner. We introduce the Neural Abstract Reasoner (NAR), a memory-augmented architecture capable of learning and using abstract rules. We show that, when trained with spectral regularization, NAR achieves $78.8\%$ accuracy on the Abstraction and Reasoning Corpus, improving performance 4 times over the best known human hand-crafted symbolic solvers. We provide some intuition for the effects of spectral regularization in the domain of abstract reasoning based on theoretical generalization bounds and Solomonoff's theory of inductive inference.
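As a rough illustration of the spectral regularization mentioned in the abstract, the sketch below penalizes the largest singular value of each weight matrix, estimated by power iteration. The abstract does not say which form of the penalty NAR uses, so the squared-spectral-norm penalty, the function names `spectral_norm` and `spectral_penalty`, and the coefficient `lam` are all assumptions made for illustration.

```python
import numpy as np


def spectral_norm(W, n_iters=50, seed=0):
    """Estimate the largest singular value of W by power iteration."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=W.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(n_iters):
        u = W @ v
        u /= np.linalg.norm(u)
        v = W.T @ u
        v /= np.linalg.norm(v)
    # u and v approximate the top left/right singular vectors.
    return float(u @ W @ v)


def spectral_penalty(weights, lam=0.01):
    """Hypothetical penalty: (lam / 2) * sum of squared spectral norms."""
    return 0.5 * lam * sum(spectral_norm(W) ** 2 for W in weights)
```

In training, a term like `spectral_penalty(weights)` would be added to the task loss so that layers with large spectral norms are discouraged. This is one common formulation, not necessarily the one used in the paper.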

Iterative Model-Based Reinforcement Learning Using Simulations in the Differentiable Neural Computer

Jun 17, 2019

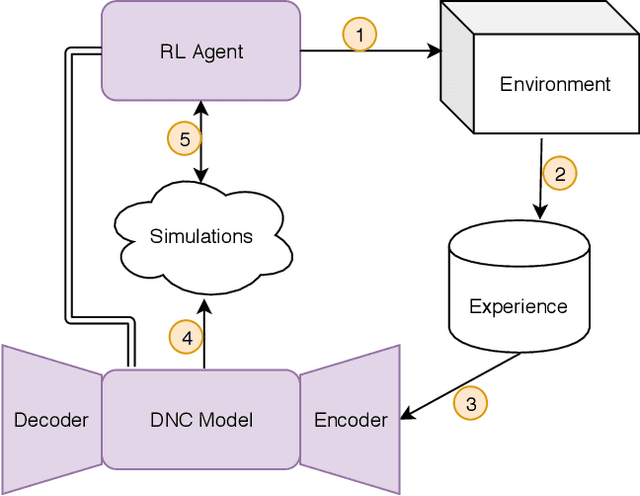

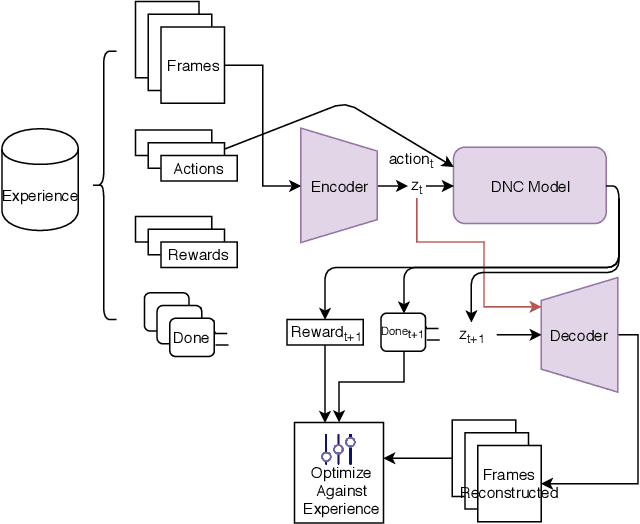

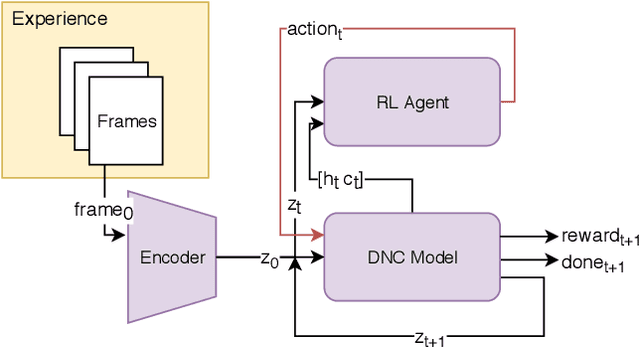

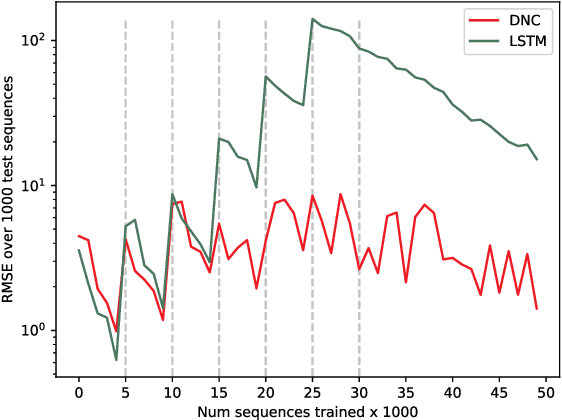

Abstract: We propose a lifelong learning architecture, the Neural Computer Agent (NCA), where a Reinforcement Learning agent is paired with a predictive model of the environment learned by a Differentiable Neural Computer (DNC). The agent and DNC model are trained in conjunction iteratively. The agent improves its policy in simulations generated by the DNC model and rolls out the policy to the live environment, collecting experiences in new portions or tasks of the environment for further learning. Experiments in two synthetic environments show that DNC models can continually learn from pixels alone to simulate new tasks as they are encountered by the agent, while the agents can be successfully trained to solve the tasks using Proximal Policy Optimization entirely in simulations.

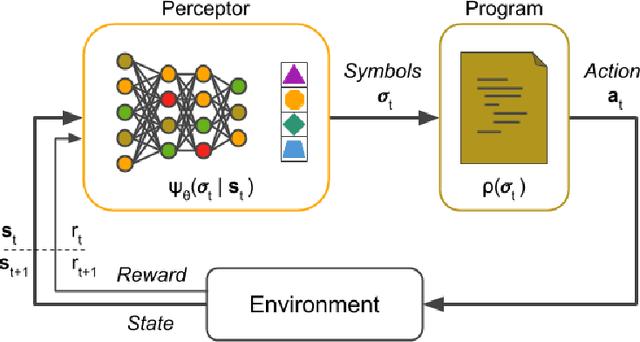

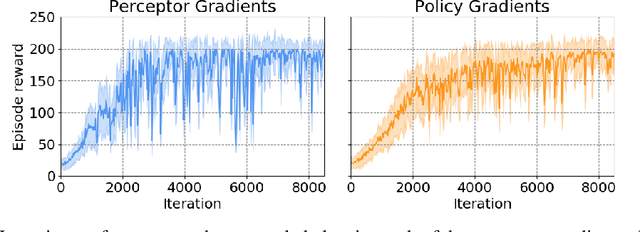

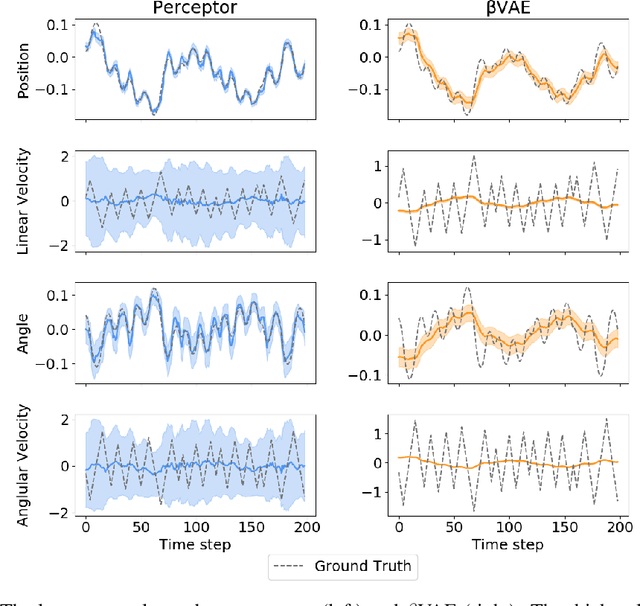

Learning Programmatically Structured Representations with Perceptor Gradients

May 02, 2019

Abstract: We present the perceptor gradients algorithm, a novel approach to learning symbolic representations based on the idea of decomposing an agent's policy into i) a perceptor network extracting symbols from raw observation data and ii) a task encoding program which maps the input symbols to output actions. We show that the proposed algorithm is able to learn representations that can be directly fed into a Linear-Quadratic Regulator (LQR) or a general purpose A* planner. Our experimental results confirm that the perceptor gradients algorithm is able to efficiently learn transferable symbolic representations as well as generate new observations according to a semantically meaningful specification.

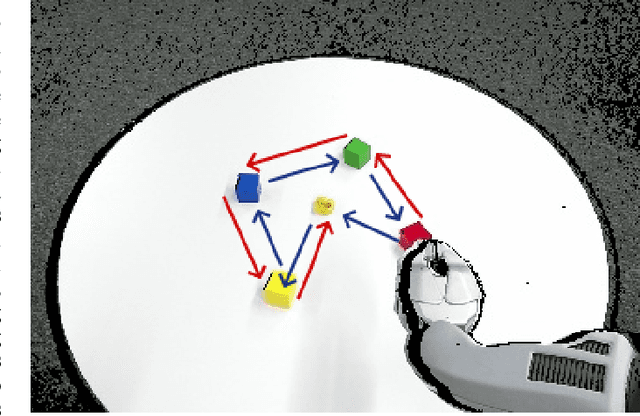

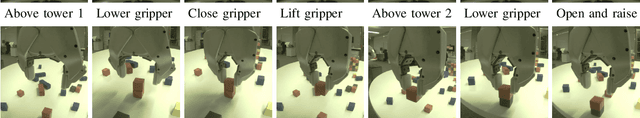

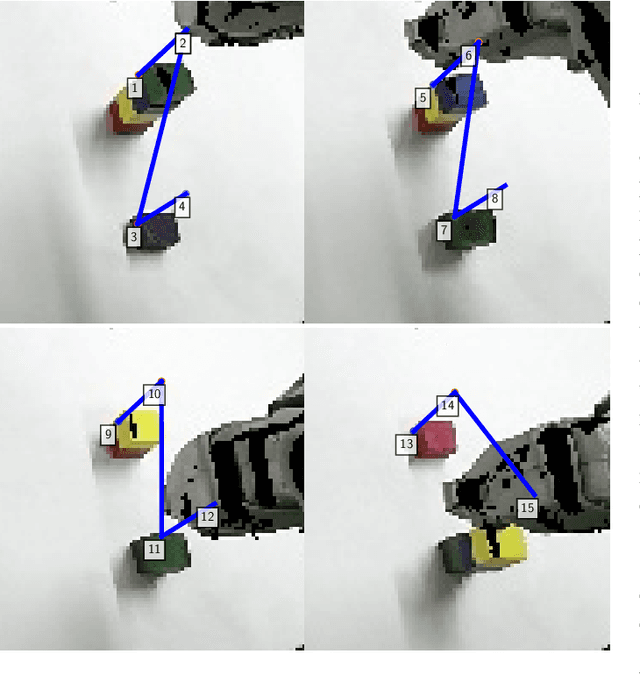

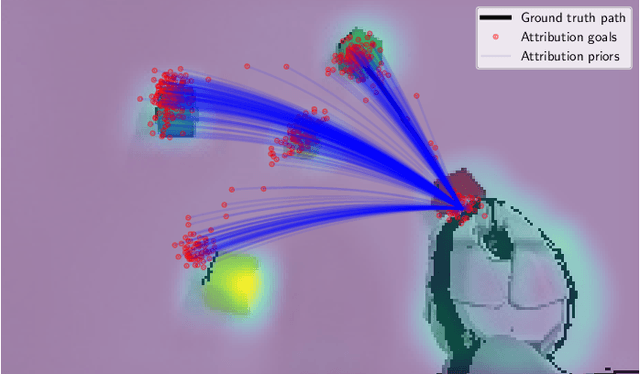

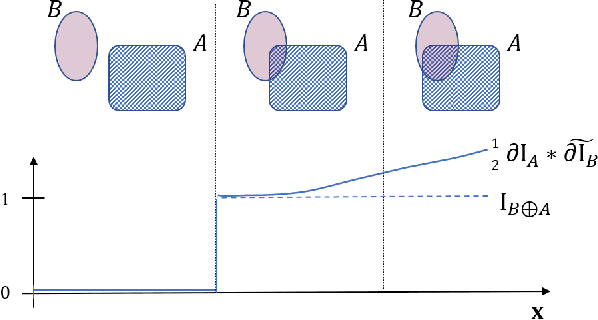

From explanation to synthesis: Compositional program induction for learning from demonstration

Feb 27, 2019

Abstract: Hybrid systems are a compact and natural mechanism with which to address problems in robotics. This work introduces an approach to learning hybrid systems from demonstrations, with an emphasis on extracting models that are explicitly verifiable and easily interpreted by robot operators. We fit a sequence of controllers using sequential importance sampling under a generative switching proportional controller task model. Here, we parameterise controllers using a proportional gain and a visually verifiable joint angle goal. Inference under this model is challenging, but we address this by introducing an attribution prior extracted from a neural end-to-end visuomotor control model. Given the sequence of controllers comprising a task, we simplify the trace using grammar parsing strategies, taking advantage of the sequence compositionality, before grounding the controllers by training perception networks to predict goals given images. Using this approach, we successfully induce a program for a visuomotor reaching task involving loops and conditionals from a single demonstration and a neural end-to-end model. In addition, we are able to discover the program used for a tower building task. We argue that computer program-like control systems are more interpretable than alternative end-to-end learning approaches, and that hybrid systems inherently allow for better generalisation across task configurations.
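To make the switching proportional controller model in the abstract concrete, here is a minimal sketch in which each controller is just a gain and a goal, and the system switches to the next controller once the current goal is reached. It uses a single scalar joint angle and simple Euler integration. The names `PController` and `run_sequence`, the switching tolerance `tol`, and the step size `dt` are hypothetical choices, not details taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class PController:
    gain: float  # proportional gain
    goal: float  # target joint angle


def run_sequence(controllers, q0, dt=0.1, tol=1e-2, max_steps=1000):
    """Run controllers in order, switching once each goal is reached."""
    q = q0
    trace = [q]
    for c in controllers:
        for _ in range(max_steps):
            if abs(c.goal - q) < tol:
                break  # goal reached: switch to the next controller
            # Proportional control: velocity command is gain * error.
            q += dt * c.gain * (c.goal - q)
            trace.append(q)
    return trace


trace = run_sequence([PController(2.0, 1.0), PController(2.0, -0.5)], q0=0.0)
```

Because every controller is described by two readable numbers, a sequence like the one above can be inspected directly, which is the kind of interpretability the abstract argues for.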

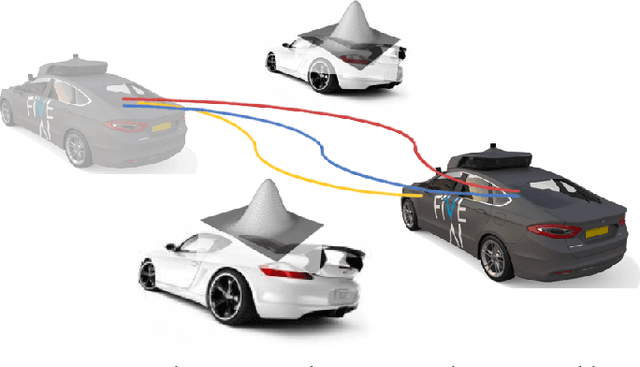

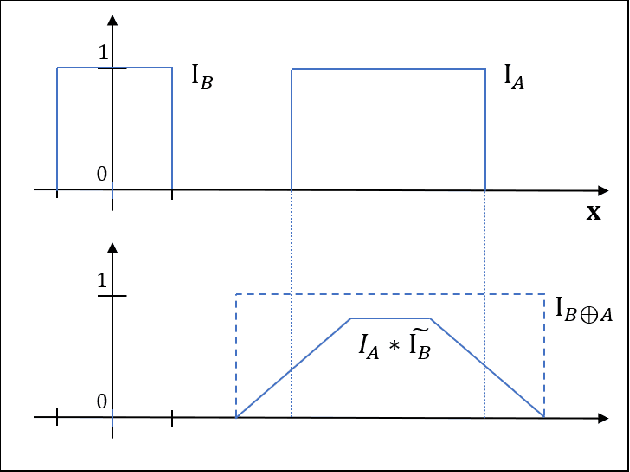

Efficient Computation of Collision Probabilities for Safe Motion Planning

Apr 15, 2018

Abstract: We address the problem of safe motion planning. As mobile robots and autonomous vehicles become increasingly prevalent in human-centered environments, the need to ensure safety in the sense of guaranteed collision-free behaviour has taken on renewed urgency. Achieving this when perceptual modules provide only noisy estimates of objects in the environment requires new approaches. Working within a probabilistic framework for describing the environment, we present methods for efficiently calculating a probabilistic risk of collision for a candidate path. This may be used to stratify a set of candidate trajectories by levels of a safety threshold. Given such a stratification, based on user-defined thresholds, motion synthesis techniques could optimise for secondary criteria with the assurance that a primary safety criterion is already being satisfied. A key contribution of this paper is the use of a 'convolution trick' to factor the calculation of integrals providing bounds on collision risk, enabling an $O(1)$ computation even in cluttered and complex environments.

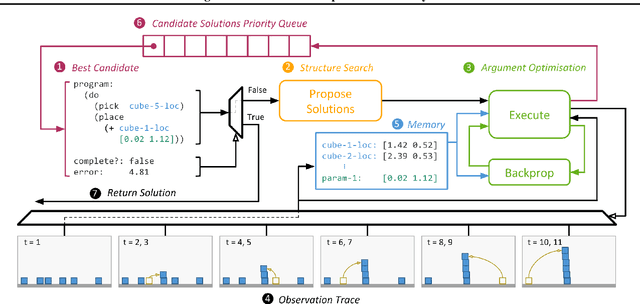

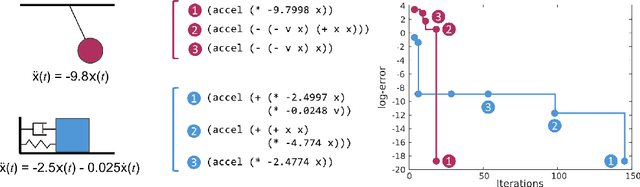

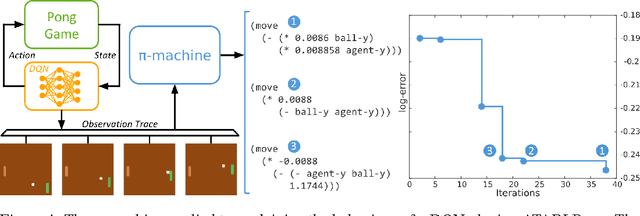

Using Program Induction to Interpret Transition System Dynamics

Jul 26, 2017

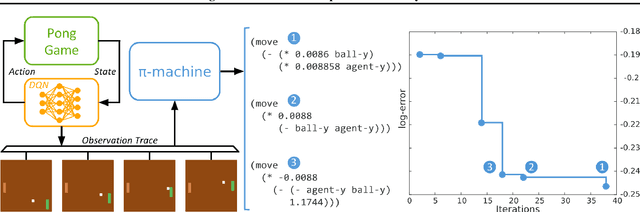

Abstract: Explaining and reasoning about processes which underlie observed black-box phenomena enables the discovery of causal mechanisms, derivation of suitable abstract representations and the formulation of more robust predictions. We propose to learn high-level functional programs in order to represent abstract models which capture the invariant structure in the observed data. We introduce the $\pi$-machine (program-induction machine), an architecture able to induce interpretable LISP-like programs from observed data traces. We propose an optimisation procedure for program learning based on backpropagation, gradient descent and A* search. We apply the proposed method to two problems: system identification of dynamical systems and explaining the behaviour of a DQN agent. Our results show that the $\pi$-machine can efficiently induce interpretable programs from individual data traces.

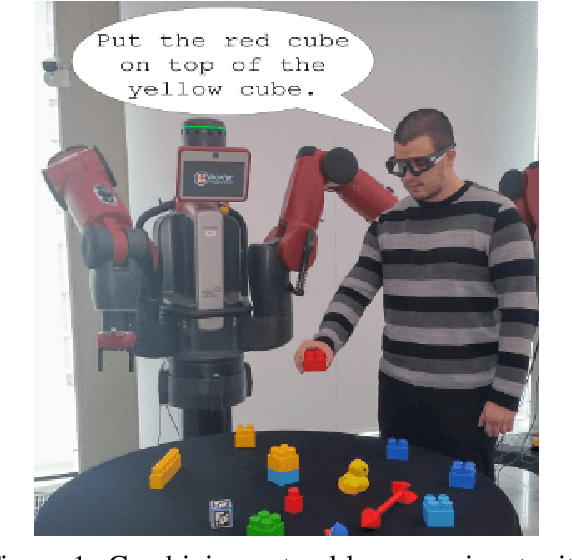

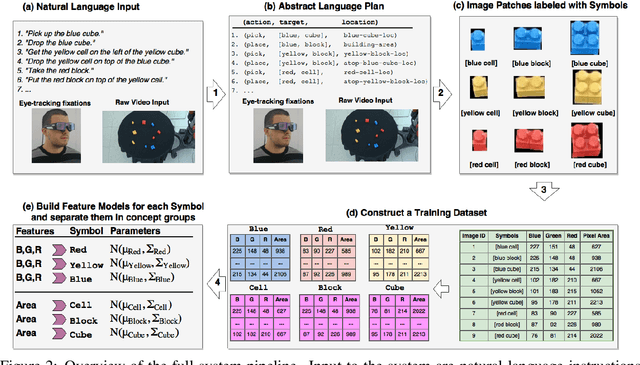

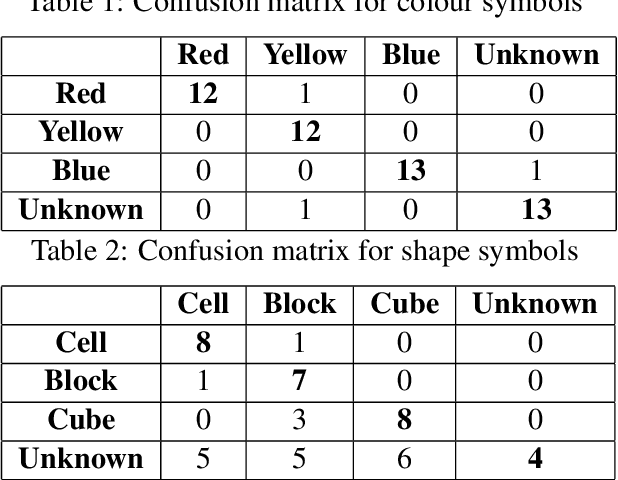

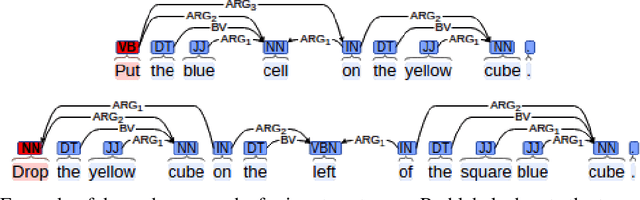

Grounding Symbols in Multi-Modal Instructions

Jun 01, 2017

Abstract: As robots begin to cohabit with humans in semi-structured environments, the need arises to understand instructions involving rich variability, for instance, learning to ground symbols in the physical world. Realistically, this task must cope with small datasets consisting of a particular user's contextual assignment of meaning to terms. We present a method for processing a raw stream of cross-modal input (i.e., linguistic instructions, visual perception of a scene and a concurrent trace of 3D eye tracking fixations) to produce the segmentation of objects with a corresponding association to high-level concepts. To test our framework we present experiments in a table-top object manipulation scenario. Our results show our model learns the user's notion of colour and shape from a small number of physical demonstrations, generalising to identifying physical referents for novel combinations of the words.

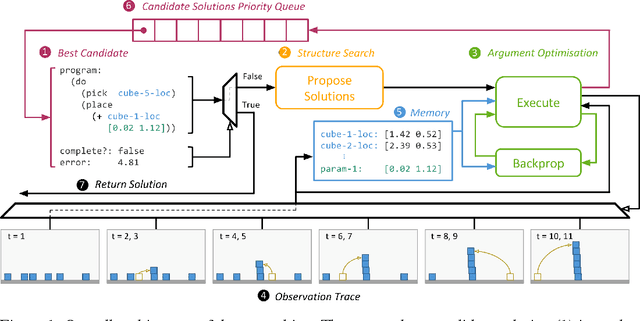

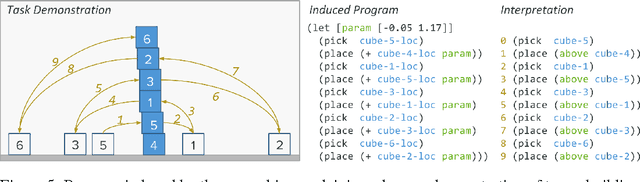

Explaining Transition Systems through Program Induction

May 23, 2017

Abstract: Explaining and reasoning about processes which underlie observed black-box phenomena enables the discovery of causal mechanisms, derivation of suitable abstract representations and the formulation of more robust predictions. We propose to learn high-level functional programs in order to represent abstract models which capture the invariant structure in the observed data. We introduce the $\pi$-machine (program-induction machine), an architecture able to induce interpretable LISP-like programs from observed data traces. We propose an optimisation procedure for program learning based on backpropagation, gradient descent and A* search. We apply the proposed method to three problems: system identification of dynamical systems, explaining the behaviour of a DQN agent and learning by demonstration in a human-robot interaction scenario. Our experimental results show that the $\pi$-machine can efficiently induce interpretable programs from individual data traces.
