Yongsong Huang

GTFMN: Guided Texture and Feature Modulation Network for Low-Light Image Enhancement and Super-Resolution

Jan 27, 2026

Abstract:Low-light image super-resolution (LLSR) is a challenging task due to the coupled degradation of low resolution and poor illumination. To address this, we propose the Guided Texture and Feature Modulation Network (GTFMN), a novel framework that decouples the LLSR task into two sub-problems: illumination estimation and texture restoration. First, our network employs a dedicated Illumination Stream whose purpose is to predict a spatially varying illumination map that accurately captures lighting distribution. Further, this map is utilized as an explicit guide within our novel Illumination Guided Modulation Block (IGM Block) to dynamically modulate features in the Texture Stream. This mechanism achieves spatially adaptive restoration, enabling the network to intensify enhancement in poorly lit regions while preserving details in well-exposed areas. Extensive experiments demonstrate that GTFMN achieves the best performance among competing methods on the OmniNormal5 and OmniNormal15 datasets, outperforming them in both quantitative metrics and visual quality.
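
The idea of using an illumination map to modulate texture features per pixel can be illustrated with a toy sketch. This is not the IGM Block itself, whose design the abstract does not specify; the function name `modulate_by_illumination`, the `max_gain` parameter, and the linear gain rule are all assumptions made for illustration. Illumination values are assumed to lie in [0, 1], with 0 meaning dark.

```python
def modulate_by_illumination(features, illumination, max_gain=2.0):
    """Scale each feature by a gain that grows as local illumination drops.

    Hypothetical rule: gain = 1 at illumination 1 (well exposed, left as-is)
    and gain = max_gain at illumination 0 (dark, enhanced most).
    Both inputs are 2D lists of the same shape.
    """
    modulated = []
    for feature_row, light_row in zip(features, illumination):
        row = []
        for value, light in zip(feature_row, light_row):
            gain = 1.0 + (max_gain - 1.0) * (1.0 - light)
            row.append(value * gain)
        modulated.append(row)
    return modulated


if __name__ == "__main__":
    feats = [[1.0, 1.0], [0.5, 0.5]]
    light = [[1.0, 0.0], [0.5, 1.0]]
    print(modulate_by_illumination(feats, light))
```

A learned block would predict the gain (and possibly a shift) from the illumination map rather than use a fixed formula; the sketch only shows the spatially varying behaviour described above.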

U-Harmony: Enhancing Joint Training for Segmentation Models with Universal Harmonization

Jan 21, 2026

Abstract:In clinical practice, medical segmentation datasets are often limited and heterogeneous, with variations in modalities, protocols, and anatomical targets across institutions. Existing deep learning models struggle to jointly learn from such diverse data, often sacrificing either generalization or domain-specific knowledge. To overcome these challenges, we propose a joint training method called Universal Harmonization (U-Harmony), which can be integrated into deep learning-based architectures with a domain-gated head, enabling a single segmentation model to learn from heterogeneous datasets simultaneously. By integrating U-Harmony, our approach sequentially normalizes and then denormalizes feature distributions to mitigate domain-specific variations while preserving original dataset-specific knowledge. More appealingly, our framework also supports universal modality adaptation, allowing the seamless learning of new imaging modalities and anatomical classes. Extensive experiments on cross-institutional brain lesion datasets demonstrate the effectiveness of our approach, establishing a new benchmark for robust and adaptable 3D medical image segmentation models in real-world clinical settings.

Dual Prompting for Diverse Count-level PET Denoising

May 05, 2025

Abstract:Positron emission tomography (PET) volumes to be denoised inherently have diverse count levels, which makes it challenging for a unified model to handle varied cases. In this work, we turn to the recently flourishing field of prompt learning to achieve generalizable PET denoising across different count levels. Specifically, we propose dual prompts to guide PET denoising in a divide-and-conquer manner, i.e., an explicit count-level prompt to provide the specific prior information and an implicit general denoising prompt to encode the essential PET denoising knowledge. Then, a novel prompt fusion module is developed to unify the heterogeneous prompts, followed by a prompt-feature interaction module to inject prompts into the features. The prompts are able to dynamically guide the noise-conditioned denoising process. Therefore, we are able to efficiently train a unified denoising model for various count levels, and deploy it to different cases with personalized prompts. We evaluated our method on 1940 low-count 3D PET volumes with uniformly randomly selected 13-22\% fractions of events from 97 $^{18}$F-MK6240 tau PET studies. The results show that our dual prompting largely improves performance when the count level is informed and outperforms the count-conditional model.

IRSRMamba: Infrared Image Super-Resolution via Mamba-based Wavelet Transform Feature Modulation Model

May 16, 2024

Abstract:Infrared (IR) image super-resolution faces challenges from homogeneous background pixel distributions and sparse target regions, requiring models that effectively handle long-range dependencies and capture detailed local-global information. Recent advancements in Mamba-based (Selective Structured State Space Model) models have shown significant potential in visual tasks, suggesting their applicability for IR enhancement. In this work, we introduce IRSRMamba: Infrared Image Super-Resolution via Mamba-based Wavelet Transform Feature Modulation Model, a novel Mamba-based model designed specifically for IR image super-resolution. This model enhances the restoration of context-sparse target details through its advanced dependency modeling capabilities. Additionally, a new wavelet transform feature modulation block improves multi-scale receptive field representation, capturing both global and local information efficiently. Comprehensive evaluations confirm that IRSRMamba outperforms existing models on multiple benchmarks. This research advances IR super-resolution and demonstrates the potential of Mamba-based models in IR image processing. Code is available at \url{https://github.com/yongsongH/IRSRMamba}.

Learn From Orientation Prior for Radiograph Super-Resolution: Orientation Operator Transformer

Dec 27, 2023

Abstract:Background and objective: High-resolution radiographic images play a pivotal role in the early diagnosis and treatment of skeletal muscle-related diseases. It is promising to enhance image quality by introducing single-image super-resolution (SISR) models into the radiology image field. However, the conventional image pipeline, which can learn a mixed mapping between SR and denoising from the color space and inter-pixel patterns, poses a particular challenge for radiographic images with limited pattern features. To address this issue, this paper introduces a novel approach: Orientation Operator Transformer - $O^{2}$former. Methods: We incorporate an orientation operator in the encoder to enhance sensitivity to denoising mapping and to integrate orientation prior. Furthermore, we propose a multi-scale feature fusion strategy to amalgamate features captured by different receptive fields with the directional prior, thereby providing a more effective latent representation for the decoder. Based on these innovative components, we propose a transformer-based SISR model, i.e., $O^{2}$former, specifically designed for radiographic images. Results: The experimental results demonstrate that our method achieves the best or second-best performance in the objective metrics compared with the competitors at $\times 4$ upsampling factor. Qualitatively, more objective details are recovered. Conclusions: In this study, we propose a novel framework called $O^{2}$former for radiological image super-resolution tasks, which improves the reconstruction model's performance by introducing an orientation operator and multi-scale feature fusion strategy. Our approach is promising for further advancing the radiographic image enhancement field.

Infrared Image Super-Resolution via GAN

Dec 01, 2023

Abstract:The ability of generative models to accurately fit data distributions has resulted in their widespread adoption and success in fields such as computer vision and natural language processing. In this chapter, we provide a brief overview of the application of generative models in the domain of infrared (IR) image super-resolution, including a discussion of the various challenges and adversarial training methods employed. We propose potential areas for further investigation and advancement in the application of generative models for IR image super-resolution.

Target-oriented Domain Adaptation for Infrared Image Super-Resolution

Nov 15, 2023

Abstract:Recent efforts have explored leveraging visible light images to enrich texture details in infrared (IR) super-resolution. However, this direct adaptation approach often becomes a double-edged sword, as it improves texture at the cost of introducing noise and blurring artifacts. To address these challenges, we propose the Target-oriented Domain Adaptation SRGAN (DASRGAN), an innovative framework specifically engineered for robust IR super-resolution model adaptation. DASRGAN operates on the synergy of two key components: 1) Texture-Oriented Adaptation (TOA) to refine texture details meticulously, and 2) Noise-Oriented Adaptation (NOA), dedicated to minimizing noise transfer. Specifically, TOA uniquely integrates a specialized discriminator, incorporating a prior extraction branch, and employs a Sobel-guided adversarial loss to align texture distributions effectively. Concurrently, NOA utilizes a noise adversarial loss to distinctly separate the generative and Gaussian noise pattern distributions during adversarial training. Our extensive experiments confirm DASRGAN's superiority. Comparative analyses against leading methods across multiple benchmarks and upsampling factors reveal that DASRGAN sets new state-of-the-art performance standards. Code is available at \url{https://github.com/yongsongH/DASRGAN}.
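
The "Sobel-guided" term refers to the Sobel operator, a standard edge filter. As a minimal sketch of the kind of texture prior such a loss could be built on, the following computes the Sobel gradient magnitude of a small grayscale image. It covers only the operator, not the adversarial loss or DASRGAN's discriminator, and the function name `sobel_magnitude` is an illustrative assumption.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude for the interior pixels of a 2D list.

    Border pixels are skipped, so the output is (H-2) x (W-2).
    """
    height, width = len(image), len(image[0])
    result = []
    for i in range(1, height - 1):
        row = []
        for j in range(1, width - 1):
            gx = gy = 0.0
            for di in range(3):
                for dj in range(3):
                    pixel = image[i + di - 1][j + dj - 1]
                    gx += SOBEL_X[di][dj] * pixel
                    gy += SOBEL_Y[di][dj] * pixel
            row.append(math.hypot(gx, gy))
        result.append(row)
    return result


if __name__ == "__main__":
    # A vertical step edge: left half dark, right half bright.
    step = [[0, 0, 1, 1] for _ in range(4)]
    print(sobel_magnitude(step))
```

On the step-edge image every interior pixel touches the edge, so the response is uniform and non-zero; on a constant image it is zero everywhere.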

Vicinal Feature Statistics Augmentation for Federated 3D Medical Volume Segmentation

Oct 23, 2023

Abstract:Federated learning (FL) enables multiple client medical institutes to collaboratively train a deep learning (DL) model with privacy protection. However, the performance of FL can be constrained by the limited availability of labeled data in small institutes and the heterogeneous (i.e., non-i.i.d.) data distribution across institutes. Though data augmentation has been a proven technique to boost the generalization capabilities of conventional centralized DL as a "free lunch", its application in FL is largely underexplored. Notably, constrained by costly labeling, 3D medical segmentation generally relies on data augmentation. In this work, we aim to develop a vicinal feature-level data augmentation (VFDA) scheme to efficiently alleviate the local feature shift and facilitate collaborative training for privacy-aware FL segmentation. We take both the inner- and inter-institute divergence into consideration, without the need for cross-institute transfer of raw data or their mixup. Specifically, we exploit the batch-wise feature statistics (e.g., mean and standard deviation) in each institute to abstractly represent the discrepancy of data, and model each feature statistic probabilistically via a Gaussian prototype, with the mean corresponding to the original statistic and the variance quantifying the augmentation scope. From the vicinal risk minimization perspective, novel feature statistics can be drawn from the Gaussian distribution to fulfill augmentation. The variance is explicitly derived from the data bias in each individual institute and the underlying feature statistics characterized by all participating institutes. The added-on VFDA consistently yielded marked improvements over six advanced FL methods on both 3D brain tumor and cardiac segmentation.

* 28th biennial international conference on Information Processing in Medical Imaging (IPMI 2023): Oral Paper
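
The feature-statistics augmentation described in this abstract can be sketched for a single feature channel as follows. This is a simplified illustration under stated assumptions, not the VFDA implementation: the augmentation scopes `mean_scope` and `std_scope` are passed in as fixed hypothetical parameters, whereas the abstract says the variance is derived from per-institute data bias and statistics shared across institutes. The function name is also an assumption.

```python
import random
import statistics


def augment_feature_statistics(features, mean_scope, std_scope, rng):
    """Re-style one channel of batch features with sampled statistics.

    1. Measure the batch mean and standard deviation.
    2. Draw a new mean and std from Gaussians centred on the originals.
    3. Normalize the features, then rescale with the sampled statistics.
    Returns (augmented_features, new_mean, new_std).
    """
    mean = statistics.fmean(features)
    std = statistics.pstdev(features) or 1e-6  # guard against constant input
    new_mean = rng.gauss(mean, mean_scope)
    new_std = abs(rng.gauss(std, std_scope))  # keep the scale non-negative
    augmented = [(x - mean) / std * new_std + new_mean for x in features]
    return augmented, new_mean, new_std


if __name__ == "__main__":
    rng = random.Random(0)
    batch = [0.2, 0.5, 0.9, 1.4]
    augmented, new_mean, new_std = augment_feature_statistics(batch, 0.1, 0.05, rng)
    print(augmented, new_mean, new_std)
```

Because only statistics are sampled, no raw data or features from other institutes are needed at augmentation time, which matches the privacy constraint stated above.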

Infrared Image Super-Resolution: Systematic Review, and Future Trends

Dec 22, 2022

Abstract:Image Super-Resolution (SR) is essential for a wide range of computer vision and image processing tasks. Investigating infrared (IR) image (or thermal images) super-resolution is a continuing concern within the development of deep learning. This survey aims to provide a comprehensive perspective of IR image super-resolution, including its applications, hardware imaging system dilemmas, and taxonomy of image processing methodologies. In addition, the datasets and evaluation metrics in IR image super-resolution tasks are also discussed. Furthermore, the deficiencies in current technologies and possible promising directions for the community to explore are highlighted. To cope with the rapid development in this field, we intend to regularly update the relevant excellent work at \url{https://github.com/yongsongH/Infrared_Image_SR_Survey}

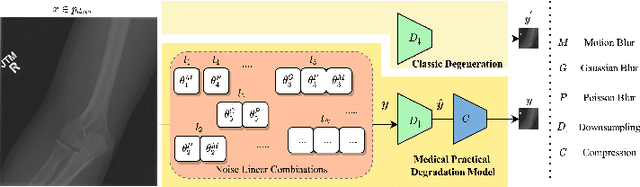

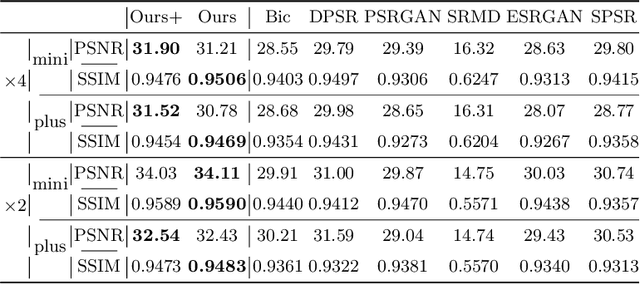

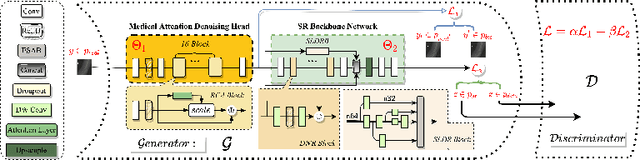

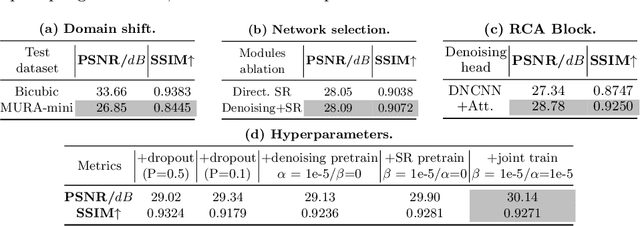

Rethinking Degradation: Radiograph Super-Resolution via AID-SRGAN

Aug 05, 2022

Abstract:In this paper, we present a medical AttentIon Denoising Super Resolution Generative Adversarial Network (AID-SRGAN) for radiographic image super-resolution. First, we present a practical medical degradation model that considers various degradation factors beyond downsampling. To the best of our knowledge, this is the first composite degradation model proposed for radiographic images. Furthermore, we propose AID-SRGAN, which can simultaneously denoise and generate high-resolution (HR) radiographs. In this model, we introduce an attention mechanism into the denoising module to make it more robust to complicated degradation. Finally, the SR module reconstructs the HR radiographs using the "clean" low-resolution (LR) radiographs. In addition, we propose a separate-joint training approach to train the model, and extensive experiments are conducted to show that the proposed method is superior to its counterparts; e.g., our proposed method achieves $31.90$ of PSNR with a scale factor of $4 \times$, which is $7.05 \%$ higher than that obtained by recent work, SPSR [16]. Our dataset and code will be made available at: https://github.com/yongsongH/AIDSRGAN-MICCAI2022.
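
For readers unfamiliar with the metric, PSNR is defined as $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$ in dB. The sketch below implements that standard definition and works out the baseline value implied by the reported figures. It assumes the $7.05\%$ gain is relative to the baseline's PSNR value in dB, which the abstract does not state explicitly; the function names and the 8-bit `max_value` default are illustrative assumptions.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


def implied_baseline(reported, relative_gain_percent):
    """Baseline value such that `reported` is `relative_gain_percent` higher."""
    return reported / (1.0 + relative_gain_percent / 100.0)


if __name__ == "__main__":
    # Under the stated assumption, 31.90 dB being 7.05% higher implies
    # a baseline of roughly 29.80 dB.
    print(round(implied_baseline(31.90, 7.05), 2))
```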
