Yongguang Li

Revisiting CLIP for SF-OSDA: Unleashing Zero-Shot Potential with Adaptive Threshold and Training-Free Feature Filtering

Apr 19, 2025

Abstract: Source-Free Unsupervised Open-Set Domain Adaptation (SF-OSDA) methods using CLIP face significant issues: (1) while heavily dependent on domain-specific threshold selection, existing methods employ simple fixed thresholds, underutilizing CLIP's zero-shot potential in SF-OSDA scenarios; and (2) they overlook intrinsic class tendencies while employing complex training to enforce feature separation, incurring deployment costs and feature shifts that compromise CLIP's generalization ability. To address these issues, we propose CLIPXpert, a novel SF-OSDA approach that integrates two key components: an adaptive thresholding strategy and an unknown-class feature filtering module. Specifically, the Box-Cox GMM-Based Adaptive Thresholding (BGAT) module dynamically determines the optimal threshold by estimating sample score distributions, balancing known-class recognition and unknown-class sample detection. Additionally, the Singular Value Decomposition (SVD)-Based Unknown-Class Feature Filtering (SUFF) module reduces the tendency of unknown-class samples towards known classes, improving the separation between known and unknown classes. Experiments show that our source-free and training-free method outperforms the state-of-the-art trained approach UOTA by 1.92% on the DomainNet dataset, achieves SOTA-comparable performance on datasets such as Office-Home, and surpasses other SF-OSDA methods. This not only validates the effectiveness of our proposed method but also highlights CLIP's strong zero-shot potential for SF-OSDA tasks.
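The abstract does not spell out how BGAT computes its threshold, so the following is only a rough sketch of the general idea it names: apply a Box-Cox transform to per-sample scores, fit a two-component Gaussian mixture, and place the threshold where the two components cross. Everything specific here is an assumption for illustration, not the authors' implementation: the scores are taken to be positive confidence values, the Box-Cox parameter `lam` is fixed rather than estimated, and the function names (`box_cox`, `fit_two_gaussians`, `adaptive_threshold`) are hypothetical.

```python
import math
import random


def box_cox(x, lam):
    """Box-Cox transform of a positive value x with parameter lam."""
    return math.log(x) if lam == 0 else (x ** lam - 1.0) / lam


def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_two_gaussians(values, iters=100):
    """Fit a two-component 1-D Gaussian mixture with plain EM."""
    ordered = sorted(values)
    half = len(ordered) // 2
    # Initialise the means from the lower and upper halves of the data.
    means = [sum(ordered[:half]) / half,
             sum(ordered[half:]) / (len(ordered) - half)]
    overall = sum(values) / len(values)
    var0 = sum((v - overall) ** 2 for v in values) / len(values) + 1e-6
    variances = [var0, var0]
    weights = [0.5, 0.5]
    for _ in range(iters):
        # E-step: responsibility of each component for each value.
        resp = []
        for v in values:
            p = [weights[k] * normal_pdf(v, means[k], variances[k]) for k in range(2)]
            total = p[0] + p[1] + 1e-300
            resp.append((p[0] / total, p[1] / total))
        # M-step: re-estimate weights, means, and variances.
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            weights[k] = nk / len(values)
            means[k] = sum(r[k] * v for r, v in zip(resp, values)) / nk
            variances[k] = sum(r[k] * (v - means[k]) ** 2
                               for r, v in zip(resp, values)) / nk + 1e-6
    return weights, means, variances


def adaptive_threshold(scores, lam=0.5, grid=1000):
    """Return a threshold in the original score space (assumed fixed lam)."""
    transformed = [box_cox(s, lam) for s in scores]
    weights, means, variances = fit_two_gaussians(transformed)
    lo, hi = sorted(range(2), key=lambda k: means[k])
    # Scan from the low-score mean to the high-score mean and stop where
    # the high-score component becomes at least as likely as the low one.
    t = means[lo]
    for i in range(grid + 1):
        t = means[lo] + (means[hi] - means[lo]) * i / grid
        p_hi = weights[hi] * normal_pdf(t, means[hi], variances[hi])
        p_lo = weights[lo] * normal_pdf(t, means[lo], variances[lo])
        if p_hi >= p_lo:
            break
    # Invert the Box-Cox transform to get back to the score scale.
    return math.exp(t) if lam == 0 else (lam * t + 1.0) ** (1.0 / lam)


# Synthetic scores: a low-confidence group and a high-confidence group.
rng = random.Random(0)
scores = [min(max(rng.gauss(0.3, 0.05), 0.01), 0.99) for _ in range(200)]
scores += [min(max(rng.gauss(0.8, 0.05), 0.01), 0.99) for _ in range(200)]
threshold = adaptive_threshold(scores)
```

In this sketch, samples scoring below `threshold` would be treated as unknown-class candidates and the rest as known-class. In practice the Box-Cox parameter is usually estimated from the data rather than fixed, and the choice of score is method-specific.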

CLIP-Powered Domain Generalization and Domain Adaptation: A Comprehensive Survey

Apr 19, 2025

Abstract: As machine learning evolves, domain generalization (DG) and domain adaptation (DA) have become crucial for enhancing model robustness across diverse environments. Contrastive Language-Image Pretraining (CLIP) plays a significant role in these tasks, offering powerful zero-shot capabilities that allow models to perform effectively in unseen domains. However, there remains a significant gap in the literature, as no comprehensive survey currently exists that systematically explores the applications of CLIP in DG and DA, highlighting the necessity for this review. This survey presents a comprehensive review of CLIP's applications in DG and DA. In DG, we categorize methods into optimizing prompt learning for task alignment and leveraging CLIP as a backbone for effective feature extraction, both enhancing model adaptability. For DA, we examine both source-available methods utilizing labeled source data and source-free approaches primarily based on target domain data, emphasizing knowledge transfer mechanisms and strategies for improved performance across diverse contexts. Key challenges, including overfitting, domain diversity, and computational efficiency, are addressed, alongside future research opportunities to advance robustness and efficiency in practical applications. By synthesizing existing literature and pinpointing critical gaps, this survey provides valuable insights for researchers and practitioners, proposing directions for effectively leveraging CLIP to enhance methodologies in domain generalization and adaptation. Ultimately, this work aims to foster innovation and collaboration in the quest for more resilient machine learning models that can perform reliably across diverse real-world scenarios. A more up-to-date version of the papers is maintained at: https://github.com/jindongli-Ai/Survey_on_CLIP-Powered_Domain_Generalization_and_Adaptation.

Data-Efficient CLIP-Powered Dual-Branch Networks for Source-Free Unsupervised Domain Adaptation

Oct 21, 2024

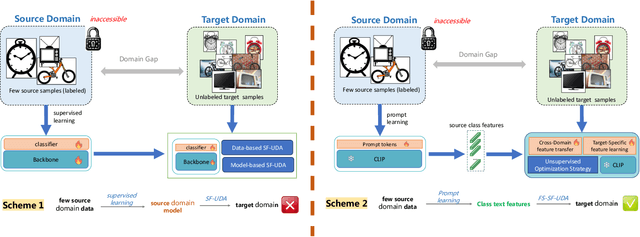

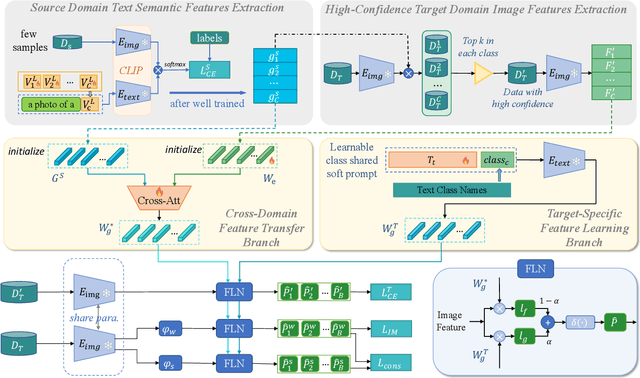

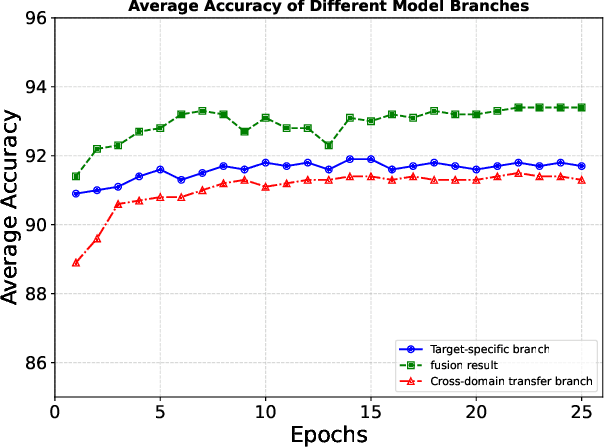

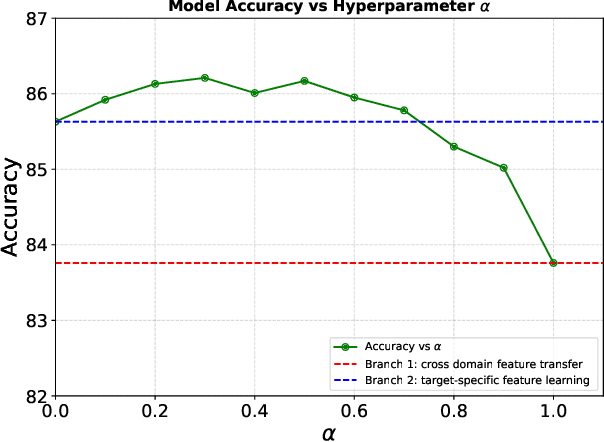

Abstract: Source-Free Unsupervised Domain Adaptation (SF-UDA) aims to transfer a model's performance from a labeled source domain to an unlabeled target domain without direct access to source samples, addressing data privacy issues. However, most existing SF-UDA approaches assume the availability of abundant source domain samples, which is often impractical due to the high cost of data annotation. In this paper, we explore a more challenging scenario where direct access to source domain samples is restricted, and the source domain contains only a few samples. To tackle the dual challenges of limited source data and privacy concerns, we introduce a data-efficient, CLIP-powered dual-branch network (CDBN for short). We design a cross-modal dual-branch network that integrates source domain class semantics into the unsupervised fine-tuning of the target domain. It preserves the class information from the source domain while enhancing the model's generalization to the target domain. Additionally, we propose an unsupervised optimization strategy driven by accurate classification and diversity, which aims to retain the classification capability learned from the source domain while producing more confident and diverse predictions in the target domain. Extensive experiments across 31 transfer tasks on 7 public datasets demonstrate that our approach achieves state-of-the-art performance compared to existing methods.
