Yiqi Tong

Proto-EVFL: Enhanced Vertical Federated Learning via Dual Prototype with Extremely Unaligned Data

Jul 30, 2025

Abstract: In vertical federated learning (VFL), multiple enterprises address aligned sample scarcity by leveraging massive locally unaligned samples to facilitate collaborative learning. However, unaligned samples across different parties in VFL can be extremely class-imbalanced, leading to insufficient feature representation and a limited model prediction space. Specifically, class imbalance takes two forms, intra-party and inter-party, which cause local model bias and feature contribution inconsistency, respectively. To address these challenges, we propose Proto-EVFL, an enhanced VFL framework via dual prototypes. We first introduce class prototypes for each party to learn relationships between classes in the latent space, allowing the active party to predict unseen classes. We further design a probabilistic dual prototype learning scheme that dynamically selects unaligned samples by conditional optimal transport cost with class prior probability. Moreover, a mixed prior guided module guides this selection process by combining local and global class prior probabilities. Finally, we adopt an adaptive gated feature aggregation strategy to mitigate feature contribution inconsistency by dynamically weighting and aggregating local features across different parties. We prove that Proto-EVFL, as the first bi-level optimization framework in VFL, has a convergence rate of 1/\sqrt{T}. Extensive experiments on various datasets validate the superiority of Proto-EVFL. Even in a zero-shot scenario with one unseen class, it outperforms baselines by at least 6.97%.
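
The abstract does not spell out the form of the gate, so the following is only a minimal sketch of what gated feature aggregation across parties could look like, not the Proto-EVFL implementation. It assumes every party emits a local feature vector of the same dimension, that a single hypothetical weight vector `gate_weights` scores each party, and that a softmax over those scores yields the per-party weights. The names `gated_aggregate` and `gate_weights` are illustrative only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_aggregate(party_features, gate_weights):
    """Weight each party's feature vector by a softmax gate and sum them.

    party_features: list of equal-length feature vectors, one per party.
    gate_weights:   hypothetical scoring vector (an assumption of this sketch).
    """
    # One scalar score per party: dot product of the gate vector and the features.
    scores = [
        sum(w * x for w, x in zip(gate_weights, feats))
        for feats in party_features
    ]
    gates = softmax(scores)
    dim = len(party_features[0])
    # Gate-weighted sum of the parties' features, coordinate by coordinate.
    fused = [
        sum(g * feats[i] for g, feats in zip(gates, party_features))
        for i in range(dim)
    ]
    return gates, fused


# Three parties with 2-dimensional local features.
party_features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
# With an all-zero gate vector every party scores the same, so the gates are uniform.
gate_weights = [0.0, 0.0]
gates, fused = gated_aggregate(party_features, gate_weights)
```

In a trained system the gate parameters would be learned, so parties whose features are more informative for a given sample would receive larger weights than the uniform ones in this toy call.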

H2Tune: Federated Foundation Model Fine-Tuning with Hybrid Heterogeneity

Jul 30, 2025

Abstract: Unlike the settings addressed by existing federated fine-tuning (FFT) methods for foundation models, hybrid heterogeneous federated fine-tuning (HHFFT) is an under-explored scenario in which clients exhibit double heterogeneity in model architectures and downstream tasks. This hybrid heterogeneity introduces two significant challenges: 1) heterogeneous matrix aggregation, where clients adopt different large-scale foundation models based on their task requirements and resource limitations, leading to dimensional mismatches during LoRA parameter aggregation; and 2) multi-task knowledge interference, where local shared parameters, trained with both task-shared and task-specific knowledge, cannot ensure that only task-shared knowledge is transferred between clients. To address these challenges, we propose H2Tune, a federated foundation model fine-tuning framework for hybrid heterogeneity. H2Tune consists of three key components: (i) sparsified triple matrix decomposition, which aligns hidden dimensions across clients by constructing rank-consistent middle matrices, with adaptive sparsification based on client resources; (ii) relation-guided matrix layer alignment, which handles heterogeneous layer structures and representation capabilities; and (iii) an alternating task-knowledge disentanglement mechanism, which decouples shared and specific knowledge in local model parameters through alternating optimization. Theoretical analysis proves a convergence rate of O(1/\sqrt{T}). Extensive experiments show that our method achieves up to 15.4% accuracy improvement compared to state-of-the-art baselines. Our code is available at https://anonymous.4open.science/r/H2Tune-1407.
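
To illustrate why a rank-consistent middle matrix sidesteps the dimensional mismatch, here is a small sketch under stated assumptions; it is not the H2Tune code. It assumes each client factors its low-rank update as `B @ M @ A`, where `B` and `A` have client-specific outer dimensions, `M` is an `r x r` matrix with the same rank `r` on every client, and the server simply averages the `M` matrices. Sparsification and layer alignment are omitted, and all names and values are hypothetical.

```python
def matmul(a, b):
    """Plain-Python matrix product of two list-of-lists matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def average_matrices(mats):
    """Element-wise mean of equally shaped matrices."""
    n = len(mats)
    rows, cols = len(mats[0]), len(mats[0][0])
    return [
        [sum(m[i][j] for m in mats) / n for j in range(cols)]
        for i in range(rows)
    ]


# Client A: update of shape 3 x 4, factored as (3 x 2) @ (2 x 2) @ (2 x 4).
client_a = {
    "B": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    "M": [[1.0, 0.0], [0.0, 1.0]],
    "A": [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]],
}
# Client B: a different model, update of shape 5 x 6, but the same rank r = 2.
client_b = {
    "B": [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]],
    "M": [[3.0, 2.0], [0.0, 1.0]],
    "A": [[1.0, 0.0, 0.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0, 0.0, 0.0]],
}

# Only the r x r middle matrices are aggregated, so the shapes always agree.
shared_m = average_matrices([client_a["M"], client_b["M"]])

# Each client rebuilds its own full-size update from the shared middle matrix.
delta_a = matmul(matmul(client_a["B"], shared_m), client_a["A"])
delta_b = matmul(matmul(client_b["B"], shared_m), client_b["A"])
```

The outer factors never leave the client in this sketch, which is why the server can average updates from models with different hidden sizes.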

A Comprehensive Survey of Federated Transfer Learning: Challenges, Methods and Applications

Mar 03, 2024

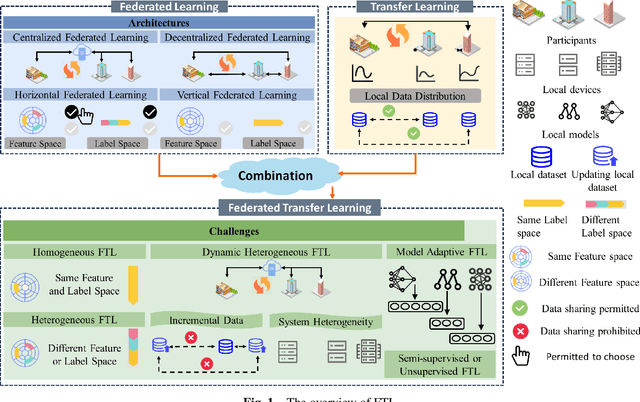

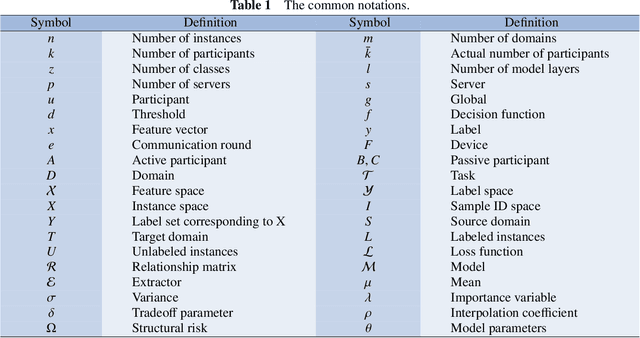

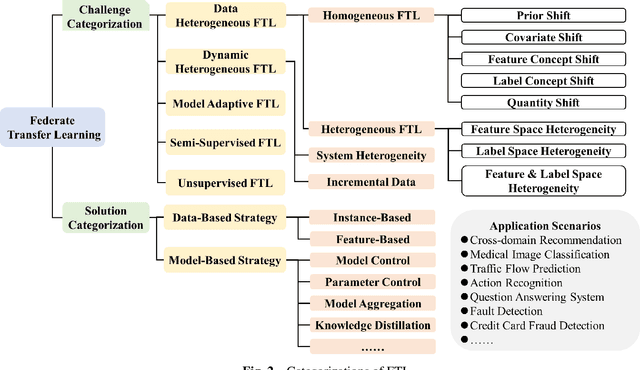

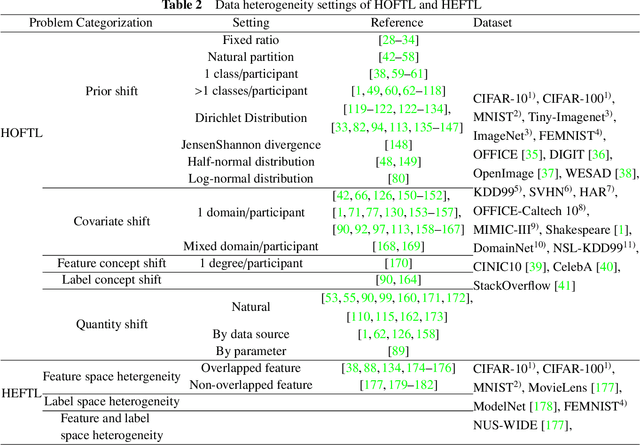

Abstract: Federated learning (FL) is a novel distributed machine learning paradigm that enables participants to collaboratively train a centralized model with privacy preservation by eliminating the requirement of data sharing. In practice, FL often involves multiple participants and requires a third party to aggregate global information to guide the update of the target participant. As a result, many FL methods do not work well because the training and test data of each participant may not be sampled from the same feature space or the same underlying distribution. Meanwhile, differences in local devices (system heterogeneity), the continuous influx of online data (incremental data), and labeled data scarcity may further degrade the performance of these methods. To solve these problems, federated transfer learning (FTL), which integrates transfer learning (TL) into FL, has attracted the attention of numerous researchers. However, since FL enables continuous sharing of knowledge among participants in each communication round while not allowing local data to be accessed by other participants, FTL faces many unique challenges that are not present in TL. In this survey, we focus on categorizing and reviewing the current progress on federated transfer learning and on outlining corresponding solutions and applications. Furthermore, the common settings of FTL scenarios, available datasets, and significant related research are summarized.
