Ying Ni

Towards Quantum Tensor Decomposition in Biomedical Applications

Feb 19, 2025

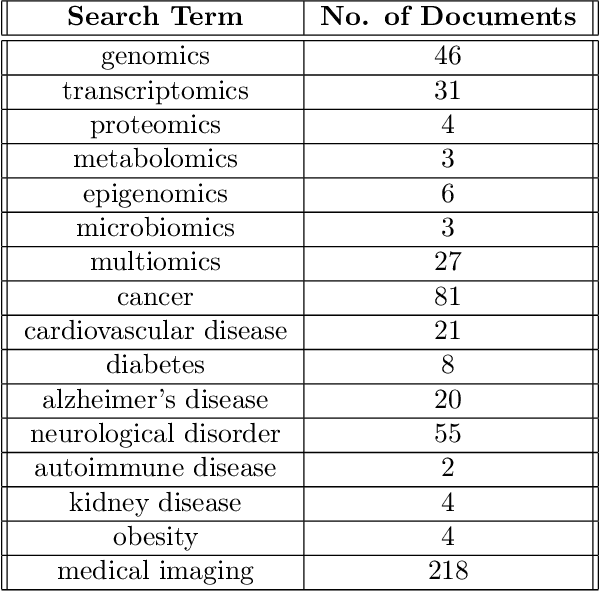

Abstract: Tensor decomposition has emerged as a powerful framework for feature extraction in multi-modal biomedical data. In this review, we present a comprehensive analysis of tensor decomposition methods, including Tucker, CANDECOMP/PARAFAC, and spiked tensor decomposition, and their diverse applications across biomedical domains such as imaging, multi-omics, and spatial transcriptomics. To systematically investigate the literature, we applied a topic modeling-based approach that identifies and groups distinct thematic sub-areas in biomedicine where tensor decomposition has been used, thereby revealing key trends and research directions. We evaluated two challenges that often hinder the extraction of meaningful features from increasingly large and complex datasets: the scalability of latent spaces and the determination of the optimal tensor rank. Additionally, we discuss recent advances in quantum algorithms for tensor decomposition, exploring how quantum computing can be leveraged to address these challenges. Our study includes a preliminary resource estimation analysis for quantum computing platforms and examines the feasibility of implementing quantum-enhanced tensor decomposition methods on near-term quantum devices. Collectively, this review not only synthesizes current applications and challenges of tensor decomposition in biomedical analyses but also outlines promising quantum computing strategies to enhance its impact on deriving actionable insights from complex biomedical data.
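
For orientation, the two classical decompositions named in the abstract can be written in their standard textbook form for a third-order tensor. This is a sketch of the general definitions, not of the review's specific formulation; the symbols $\mathcal{X}$, $\mathcal{G}$, $A$, $B$, $C$, $\lambda_r$, and the rank $R$ are notation assumed here for illustration.

$$
\text{CANDECOMP/PARAFAC:}\quad \mathcal{X} \approx \sum_{r=1}^{R} \lambda_r \, a_r \circ b_r \circ c_r
$$

$$
\text{Tucker:}\quad \mathcal{X} \approx \mathcal{G} \times_1 A \times_2 B \times_3 C
$$

Here $\circ$ denotes the vector outer product, $\times_n$ the mode-$n$ product, $a_r$, $b_r$, $c_r$ the $r$-th columns of the factor matrices $A$, $B$, $C$, and $\mathcal{G}$ the core tensor. The rank-selection challenge mentioned in the abstract corresponds to choosing $R$ (or the dimensions of $\mathcal{G}$).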

Towards Socially Responsive Autonomous Vehicles: A Reinforcement Learning Framework with Driving Priors and Coordination Awareness

Sep 18, 2023

Abstract: The advent of autonomous vehicles (AVs) alongside human-driven vehicles (HVs) has ushered in an era of mixed traffic flow, presenting a significant challenge: the intricate interaction between these entities within complex driving environments. AVs are expected to exhibit human-like driving behavior so that they integrate seamlessly into human-dominated traffic systems. To address this issue, we propose a reinforcement learning framework that considers driving priors and Social Coordination Awareness (SCA) to optimize the behavior of AVs. The framework integrates a driving prior learning (DPL) model, based on a variational autoencoder, that infers driving priors from human drivers' trajectories. A policy network based on a multi-head attention mechanism is designed to capture the interactive dependencies between AVs and other traffic participants and thereby improve decision-making quality. We also explore introducing SCA into the autonomous driving decision-making system and using Coordination Tendency (CT) to quantify the willingness of AVs to coordinate with the traffic system. Simulation results show that the proposed framework not only improves the decision-making quality of AVs but also motivates them to produce social behaviors, with potential benefits for the safety and traffic efficiency of the entire transportation system.

Teaching Autonomous Vehicles to Express Interaction Intent during Unprotected Left Turns: A Human-Driving-Prior-Based Trajectory Planning Approach

Jul 29, 2023

Abstract: With the integration of Autonomous Vehicles (AVs) into our transportation systems, their harmonious coexistence with Human-driven Vehicles (HVs) in mixed traffic settings becomes a crucial focus of research. A vital component of this coexistence is the capability of AVs to mimic human-like interaction intentions within the traffic environment. To address this, we propose a novel framework for unprotected left-turn trajectory planning for AVs, aiming to replicate human driving patterns and facilitate effective communication of social intent. Our framework comprises three stages: trajectory generation, evaluation, and selection. In the generation stage, we use real human-driving trajectory data to define constraints for an anticipated trajectory space, generating candidate motion trajectories that embody intent expression. The evaluation stage employs maximum entropy inverse reinforcement learning (ME-IRL) to assess human trajectory preferences, considering factors such as traffic efficiency, driving comfort, and interactive safety. In the selection stage, we apply a Boltzmann distribution-based method to assign rewards and probabilities to candidate trajectories, thereby facilitating human-like decision-making. We validate the proposed framework on a real trajectory dataset and compare it against several baseline methods. The results demonstrate the superior performance of our framework in terms of human-likeness, intent expression capability, and computational efficiency. Owing to length limits, more details of this research can be found at https://shorturl.at/jqu35
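
The selection stage described above can be illustrated with a minimal sketch of Boltzmann (softmax) weighting over candidate rewards. This is not the authors' implementation: the function name `boltzmann_probabilities`, the `temperature` parameter, and the reward values are all hypothetical and chosen only for illustration.

```python
import math


def boltzmann_probabilities(rewards, temperature=1.0):
    """Map candidate rewards to probabilities proportional to exp(reward / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Subtracting the maximum reward keeps exp() numerically stable
    # and does not change the resulting probabilities.
    max_reward = max(rewards)
    weights = [math.exp((r - max_reward) / temperature) for r in rewards]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical rewards for three candidate trajectories (illustrative values only).
candidate_rewards = [1.0, 2.0, 0.5]
probabilities = boltzmann_probabilities(candidate_rewards)

# Index of the candidate with the highest selection probability.
most_likely = max(range(len(probabilities)), key=probabilities.__getitem__)
```

A lower `temperature` concentrates probability on the highest-reward candidate, while a higher one spreads it more evenly across candidates.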
