Yinan Zou

Can Multimodal Large Language Models Understand Spatial Relations?

May 25, 2025

Abstract: Spatial relation reasoning is a crucial task for multimodal large language models (MLLMs) to understand the objective world. However, current benchmarks have issues such as relying on bounding boxes, ignoring perspective substitutions, or allowing questions to be answered from the model's prior knowledge alone, without image understanding. To address these issues, we introduce SpatialMQA, a human-annotated spatial relation reasoning benchmark based on COCO2017, which encourages MLLMs to focus on understanding images of the objective world. To ensure data quality, we design a carefully tailored annotation procedure, resulting in SpatialMQA consisting of 5,392 samples. On this benchmark, we evaluate a series of closed- and open-source MLLMs; the results indicate that the current state-of-the-art MLLM achieves only 48.14% accuracy, far below the human-level accuracy of 98.40%. Extensive experimental analyses are also conducted, suggesting future research directions. The benchmark and code are available at https://github.com/ziyan-xiaoyu/SpatialMQA.git.

Communication-Efficient Cooperative Localization: A Graph Neural Network Approach

Apr 10, 2025

Abstract: Cooperative localization leverages noisy inter-node distance measurements and exchanged wireless messages to estimate node positions in a wireless network. In communication-constrained environments, however, transmitting large messages becomes problematic. In this paper, we propose an approach for communication-efficient cooperative localization that addresses two main challenges. First, cooperative localization often needs to be performed over wireless networks with loopy graph topologies. Second, the algorithm must achieve low localization error while requiring much lower communication overhead. Existing methods fall short of addressing these two challenges concurrently. To address both, we propose a vector quantized message passing neural network (VQ-MPNN) for cooperative localization. Through end-to-end neural network training, VQ-MPNN enables the co-design of node localization and message compression. Specifically, VQ-MPNN treats prior node positions and distance measurements as node and edge features, respectively, which a graph neural network encodes as node and edge states. To find an efficient representation for the node state, we construct a vector quantized codebook for all node states, so that instead of sending long messages, each node only needs to transmit a codeword index. Numerical evaluations demonstrate that the proposed VQ-MPNN approach delivers localization errors similar to those of existing approaches while reducing the overall communication overhead by an order of magnitude.
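The saving from sending a codeword index instead of a full node state can be illustrated with a minimal nearest-neighbour vector quantizer. This is a sketch under stated assumptions, not VQ-MPNN itself: the codebook here is random and fixed (in VQ-MPNN it is learned end-to-end), the state dimension, codebook size and 32-bit float baseline are hypothetical, and `quantize`/`dequantize` are illustrative names. Training through the non-differentiable `argmin` (for example with a straight-through estimator, as in VQ-VAE) is not shown.

```python
import numpy as np

def quantize(states: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Sender side: index of the nearest codeword (squared Euclidean distance) for each row."""
    d2 = ((states[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)  # (nodes, codewords)
    return d2.argmin(axis=1)

def dequantize(indices: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Receiver side: recover the quantized states from the shared codebook."""
    return codebook[indices]

rng = np.random.default_rng(0)
num_nodes, state_dim, codebook_size = 20, 64, 256            # hypothetical sizes
codebook = rng.standard_normal((codebook_size, state_dim))   # hypothetical; learned in VQ-MPNN
true_idx = rng.integers(0, codebook_size, num_nodes)
states = codebook[true_idx] + 0.01 * rng.standard_normal((num_nodes, state_dim))

idx = quantize(states, codebook)        # what each node actually transmits
recovered = dequantize(idx, codebook)   # what its neighbours reconstruct

bits_full = state_dim * 32                          # one float32 node state
bits_index = int(np.ceil(np.log2(codebook_size)))   # one codeword index
print(bits_full, bits_index)  # 2048 8
```

Because both ends share the codebook, the payload per message shrinks from the size of the state vector to $\lceil \log_2 |\mathcal{C}| \rceil$ bits, at the cost of the quantization error between a state and its codeword.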

Proximal Gradient-Based Unfolding for Massive Random Access in IoT Networks

Dec 04, 2022

Abstract: Grant-free random access is an effective technology for enabling low-overhead and low-latency massive access, where joint activity detection and channel estimation (JADCE) is a critical issue. Although existing compressive sensing algorithms can be applied to JADCE, they usually fail to achieve the following properties simultaneously: effective sparsity induction, fast convergence, robustness to different pilot sequences, and adaptivity to time-varying networks. To this end, we propose an unfolding framework for JADCE based on the proximal gradient method. Specifically, we formulate the JADCE problem as a group-row-sparse matrix recovery problem and leverage a minimax concave penalty rather than the widely used $\ell_1$-norm to induce sparsity. We then develop a proximal gradient-based unfolding neural network that parameterizes the algorithmic iterations. To improve the convergence rate, we incorporate momentum into the unfolding neural network and prove the accelerated convergence theoretically. Based on the convergence analysis, we further develop an adaptive-tuning algorithm that adjusts its parameters to different signal-to-noise ratio settings. Simulations show that the proposed unfolding neural network achieves better recovery performance, convergence rate, and adaptivity than current baselines.
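The iteration this framework unfolds can be sketched as a plain accelerated proximal-gradient loop in which each pass plays the role of one layer. This is a minimal illustration under stated assumptions, not the authors' network: it assumes the signal model $\mathbf{Y} = \mathbf{A}\mathbf{X} + \mathbf{N}$ with pilot matrix $\mathbf{A}$ and a row-sparse channel matrix $\mathbf{X}$ whose nonzero rows mark active devices, uses real-valued data instead of complex channels, and fixes by hand the step size $t = 1/\|\mathbf{A}\|_2^2$, a Nesterov-style momentum weight $\beta_k = (k-1)/(k+2)$, and the MCP parameters $\lambda, \gamma$. In an unfolded network these per-layer quantities would be trainable. All names and sizes are hypothetical.

```python
import numpy as np

def row_mcp_prox(Z, t, lam, gamma):
    """Row-wise (group) proximal operator of t * MCP(||row||_2; lam, gamma). Requires gamma > t."""
    norms = np.linalg.norm(Z, axis=1, keepdims=True)
    shrunk = np.maximum(norms - t * lam, 0.0) / (1.0 - t / gamma)   # firm-threshold branch
    scale = np.where(norms > gamma * lam, 1.0, shrunk / np.maximum(norms, 1e-12))
    return scale * Z  # rows with norm <= t*lam become zero; rows above gamma*lam pass unchanged

def unfolded_apg(Y, A, num_layers, lam, gamma):
    """Forward pass of an unfolded accelerated proximal-gradient network.
    Each loop pass is one 'layer'; here t, lam and beta are fixed, not learned."""
    t = 1.0 / np.linalg.norm(A, 2) ** 2              # 1 / Lipschitz constant of the gradient
    X = X_prev = np.zeros((A.shape[1], Y.shape[1]))
    for k in range(1, num_layers + 1):
        beta = (k - 1) / (k + 2)                     # Nesterov-style momentum weight (assumed schedule)
        Z = X + beta * (X - X_prev)                  # extrapolation (momentum) step
        grad = A.T @ (A @ Z - Y)                     # gradient of 0.5 * ||Y - A Z||_F^2
        X_prev, X = X, row_mcp_prox(Z - t * grad, t, lam, gamma)
    return X

# Toy real-valued JADCE instance (assumption): 100 devices, 5 active,
# length-40 pilots, 4 receive antennas.
rng = np.random.default_rng(1)
N, K, L, M = 100, 5, 40, 4
A = rng.standard_normal((L, N)) / np.sqrt(L)          # hypothetical Gaussian pilot matrix
active = np.sort(rng.choice(N, K, replace=False))
X_true = np.zeros((N, M))
X_true[active] = rng.standard_normal((K, M))          # channels of the active devices
Y = A @ X_true + 0.01 * rng.standard_normal((L, M))

X_hat = unfolded_apg(Y, A, num_layers=500, lam=0.1, gamma=2.0)
detected = np.flatnonzero(np.linalg.norm(X_hat, axis=1) > 1e-6)   # estimated active set
rel_err = np.linalg.norm(X_hat - X_true) / np.linalg.norm(X_true)
```

With the noise well below the threshold, inactive rows end up exactly zero, so device activity is read off from the row support while the surviving rows are the channel estimates; unlike the $\ell_1$ (soft-threshold) case, rows with norm above $\gamma\lambda$ are not shrunk.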

GAN-Based Joint Activity Detection and Channel Estimation for Grant-Free Random Access

Apr 04, 2022

Abstract: Joint activity detection and channel estimation (JADCE) for grant-free random access is a critical issue that needs to be addressed to support massive connectivity in IoT networks. However, the existing model-free learning method can achieve only activity detection or channel estimation, but not both. In this paper, we propose a novel model-free learning method based on a generative adversarial network (GAN) to tackle the JADCE problem. We build the generator on the U-Net architecture rather than the standard GAN architecture, and use a pre-estimated value that contains the activity information as its input. By leveraging the properties of the pseudoinverse, the generator is refined with an affine projection and a skip connection to ensure that its output is consistent with the measurement. Moreover, we build a two-layer fully connected neural network to design the pilot matrix and reduce the impact of receiver noise. Simulation results show that the proposed method outperforms existing methods in high-SNR regimes, as both the data consistency projection and the pilot matrix optimization improve the learning ability.
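One plausible reading of the pseudoinverse-based refinement is an orthogonal projection of the generator output $\mathbf{G}$ onto the set of matrices consistent with noise-free measurements, $\mathbf{X} = \mathbf{G} + \mathbf{A}^{+}(\mathbf{Y} - \mathbf{A}\mathbf{G})$, where the $\mathbf{G}$ term acts as the skip connection. This sketch is an assumption, not the paper's exact layer: it takes a full-row-rank complex pilot matrix $\mathbf{A}$ (so that $\mathbf{A}\mathbf{A}^{+} = \mathbf{I}$), uses random data in place of a trained U-Net output, and `data_consistency` is a hypothetical name.

```python
import numpy as np

def data_consistency(G, A, Y):
    """Project G onto the affine set {X : A X = Y}.
    The '+ G' term is the skip connection; A^+ (Y - A G) is the correction."""
    A_pinv = np.linalg.pinv(A)
    return G + A_pinv @ (Y - A @ G)

rng = np.random.default_rng(0)
L, N, M = 20, 50, 4                      # hypothetical pilot length, devices, antennas
A = (rng.standard_normal((L, N)) + 1j * rng.standard_normal((L, N))) / np.sqrt(2 * L)
X_true = np.zeros((N, M), dtype=complex)
X_true[:5] = rng.standard_normal((5, M)) + 1j * rng.standard_normal((5, M))  # 5 active devices
Y = A @ X_true                           # noise-free measurements (assumption)

G = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))  # stand-in for a generator output
X_dc = data_consistency(G, A, Y)
print(np.allclose(A @ X_dc, Y))  # True
```

Because the correction lies in the row space of $\mathbf{A}$, the projected output is never farther than $\mathbf{G}$ from any matrix that satisfies the measurements, including the true channel matrix, and applying the layer twice changes nothing.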

Knowledge-Guided Learning for Transceiver Design in Over-the-Air Federated Learning

Mar 28, 2022

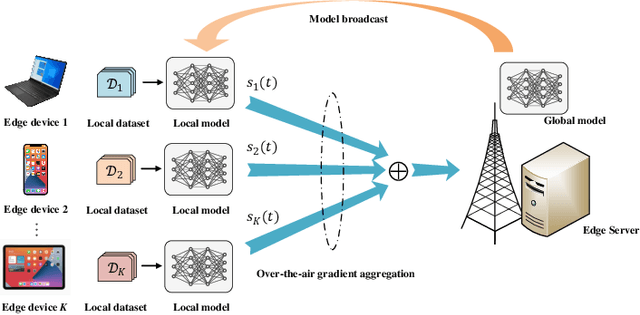

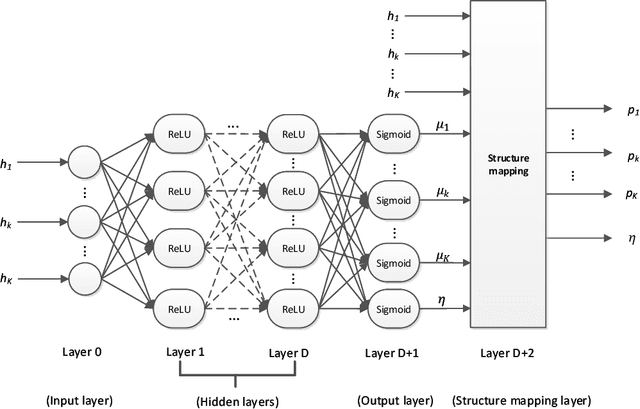

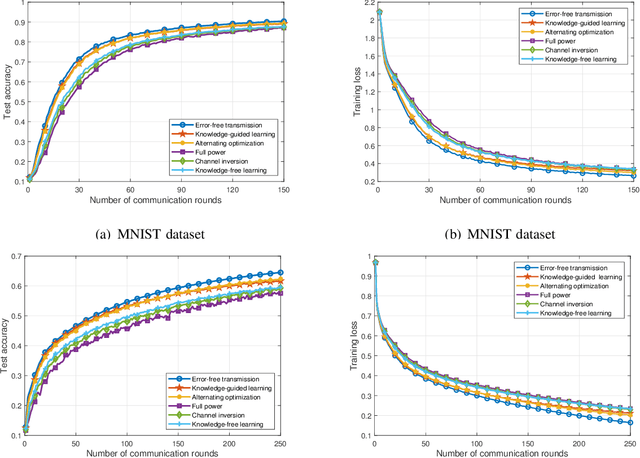

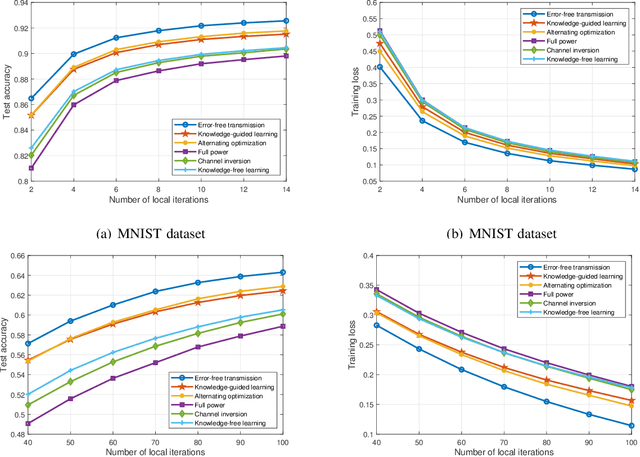

Abstract: In this paper, we consider communication-efficient over-the-air federated learning (FL), where multiple edge devices with non-independent and identically distributed (non-IID) datasets perform multiple local iterations in each communication round and then concurrently transmit their updated gradients to an edge server over the same radio channel for global model aggregation using over-the-air computation (AirComp). We derive an upper bound on the time-average norm of the gradients to characterize the convergence of AirComp-assisted FL, which reveals how the model aggregation errors accumulated over all communication rounds affect convergence. Based on the convergence analysis, we formulate an optimization problem that minimizes this upper bound to enhance learning performance, and propose an alternating optimization algorithm for the optimal transceiver design of AirComp-assisted FL. As the alternating optimization algorithm suffers from high computational complexity, we further develop a knowledge-guided learning algorithm that exploits the structure of the analytic expression of the optimal transmit power to achieve computation-efficient transceiver design. Simulation results demonstrate that the proposed knowledge-guided learning algorithm achieves performance comparable to that of the alternating optimization algorithm, but with much lower computational complexity. Moreover, both proposed algorithms outperform the baseline methods in terms of convergence speed and test accuracy.
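A communication round of AirComp-assisted FL can be illustrated with a deliberately simplified sketch: single-antenna devices and server, Rayleigh scalar channels redrawn every round, least-squares local losses, devices transmitting their accumulated local model updates, and channel-inversion precoding $b_k = \sqrt{\eta}\,h_k^{*}/|h_k|^2$ with $\eta = P\min_k |h_k|^2$. Channel inversion is a common baseline assumed here for illustration, not the optimized transceiver or the knowledge-guided learning algorithm of the paper; all names and parameter values are hypothetical, and the per-device feature shift is only a crude way to make the local datasets differ.

```python
import numpy as np

def local_update(w, X, y, lr, steps):
    """Run `steps` full-batch gradient steps on 0.5 * mean((X w - y)^2); return the model change."""
    w_local = w.copy()
    for _ in range(steps):
        w_local -= lr * X.T @ (X @ w_local - y) / len(y)
    return w_local - w

def aircomp_aggregate(updates, h, P, noise_std, rng):
    """Estimate the mean of the rows of `updates` from one noisy over-the-air superposition."""
    K, d = updates.shape
    eta = P * np.min(np.abs(h) ** 2)                  # the weakest channel sets the scaling
    b = np.sqrt(eta) * np.conj(h) / np.abs(h) ** 2    # channel inversion: h_k b_k = sqrt(eta), |b_k|^2 <= P
    noise = noise_std * (rng.standard_normal(d) + 1j * rng.standard_normal(d)) / np.sqrt(2)
    y = (h * b) @ updates + noise                     # all devices transmit simultaneously
    return np.real(y) / (K * np.sqrt(eta))

rng = np.random.default_rng(0)
K, d, n = 10, 5, 50                                    # hypothetical devices, model size, samples
w_star = rng.standard_normal(d)
data = []
for k in range(K):
    X = rng.standard_normal((n, d)) + 0.1 * k         # hypothetical per-device feature shift
    data.append((X, X @ w_star))

w = np.zeros(d)
for _ in range(50):                                    # communication rounds
    h = (rng.standard_normal(K) + 1j * rng.standard_normal(K)) / np.sqrt(2)  # Rayleigh fading
    updates = np.stack([local_update(w, X, y, lr=0.05, steps=5) for X, y in data])
    w = w + aircomp_aggregate(updates, h, P=1.0, noise_std=1e-4, rng=rng)
```

Because $\eta$ is set by the weakest channel, a single deep fade amplifies the receiver noise in that round's aggregated update; this per-round aggregation error is the kind of term that accumulates across rounds in the convergence analysis described above.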

Learning Proximal Operator Methods for Massive Connectivity in IoT Networks

Dec 06, 2021

Abstract: Grant-free random access has the potential to support massive connectivity in Internet of Things (IoT) networks, where joint activity detection and channel estimation (JADCE) is a key issue that needs to be tackled. Existing methods for JADCE usually suffer from one of the following limitations: high computational complexity, ineffective sparsity induction, or an inability to handle complex-valued matrix estimation. To mitigate all of these limitations, in this paper we develop an effective unfolding neural network framework built upon the proximal operator method to tackle the JADCE problem in IoT networks where the base station is equipped with multiple antennas. Specifically, the JADCE problem is formulated as a group-sparse matrix estimation problem regularized by the non-convex minimax concave penalty (MCP). This problem can be solved iteratively with the proximal operator method, based on which we develop an unfolding neural network structure by parameterizing the algorithmic iterations. By further exploiting the coupling structure among the training parameters as well as the analytical computation, we develop two additional unfolding structures to reduce the training complexity. We prove that the proposed algorithm achieves a linear convergence rate. Results show that the three proposed unfolding structures not only converge faster but also achieve higher estimation accuracy than the baseline methods.
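The core operation of each iteration can be written out using the standard MCP definition; the notation below ($\mathbf{Y}$ received pilots, $\mathbf{A}$ pilot matrix, $\mathbf{X}$ channel matrix with one row per device, step size $t$) is assumed for illustration and may differ from the paper's parameterization. For a row $\mathbf{z} \in \mathbb{C}^{M}$ and $\gamma > t$, the proximal operator of $t\rho_{\lambda,\gamma}(\|\cdot\|_2)$ only rescales $\mathbf{z}$ along its own direction, so it applies unchanged to complex-valued rows, and rows with small norm are set exactly to zero, which is how inactive devices are detected.

```latex
\[
\rho_{\lambda,\gamma}(u) =
\begin{cases}
\lambda u - \dfrac{u^{2}}{2\gamma}, & 0 \le u \le \gamma\lambda,\\[4pt]
\dfrac{\gamma\lambda^{2}}{2}, & u > \gamma\lambda,
\end{cases}
\]
\[
\operatorname{prox}_{t\rho}(\mathbf{z}) =
\begin{cases}
\mathbf{0}, & \|\mathbf{z}\|_2 \le t\lambda,\\[4pt]
\dfrac{\|\mathbf{z}\|_2 - t\lambda}{1 - t/\gamma}\,\dfrac{\mathbf{z}}{\|\mathbf{z}\|_2}, & t\lambda < \|\mathbf{z}\|_2 \le \gamma\lambda,\\[4pt]
\mathbf{z}, & \|\mathbf{z}\|_2 > \gamma\lambda,
\end{cases}
\]
\[
\mathbf{X}^{(k+1)} = \operatorname{prox}_{t\rho}\!\Big(\mathbf{X}^{(k)} - t\,\mathbf{A}^{\mathsf H}\big(\mathbf{A}\mathbf{X}^{(k)} - \mathbf{Y}\big)\Big)
\quad \text{(applied row by row).}
\]
```

The middle branch joins its neighbours continuously: at $\|\mathbf{z}\|_2 = \gamma\lambda$ it gives $(\gamma\lambda - t\lambda)/(1 - t/\gamma) = \gamma\lambda$. In a typical unfolding, $t$ and $\lambda$ (and possibly the matrix multiplying the residual) become learnable per layer.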

Optimal Receive Beamforming for Over-the-Air Computation

May 11, 2021

Abstract: In this paper, we consider fast wireless data aggregation via over-the-air computation (AirComp) in Internet of Things (IoT) networks, where an access point (AP) with multiple antennas aims to recover the arithmetic mean of sensory data from multiple IoT devices. To minimize the estimation distortion, we formulate a mean-squared-error (MSE) minimization problem that involves the joint optimization of the transmit scalars at the IoT devices as well as the denoising factor and the receive beamforming vector at the AP. To this end, we derive the transmit scalars and the denoising factor in closed form, resulting in a non-convex quadratically constrained quadratic programming (QCQP) problem in the receive beamforming vector. Unlike existing studies, which obtain only sub-optimal beamformers, we propose a branch and bound (BnB) algorithm to design the globally optimal receive beamformer. Extensive simulations demonstrate the superior MSE performance of the proposed algorithm. Moreover, the proposed BnB algorithm can serve as a benchmark for evaluating existing sub-optimal algorithms.
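How closed-form transmit scalars and denoising factor reduce the problem to one over the receive beamformer can be checked numerically under one common AirComp formulation (an assumption here; the paper's normalization may differ). Device $k$ sends $b_k s_k$ with unit-power data $s_k$ and $|b_k|^2 \le P$, the AP receives $\mathbf{y} = \sum_k \mathbf{h}_k b_k s_k + \mathbf{n}$ with $\mathbf{n} \sim \mathcal{CN}(\mathbf{0}, \sigma^2\mathbf{I})$, and estimates the sum as $\mathbf{m}^{\mathsf H}\mathbf{y}/\sqrt{\eta}$. Choosing $b_k = \sqrt{\eta}\,(\mathbf{m}^{\mathsf H}\mathbf{h}_k)^{*}/|\mathbf{m}^{\mathsf H}\mathbf{h}_k|^2$ and the largest feasible $\eta = P\min_k |\mathbf{m}^{\mathsf H}\mathbf{h}_k|^2$ leaves only noise, so $\mathrm{MSE}(\mathbf{m}) = \sigma^2\|\mathbf{m}\|^2 / (P\min_k |\mathbf{m}^{\mathsf H}\mathbf{h}_k|^2)$. Since this is invariant to scaling $\mathbf{m}$, minimizing it is equivalent to $\min_{\mathbf{m}} \|\mathbf{m}\|^2$ subject to $|\mathbf{m}^{\mathsf H}\mathbf{h}_k|^2 \ge 1$ for all $k$, a non-convex QCQP of the kind the BnB algorithm targets. The sketch estimates the sum; the arithmetic mean's MSE is this value divided by $K^2$. Function names and the heuristic beamformer are hypothetical, and no BnB search is implemented.

```python
import numpy as np

def aircomp_mse(m, H, P, sigma2):
    """Closed-form MSE of the sum estimate for receive beamformer m (N,) and channels H (N, K)."""
    gains = np.abs(m.conj() @ H) ** 2             # |m^H h_k|^2 for every device
    eta = P * gains.min()                          # denoising factor set by the weakest device
    return sigma2 * np.linalg.norm(m) ** 2 / eta

def simulate_mse(m, H, P, sigma2, trials, rng):
    """Monte Carlo check: zero-forcing transmit scalars, unit-power data, AWGN at the AP."""
    N, K = H.shape
    g = m.conj() @ H                                        # effective scalar gains m^H h_k
    eta = P * np.min(np.abs(g) ** 2)
    b = np.sqrt(eta) * g.conj() / np.abs(g) ** 2            # so that (m^H h_k) b_k = sqrt(eta)
    s = (rng.standard_normal((trials, K)) + 1j * rng.standard_normal((trials, K))) / np.sqrt(2)
    n = np.sqrt(sigma2 / 2) * (rng.standard_normal((trials, N)) + 1j * rng.standard_normal((trials, N)))
    y = (s * b) @ H.T + n                                   # y = sum_k h_k b_k s_k + n, per trial
    s_hat = (y @ m.conj()) / np.sqrt(eta)                   # m^H y / sqrt(eta)
    return np.mean(np.abs(s_hat - s.sum(axis=1)) ** 2)

rng = np.random.default_rng(0)
N, K, P, sigma2 = 4, 8, 1.0, 0.1                            # hypothetical system parameters
H = (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)
m = H.sum(axis=1)                                           # simple heuristic, not the BnB optimum
analytic = aircomp_mse(m, H, P, sigma2)
empirical = simulate_mse(m, H, P, sigma2, trials=200_000, rng=rng)
```

A global method such as BnB would search over $\mathbf{m}$ to minimize `aircomp_mse`; any heuristic beamformer, like the one above, gives an upper bound on the optimal MSE.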
